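I wrote this post because the code in the reference article (linked at the end) targets Python 2 and does not run on Python 3. I spent some time debugging it and recorded the process here.

The reference code is incompatible with Python 3 in two places: the way it uses lambda functions and the way it uses map().

My modified, working code and its output come first, followed by the debugging notes.

Source code: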

```python
# coding=utf-8

from functools import reduce  # for py3

class Perceptron(object):
    def __init__(self, input_num, activator):
        '''
        Initialize the perceptron: set the number of inputs and the activation function.
        The activation function has type double -> double.
        '''
        self.activator = activator
        # Initialize the weight vector to 0
        self.weights = [0.0 for _ in range(input_num)]
        # Initialize the bias to 0
        self.bias = 0.0
    def __str__(self):
        '''
        Print the learned weights and bias
        '''
        return 'weights\t:%s\nbias\t:%f\n' % (self.weights, self.bias)


    def predict(self, input_vec):
        '''
        Given an input vector, return the perceptron's output
        '''
        # Pair up input_vec [x1,x2,x3,...] and weights [w1,w2,w3,...]
        # into [(x1,w1),(x2,w2),(x3,w3),...],
        # then use map to compute [x1*w1, x2*w2, x3*w3]
        # and finally sum with reduce

        #list1 = list(self.weights)
        #print ("predict self.weights:", list1)


        return self.activator(
            reduce(lambda a, b: a + b,
                   list(map(lambda tp: tp[0] * tp[1],  # modified by HateMath
                            zip(input_vec, self.weights)))
                   , 0.0) + self.bias)
    def train(self, input_vecs, labels, iteration, rate):
        '''
        Training data: a list of vectors and the label for each vector, plus the number of rounds and the learning rate
        '''
        for i in range(iteration):
            self._one_iteration(input_vecs, labels, rate)

    def _one_iteration(self, input_vecs, labels, rate):
        '''
        One iteration: go through all the training data once
        '''
        # Pair inputs with outputs to get the sample list [(input_vec, label), ...]
        # Each training sample is (input_vec, label)
        samples = zip(input_vecs, labels)
        # For each sample, update the weights by the perceptron rule
        for (input_vec, label) in samples:
            # Compute the perceptron's output under the current weights
            output = self.predict(input_vec)
            # Update the weights
            self._update_weights(input_vec, output, label, rate)

    def _update_weights(self, input_vec, output, label, rate):
        '''
        Update the weights by the perceptron rule
        '''
        # Pair up input_vec [x1,x2,x3,...] and weights [w1,w2,w3,...]
        # into [(x1,w1),(x2,w2),(x3,w3),...],
        # then update the weights by the perceptron rule
        delta = label - output
        self.weights = list(map(lambda tp: tp[1] + rate * delta * tp[0], zip(input_vec, self.weights)))  # modified by HateMath

        # Update the bias
        self.bias += rate * delta

        print("_update_weights() -------------")
        print("label - output = delta:", label, output, delta)
        print("weights ", self.weights)
        print("bias", self.bias)


def f(x):
    '''
    Define the activation function f
    '''
    return 1 if x > 0 else 0

def get_training_dataset():
    '''
    Build the training data from the AND truth table
    '''
    # Build the training data
    # List of input vectors
    input_vecs = [[1,1], [0,0], [1,0], [0,1]]
    # List of expected outputs; the order must match the inputs
    # [1,1] -> 1, [0,0] -> 0, [1,0] -> 0, [0,1] -> 0
    labels = [1, 0, 0, 0]
    return input_vecs, labels

def train_and_perceptron():
    '''
    Train the perceptron on the AND truth table
    '''
    # Create a perceptron with 2 inputs (AND is a binary function) and activation function f
    p = Perceptron(2, f)
    # Train for 10 rounds with a learning rate of 0.1
    input_vecs, labels = get_training_dataset()
    p.train(input_vecs, labels, 10, 0.1)
    # Return the trained perceptron
    return p

if __name__ == '__main__':
    # Train the AND perceptron
    and_perception = train_and_perceptron()
    # Print the learned weights

    # Test
    print(and_perception)
    print('1 and 1 = %d' % and_perception.predict([1, 1]))
    print('0 and 0 = %d' % and_perception.predict([0, 0]))
    print('1 and 0 = %d' % and_perception.predict([1, 0]))
    print('0 and 1 = %d' % and_perception.predict([0, 1]))
```

Output:

```
======================== RESTART: F:\桌面\Perceptron.py ========================
_update_weights() -------------
label - output = delta: 1 0 1
weights [0.1, 0.1]
bias 0.1
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.1, 0.1]
bias 0.0
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.0, 0.1]
bias -0.1
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.0, 0.1]
bias -0.1
_update_weights() -------------
label - output = delta: 1 0 1
weights [0.1, 0.2]
bias 0.0
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias 0.0
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.0, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.0, 0.1]
bias -0.2
_update_weights() -------------
label - output = delta: 1 0 1
weights [0.1, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.1, 0.1]
bias -0.2
_update_weights() -------------
label - output = delta: 1 0 1
weights [0.2, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.2, 0.2]
bias -0.1
_update_weights() -------------
label - output = delta: 0 1 -1
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 1 1 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
_update_weights() -------------
label - output = delta: 0 0 0
weights [0.1, 0.2]
bias -0.2
weights :[0.1, 0.2]
bias    :-0.200000

1 and 1 = 1
0 and 0 = 0
1 and 0 = 0
0 and 1 = 0
```

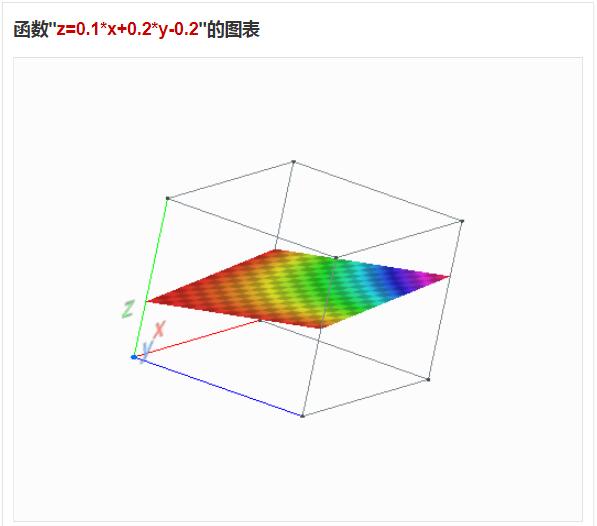

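As the output shows, training ends with weights [0.1, 0.2] and bias -0.2, so the perceptron model gives the formula f(x, y) = 0.1x + 0.2y - 0.2.

Plotted as z = f(x, y), this is a plane in three-dimensional space, and it classifies the four sample points: only the input (1, 1) gives z > 0.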
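As a quick check of the formula, the sketch below evaluates f(x, y) at the four AND inputs and applies the same step rule as the activation function in the listing. The helper names `decision_value` and `step` are made up for this illustration.

```python
# Weights and bias taken from the final line of the training output.
WEIGHTS = [0.1, 0.2]
BIAS = -0.2

def decision_value(x, y):
    """Hypothetical helper: the plane z = 0.1x + 0.2y - 0.2."""
    return WEIGHTS[0] * x + WEIGHTS[1] * y + BIAS

def step(z):
    """Same rule as the activation function f in the listing."""
    return 1 if z > 0 else 0

for x, y in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    z = decision_value(x, y)
    print("(%d, %d): z = %+.1f -> %d" % (x, y, z, step(z)))
```

Only (1, 1) lands on the positive side of the plane, which matches the AND truth table.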

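Debugging notes:

1. Using lambda expressions

On lines 38 and 70 of the listing (the two lines marked with the HateMath comment), the original Python 2.7 code does not run and reports `invalid syntax`. This is most likely because Python 3 removed tuple parameter unpacking (PEP 3113), so a lambda can no longer unpack a tuple in its parameter list the way Python 2.7 allowed.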
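To illustrate the difference, the sketch below compiles a Python 2 style lambda under Python 3 and then shows the indexed form used in the listing. The `py2_style` string is an assumed example of that older form, not a quotation from the reference article.

```python
# Assumed Python 2 style: the parameter list unpacks a tuple.
py2_style = "lambda (x, w): x * w"

try:
    compile(py2_style, "<example>", "eval")
    py2_style_compiles = True
except SyntaxError:
    # Python 3 rejects tuple parameters in a lambda.
    py2_style_compiles = False

# Python 3 compatible form: take one argument and index into it.
multiply = lambda tp: tp[0] * tp[1]

print(py2_style_compiles)
print(multiply((3, 0.5)))
```

Under Python 3 the first form is rejected at compile time, while the indexed form accepts each `(x, w)` pair that `zip` produces.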

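I changed the code and the syntax error went away, but the predictions were wrong. So I printed the return value of map() to debug.

2. Printing the object returned by map()

Following the code at https://www.cnblogs.com/lyy-totoro/p/7018597.html, convert it to a list first and then print it.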

```python
list1 = list(data)
print(list1)
```
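The conversion matters because printing a `map` object directly in Python 3 shows only its type and address, not its elements. A small sketch with made-up sample values (the contents of `data` here are purely illustrative):

```python
data = map(lambda tp: tp[0] * tp[1], zip([1, 0], [0.1, 0.2]))

# Printing the map object itself does not show the computed values.
print(data)  # something like <map object at 0x...>

# Converting to a list runs the lambda and collects the results.
list1 = list(data)
print(list1)

# Caution: the conversion consumed the iterator, so a second pass is empty.
leftover = list(data)
print(leftover)
```

One thing to watch when debugging this way: calling `list()` on a `map` object uses it up, so the debug print itself can change what later code sees.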

The printed values showed that training was clearly going wrong. Where was the problem?

3. The truth, partly revealed

https://segmentfault.com/a/1190000000322433

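Key sentence: in Python 3, if you do not wrap the map call in list, the lambda function is never executed at all.

So I added list, which produced the final code shown earlier, and it works correctly.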
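An alternative that sidesteps both the lambda syntax and the map behavior is to use comprehensions, which work the same way in Python 2 and Python 3. The functions below are a sketch of that approach, not the reference article's code; `weighted_sum` and `updated_weights` are names invented here.

```python
def weighted_sum(input_vec, weights, bias):
    """Dot product plus bias, written with a generator expression."""
    return sum(x * w for x, w in zip(input_vec, weights)) + bias

def updated_weights(input_vec, weights, delta, rate):
    """Perceptron rule w <- w + rate * delta * x, returned as a new list."""
    return [w + rate * delta * x for x, w in zip(input_vec, weights)]

print(weighted_sum([1, 1], [0.0, 0.0], 0.0))
print(updated_weights([1, 1], [0.0, 0.0], 1, 0.1))
```

A list comprehension always builds a real list, so there is no lazy object to forget to convert.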

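I have not verified the claim that "the lambda function is never executed at all", though, which is why I call the truth only partly revealed.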
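The claim can be checked with a short experiment. In Python 3, `map` returns a lazy iterator, so the mapped function runs only when something iterates over the result. The sketch below uses a hypothetical `traced_product` function in place of the lambda so that each call can be counted.

```python
calls = []

def traced_product(tp):
    """Hypothetical stand-in for the lambda; records every call."""
    calls.append(tp)
    return tp[0] * tp[1]

lazy = map(traced_product, zip([1, 1], [0.1, 0.2]))
calls_before = len(calls)   # nothing has run yet

first_pass = list(lazy)     # iterating triggers the calls
calls_after = len(calls)

second_pass = list(lazy)    # the iterator is now exhausted

print(calls_before, calls_after)
print(first_pass, second_pass)
```

So the function does run, but only once the result is consumed, and only for a single pass. That suggests one plausible reason the predictions went wrong without `list`: if `self.weights` held a `map` object, the first `predict` call would use it up and later calls would see no weights. This is an inference from the behavior above, not something confirmed during the original debugging.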

Original article:

零基础入门深度学习(1) - 感知器 (Deep Learning from Scratch (1): The Perceptron)

https://www.zybuluo.com/hanbingtao/note/433855