对流行Hadoop做了一些最基本的了解,暂时没太大感觉,恩先记点笔记吧. = =

Hadoop 基本命令及环境安装

一、下载虚拟机镜像

目前比较流行的有以下三个:

(CHD) http://www.cloudera.com

(HDP) http://hortonworks.com/

(MapR) http://www.mapr.com

本文使用HDP的沙盘

下载地址 http://hortonworks.com/products/hortonworks-sandbox/#install

我使用的是 Hyper-V 的镜像 , 配置可以查看下载地址旁边的文档

二、使用HDP沙盘

- 显示指定路径的文件和目录

注意这里显示的hdfs的文件目录不是实际机器中的文件目录hadoop fs -ls /

- 建立一个目录并下载数据

mkdir /home/bihell wget http://www.grouplens.org/system/files/ml-100k.zip unzip ml-100k.zip

- 在Hadoop中建立目录

hadoop fs -mkdir /bihell/ hadoop fs -mkdir /bihell/movies hadoop fs -mkdir /bihell/userinfo

- Hadoop文件操作

hadoop支持两个文件系统命令

fs put 命令可以把文件传送到hadoop的文件系统,而fs get 命令可以从hadoop中获取文件hadoop fs -put u.item /bihell/movies hadoop fs -put u.info /bihell/userinfo

另外还有一个拷贝命令 fs –cphadoop fs -cp /bihell/movies/u.item /bihell

删除命令 fs -rmhadoop fs -rm /bihell/u.item

拷贝多个文件hadoop fs -mkdir /bihell/test hadoop fs -cp /bihell/movies/u.item /bihell/userinfo/u.info /bihell/test

递归删除文件hadoop fs -rm -r -skipTrash /bihell/test

显示文件内容hadoop fs -cat /bihell/movies/* |less

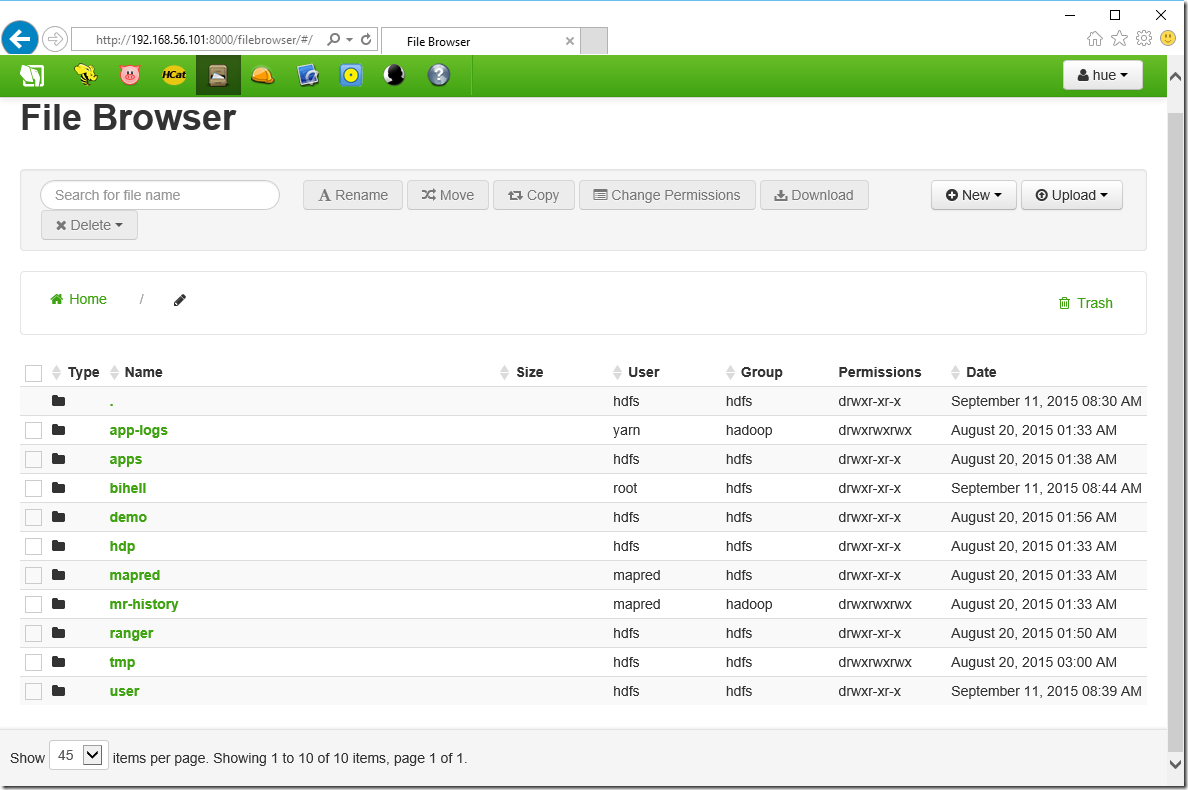

三、 使用hue ui 的文件浏览器操作文件

根据沙盘的提示访问 http://192.168.56.101:8000/filebrowser/#/ 我们可以看到刚才建立的目录。 (还是UI方便点啊)

使用Hive并且将数据导入仓库

一、先看一下Demo里面的Hive目录

hadoop fs -ls /apps/hive/warehouse

Found 3 items drwxrwxrwx - hive hdfs 0 2015-08-20 09:05 /apps/hive/warehouse/sample_07 drwxrwxrwx - hive hdfs 0 2015-08-20 09:05 /apps/hive/warehouse/sample_08 drwxrwxrwx - hive hdfs 0 2015-08-20 08:58 /apps/hive/warehouse/xademo.db

hadoop fs -ls /apps/hive/warehouse/sample_07

Found 1 items -rwxr-xr-x 1 hue hue 46055 2015-08-20 08:46 /apps/hive/warehouse/sample_07/sample_07

查看文件内容

hadoop fs -cat /apps/hive/warehouse/sample_07/sample_07 | less

二、使用hive命令

进入hive数据库

hive

显示hive中的数据库

show databases;

显示表格

show tables; show tables '*08*';

清空屏幕

!clear;

进一步查看表格结构

describe sample_07; describe extended sample_07 ;

创建数据库

create database bihell;

使用hadoop fs命令查看下hive 目录,我们刚才创建的数据库文件应该在里面了

!hadoop fs -ls /apps/hive/warehouse/;

结果如下:

Found 4 items drwxrwxrwx - root hdfs 0 2015-09-12 08:57 /apps/hive/warehouse/bihell.db drwxrwxrwx - hive hdfs 0 2015-08-20 09:05 /apps/hive/warehouse/sample_07 drwxrwxrwx - hive hdfs 0 2015-08-20 09:05 /apps/hive/warehouse/sample_08 drwxrwxrwx - hive hdfs 0 2015-08-20 08:58 /apps/hive/warehouse/xademo.db

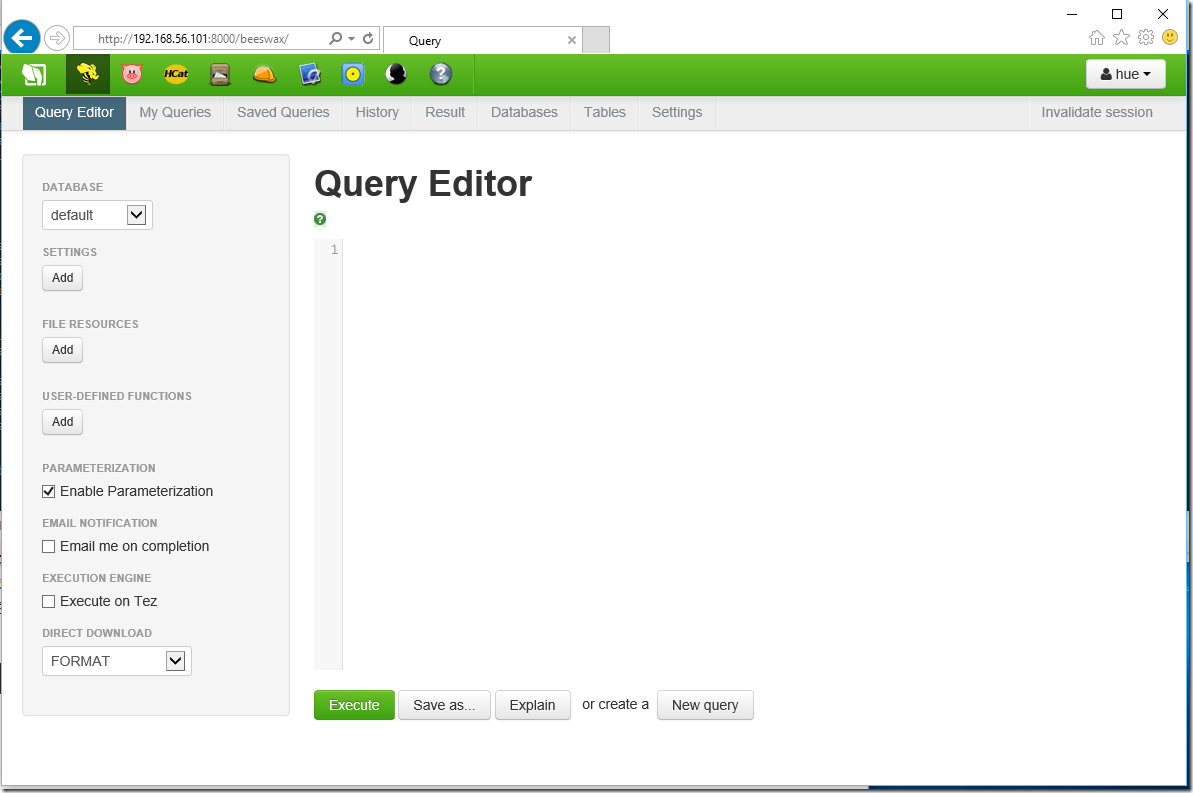

三、使用建立的数据库

一直用命令行比较吃力,我们也可用ui界面

在我们新建的bihell数据库中建立表格

CREATE TABLE movies (

movie_id INT,

movie_title STRING,

release_date STRING,

video_release_date STRING,

imdb_url STRING,

unknown INT,

action INT,

adventure INT,

animation INT,

children INT,

comedy INT,

crime INT,

documentary INT,

drama INT,

fantasy INT,

film_noir INT,

horror INT,

musical INT,

mystery INT,

romance INT,

sci_fi INT,

thriller INT,

war INT,

Western INT

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '|'

STORED AS TEXTFILE;

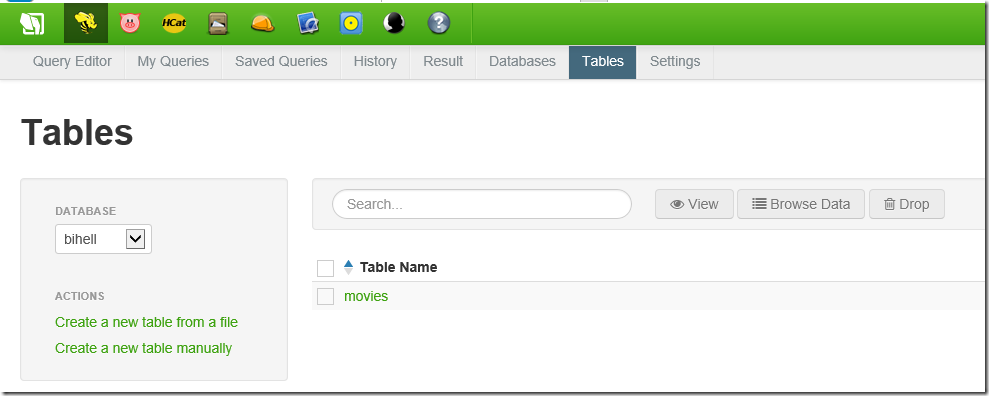

创建完毕以后点击Tables可以看到我们刚才创建的表格

在SSH执行文件命令,我们可以看到bihell.db下面多了一个目录

hadoop fs -ls /apps/hive/warehouse/bihell.db

Found 1 items drwxrwxrwx - hive hdfs 0 2015-09-12 09:09 /apps/hive/warehouse/bihell.db/movies

四、进入hive ,我们导入一些数据进去

导入数据

lOAD DATA INPATH '/bihell/userinfo' INTO TABLE movies;

清空数据

truncate table movies;

导入并覆盖原有数据

load data inpath '/bihell/movies' overwrite into table movies;

四、建立External表与RCFile 表

前面我们建立表以后导入数据到表中, 目录中的文件会被删除,现在我们直接建立表并指向我们所在的文件目录,建立外部表.

复原文件

!hadoop fs -put /home/bihell/ml-100k/u.user /bihell/userinfo;

建立另外一个表格,注意有指定路径

CREATE EXTERNAL TABLE users ( user_id INT, age INT, gender STRING, occupation STRING, zip_code STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION '/bihell/userinfo';

查看users的schema

describe formatted users;

查询表

SELECT * FROM users limit 100;

创建 RCFile 表格

CREATE TABLE occupation_count STORED AS RCFile AS SELECT COUNT(*), occupation FROM users GROUP BY occupation;

引用另外一个表创建一个空表

CREATE TABLE occupation2 LIKE occupation_count;

Hive 查询语言

我们之前已经用了部分hive查询,现在深入一下

一、复杂类型

Arrays – ARRAY<data_type>

Maps -- MAP<primitive,data_type>

Struct -- STRUCT<col_name:data_type[COMMENT col_comment],…>

Union Type – UNIONYTPE<data_type,data_type,…>

create table movies (

movie_name string,

participants ARRAY <string>,

release_dates MAP <string,timestamp>,

studio_addr STRUCT <state:string,city:string,zip:string,streetnbr:int,streetname:string,unit:string>,

complex_participants MAP<string,STRUCT<address:string,attributes MAP<string,string>>>

misc UNIONTYPE <int,string,ARRAY<double>>

);

查询方式

select movie_name,

participants[0],

release_dates[“USA”],

studio_addr.zip,

complex_participants[“Leonardo DiCaprio”].attributes[“fav_color”],

misc

from movies;

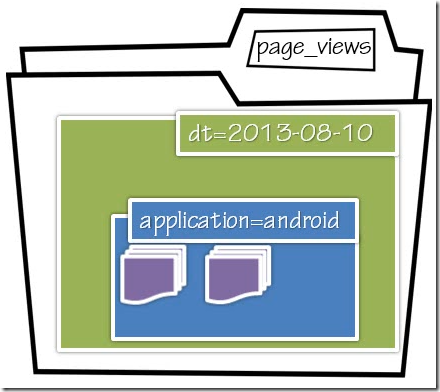

二、Partitioned Tables

这个章节主要讲述加载与管理Hive中的数据

前面我们使用了CREATE TABLE 以及 CREATE EXTERNAL TABLE 本文我们要看下Table Partitions

创建分区表:

CREATE TABLE page_views( eventTime STRING, userid STRING) PARTITIONED BY (dt STRING, applicationtype STRING) STORED AS TEXTFILE;

数据库文件默认地址 :

/apps/hive/warehouse/page_views

当你每次导入数据的时候都会为你建立partition ,比如

LOAD DATA INPATH ‘/mydata/android'/Aug_10_2013/pageviews/’ INTO TABLE page_views PARTITION (dt = ‘2013-08-10’, applicationtype = ‘android’);

生成分区如下:

/apps/hive/warehouse/page_views/dt=2013-08-10/application=android

当然我们也可以覆盖导入

LOAD DATA INPATH ‘/mydata/android'/Aug_10_2013/pageviews/’ OVERWRITE INTO TABLE page_views PARTITION (dt = ‘2013-08-10’, applicationtype = ‘android’);

创建语句中dt和applicationtype 是virtual partition columns. 如果你describe table,会发现所有字段显示和正常表一样

eventTime STRING

userid STRING

page STRING

dt STRING

applicationtype STRING

可以直接用于查询

select dt as eventDate,page,count(*) as pviewCount From page_views where applicationtype = ‘iPhone’;

三、External Partitioned Tables

相比分区表,只是多了一个EXTERNAL ,我们注意到这里没有指定location ,添加文件的时候才需要指定

CREATE EXTERNAL TABLE page_views( eventTime STRING, userid STRING) PARTITIONED BY (dt STRING, applicationtype STRING) STORED AS TEXTFILE;

添加文件

ALTER TABLE page_views ADD PARTITION ( dt = ‘2013-09-09’, applicationtype = ‘Windows Phone 8’) LOCATION ‘/somewhere/on/hdfs/data/2013-09-09/wp8’; ALTER TABLE page_view ADD PARTITION (dt=’2013-09-09’,applicationtype=’iPhone’) LOCATION ‘hdfs://NameNode/somewhere/on/hdfs/data/iphone/current’; ALTER TABLE page_views ADD IF NOT EXSTS PARTITION (dt=’2013-09-09’,applicationtype=’iPhone’) LOCATION ‘/somewhere/on/hdfs/data/iphone/current’; PARTITION (dt=’2013-09-08’,applicationtype=’iPhone’) LOCATION ‘/somewhere/on/hdfs/data/prev1/iphone; PARTITION (dt=’2013-09-07’,applicationtype=’iPhone’) LOCATION ‘/somewhere/on/hdfs/data/iphone/prev2;

四、实际操作

EXTERNAL PARTITION TABLE

--建立目录 hadoop fs -mkdir /bihell/logs/pv_ext/somedatafor_7_11 /bihell/logs/pv_ext/2013/08/11/log/data --建立EXTERNAL TABLE CREATE EXTERNAL TABLE page_views_ext (logtime STRING, userid INT, ip STRING, page STRING, ref STRING, os STRING, os_ver STRING, agent STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ' LOCATION '/bihell/logs/pv_ext/'; --查看表格详细信息 DESCRIBE FORMATTED page_views_ext; --查看执行计划 EXPLAIN SELECT * FROM page_views_ext WHERE userid = 13; --删除表 DROP TABLE page_views_ext; --创建EXTERNAL Partition Table CREATE EXTERNAL TABLE page_views_ext (logtime STRING, userid INT, ip STRING, page STRING, ref STRING, os STRING, os_ver STRING, agent STRING) PARTITIONED BY (y STRING, m STRING, d STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ' LOCATION '/bihell/logs/pv_ext/'; --将日志传送至Hadoop目录 !hadoop fs -put /media/sf_VM_Share/LogFiles/log_2013711_155354.log /bihell/logs/pv_ext/somedatafor_7_11 --因为是partition table 所以此时查询该表是没有任何内容的 SELECT * FROM page_views_ext; --添加文件 ALTER TABLE page_views_ext ADD PARTITION (y='2013', m='07', d='11') LOCATION '/bihell/logs/pv_ext/somedatafor_7_11'; --再次查询 SELECT * FROM page_views_ext LIMIT 100; --describe table DESCRIBE FORMATTED page_views_ext; --再次查看执行计划 我们发现predicate还是13, 并没有加上 m,d EXPLAIN SELECT * FROM page_views_ext WHERE userid=13 AND m='07'AND d='11' LIMIT 100; --再添加一个文件 !hadoop fs -put /media/sf_VM_Share/LogFiles/log_2013811_16136.log /bihell/logs/pv_ext/2013/08/11/log/data ALTER TABLE page_views_ext ADD PARTITION (y='2013', m='08', d='11') LOCATION '/bihell/logs/pv_ext/2013/08/11/log/data'; --查询 SELECT COUNT(*) as RecordCount, m FROM page_views_ext WHERE d='11' GROUP BY m; --另一种方式添加数据 !hadoop fs -put /media/sf_VM_Share/LogFiles/log_2013720_162256.log /bihell/logs/pv_ext/y=2013/m=07/d=20/data.log SELECT * FROM page_views_ext WHERE m='07' AND d='20' LIMIT 100; MSCK REPAIR TABLE page_views_ext; SELECT * FROM page_views_ext WHERE m='07' AND d='20' LIMIT 100;

PARTITION TABLE

CREATE TABLE page_views (logtime STRING, userid INT, ip STRING, page STRING, ref STRING, os STRING, os_ver STRING, agent STRING) PARTITIONED BY (y STRING, m STRING, d STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '; LOAD DATA LOCAL INPATH '/media/sf_VM_Share/LogFiles/log_2013805_16210.log' OVERWRITE INTO TABLE page_views PARTITION (y='2013', m='08', d='05'); !hadoop fs -ls /apps/hive/warehouse/bihell.db/page_views/;

批量插入及动态分区表插入

Multiple Inserts

--Syntax

FROM form_statement

INSERT OVERWRITE TABLE table1 [PARTITION(partcol1=val1,partcol2=val2)] select_statement1

INSERT INTO TABLE table2 [PARTITION(partcol1=val1,partcol2=val2)[IF NOT EXISTS]] select_statements2

INSERT OVERWRITE DIRECTORY ‘path’ select_statement3;

-- 提取操作 FROM movies INSERT OVERWRITE TABLE horror_movies SELECT * WHERE horror = 1 AND release_date=’8/23/2013’ INSERT INTO action_movies SELECT * WHERE action = 1 AND release_date = ‘8/23/2013’; FROM (SELECT * FROM movies WHERE release_date =’8/23/2013’) src INSERT OVERWRITE TABLE horror_movies SELECT * WHERE horror =1 INSERT INTO action_movies SELECT * WHERE action = 1;

Dynamic Partition Inserts

CREATE TABLE views_stg (eventTime STRING, userid STRING)

PARTITIONED BY(dt STRING,applicationtype STRING,page STRING);

FROM page_views src

INSERT OVERWRITE TABLE views_stg PARTITION (dt=’2013-09-13’,applicationtype=’Web’,page=’Home’)

SELECT src.eventTime,src.userid WHERE dt=’2013-09-13’ AND applicationtype=’Web’,page=’Home’

INSERT OVERWRITE TABLE views_stg PARTITION (dt=’2013-09-14,applicationtype=’Web’,page=’Cart’)

SELECT src.eventTime,src.userid WHERE dt=’2013-09-14’ AND applicationtype=’Web’,page=’Cart’

INSERT OVERWRITE TABLE views_stg PARTITION (dt=’2013-09-15’,applicationtype=’Web’,page=’Checkout’)

SELECT src.eventTime,src.userid WHERE dt=’2013-09-15’ AND applicationtype=’Web’,page=’Checkout’

FROM page_views src

INSERT OVERWRITE TABLE views_stg PARTITION (applicationtype=’Web’,dt,page)

SELECT src.eventTime,src.userid,src.dt,src.page WHERE applicationtype=’Web’

实例

!hadoop fs -mkdir /bihell/logs/multi_insert; !hadoop fs -put /media/sf_VM_Share/LogFiles/log_2012613_161117.log /media/sf_VM_Share/LogFiles/log_2013803_15590.log /bihell/logs/multi_insert -- 创建EXTERNAL TABLE CREATE EXTERNAL TABLE staging (logtime STRING, userid INT, ip STRING, page STRING, ref STRING, os STRING, os_ver STRING, agent STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ' LOCATION '/bihell/logs/multi_insert'; --批量插入 PARTITION INSERT INTO TABLE page_views PARTITION (y, m, d) SELECT logtime, userid, ip, page, ref, os, os_ver, agent, substr(logtime, 7, 4), substr(logtime, 1, 2), substr(logtime, 4, 2) FROM staging; SET hive.exec.dynamic.partition.mode=nonstrict; INSERT INTO TABLE page_views PARTITION (y, m, d) SELECT logtime, userid, ip, page, ref, os, os_ver, agent, substr(logtime, 7, 4), substr(logtime, 1, 2), substr(logtime, 4, 2) FROM staging; SELECT * FROM page_views WHERE y='2012' LIMIT 100; select regexp_replace(logtime, '/', '-') from staging; select substr(logtime, 7, 4), substr(logtime, 1, 2), substr(logtime, 4, 2) from staging;