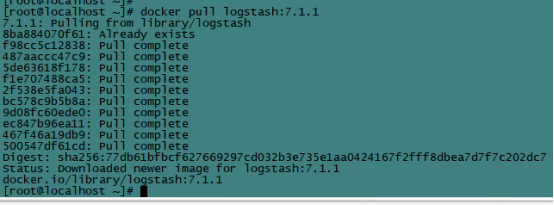

1. Pull the image

[root@vanje-dev01 ~]# docker pull logstash:7.0.1

2. Install and deploy

2.1 Create the host directories to be mounted

# mkdir /etc/logstash/

# mkdir /etc/logstash/conf.d

2.2 Edit the configuration file

# vim /etc/logstash/logstash.yml

http.host: "0.0.0.0"

# touch /etc/logstash/patterns

# chown -R 1000:1000 /etc/logstash/
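The steps in 2.1 and 2.2 can be collected into one small function, sketched below. `prepare_logstash_dirs` is a hypothetical helper name, not part of Logstash or Docker. The `chown` to 1000:1000 assumes, as the step above does, that the container runs Logstash under UID/GID 1000, and it is skipped when the function is not run as root.

```shell
# Hypothetical helper: prepare the host-side Logstash files and directories.
# $1 is the root directory; it defaults to /etc/logstash as in the steps above.
prepare_logstash_dirs() {
  local root="${1:-/etc/logstash}"

  # Creates both the root directory and conf.d in one call.
  mkdir -p "$root/conf.d"

  # Same single setting as in logstash.yml above.
  printf 'http.host: "0.0.0.0"\n' > "$root/logstash.yml"

  # The steps above create "patterns" as an empty file.
  touch "$root/patterns"

  # Changing ownership needs root; assumes the container user is UID/GID 1000.
  if [ "$(id -u)" -eq 0 ]; then
    chown -R 1000:1000 "$root"
  fi
}
```

Calling `prepare_logstash_dirs` with no argument uses /etc/logstash. Passing another path, for example a scratch directory, lets the layout be checked before touching the real host.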

2.3 Start the container

# docker run -d --name logstash \
    -p 5044:5044 -p 9600:9600 \
    --net=host --restart=always \
    -v /home/logstash:/usr/share/logstash \
    logstash:7.0.1

Notes on the mounted paths:

1. /etc/logstash/logstash.yml: Logstash startup configuration file

2. /etc/logstash/conf.d: Logstash log filtering rules

3. /etc/logstash/patterns: custom match patterns
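Once the container is started, it may help to confirm that Logstash actually came up before wiring anything to it. The sketch below polls the Logstash monitoring API on port 9600, one of the ports used in the run command above. `wait_for_logstash`, its default attempt count, and its delay are illustrative choices, and `curl` is assumed to be installed on the host.

```shell
# Poll the Logstash monitoring API until it answers or the attempts run out.
# Arguments (all optional, defaults are assumptions): URL, attempts, delay in seconds.
wait_for_logstash() {
  local url="${1:-http://localhost:9600/?pretty}"
  local attempts="${2:-30}"
  local delay="${3:-2}"
  local i=1

  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors as failures; -sS: no progress bar, but still print errors.
    if curl -fsS --max-time 5 "$url"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done

  echo "Logstash API did not respond at $url" >&2
  return 1
}
```

With no arguments, `wait_for_logstash` tries up to 30 times, two seconds apart. If it gives up, `docker logs logstash` shows the container's startup log.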

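In production, to keep the ELK cluster stable, a Redis or Kafka cluster is usually placed in front of Logstash (which parses and splits the logs) as a buffer.

Spring Cloud + Logback + Logstash + RabbitMQ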

pom.xml

<!-- Write Logback logs to RabbitMQ -->

<dependency>

<groupId>org.springframework.amqp</groupId>

<artifactId>spring-rabbit</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

</dependency>

logback-spring.xml configuration

<?xml version="1.0" encoding="UTF-8"?>

<configuration debug="false">

<!-- Directory where log files are stored -->

<property name="LOG_HOME" value="../logs/system" />

<!--<property name="COLOR_PATTERN" value="%black(%contextName-) %red(%d{yyyy-MM-dd HH:mm:ss}) %green([%thread]) %highlight(%-5level) %boldMagenta( %replace(%caller{1}){' |Caller.{1}0| ', ''})- %gray(%msg%xEx%n)" />-->

<!-- Console output -->

<!--<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">-->

<!-- <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">-->

<!-- <!–Format: %d is the date, %thread the thread name, %-5level the level left-aligned to a width of 5 characters, %msg the log message, %n a newline-->

<!-- <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50}:%L - %msg%n</pattern>–>-->

<!-- <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %highlight(%-5level) %cyan(%logger{50}:%L) - %msg%n</pattern>-->

<!-- </encoder>-->

<!--</appender>-->

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<layout class="ch.qos.logback.classic.PatternLayout">

<pattern>%highlight(%d{yyyy-MM-dd HH:mm:ss.SSS}) %boldYellow([%thread]) %-5level %logger{36}- %msg%n</pattern>

</layout>

</appender>

<!-- Roll over to a new log file every day -->

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- File name pattern for the log files -->

<FileNamePattern>${LOG_HOME}/jeecgboot-system-%d{yyyy-MM-dd}.log</FileNamePattern>

<!-- Number of days of log files to keep -->

<MaxHistory>7</MaxHistory>

</rollingPolicy>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<!-- Format: %d is the date, %thread the thread name, %-5level the level left-aligned to a width of 5 characters, %msg the log message, %n a newline -->

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50}:%L - %msg%n</pattern>

</encoder>

</appender>

<!-- Start of the error log appender (the HTML layout variant is commented out) -->

<appender name="ERROR_LOG" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<!-- Threshold level: drop events below ERROR (such as INFO) so that only ERROR logs are written -->

<level>ERROR</level>

</filter>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<!--<encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">-->

<!-- Format: %d is the date, %thread the thread name, %-5level the level left-aligned to a width of 5 characters, %msg the log message, %n a newline -->

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50}:%L - %msg%n</pattern>

<!--<layout class="ch.qos.logback.classic.html.HTMLLayout">-->

<!-- <pattern>%p%d%msg%M%F{32}%L</pattern>-->

<!--</layout>-->

</encoder>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- File name pattern for the log files -->

<FileNamePattern>${LOG_HOME}/error-log-%d{yyyy-MM-dd}.log</FileNamePattern>

<!--<!–Number of days of log files to keep –>-->

<MaxHistory>7</MaxHistory>

</rollingPolicy>

<!--<file>${LOG_HOME}/error-log.html</file>-->

</appender>

<!-- Write Logback logs to RabbitMQ -->

<appender name="RabbitMq"

class="org.springframework.amqp.rabbit.logback.AmqpAppender">

<layout>

<pattern><![CDATA[ %d %p %t [%c] - <%m>%n ]]></pattern>

</layout>

<!-- RabbitMQ address -->

<addresses>192.168.0.20:5670</addresses>

<abbreviation>36</abbreviation>

<includeCallerData>true</includeCallerData>

<applicationId>system</applicationId>

<username>admin</username>

<password>admin</password>

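<!-- Routing key -->

<!--{applicationId} -->

<!-- With %property{applicationId}.%c.%p no logs were collected -->

<!-- Switched to an exact-match routing key in the end. This is essential: the Logstash input binds with the key "system", which matches only that exact routing key -->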

<routingKeyPattern>system</routingKeyPattern>

<generateId>true</generateId>

<charset>UTF-8</charset>

<durable>true</durable>

<deliveryMode>NON_PERSISTENT</deliveryMode>

<declareExchange>true</declareExchange>

<autoDelete>false</autoDelete>

</appender>

<!-- MyBatis logging configuration -->

<logger name="org.apache.ibatis" level="TRACE" />

<logger name="java.sql.Connection" level="DEBUG" />

<logger name="java.sql.Statement" level="DEBUG" />

<logger name="java.sql.PreparedStatement" level="DEBUG" />

<!-- Log output level -->

<root level="INFO">

<appender-ref ref="STDOUT" />

<appender-ref ref="FILE" />

<appender-ref ref="ERROR_LOG" />

<!-- The RabbitMQ appender must be referenced here as well -->

<appender-ref ref="RabbitMq" />

</root>

</configuration>
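The routing-key comments in the appender above come down to how the exchange, queue, and binding line up on the broker. One way to inspect that is with `rabbitmqctl`, sketched below. `show_log_routing` is a hypothetical wrapper name. It assumes `rabbitmqctl` is on the PATH of the machine (or container) running RabbitMQ and that the default virtual host is in use.

```shell
# Hypothetical wrapper: print the exchanges, queues and bindings on the broker
# so the appender side and the consumer side can be compared.
show_log_routing() {
  if ! command -v rabbitmqctl >/dev/null 2>&1; then
    echo "rabbitmqctl not found; run this on the RabbitMQ host" >&2
    return 1
  fi

  # Exchanges with their type and durability.
  rabbitmqctl list_exchanges name type durable

  # Queues and the number of messages waiting in each.
  rabbitmqctl list_queues name messages

  # Which exchange feeds which queue, and under which routing key.
  rabbitmqctl list_bindings source_name destination_name routing_key
}
```

In the bindings output, the key bound to the queue has to match what `routingKeyPattern` produces. On a topic exchange, a binding key without wildcards, such as `system`, matches only that exact routing key.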

Logstash configuration

vi /home/logstash/config/conf.d/system-log.conf

input {

rabbitmq {

type =>"all"

durable => true

exchange => "logs"

exchange_type => "topic"

key => "system"

host => "192.168.0.20"

port => 5670

user => "admin"

password => "admin"

queue => "system-mq"

auto_delete => false

}

}

output {

elasticsearch {

hosts => ["192.168.0.23:9200"]

index => "system_log_mq"

}

}
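To check that events make it all the way through, the index named in the output section can be queried directly. The sketch below uses the Elasticsearch `_count` API. The function names are hypothetical, the defaults are simply the address and index from the configuration above, and `curl` is assumed to be available.

```shell
# Build the _count URL for an index (plain string helper).
# $1: Elasticsearch host:port, $2: index name.
es_count_url() {
  printf 'http://%s/%s/_count?pretty\n' "$1" "$2"
}

# Ask Elasticsearch how many documents the log index currently holds.
# Defaults are taken from the Logstash output section above.
count_log_docs() {
  local es="${1:-192.168.0.23:9200}"
  local index="${2:-system_log_mq}"
  curl -fsS --max-time 10 "$(es_count_url "$es" "$index")"
}
```

Running `count_log_docs` a few times while the application is logging should show the count increasing if the path from Logback through RabbitMQ and Logstash to Elasticsearch is working.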