作者:Grey

原文地址:Java IO学习笔记二:DirectByteBuffer与HeapByteBuffer

ByteBuffer.allocate()与ByteBuffer.allocateDirect()的基本使用

这两个API封装了一个统一的ByteBuffer返回值,在使用上是无差别的。

import java.nio.ByteBuffer;

public class TestByteBuffer {

public static void main(String[] args) {

ByteBuffer buffer = ByteBuffer.allocateDirect(1024);

System.out.println("position: " + buffer.position());

System.out.println("limit: " + buffer.limit());

System.out.println("capacity: " + buffer.capacity());

System.out.println("mark: " + buffer);

buffer.put("123".getBytes());

System.out.println("-------------put:123......");

System.out.println("mark: " + buffer);

buffer.flip();

System.out.println("-------------flip......");

System.out.println("mark: " + buffer);

buffer.get();

System.out.println("-------------get......");

System.out.println("mark: " + buffer);

buffer.compact();

System.out.println("-------------compact......");

System.out.println("mark: " + buffer);

buffer.clear();

System.out.println("-------------clear......");

System.out.println("mark: " + buffer);

}

}

输出结果是:

mark: java.nio.DirectByteBuffer[pos=0 lim=1024 cap=1024]

-------------put:123......

mark: java.nio.DirectByteBuffer[pos=3 lim=1024 cap=1024]

-------------flip......

mark: java.nio.DirectByteBuffer[pos=0 lim=3 cap=1024]

-------------get......

mark: java.nio.DirectByteBuffer[pos=1 lim=3 cap=1024]

-------------compact......

mark: java.nio.DirectByteBuffer[pos=2 lim=1024 cap=1024]

-------------clear......

mark: java.nio.DirectByteBuffer[pos=0 lim=1024 cap=1024]

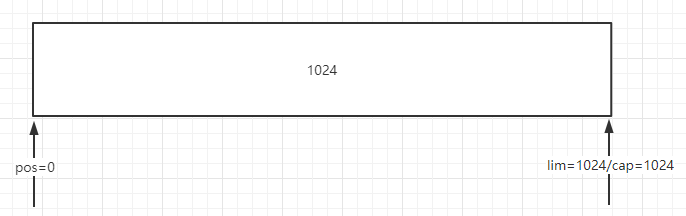

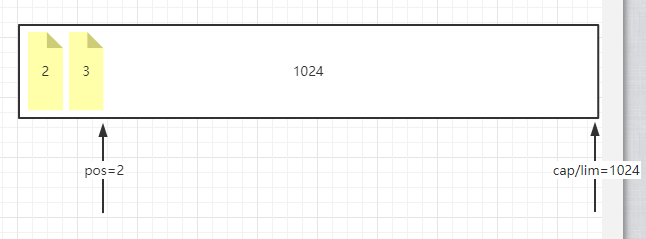

当分配好1024空间后,未对ByteBuffer做任何操作的时候,position最初就是0位置,limit和capcity都是1024位置,如图:

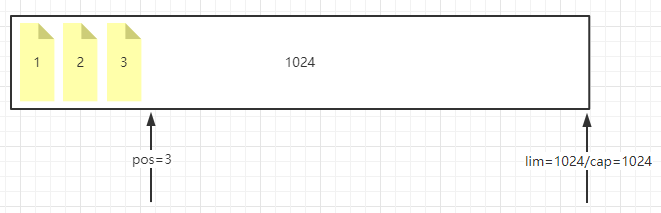

当put进去123三个字符以后:

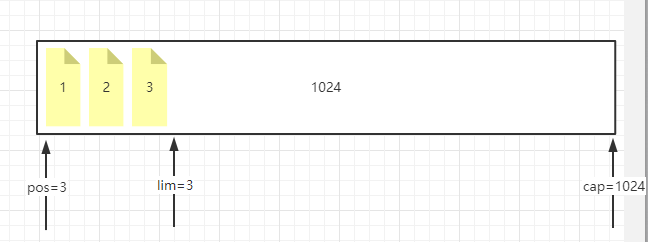

执行flip后,pos会回到原点,lim会到目前写入的位置,这个方法主要用于读取数据:

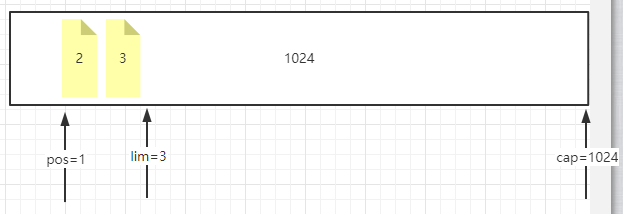

调用get方法,拿出一个byte,如下图:

调用compact,会把前面拿掉的1个Byte位置填充:

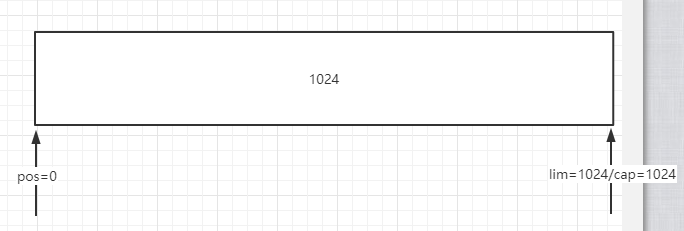

调用clear会让整个内存回到初始分配状态:

ByteBuffer.allocate()与ByteBuffer.allocateDirect()方法的区别

可以参考:

Ron Hitches in his excellent book Java NIO seems to offer what I thought could be a good answer to your question:

Operating systems perform I/O operations on memory areas. These memory areas, as far as the operating system is concerned, are contiguous sequences of bytes. It's no surprise then that only byte buffers are eligible to participate in I/O operations. Also recall that the operating system will directly access the address space of the process, in this case the JVM process, to transfer the data. This means that memory areas that are targets of I/O perations must be contiguous sequences of bytes. In the JVM, an array of bytes may not be stored contiguously in memory, or the Garbage Collector could move it at any time. Arrays are objects in Java, and the way data is stored inside that object could vary from one JVM implementation to another.

For this reason, the notion of a direct buffer was introduced. Direct buffers are intended for interaction with channels and native I/O routines. They make a best effort to store the byte elements in a memory area that a channel can use for direct, or raw, access by using native code to tell the operating system to drain or fill the memory area directly.

Direct byte buffers are usually the best choice for I/O operations. By design, they support the most efficient I/O mechanism available to the JVM. Nondirect byte buffers can be passed to channels, but doing so may incur a performance penalty. It's usually not possible for a nondirect buffer to be the target of a native I/O operation. If you pass a nondirect ByteBuffer object to a channel for write, the channel may implicitly do the following on each call:

Create a temporary direct ByteBuffer object.

Copy the content of the nondirect buffer to the temporary buffer.

Perform the low-level I/O operation using the temporary buffer.

The temporary buffer object goes out of scope and is eventually garbage collected.

This can potentially result in buffer copying and object churn on every I/O, which are exactly the sorts of things we'd like to avoid. However, depending on the implementation, things may not be this bad. The runtime will likely cache and reuse direct buffers or perform other clever tricks to boost throughput. If you're simply creating a buffer for one-time use, the difference is not significant. On the other hand, if you will be using the buffer repeatedly in a high-performance scenario, you're better off allocating direct buffers and reusing them.

Direct buffers are optimal for I/O, but they may be more expensive to create than nondirect byte buffers. The memory used by direct buffers is allocated by calling through to native, operating system-specific code, bypassing the standard JVM heap. Setting up and tearing down direct buffers could be significantly more expensive than heap-resident buffers, depending on the host operating system and JVM implementation. The memory-storage areas of direct buffers are not subject to garbage collection because they are outside the standard JVM heap.

The performance tradeoffs of using direct versus nondirect buffers can vary widely by JVM, operating system, and code design. By allocating memory outside the heap, you may subject your application to additional forces of which the JVM is unaware. When bringing additional moving parts into play, make sure that you're achieving the desired effect. I recommend the old software maxim: first make it work, then make it fast. Don't worry too much about optimization up front; concentrate first on correctness. The JVM implementation may be able to perform buffer caching or other optimizations that will give you the performance you need without a lot of unnecessary effort on your part.

allocate分配方式产生的内存开销是在JVM中的,allocateDirect分配方式产生的开销在JVM之外,以就是系统级的内存分配。系统级别内存的分配比JVM内存的分配要耗时多。所以并非不论什么时候 allocateDirect的操作效率都是很高的。

那什么时候使用堆内存,什么时候使用直接内存?

参考:NIO ByteBuffer 的 allocate 和 allocateDirect 的区别

什么情况下使用DirectByteBuffer(ByteBuffer.allocateDirect(int))?

1、频繁的native IO,即缓冲区 中转 从操作系统获取的文件数据、或者使用缓冲区中转网络数据等

2、不需要经常创建和销毁DirectByteBuffer对象

3、经常复用DirectByteBuffer对象,即经常写入数据到DirectByteBuffer中,然后flip,再读取出来,最后clear。。反复使用该DirectByteBuffer对象。

而且,DirectByteBuffer不会占用堆内存。。也就是不会受到堆大小限制,只在DirectByteBuffer对象被回收后才会释放该缓冲区。

什么情况下使用HeapByteBuffer(ByteBuffer.allocate(int))?

1、同一个HeapByteBuffer对象很少被复用,并且该对象经常是用一次就不用了,此时可以使用HeapByteBuffer,因为创建HeapByteBuffer开销比DirectByteBuffer低。

(但是!!创建所消耗时间差距只是一倍以下的差距,一般一次只会创建一个DirectByteBuffer对象反复使用,而不会创建几百个DirectByteBuffer,

所以在创建一个对象的情况下,HeapByteBuffer并没有什么优势,所以,开发中要使用ByteBuffer时,直接用DirectByteBuffer就行了)