This whole project was developed with PyCharm and TensorFlow on Windows.

Source code: https://github.com/Irvinglove/tensorflow_poems/tree/master

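The code is essentially the same as in the previous post on Tang poem generation, so I won't explain it in depth here. For a detailed walkthrough, see: Generating Tang Poems and Lyrics with TensorFlow (Part 1).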

Lyric Generation

I. Loading the lyrics dataset (lyrics.py)

```python
import collections
import os
import sys
import numpy as np
from utils.clean_cn import clean_cn_corpus
#import jieba
import pickle
import codecs

start_token = 'G'
end_token = 'E'

segment_list_file = os.path.abspath('./dataset/data/lyric_seg.pkl')


def process_lyrics(file_name):
    base_dir = os.path.dirname(file_name)
    save_file = os.path.join(base_dir, os.path.basename(file_name).split('.')[0] + '_cleaned.txt')
    start_token = 'G'
    end_token = 'E'
    if not os.path.exists(save_file):
        clean_cn_corpus(file_name, clean_level='all', is_save=False)
    else:
        pass
    with codecs.open(save_file, 'r', encoding="utf-8") as f:
        lyrics = []
        for line in f.readlines():
            # Skip very short lines
            if len(line) < 40:
                continue
            # Wrap each lyric with the start and end tokens
            line = start_token + line + end_token
            lyrics.append(line)
    lyrics = sorted(lyrics, key=lambda line: len(line))
    print('all %d songs...' % len(lyrics))

    # if not os.path.exists(os.path.dirname(segment_list_file)):
    #     os.mkdir(os.path.dirname(segment_list_file))
    # if os.path.exists(segment_list_file):
    #     print('load segment file from %s' % segment_list_file)
    #     with open(segment_list_file, 'rb') as p:
    #         all_words = pickle.load(p)
    # else:
    all_words = []
    for lyric in lyrics:
        all_words += [word for word in lyric]
    # with open(segment_list_file, 'wb') as p:
    #     pickle.dump(all_words, p)
    #     print('segment result have been save into %s' % segment_list_file)

    # Count how many times each character appears
    counter = collections.Counter(all_words)
    print(counter['E'])  # debug: occurrences of the end token
    # Sort by descending frequency
    counter_pairs = sorted(counter.items(), key=lambda x: -x[1])
    words, _ = zip(*counter_pairs)
    print('E' in words)  # debug: the end token must be in the vocabulary

    words = words[:len(words)] + (' ',)
    word_int_map = dict(zip(words, range(len(words))))
    # Translate every lyric into a vector of ints
    lyrics_vector = [list(map(lambda word: word_int_map.get(word, len(words)), lyric)) for lyric in lyrics]
    return lyrics_vector, word_int_map, words


def generate_batch(batch_size, lyrics_vec, word_to_int):
    # Split the lyrics into n_chunk batches of batch_size each
    n_chunk = len(lyrics_vec) // batch_size
    x_batches = []
    y_batches = []
    for i in range(n_chunk):
        start_index = i * batch_size
        end_index = start_index + batch_size
        batches = lyrics_vec[start_index:end_index]
        # Each batch is as long as its longest lyric
        length = max(map(len, batches))
        # Create an empty batch of that size, filled with the index of the space character as padding
        x_data = np.full((batch_size, length), word_to_int[' '], np.int32)
        for row in range(batch_size):
            x_data[row, :len(batches[row])] = batches[row]
        y_data = np.copy(x_data)
        # y is x shifted one position to the left (i.e. forward)
        y_data[:, :-1] = x_data[:, 1:]
        """
        x_data             y_data
        [6,2,4,6,9]       [2,4,6,9,9]
        [1,4,2,8,5]       [4,2,8,5,5]
        """
        x_batches.append(x_data)
        y_batches.append(y_data)
    return x_batches, y_batches
```
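Since `generate_batch` amounts to padding plus a one-step shift, its logic can be reproduced without NumPy. The sketch below is a hypothetical pure-Python helper, `make_batches` (not part of the repository), run on made-up ids, with `pad_id` standing in for `word_to_int[' ']`:

```python
def make_batches(lyrics_vec, batch_size, pad_id):
    """Hypothetical pure-Python equivalent of generate_batch."""
    x_batches, y_batches = [], []
    n_chunk = len(lyrics_vec) // batch_size  # leftover lyrics are dropped
    for i in range(n_chunk):
        batch = lyrics_vec[i * batch_size:(i + 1) * batch_size]
        length = max(len(seq) for seq in batch)
        # Pad every row to the longest lyric in this batch
        x = [seq + [pad_id] * (length - len(seq)) for seq in batch]
        # Target at position t is the input at t + 1; the last column keeps its
        # own value, mirroring y_data[:, :-1] = x_data[:, 1:]
        y = [row[1:] + row[-1:] for row in x]
        x_batches.append(x)
        y_batches.append(y)
    return x_batches, y_batches


xs, ys = make_batches([[6, 2, 4, 6, 9], [1, 4, 2], [3, 7]], batch_size=2, pad_id=0)
print(xs)  # [[[6, 2, 4, 6, 9], [1, 4, 2, 0, 0]]]
print(ys)  # [[[2, 4, 6, 9, 9], [4, 2, 0, 0, 0]]]
```

The padded positions are not masked. `total_loss` in model.py averages over every position, so the model is also trained to predict the padding character. Sorting the lyrics by length in `process_lyrics` keeps the amount of padding within each batch small.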

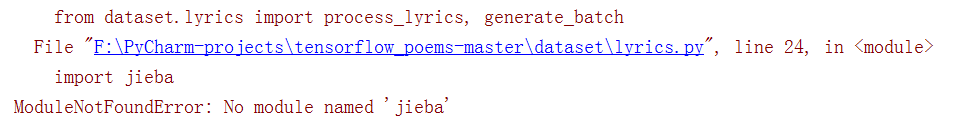

The original source code has a small problem at this point.

Comment out `import jieba`, because jieba word segmentation is no longer used.

Problematic code:

```python
all_words += jieba.lcut(lyric, cut_all=False)
```

Replace it with a character-level split:

```python
all_words += [word for word in lyric]
```
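With a character-level split every character is one token, so the start token `G` and the end token `E` always end up as standalone vocabulary entries. Here is a stdlib-only sketch of the vocabulary step of `process_lyrics` under that tokenization; `build_vocab` is a hypothetical helper name and the two-line corpus is made up:

```python
import collections


def build_vocab(lyrics):
    """Sketch of the vocabulary step in process_lyrics (hypothetical helper).

    Each lyric is expected to be wrapped already as 'G' + text + 'E'.
    """
    all_words = []
    for lyric in lyrics:
        # Character-level split, as in the corrected process_lyrics
        all_words += [word for word in lyric]
    counter = collections.Counter(all_words)
    # Sort by descending frequency; ties keep first-seen order (stable sort)
    counter_pairs = sorted(counter.items(), key=lambda x: -x[1])
    words, _ = zip(*counter_pairs)
    words = words + (' ',)  # space is appended last and used for padding
    word_int_map = dict(zip(words, range(len(words))))
    # Unseen characters map to len(words), the extra id covered by vocab_size + 1 in the model
    vectors = [[word_int_map.get(w, len(words)) for w in lyric] for lyric in lyrics]
    return vectors, word_int_map, words


vectors, word_int_map, words = build_vocab(['G爱你E', 'G你好E'])
print(words)    # ('G', '你', 'E', '爱', '好', ' ')
print(vectors)  # [[0, 3, 1, 2], [0, 1, 4, 2]]
```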

II. Building the model (model.py)

```python
import tensorflow as tf
import numpy as np


def rnn_model(model, input_data, output_data, vocab_size, rnn_size=128, num_layers=2, batch_size=64,
              learning_rate=0.01):
    end_points = {}

    # Choose the basic RNN cell type
    if model == 'rnn':
        cell_fun = tf.contrib.rnn.BasicRNNCell
    elif model == 'gru':
        cell_fun = tf.contrib.rnn.GRUCell
    else:
        cell_fun = tf.contrib.rnn.BasicLSTMCell

    # Stack num_layers cells (two layers here). Each layer gets its own cell instance:
    # reusing one object via [cell] * num_layers raises a variable-reuse error in newer
    # TF 1.x releases. state_is_tuple is only accepted by BasicLSTMCell, where True is
    # already the default, so it is not passed to the individual cells.
    cell = tf.contrib.rnn.MultiRNNCell([cell_fun(rnn_size) for _ in range(num_layers)],
                                       state_is_tuple=True)

    # Training mode: output_data is not None, so the state tensors are [batch_size, rnn_size]
    # Generation mode: output_data is None, so the state tensors are [1, rnn_size]
    if output_data is not None:
        initial_state = cell.zero_state(batch_size, tf.float32)
    else:
        initial_state = cell.zero_state(1, tf.float32)

    # Embedding layer
    with tf.device("/cpu:0"):
        embedding = tf.get_variable('embedding', initializer=tf.random_uniform(
            [vocab_size + 1, rnn_size], -1.0, 1.0))
        inputs = tf.nn.embedding_lookup(embedding, input_data)  # [batch_size, ?, rnn_size]

    outputs, last_state = tf.nn.dynamic_rnn(cell, inputs, initial_state=initial_state)
    output = tf.reshape(outputs, [-1, rnn_size])

    weights = tf.Variable(tf.truncated_normal([rnn_size, vocab_size + 1]))
    bias = tf.Variable(tf.zeros(shape=[vocab_size + 1]))
    logits = tf.nn.bias_add(tf.matmul(output, weights), bias=bias)  # [?, vocab_size+1]

    if output_data is not None:
        # output_data holds integer ids; convert them to one-hot labels
        labels = tf.one_hot(tf.reshape(output_data, [-1]), depth=vocab_size + 1)  # [?, vocab_size+1]
        loss = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)  # [?], one value per position
        total_loss = tf.reduce_mean(loss)
        train_op = tf.train.AdamOptimizer(learning_rate).minimize(total_loss)

        end_points['initial_state'] = initial_state
        end_points['output'] = output
        end_points['train_op'] = train_op
        end_points['total_loss'] = total_loss
        end_points['loss'] = loss
        end_points['last_state'] = last_state
    else:
        prediction = tf.nn.softmax(logits)

        end_points['initial_state'] = initial_state
        end_points['last_state'] = last_state
        end_points['prediction'] = prediction

    return end_points
```
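`tf.nn.softmax_cross_entropy_with_logits` returns one loss value per row of `logits`. With the flattened `[?, vocab_size+1]` logits, the loss therefore has shape `[?]` before `tf.reduce_mean` averages it into `total_loss`. The stdlib sketch below reproduces that computation on two hand-picked positions (the logits and targets are made up) so the shapes are easy to see; it is not TensorFlow's implementation:

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index, depth):
    return [1.0 if i == index else 0.0 for i in range(depth)]


def softmax_cross_entropy(labels, logits):
    """Loss for one row: -sum(labels * log(softmax(logits)))."""
    return -sum(l * math.log(p) for l, p in zip(labels, softmax(logits)))


# Two flattened positions with vocab_size + 1 = 4 classes (values made up)
depth = 4
logits = [[2.0, 0.5, 0.1, 0.0], [0.0, 0.0, 3.0, 0.0]]
targets = [0, 2]  # next-character ids, as in tf.reshape(output_data, [-1])
losses = [softmax_cross_entropy(one_hot(t, depth), row) for t, row in zip(targets, logits)]
total_loss = sum(losses) / len(losses)  # tf.reduce_mean(loss)
print(len(losses))  # 2: one loss per position, not one per class
```

With one-hot labels, each row's loss reduces to the negative log-probability the model assigns to the true next character.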

III. Training the model (song_lyrics.py)

```python
import collections
import os
import sys
import numpy as np
import tensorflow as tf
from models.model import rnn_model
from dataset.lyrics import process_lyrics, generate_batch

tf.app.flags.DEFINE_integer('batch_size', 20, 'batch size.')
tf.app.flags.DEFINE_float('learning_rate', 0.01, 'learning rate.')
tf.app.flags.DEFINE_string('file_path', os.path.abspath('./dataset/data/周杰伦歌词大全.txt'), 'file path of lyrics.')
tf.app.flags.DEFINE_string('checkpoints_dir', os.path.abspath('./checkpoints/lyrics'), 'checkpoints save path.')
tf.app.flags.DEFINE_string('model_prefix', 'lyrics', 'model save prefix.')
tf.app.flags.DEFINE_integer('epochs', 500, 'train how many epochs.')

FLAGS = tf.app.flags.FLAGS

start_token = 'G'
end_token = 'E'


def run_training():
    # Create the checkpoint directory (and its parent) if they do not exist
    if not os.path.exists(os.path.dirname(FLAGS.checkpoints_dir)):
        os.mkdir(os.path.dirname(FLAGS.checkpoints_dir))
    if not os.path.exists(FLAGS.checkpoints_dir):
        os.mkdir(FLAGS.checkpoints_dir)

    poems_vector, word_to_int, vocabularies = process_lyrics(FLAGS.file_path)
    batches_inputs, batches_outputs = generate_batch(FLAGS.batch_size, poems_vector, word_to_int)

    input_data = tf.placeholder(tf.int32, [FLAGS.batch_size, None])
    output_targets = tf.placeholder(tf.int32, [FLAGS.batch_size, None])

    end_points = rnn_model(model='lstm', input_data=input_data, output_data=output_targets,
                           vocab_size=len(vocabularies), rnn_size=128, num_layers=2,
                           batch_size=FLAGS.batch_size, learning_rate=FLAGS.learning_rate)

    saver = tf.train.Saver(tf.global_variables())
    init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
    with tf.Session() as sess:
        # sess = tf_debug.LocalCLIDebugWrapperSession(sess=sess)
        # sess.add_tensor_filter("has_inf_or_nan", tf_debug.has_inf_or_nan)
        sess.run(init_op)

        start_epoch = 0
        checkpoint = tf.train.latest_checkpoint(FLAGS.checkpoints_dir)
        if checkpoint:
            saver.restore(sess, checkpoint)
            print("[INFO] restore from the checkpoint {0}".format(checkpoint))
            # Checkpoints are saved as <model_prefix>-<epoch>, so resume from that epoch
            start_epoch += int(checkpoint.split('-')[-1])
        print('[INFO] start training...')
        try:
            for epoch in range(start_epoch, FLAGS.epochs):
                n = 0
                n_chunk = len(poems_vector) // FLAGS.batch_size
                for batch in range(n_chunk):
                    loss, _, _ = sess.run([
                        end_points['total_loss'],
                        end_points['last_state'],
                        end_points['train_op']
                    ], feed_dict={input_data: batches_inputs[n], output_targets: batches_outputs[n]})
                    n += 1
                    print('[INFO] Epoch: %d , batch: %d , training loss: %.6f' % (epoch, batch, loss))
                # Save a checkpoint every 20 epochs
                if epoch % 20 == 0:
                    saver.save(sess, os.path.join(FLAGS.checkpoints_dir, FLAGS.model_prefix), global_step=epoch)
        except KeyboardInterrupt:
            print('[INFO] Interrupt manually, try saving checkpoint for now...')
            saver.save(sess, os.path.join(FLAGS.checkpoints_dir, FLAGS.model_prefix), global_step=epoch)
            print('[INFO] Last epoch were saved, next time will start from epoch {}.'.format(epoch))


def to_word(predict, vocabs):
    # Sample an index: draw a point in [0, sum) and find where it falls in the cumulative sum
    t = np.cumsum(predict)
    s = np.sum(predict)
    sample = int(np.searchsorted(t, np.random.rand(1) * s))
    # predict has len(vocabs) + 1 entries, so out-of-range samples are remapped
    if sample > len(vocabs) - 1:
        sample = len(vocabs) - 100
    return vocabs[sample]


def gen_lyric():
    batch_size = 1
    poems_vector, word_int_map, vocabularies = process_lyrics(FLAGS.file_path)
    input_data = tf.placeholder(tf.int32, [batch_size, None])

    end_points = rnn_model(model='lstm', input_data=input_data, output_data=None,
                           vocab_size=len(vocabularies), rnn_size=128, num_layers=2,
                           batch_size=64, learning_rate=FLAGS.learning_rate)

    saver = tf.train.Saver(tf.global_variables())
    init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
    with tf.Session() as sess:
        sess.run(init_op)

        checkpoint = tf.train.latest_checkpoint(FLAGS.checkpoints_dir)
        saver.restore(sess, checkpoint)

        # Feed the start token first
        x = np.array([list(map(word_int_map.get, start_token))])
        [predict, last_state] = sess.run([end_points['prediction'], end_points['last_state']],
                                         feed_dict={input_data: x})
        word = to_word(predict, vocabularies)
        print(word)
        lyric = ''
        # Feed one character at a time, carrying last_state forward, until the end token
        while word != end_token:
            lyric += word
            x = np.zeros((1, 1))
            x[0, 0] = word_int_map[word]
            [predict, last_state] = sess.run([end_points['prediction'], end_points['last_state']],
                                             feed_dict={input_data: x, end_points['initial_state']: last_state})
            word = to_word(predict, vocabularies)
            # word = words[np.argmax(probs_)]
        return lyric


def main(is_train):
    if is_train:
        print('[INFO] train song lyric...')
        run_training()
    else:
        print('[INFO] compose song lyric...')
        lyric = gen_lyric()
        lyric_sentences = lyric.split(' ')
        for l in lyric_sentences:
            print(l)
            # if 4 < len(l) < 20:
            #     print(l)


if __name__ == '__main__':
    tf.app.run()
```
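`to_word` samples from the predicted distribution by inverse-CDF sampling: `np.cumsum` builds the cumulative distribution and `np.searchsorted` finds where a uniform draw lands. The stdlib sketch below does the same with `itertools.accumulate` and `bisect.bisect_left` (which corresponds to `searchsorted`'s default left side) and wraps it in a loop shaped like `gen_lyric`. The `next_probs` callback is a hypothetical stand-in for one step of the trained RNN, the toy table is made up, and the `max_len` cap is an addition that the original loop does not have:

```python
import bisect
import itertools
import random


def sample_index(probs, rng):
    """Inverse-CDF sampling, the idea behind to_word."""
    cdf = list(itertools.accumulate(probs))  # like np.cumsum
    u = rng.random() * cdf[-1]               # uniform draw in [0, total)
    return bisect.bisect_left(cdf, u)        # like np.searchsorted (side='left')


def generate(next_probs, vocab, rng, start='G', end='E', max_len=50):
    """Loop shaped like gen_lyric. next_probs(prev_token) is a hypothetical
    stand-in for one RNN step (the real code also feeds last_state back in);
    max_len is an added safety cap that the original loop does not have."""
    text = ''
    word = vocab[sample_index(next_probs(start), rng)]
    while word != end and len(text) < max_len:
        text += word
        word = vocab[sample_index(next_probs(word), rng)]
    return text


# Toy next-character table over a 4-token vocabulary (made up for illustration)
vocab = ['G', '爱', '你', 'E']
table = {'G': [0, 1, 0, 0], '爱': [0, 0, 1, 0], '你': [0, 0, 0, 1]}
print(generate(lambda w: table[w], vocab, random.Random(0)))  # 爱你
```

Sampling, rather than always taking the most likely character (the commented-out `np.argmax` line in `gen_lyric`), is what lets repeated runs produce different lyrics.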

IV. Entry point (main.py)

```python
import argparse


def parse_args():
    parser = argparse.ArgumentParser(description='Intelligence Poem and Lyric Writer.')

    help_ = 'you can set this value in terminal --write value can be poem or lyric.'
    parser.add_argument('-w', '--write', default='lyric', choices=['poem', 'lyric'], help=help_)

    help_ = 'choose to train or generate.'
    parser.add_argument('--train', dest='train', action='store_true', help=help_)
    parser.add_argument('--no-train', dest='train', action='store_false', help=help_)
    parser.set_defaults(train=True)

    args_ = parser.parse_args()
    return args_


if __name__ == '__main__':
    args = parse_args()
    if args.write == 'poem':
        from inference import tang_poems
        if args.train:
            tang_poems.main(True)
        else:
            tang_poems.main(False)
    elif args.write == 'lyric':
        from inference import song_lyrics
        print(args.train)
        if args.train:
            song_lyrics.main(True)
        else:
            song_lyrics.main(False)
    else:
        print('[INFO] write option can only be poem or lyric right now.')
```

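1. Training the lyrics model: the main change is to the `default` and `train` settings in main.py. Set `default='lyric'` (for `--write`) and `train=True`.

2. Generating lyrics: set `default='lyric'` and `train=False`.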
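To see how `default='lyric'` and `set_defaults(train=True)` decide the mode when no flags are given, and how `--no-train` flips it, the sketch below rebuilds main.py's parser (help strings omitted) in a hypothetical `build_parser` function and parses explicit argument lists instead of `sys.argv`. It exercises argparse alone, not the full training script:

```python
import argparse


def build_parser():
    """Re-creation of main.py's parser (help strings omitted) for inspection."""
    parser = argparse.ArgumentParser(description='Intelligence Poem and Lyric Writer.')
    parser.add_argument('-w', '--write', default='lyric', choices=['poem', 'lyric'])
    # --train and --no-train write to the same dest; set_defaults supplies
    # the value used when neither flag is given.
    parser.add_argument('--train', dest='train', action='store_true')
    parser.add_argument('--no-train', dest='train', action='store_false')
    parser.set_defaults(train=True)
    return parser


parser = build_parser()
args = parser.parse_args([])
print(args.write, args.train)  # lyric True
args = parser.parse_args(['-w', 'lyric', '--no-train'])
print(args.write, args.train)  # lyric False
```

Pairing a `store_true` and a `store_false` action on one `dest` is a common argparse idiom for an on/off switch whose default is set in a single place.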