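【1】Introduction to etcd

(1.1) A brief introduction to etcd

etcd is an open-source project started by the CoreOS team in June 2013. Its goal is to build a highly available, distributed key-value database.

Internally, etcd uses the Raft protocol as its consensus algorithm, and it is implemented in Go.

Keywords: high availability, strong consistency, key-value storage.

It is well suited to storing small amounts of critical data;

it is not suited to storing large volumes of data.

As a service discovery system, etcd has the following characteristics:

- Simple: easy to install and configure, and it exposes an HTTP API, so it is also easy to use

- Secure: supports SSL/TLS certificate authentication

- Fast: according to the official benchmark data, a single instance handles 2k+ reads per second

- Reliable: uses the Raft algorithm to keep the data of a distributed system available and consistent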

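(1.2) Common use cases for etcd

1. Service registration and discovery: solves the problem of registering and discovering service addresses in a microservice architecture. It resembles a configuration center, except that service registration and discovery also involve health checking.

Users can register a service in etcd and attach a TTL to the registered key. The service then renews the key periodically as a heartbeat, which lets etcd track whether it is healthy.

2. Leader election: distributed systems often use a leader-follower model to keep stateful services highly available (if the leader fails, a follower takes over immediately). Examples include the high-availability mechanisms of k8s and of Kafka partitions.

etcd makes it easy to implement leader election. Here is one implementation: https://github.com/willstudy/leaderelection

3. Configuration center: etcd is a distributed key-value store with good read/write performance and solid consistency and availability guarantees, which makes it a very good fit for the configuration-center role.

4. Distributed locks: etcd's strong consistency guarantees let it serve as a synchronization mechanism in distributed systems.

5. Publish/subscribe messaging: etcd has a built-in watch mechanism, which is enough to build a small publish/subscribe component.

6. And more: etcd's features make it a good fit for many other scenarios.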
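To make use case 4 concrete, here is a minimal single-process Go sketch of the compare-and-create idea behind an etcd lock. Acquiring the lock succeeds only if the key does not exist yet, that is, only if its create revision is 0. The `lockStore` type, the key `/lock/job`, and the owner names are hypothetical. A real deployment would run this as an etcd transaction from a client. etcd's own `concurrency` package goes further: it attaches the key to a lease and queues waiters by create revision.

```go
package main

import "fmt"

// entry is the current value of a key plus its create revision.
type entry struct {
	value          string
	createRevision int64
}

// lockStore is a hypothetical single-process stand-in for the etcd
// keyspace, just big enough to show the "create only if absent" pattern.
type lockStore struct {
	rev  int64            // global revision, bumped on every write
	data map[string]entry // key -> current entry
}

func newLockStore() *lockStore { return &lockStore{data: make(map[string]entry)} }

// tryLock behaves like the transaction
//
//	if create_revision(key) == 0 then put(key, owner)
//
// A missing key has create revision 0, so only the first caller wins.
func (s *lockStore) tryLock(key, owner string) bool {
	if _, held := s.data[key]; held {
		return false // compare failed: someone already holds the key
	}
	s.rev++
	s.data[key] = entry{value: owner, createRevision: s.rev}
	return true
}

// unlock deletes the key only for its current owner
// (compare value(key) == owner, then delete).
func (s *lockStore) unlock(key, owner string) bool {
	e, held := s.data[key]
	if !held || e.value != owner {
		return false
	}
	s.rev++
	delete(s.data, key)
	return true
}

func main() {
	s := newLockStore()
	fmt.Println(s.tryLock("/lock/job", "worker-a")) // true
	fmt.Println(s.tryLock("/lock/job", "worker-b")) // false: held by worker-a
	fmt.Println(s.unlock("/lock/job", "worker-b"))  // false: not the owner
	fmt.Println(s.unlock("/lock/job", "worker-a"))  // true
	fmt.Println(s.tryLock("/lock/job", "worker-b")) // true: lock is free again
}
```

Checking the owner on unlock keeps one worker from releasing a lock that another worker holds.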

(1.3) Basic usage (CRUD, transactions, revisions)

Basic operations:

The basic commands are put / get / del / watch.
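The watch command is the foundation of the publish/subscribe use case in (1.2). Below is a hypothetical in-memory Go model of that pattern; the `hub` type and the `/topic/...` keys are invented for illustration. Subscribers register a key prefix, and every put or delete under that prefix is pushed to them as an event. Real etcd delivers these events over a gRPC stream rather than through Go channels.

```go
package main

import (
	"fmt"
	"strings"
)

// event mirrors the two kinds of watch events etcd emits: PUT and DELETE.
type event struct {
	Type, Key, Value string
}

// hub is a hypothetical stand-in for etcd's watch feature: subscribers
// register a key prefix and receive every matching change.
type hub struct {
	watchers map[string][]chan event // prefix -> subscriber channels
}

func newHub() *hub { return &hub{watchers: make(map[string][]chan event)} }

// watch subscribes to all keys under prefix. The channel is buffered so
// this single-goroutine demo never blocks.
func (h *hub) watch(prefix string) <-chan event {
	ch := make(chan event, 16)
	h.watchers[prefix] = append(h.watchers[prefix], ch)
	return ch
}

// publish plays the role of a put/delete: it fans the event out to every
// watcher whose prefix matches the key.
func (h *hub) publish(ev event) {
	for prefix, chans := range h.watchers {
		if strings.HasPrefix(ev.Key, prefix) {
			for _, ch := range chans {
				ch <- ev
			}
		}
	}
}

func main() {
	h := newHub()
	orders := h.watch("/topic/orders/")
	h.publish(event{"PUT", "/topic/orders/1", "created"})
	h.publish(event{"PUT", "/topic/users/7", "ignored"}) // different prefix
	h.publish(event{"DELETE", "/topic/orders/1", ""})

	for i := 0; i < 2; i++ {
		ev := <-orders
		fmt.Println(ev.Type, ev.Key, ev.Value)
	}
}
```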

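Transactions: a transaction has three parts, compares => success requests / failure requests (`txn` starts a transaction; `-i` runs it interactively).

Besides comparing values, compares can also test revisions: `create("key") = "47"` checks the key's create revision, and `mod("key")` checks its modification revision.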
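The compares => success/failure structure can be modeled in a few lines. This Go sketch is a toy under stated assumptions, not etcd's implementation. `store`, `valueIs`, `createIs`, `modIs`, and `putOp` are hypothetical names that loosely echo etcdctl's `value()`, `create()`, and `mod()` compares. One simplification differs from etcd: the toy bumps the revision once per put, whereas etcd bumps it once per transaction.

```go
package main

import "fmt"

// kv holds a value plus the revision metadata etcd keeps for each key.
type kv struct {
	value     string
	createRev int64 // revision at which the key was created
	modRev    int64 // revision of the last modification
}

// store is a hypothetical in-memory model of the etcd keyspace.
type store struct {
	rev  int64
	keys map[string]kv
}

func newStore() *store { return &store{keys: make(map[string]kv)} }

// put writes a key. Simplification: this toy bumps the revision on every
// put, whereas etcd gives all writes of one transaction a single revision.
func (s *store) put(key, value string) {
	s.rev++
	create := s.rev
	if old, ok := s.keys[key]; ok {
		create = old.createRev
	}
	s.keys[key] = kv{value: value, createRev: create, modRev: s.rev}
}

// Compare helpers, loosely echoing etcdctl's value("k"), create("k") and
// mod("k"). A missing key reads as value "" and revisions 0.
func valueIs(key, want string) func(*store) bool {
	return func(s *store) bool { return s.keys[key].value == want }
}

func createIs(key string, want int64) func(*store) bool {
	return func(s *store) bool { return s.keys[key].createRev == want }
}

func modIs(key string, want int64) func(*store) bool {
	return func(s *store) bool { return s.keys[key].modRev == want }
}

func putOp(key, value string) func(*store) {
	return func(s *store) { s.put(key, value) }
}

// txn evaluates all compares; if every one holds it runs the success ops,
// otherwise the failure ops. It reports which branch ran.
func (s *store) txn(compares []func(*store) bool, success, failure []func(*store)) bool {
	ok := true
	for _, c := range compares {
		if !c(s) {
			ok = false
			break
		}
	}
	ops := failure
	if ok {
		ops = success
	}
	for _, op := range ops {
		op(s)
	}
	return ok
}

func main() {
	s := newStore()
	s.put("user1", "bad") // revision 1

	// Compare-and-swap: overwrite only if the value is still "bad".
	ok := s.txn(
		[]func(*store) bool{valueIs("user1", "bad")},
		[]func(*store){putOp("user1", "good")},
		[]func(*store){putOp("user1", "conflict")},
	)
	fmt.Println(ok, s.keys["user1"].value) // true good

	// Create only if absent: create("user2") == 0 means the key does not exist.
	ok = s.txn([]func(*store) bool{createIs("user2", 0)}, []func(*store){putOp("user2", "v")}, nil)
	fmt.Println(ok, s.keys["user2"].createRev) // true 3
}
```

The second transaction shows why `create("key") = "0"` is a common idiom. A key that does not exist reads as create revision 0, so the put happens only if nobody has created the key first.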

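【2】etcd cluster architecture

(2.1) Components

(1) gRPC Server: accepts client connections and handles communication between cluster nodes.

(2) WAL (write-ahead log): used to guarantee strong consistency. When a write request (create, update, or delete) arrives, the operation is first appended to the log cache.

In cluster mode, the leader sends the log entry to the followers. Once a majority of the nodes have received it and acknowledged it to the leader, the request is committed. This is essentially similar to MySQL semi-synchronous replication.

The log cache is then persisted to the log file.

(3) snapshot: snapshot data, much like in Redis, used to initialize a follower during replication.

(4) boltdb:

Key storage: keys are indexed with a B+ tree, and every modification of a key is kept as a historical version. `get key -w json` shows the revision information, and `get key --rev=47` reads the key's value as of revision 47.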

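Because old versions are kept, a read can target a past revision, as with `get key --rev=47`. The following Go sketch is a hypothetical toy model of that multi-version behavior; the `mvcc` type and its methods are invented here. Each write takes the next global revision, a delete leaves a tombstone, and `getAt` replays a key's history up to the requested revision. Real etcd is organized differently: it keys its boltdb B+ tree by revision and keeps a separate in-memory index from keys to revisions.

```go
package main

import "fmt"

// version is one historical state of a key.
type version struct {
	rev     int64  // global revision at which this state was written
	value   string // value written (empty for a tombstone)
	deleted bool   // true if this entry records a delete
}

// mvcc is a hypothetical toy model of etcd's multi-version keyspace:
// every write takes the next global revision and old states are kept.
type mvcc struct {
	rev     int64
	history map[string][]version // key -> states in revision order
}

func newMVCC() *mvcc { return &mvcc{history: make(map[string][]version)} }

// put writes a new value under the next global revision.
func (m *mvcc) put(key, value string) {
	m.rev++
	m.history[key] = append(m.history[key], version{rev: m.rev, value: value})
}

// del records a tombstone; deleting a missing key changes nothing.
func (m *mvcc) del(key string) bool {
	if _, _, _, ok := m.getAt(key, m.rev); !ok {
		return false
	}
	m.rev++
	m.history[key] = append(m.history[key], version{rev: m.rev, deleted: true})
	return true
}

// getAt returns the key as of revision atRev, like `etcdctl get key --rev=N`,
// together with its create and mod revisions at that point.
func (m *mvcc) getAt(key string, atRev int64) (value string, createRev, modRev int64, ok bool) {
	for _, v := range m.history[key] {
		if v.rev > atRev {
			break
		}
		if v.deleted {
			value, createRev, modRev, ok = "", 0, 0, false
			continue
		}
		if !ok { // first write after creation (or re-creation)
			createRev = v.rev
		}
		value, modRev, ok = v.value, v.rev, true
	}
	return
}

func main() {
	m := newMVCC()
	m.put("foo", "v1") // revision 1
	m.put("bar", "x")  // revision 2: writing any key bumps the global revision
	m.put("foo", "v2") // revision 3

	v, create, mod, _ := m.getAt("foo", m.rev)
	fmt.Println(v, create, mod) // v2 1 3
	old, _, _, _ := m.getAt("foo", 2)
	fmt.Println(old) // v1
}
```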

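(2.2) How a request is processed

1》client: sends the request to the gRPC Server

2》gRPC Server: accepts the connection and passes the command to the leader's WAL

3》WAL: the leader replicates the command to the followers. Once half or more of the followers have received it and replied with an acknowledgment, the command is held by a majority of the cluster (the leader counts as one of the holders)

4》the leader commits the command and persists it to disk, then notifies the followers so that they commit and persist it as well

5》the result is returned to the client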
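Step 3 comes down to majority arithmetic. The Go sketch below is a simplified illustration, not etcd's raft code. It computes the quorum size for a cluster and the highest log index stored on a majority of nodes, which is the commit point. It assumes the per-node match indexes are already known. It also ignores Raft's extra rule that a leader only commits, by counting replicas, entries from its own term.

```go
package main

import (
	"fmt"
	"sort"
)

// quorum is the number of nodes that must hold an entry before it can be
// committed: a strict majority of the cluster.
func quorum(clusterSize int) int { return clusterSize/2 + 1 }

// commitIndex returns the highest log index stored on at least a quorum
// of nodes. matchIndex[i] is the last index known to be persisted on node
// i, with the leader's own log included as one of the entries.
func commitIndex(matchIndex []uint64) uint64 {
	sorted := append([]uint64(nil), matchIndex...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] > sorted[j] })
	// In descending order, the value at position quorum-1 is held by at
	// least quorum nodes.
	return sorted[quorum(len(sorted))-1]
}

func main() {
	for _, n := range []int{1, 3, 4, 5} {
		fmt.Printf("nodes=%d quorum=%d tolerated failures=%d\n", n, quorum(n), n-quorum(n))
	}
	// Leader at index 7; followers at 7, 5, 4 and 2.
	fmt.Println(commitIndex([]uint64{7, 7, 5, 4, 2})) // 5
}
```

The 4-node row explains why even-sized clusters are usually avoided. A fourth node raises the quorum to 3 but still tolerates only one failure, the same as 3 nodes.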

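(2.3) Node states

《1》follower: follower node 《2》candidate: candidate 《3》leader: leader node

Leader election: when the leader stops responding, a follower becomes a candidate. Once the candidate collects votes from a majority, it becomes the leader.

Data changes: every change is applied on the leader first, and the leader then replicates it to the followers.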

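(2.4) Commit and election in detail

【1】Leader election:

Two timeouts are involved:

(1) Election timeout: used during elections. It is randomized, roughly 150ms~300ms (the example range from the Raft paper; etcd's default is 1s, as the startup log in (4.1) shows).

- The follower whose election timeout expires first immediately becomes a candidate;

- it starts a new election term (term + 1; the term is later replicated to the followers);

- it re-randomizes its election timeout;

- the candidate votes for itself and then broadcasts vote requests to the other nodes;

- when another follower receives a vote request, it resets its election timeout and, if it still has its vote, votes for the requesting node;

- each node has only one vote per term;

- only a candidate votes for itself;

- when a node receives vote requests from others, it votes for whichever request arrives first, as long as it still has its vote.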
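The voting rules above fit into a single handler. This Go sketch is a simplified model under stated assumptions. The `voter` type is hypothetical. The model also omits a real Raft check: a voter refuses a candidate whose log is less up to date than its own. The sketch covers the rules from the list: one vote per term, granted to whichever candidate asks first, and a higher term resetting that vote.

```go
package main

import "fmt"

// voter holds the per-node state used to decide votes. Simplification:
// real Raft (and etcd) also rejects candidates whose log is less up to
// date than the voter's; that check is omitted here.
type voter struct {
	currentTerm uint64
	votedFor    string // "" means no vote cast yet in currentTerm
}

// handleRequestVote decides whether to grant a vote to candidate for term.
func (v *voter) handleRequestVote(candidate string, term uint64) bool {
	if term < v.currentTerm {
		return false // stale candidate from an old term
	}
	if term > v.currentTerm {
		// A newer term starts: adopt it and get a fresh, unused vote.
		v.currentTerm = term
		v.votedFor = ""
	}
	// One vote per term, first come first served; the same candidate
	// asking again gets the same answer.
	if v.votedFor == "" || v.votedFor == candidate {
		v.votedFor = candidate
		return true
	}
	return false
}

func main() {
	n := &voter{currentTerm: 1}
	fmt.Println(n.handleRequestVote("A", 2)) // true: first request in term 2
	fmt.Println(n.handleRequestVote("B", 2)) // false: vote for term 2 already spent
	fmt.Println(n.handleRequestVote("B", 3)) // true: term 3 brings a new vote
}
```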

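(2) Heartbeat timeout: used to detect whether the network between nodes is healthy.

Whenever a follower receives a replication (or heartbeat) message from the leader, it resets its timeouts.

Split vote example:

Suppose the leader of a 3-node cluster dies, and the 2 remaining followers happen to become candidates at the same time.

Each of them now holds one vote. If that counted as a win, wouldn't the cluster split-brain? It does not, because a candidate needs a majority of the whole cluster (2 of 3 votes), so neither becomes leader.

Instead, the votes are discarded and each node re-randomizes its election timeout before another round starts. Whoever becomes a candidate first in the new round will normally collect the votes and become leader.

If they collide again, the same thing repeats until one node becomes a candidate first. (Each such round also increments the term by 1.)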
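The retry loop in the split-vote case can be simulated. In this toy Go model, every parameter is an assumption. Each surviving follower draws a timeout in [150ms, 300ms). If the earliest timeout beats the next one by at least `gapMs` (a hypothetical stand-in for how long a vote request takes to arrive), that node wins. Otherwise the vote splits and a new round, with a new term, begins. The seed exists only to make runs repeatable.

```go
package main

import (
	"fmt"
	"math/rand"
)

// electionRounds is a toy model of the split-vote scenario: `nodes`
// surviving followers each draw an election timeout in [minMs, maxMs).
// If the earliest timeout beats the second earliest by at least gapMs (a
// hypothetical stand-in for vote-request latency), that node gathers the
// votes and wins. Otherwise the vote splits and a new round, with a new
// term, starts with freshly randomized timeouts.
// It assumes nodes >= 2 and 0 <= gapMs < maxMs-minMs so a round can succeed.
func electionRounds(seed int64, nodes, minMs, maxMs, gapMs int) (winner, rounds int) {
	rng := rand.New(rand.NewSource(seed))
	for {
		rounds++
		first, second := maxMs, maxMs // earliest and second-earliest timeouts
		winner = -1
		for i := 0; i < nodes; i++ {
			t := minMs + rng.Intn(maxMs-minMs)
			if t < first {
				first, second, winner = t, first, i
			} else if t < second {
				second = t
			}
		}
		if second-first >= gapMs {
			return winner, rounds
		}
		// Split vote: no candidate reached a majority; try again.
	}
}

func main() {
	winner, rounds := electionRounds(42, 2, 150, 300, 10)
	fmt.Printf("node %d became leader after %d round(s)\n", winner, rounds)
}
```

Because the timeouts are redrawn every round, a repeated tie grows increasingly unlikely. This is why the randomization matters more than the exact range.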

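(2.5) Leader-follower log replication

1》client: sends the request to the gRPC Server

2》gRPC Server: accepts the connection and passes the command to the leader's WAL

3》WAL: the leader replicates the command to the followers. Once half or more of the followers have received it and replied with an acknowledgment, the command is held by a majority of the cluster (the leader counts as one of the holders)

4》the leader commits the command and persists it to disk, then notifies the followers so that they commit and persist it as well

5》the result is returned to the client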

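【3】Common internals

(3.1) Key revision information in depth

(1) raft_term: the leader's term. It is incremented at every election and is a 64-bit integer that increases monotonically across the cluster.

(2) revision: the global revision of the whole keyspace. Any create, update, or delete in etcd increments it by 1, no matter which key is touched (a transaction containing several writes counts once). It is a 64-bit integer that increases monotonically across the cluster.

(3) create_revision: the global revision (the value of revision) at the moment the key was created.

(4) mod_revision: the global revision at which the key was last modified.

(5) lease: a global object with an expiry time. Keys are attached to it, and when the lease expires, the attached keys expire and are deleted with it.

It is somewhat similar to Redis's `EXPIRE key ttl`.

The difference is that etcd leases are optimized for handling many keys at once. A typical case:

《1》we need a large number of keys to expire at the same time;

《2》we create one global lease object with an expiry time and attach all those keys to it;

《3》instead of scanning every key for expiry, we now only check the lease. Once it expires, all the keys bound to it expire too.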
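The difference from per-key TTLs can be shown with a toy model. In this Go sketch, the `leaseStore` type is hypothetical and times are plain integer seconds. Only leases carry an expiry time, so a single check on one lease removes all 1000 keys attached to it. The comments mention the real `etcdctl lease grant` and `put --lease` commands only for orientation.

```go
package main

import "fmt"

// leaseStore is a hypothetical toy model of etcd leases: keys are bound
// to a lease ID, and only the lease carries an expiry time.
type leaseStore struct {
	nextID   int64
	expireAt map[int64]int64           // lease ID -> expiry time (seconds)
	attached map[int64]map[string]bool // lease ID -> keys bound to it
	keys     map[string]string         // key -> value
}

func newLeaseStore() *leaseStore {
	return &leaseStore{
		expireAt: make(map[int64]int64),
		attached: make(map[int64]map[string]bool),
		keys:     make(map[string]string),
	}
}

// grant creates a lease with a TTL (compare `etcdctl lease grant <ttl>`).
func (s *leaseStore) grant(ttl, now int64) int64 {
	s.nextID++
	s.expireAt[s.nextID] = now + ttl
	s.attached[s.nextID] = make(map[string]bool)
	return s.nextID
}

// putWithLease stores a key bound to a lease (compare `etcdctl put k v --lease=<id>`).
func (s *leaseStore) putWithLease(key, value string, lease int64) bool {
	if _, ok := s.expireAt[lease]; !ok {
		return false // unknown or already expired lease
	}
	s.keys[key] = value
	s.attached[lease][key] = true
	return true
}

// expire checks only the leases; each expired lease deletes all its keys.
func (s *leaseStore) expire(now int64) (removed int) {
	for id, exp := range s.expireAt {
		if now >= exp {
			for k := range s.attached[id] {
				delete(s.keys, k)
				removed++
			}
			delete(s.expireAt, id)
			delete(s.attached, id)
		}
	}
	return removed
}

func main() {
	s := newLeaseStore()
	id := s.grant(60, 0)
	for i := 0; i < 1000; i++ {
		s.putWithLease(fmt.Sprintf("/cache/item-%d", i), "v", id)
	}
	removed := s.expire(30)
	fmt.Println(removed, len(s.keys)) // 0 1000: the lease is still valid
	removed = s.expire(60)
	fmt.Println(removed, len(s.keys)) // 1000 0: one lease check removed every key
}
```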


(3.2) What is the Raft algorithm?

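【Implementing use cases in depth】

(1) Service discovery

One of etcd's most common uses is service discovery. Service discovery addresses one of the most common problems in distributed systems: how processes or services in the same distributed cluster can find each other and establish connections.

In essence, service discovery means knowing whether some process in the cluster is listening on a UDP or TCP port, and being able to look it up and connect to it by name.

Solving service discovery requires the following three pillars, and none of them can be missing.

- A strongly consistent, highly available service directory.

etcd, built on the Raft algorithm, is by nature exactly such a strongly consistent, highly available service directory.

- A mechanism for registering services and monitoring their health.

Users can register a service in etcd and attach a TTL to the registered key. The service then renews the key periodically as a heartbeat, which lets etcd track whether it is healthy.

- A mechanism for looking up and connecting to services.

Services registered under a given topic (key prefix) in etcd can be found under that same topic. To make sure connections work, an etcd instance in proxy mode can be deployed on every service machine, so that all services that access the etcd cluster can connect to one another.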
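The registration-plus-heartbeat pillar can be sketched as follows. This Go model is hypothetical: the `registry` type and the `/services/web/...` key layout are invented for illustration, and time is plain integer seconds. Each entry stays alive only while the service renews it before its TTL runs out. A leased key with periodic keep-alives gives you the same behavior in etcd.

```go
package main

import "fmt"

// registry is a hypothetical in-memory stand-in for an etcd service
// directory: every registered address carries an expiry time (seconds)
// that the service must keep pushing forward with heartbeats.
type registry struct {
	ttl      int64            // TTL in seconds
	expireAt map[string]int64 // service key -> absolute expiry time
}

func newRegistry(ttl int64) *registry {
	return &registry{ttl: ttl, expireAt: make(map[string]int64)}
}

// register adds (or re-adds) a service with a fresh TTL.
func (r *registry) register(key string, now int64) {
	r.expireAt[key] = now + r.ttl
}

// heartbeat renews the TTL. It fails, and drops the entry, if the service
// had already expired; such a service must register again.
func (r *registry) heartbeat(key string, now int64) bool {
	exp, ok := r.expireAt[key]
	if !ok || now >= exp {
		delete(r.expireAt, key)
		return false
	}
	r.expireAt[key] = now + r.ttl
	return true
}

// alive reports whether the service is still considered healthy at now.
func (r *registry) alive(key string, now int64) bool {
	exp, ok := r.expireAt[key]
	return ok && now < exp
}

func main() {
	const web = "/services/web/10.0.0.1:8080" // hypothetical key layout
	r := newRegistry(10)
	r.register(web, 0)
	r.heartbeat(web, 8)           // renewed: now expires at t=18
	fmt.Println(r.alive(web, 15)) // true
	fmt.Println(r.alive(web, 20)) // false: no heartbeat since t=8
}
```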

【4】etcd single instance

(4.1) Download, install, start, and connect

mkdir /soft

cd /soft

wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz

tar -zxf etcd-v3.5.1-linux-amd64.tar.gz

mv etcd-v3.5.1-linux-amd64 etcd

cd etcd

mkdir bin

cp etcd* ./bin/

echo "export PATH=\$PATH:`pwd`/bin" >>/etc/profile

source /etc/profile

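Run ls to list the contents:

besides some documentation, there are the binaries etcd and etcdctl (the 3.5 release also ships etcdutl).

etcd is the server, and etcdctl is the client.

Let's start it and take a look: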

nohup ./etcd &

{"level":"info","ts":"2022-01-04T17:59:56.291+0800","caller":"etcdmain/etcd.go:72","msg":"Running: ","args":["./etcd"]}

{"level":"warn","ts":"2022-01-04T17:59:56.291+0800","caller":"etcdmain/etcd.go:104","msg":"'data-dir' was empty; using default","data-dir":"default.etcd"}

{"level":"info","ts":"2022-01-04T17:59:56.291+0800","caller":"embed/etcd.go:131","msg":"configuring peer listeners","listen-peer-urls":["http://localhost:2380"]}

{"level":"info","ts":"2022-01-04T17:59:56.292+0800","caller":"embed/etcd.go:139","msg":"configuring client listeners","listen-client-urls":["http://localhost:2379"]}

{"level":"info","ts":"2022-01-04T17:59:56.292+0800","caller":"embed/etcd.go:307","msg":"starting an etcd server","etcd-version":"3.5.1","git-sha":"e8732fb5f","go-version":"go1.16.3","go-os":"linux","go-arch":"amd64","max-cpu-set":1,"max-cpu-available":1,"member-initialized":false,"name":"default","data-dir":"default.etcd","wal-dir":"","wal-dir-dedicated":"","member-dir":"default.etcd/member","force-new-cluster":false,"heartbeat-interval":"100ms","election-timeout":"1s","initial-election-tick-advance":true,"snapshot-count":100000,"snapshot-catchup-entries":5000,"initial-advertise-peer-urls":["http://localhost:2380"],"listen-peer-urls":["http://localhost:2380"],"advertise-client-urls":["http://localhost:2379"],"listen-client-urls":["http://localhost:2379"],"listen-metrics-urls":[],"cors":["*"],"host-whitelist":["*"],"initial-cluster":"default=http://localhost:2380","initial-cluster-state":"new","initial-cluster-token":"etcd-cluster","quota-size-bytes":2147483648,"pre-vote":true,"initial-corrupt-check":false,"corrupt-check-time-interval":"0s","auto-compaction-mode":"periodic","auto-compaction-retention":"0s","auto-compaction-interval":"0s","discovery-url":"","discovery-proxy":"","downgrade-check-interval":"5s"}

{"level":"info","ts":"2022-01-04T17:59:56.295+0800","caller":"etcdserver/backend.go:81","msg":"opened backend db","path":"default.etcd/member/snap/db","took":"1.297072ms"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","caller":"etcdserver/raft.go:448","msg":"starting local member","local-member-id":"8e9e05c52164694d","cluster-id":"cdf818194e3a8c32"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d switched to configuration voters=()"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d became follower at term 0"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"newRaft 8e9e05c52164694d [peers: [], term: 0, commit: 0, applied: 0, lastindex: 0, lastterm: 0]"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d became follower at term 1"}

{"level":"info","ts":"2022-01-04T17:59:56.328+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d switched to configuration voters=(10276657743932975437)"}

{"level":"warn","ts":"2022-01-04T17:59:56.329+0800","caller":"auth/store.go:1220","msg":"simple token is not cryptographically signed"}

{"level":"info","ts":"2022-01-04T17:59:56.331+0800","caller":"mvcc/kvstore.go:415","msg":"kvstore restored","current-rev":1}

{"level":"info","ts":"2022-01-04T17:59:56.333+0800","caller":"etcdserver/quota.go:94","msg":"enabled backend quota with default value","quota-name":"v3-applier","quota-size-bytes":2147483648,"quota-size":"2.1 GB"}

{"level":"info","ts":"2022-01-04T17:59:56.333+0800","caller":"etcdserver/server.go:843","msg":"starting etcd server","local-member-id":"8e9e05c52164694d","local-server-version":"3.5.1","cluster-version":"to_be_decided"}

{"level":"info","ts":"2022-01-04T17:59:56.337+0800","caller":"embed/etcd.go:276","msg":"now serving peer/client/metrics","local-member-id":"8e9e05c52164694d","initial-advertise-peer-urls":["http://localhost:2380"],"listen-peer-urls":["http://localhost:2380"],"advertise-client-urls":["http://localhost:2379"],"listen-client-urls":["http://localhost:2379"],"listen-metrics-urls":[]}

{"level":"info","ts":"2022-01-04T17:59:56.338+0800","caller":"etcdserver/server.go:728","msg":"started as single-node; fast-forwarding election ticks","local-member-id":"8e9e05c52164694d","forward-ticks":9,"forward-duration":"900ms","election-ticks":10,"election-timeout":"1s"}

{"level":"info","ts":"2022-01-04T17:59:56.338+0800","caller":"embed/etcd.go:580","msg":"serving peer traffic","address":"127.0.0.1:2380"}

{"level":"info","ts":"2022-01-04T17:59:56.338+0800","caller":"embed/etcd.go:552","msg":"cmux::serve","address":"127.0.0.1:2380"}

{"level":"info","ts":"2022-01-04T17:59:56.338+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d switched to configuration voters=(10276657743932975437)"}

{"level":"info","ts":"2022-01-04T17:59:56.338+0800","caller":"membership/cluster.go:421","msg":"added member","cluster-id":"cdf818194e3a8c32","local-member-id":"8e9e05c52164694d","added-peer-id":"8e9e05c52164694d","added-peer-peer-urls":["http://localhost:2380"]}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d is starting a new election at term 1"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d became pre-candidate at term 1"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d received MsgPreVoteResp from 8e9e05c52164694d at term 1"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d became candidate at term 2"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d received MsgVoteResp from 8e9e05c52164694d at term 2"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"8e9e05c52164694d became leader at term 2"}

{"level":"info","ts":"2022-01-04T17:59:56.930+0800","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"raft.node: 8e9e05c52164694d elected leader 8e9e05c52164694d at term 2"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"etcdserver/server.go:2476","msg":"setting up initial cluster version using v2 API","cluster-version":"3.5"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"membership/cluster.go:584","msg":"set initial cluster version","cluster-id":"cdf818194e3a8c32","local-member-id":"8e9e05c52164694d","cluster-version":"3.5"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"api/capability.go:75","msg":"enabled capabilities for version","cluster-version":"3.5"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"etcdserver/server.go:2500","msg":"cluster version is updated","cluster-version":"3.5"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"etcdserver/server.go:2027","msg":"published local member to cluster through raft","local-member-id":"8e9e05c52164694d","local-member-attributes":"{Name:default ClientURLs:[http://localhost:2379]}","request-path":"/0/members/8e9e05c52164694d/attributes","cluster-id":"cdf818194e3a8c32","publish-timeout":"7s"}

{"level":"info","ts":"2022-01-04T17:59:56.931+0800","caller":"embed/serve.go:98","msg":"ready to serve client requests"}

{"level":"info","ts":"2022-01-04T17:59:56.932+0800","caller":"embed/serve.go:140","msg":"serving client traffic insecurely; this is strongly discouraged!","address":"127.0.0.1:2379"}

{"level":"info","ts":"2022-01-04T17:59:56.932+0800","caller":"etcdmain/main.go:47","msg":"notifying init daemon"}

{"level":"info","ts":"2022-01-04T17:59:56.932+0800","caller":"etcdmain/main.go:53","msg":"successfully notified init daemon"}

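The log tells us the following:

- name is the node name; the default is default.

- data-dir is the directory where logs and snapshots are stored; by default it is default.etcd/ under the current working directory.

- The node communicates with other cluster members on http://localhost:2380.

- It serves the HTTP API for clients on http://localhost:2379.

- heartbeat is 100ms: how often the leader sends a heartbeat to the followers (default 100ms).

- election is 1000ms: the election timeout. If a follower receives no heartbeat within this interval, it triggers a new election (default 1000ms).

- snapshot-count is 100000: after this many transactions have been committed, a snapshot is taken and saved to disk.

- An ID is generated for the cluster and for each node.

- At startup, raft runs and elects a leader.

The method above only starts etcd in the simplest way. For long-term operation, it is better to run etcd as a service, for example under systemd.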

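Connecting with etcdctl

For local connections, etcdctl uses http://127.0.0.1:2379 as its default endpoint.

To connect to a remote server, specify the endpoint explicitly,

e.g.: etcdctl --endpoints=http://10.199.205.229:2379 endpoint health

The address the etcd server listens on is controlled by the following flag, which defaults to http://localhost:2379. To accept remote connections, listen on all interfaces (when this flag is set explicitly, --advertise-client-urls must be set as well):

--listen-client-urls http://0.0.0.0:2379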

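(4.2) The two etcd APIs

etcdctl supports two API versions, ETCDCTL_API=2 and ETCDCTL_API=3.

The two versions take different commands and flags.

Select the version through the ETCDCTL_API environment variable (since etcd 3.4, v3 is the default).

API v2: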

[root@terry ~]# etcdctl --help

NAME:

etcdctl - A simple command line client for etcd.

USAGE:

etcdctl [global options] command [command options] [arguments...]

VERSION:

3.2.7

COMMANDS:

backup backup an etcd directory

cluster-health check the health of the etcd cluster

mk make a new key with a given value

mkdir make a new directory

rm remove a key or a directory

rmdir removes the key if it is an empty directory or a key-value pair

get retrieve the value of a key

ls retrieve a directory

set set the value of a key

setdir create a new directory or update an existing directory TTL

update update an existing key with a given value

updatedir update an existing directory

watch watch a key for changes

exec-watch watch a key for changes and exec an executable

member member add, remove and list subcommands

user user add, grant and revoke subcommands

role role add, grant and revoke subcommands

auth overall auth controls

help, h Shows a list of commands or help for one command

API v3 (the current version, and the one used throughout this article): see (4.3)

(4.3) Basic etcd operations

Run ./etcdctl --help to see the available commands:

NAME:

etcdctl - A simple command line client for etcd3.

USAGE:

etcdctl [flags]

VERSION:

3.5.1

API VERSION:

3.5

COMMANDS:

alarm disarm Disarms all alarms

alarm list Lists all alarms

auth disable Disables authentication

auth enable Enables authentication

auth status Returns authentication status

check datascale Check the memory usage of holding data for different workloads on a given server endpoint.

check perf Check the performance of the etcd cluster

compaction Compacts the event history in etcd

defrag Defragments the storage of the etcd members with given endpoints

del Removes the specified key or range of keys [key, range_end)

elect Observes and participates in leader election

endpoint hashkv Prints the KV history hash for each endpoint in --endpoints

endpoint health Checks the healthiness of endpoints specified in `--endpoints` flag

endpoint status Prints out the status of endpoints specified in `--endpoints` flag

get Gets the key or a range of keys

help Help about any command

lease grant Creates leases

lease keep-alive Keeps leases alive (renew)

lease list List all active leases

lease revoke Revokes leases

lease timetolive Get lease information

lock Acquires a named lock

make-mirror Makes a mirror at the destination etcd cluster

member add Adds a member into the cluster

member list Lists all members in the cluster

member promote Promotes a non-voting member in the cluster

member remove Removes a member from the cluster

member update Updates a member in the cluster

move-leader Transfers leadership to another etcd cluster member.

put Puts the given key into the store

role add Adds a new role

role delete Deletes a role

role get Gets detailed information of a role

role grant-permission Grants a key to a role

role list Lists all roles

role revoke-permission Revokes a key from a role

snapshot restore Restores an etcd member snapshot to an etcd directory

snapshot save Stores an etcd node backend snapshot to a given file

snapshot status [deprecated] Gets backend snapshot status of a given file

txn Txn processes all the requests in one transaction

user add Adds a new user

user delete Deletes a user

user get Gets detailed information of a user

user grant-role Grants a role to a user

user list Lists all users

user passwd Changes password of user

user revoke-role Revokes a role from a user

version Prints the version of etcdctl

watch Watches events stream on keys or prefixes

OPTIONS:

--cacert="" verify certificates of TLS-enabled secure servers using this CA bundle

--cert="" identify secure client using this TLS certificate file

--command-timeout=5s timeout for short running command (excluding dial timeout)

--debug[=false] enable client-side debug logging

--dial-timeout=2s dial timeout for client connections

-d, --discovery-srv="" domain name to query for SRV records describing cluster endpoints

--discovery-srv-name="" service name to query when using DNS discovery

--endpoints=[127.0.0.1:2379] gRPC endpoints

-h, --help[=false] help for etcdctl

--hex[=false] print byte strings as hex encoded strings

--insecure-discovery[=true] accept insecure SRV records describing cluster endpoints

--insecure-skip-tls-verify[=false] skip server certificate verification (CAUTION: this option should be enabled only for testing purposes)

--insecure-transport[=true] disable transport security for client connections

--keepalive-time=2s keepalive time for client connections

--keepalive-timeout=6s keepalive timeout for client connections

--key="" identify secure client using this TLS key file

--password="" password for authentication (if this option is used, --user option shouldn't include password)

--user="" username[:password] for authentication (prompt if password is not supplied)

-w, --write-out="simple" set the output format (fields, json, protobuf, simple, table)

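Here are the most basic commands:

- put: insert or update

- get: query

- del: delete

- txn: transaction

- watch: keep watching for changes

All of these were already introduced in (1.3).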

【5】etcd cluster

(5.1) Installation

Official guide: https://etcd.io/docs/v3.5/tutorials/how-to-setup-cluster/

Run the following on all three machines (remember to replace the host IPs with your own):

TOKEN=token-01

CLUSTER_STATE=new

NAME_1=machine-3

NAME_2=machine-4

NAME_3=machine-5

HOST_1=192.168.175.131

HOST_2=192.168.175.132

HOST_3=192.168.175.148

CLUSTER=${NAME_1}=http://${HOST_1}:2380,${NAME_2}=http://${HOST_2}:2380,${NAME_3}=http://${HOST_3}:2380

Then run the matching commands on each machine. Set THIS_NAME and THIS_IP on their own lines first. If they are prefixed to the etcd command line instead, the shell expands ${THIS_NAME} and ${THIS_IP} before the assignments take effect, so both would come out empty.

On machine 1:

THIS_NAME=${NAME_1}

THIS_IP=${HOST_1}

nohup etcd --data-dir=data.etcd --name ${THIS_NAME} --initial-advertise-peer-urls http://${THIS_IP}:2380 --listen-peer-urls http://${THIS_IP}:2380 --advertise-client-urls http://${THIS_IP}:2379 --listen-client-urls http://0.0.0.0:2379 --initial-cluster ${CLUSTER} --initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &

On machine 2:

THIS_NAME=${NAME_2}

THIS_IP=${HOST_2}

nohup etcd --data-dir=data.etcd --name ${THIS_NAME} --initial-advertise-peer-urls http://${THIS_IP}:2380 --listen-peer-urls http://${THIS_IP}:2380 --advertise-client-urls http://${THIS_IP}:2379 --listen-client-urls http://0.0.0.0:2379 --initial-cluster ${CLUSTER} --initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &

On machine 3:

THIS_NAME=${NAME_3}

THIS_IP=${HOST_3}

nohup etcd --data-dir=data.etcd --name ${THIS_NAME} --initial-advertise-peer-urls http://${THIS_IP}:2380 --listen-peer-urls http://${THIS_IP}:2380 --advertise-client-urls http://${THIS_IP}:2379 --listen-client-urls http://0.0.0.0:2379 --initial-cluster ${CLUSTER} --initial-cluster-state ${CLUSTER_STATE} --initial-cluster-token ${TOKEN} &

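Parameter reference:

- --name: node name, default default; it must be unique within the cluster

- --data-dir: path where the node stores its runtime data, default ${name}.etcd

- --snapshot-count: the number of committed transactions that triggers saving a snapshot to disk

- --listen-peer-urls: address for communicating with peers, e.g. http://ip:2380; separate multiple values with commas. Every node must be able to reach it, so do not use localhost

- --listen-client-urls: address that serves clients, e.g. http://ip:2379,http://127.0.0.1:2379; clients connect here to interact with etcd

- --advertise-client-urls: this node's client URL as advertised to the other members of the cluster

- --initial-advertise-peer-urls: this node's peer URL as advertised to the other members of the cluster

- --initial-cluster: information about all nodes in the cluster, in the form node1=http://ip1:2380,node2=http://ip2:2380,…. Note: node1 is the name set by that node's --name, and ip1:2380 is the value of its --initial-advertise-peer-urls

- --initial-cluster-state: new when creating a new cluster; existing when joining an existing cluster

- --initial-cluster-token: the token used to create the cluster, unique per cluster. With a unique token, recreating a cluster generates new cluster and member IDs even if the configuration is identical to before. Without one, multiple clusters could conflict and cause unpredictable errors

- --heartbeat-interval: how often the leader sends a heartbeat to the followers, default 100ms

- --election-timeout: the election timeout. If a follower receives no heartbeat within this interval, it triggers a new election; default 1000ms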

Result after installation:

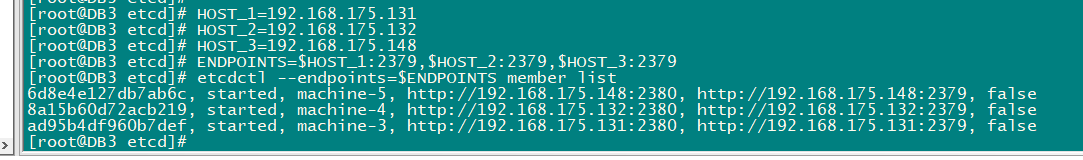

(5.2) Connecting to the cluster with etcdctl

export ETCDCTL_API=3 # select the v3 API through an environment variable

HOST_1=192.168.175.131

HOST_2=192.168.175.132

HOST_3=192.168.175.148

ENDPOINTS=$HOST_1:2379,$HOST_2:2379,$HOST_3:2379

etcdctl --endpoints=$ENDPOINTS member list

(5.3) Basic operations

put (with the endpoints given explicitly):

etcdctl --endpoints=$ENDPOINTS put foo "Hello World!"

get:

etcdctl --endpoints=$ENDPOINTS get foo

etcdctl --endpoints=$ENDPOINTS --write-out="json" get foo

Get by prefix:

etcdctl --endpoints=$ENDPOINTS put web1 value1

etcdctl --endpoints=$ENDPOINTS put web2 value2

etcdctl --endpoints=$ENDPOINTS put web3 value3

Get every key that starts with web:

etcdctl --endpoints=$ENDPOINTS get web --prefix

Get every key whose first byte is /:

etcdctl get --prefix /

Delete a key, or delete by prefix:

etcdctl --endpoints=$ENDPOINTS put key myvalue

etcdctl --endpoints=$ENDPOINTS del key

etcdctl --endpoints=$ENDPOINTS put k1 value1

etcdctl --endpoints=$ENDPOINTS put k2 value2

Delete every key that starts with k:

etcdctl --endpoints=$ENDPOINTS del k --prefix

Delete every key whose first byte is /:

etcdctl del --prefix /
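Under the hood, `--prefix` becomes a key range [key, range_end), the same half-open range that appears in the `del` help text. The Go function below is written from scratch to mirror how etcd's Go client derives `range_end` for a prefix (`clientv3.GetPrefixRangeEnd`). It increments the last byte that is not 0xff and drops everything after it. `inRange` is a hypothetical helper added only to show which keys fall inside the range.

```go
package main

import "fmt"

// prefixRangeEnd returns the exclusive end of the range covering every key
// that starts with prefix, so "get web --prefix" reads [web, range_end).
func prefixRangeEnd(prefix string) string {
	end := []byte(prefix) // copy, so the caller's string is untouched
	for i := len(end) - 1; i >= 0; i-- {
		if end[i] < 0xff {
			end[i]++
			return string(end[:i+1])
		}
	}
	// Every byte is 0xff (or the prefix is empty): "\x00" stands for
	// "to the end of the keyspace" in etcd's range API.
	return "\x00"
}

// inRange is a hypothetical helper: is key inside [start, end)?
func inRange(key, start, end string) bool {
	return key >= start && (end == "\x00" || key < end)
}

func main() {
	end := prefixRangeEnd("web")
	fmt.Printf("%q\n", end) // "wec"
	for _, k := range []string{"web", "web1", "web3", "weba", "wec", "k1"} {
		fmt.Println(k, inRange(k, "web", end))
	}
}
```

The half-open range explains why `del k --prefix` removes k1 and k2 but leaves a key such as l1 untouched: the range for the prefix k ends just before l.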

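【Comparison of etcd with similar products】

(1) etcd vs redis

From day one, etcd was positioned as a distributed storage system, and it became widely known through service registration and discovery in k8s. It emphasizes communication between nodes and guaranteed consistency, not single-node read/write performance.

Redis, by contrast, first appeared as a caching system, so it naturally focuses on read/write performance and on supporting more data structures. It does not need to care about consistency or emphasize transactions, and can therefore reach very high read/write throughput.

To sum up: redis is commonly used as a cache, while etcd is commonly used to store critical data in distributed systems, for example for service registration and discovery.

(2) etcd vs consul

consul is positioned as an end-to-end service framework. It provides built-in health checks, a DNS service, and more, plus an HTTP API and a Web UI. For simple service discovery it is essentially usable out of the box.

It has drawbacks too: encapsulation cuts both ways, and it makes consul considerably less flexible. In addition, consul is still relatively young and has not yet been proven in large projects, so its reliability remains to be seen.

(3) etcd vs zookeeper

etcd stands on the shoulders of giants.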

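【etcd performance】

From the official documentation (https://etcd.io/docs/v3.4.0/op-guide/performance/), roughly summarized:

- Reads: between 10,000 and 100,000 per second

- Writes: around 10,000 per second

Recommendations:

- Deploy etcd on SSDs (strongly recommended).

- Batch multiple writes.

- Avoid range operations unless necessary.

An etcd cluster favors consistency and stability over raw performance, and most workloads never reach its performance limits. If you do hit a bottleneck, revisit the architecture, for example by splitting the workload or streamlining the request flow.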

【References (this article is adapted from)】

Official site: https://etcd.io/docs/v3.5/tutorials/how-to-setup-cluster/

https://www.jianshu.com/p/f68028682192

https://zhuanlan.zhihu.com/p/339902098

etcd getting started: https://blog.csdn.net/jkwanga/article/details/106444556

etcd cluster maintenance: https://blog.csdn.net/hai330/article/details/118357407