1. Deriving new features from the values of one DataFrame column

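```python
import pandas as pd

# Turn the values of the LBL1 feature into new one-hot features
piao = df_train_log.LBL1.value_counts().index
# First build a temporary df with one row per USRID
df_tmp = pd.DataFrame({'USRID': df_train_log.drop_duplicates('USRID').USRID.values})
# Initialise every new feature column to 0
for i in piao:
    df_tmp[f'PIAO_{i}'] = 0
# Each USRID has several records in the raw data, so group by USRID and
# traverse the groups: if the user has a given LBL1 value, set its column to 1
for uid, t in df_train_log.groupby('USRID'):
    tmp_list = t.LBL1.value_counts().index
    for j in tmp_list:
        # Assign through .loc[row_mask, column] to avoid chained assignment
        df_tmp.loc[df_tmp.USRID == uid, f'PIAO_{j}'] = 1
```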
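The same 0/1 flags can also be computed without an explicit Python loop. Below is a minimal sketch that assumes a hypothetical toy `df_train_log` with only `USRID` and `LBL1` columns. `pd.crosstab` counts how often each `LBL1` value occurs per user, and clipping the counts at 1 turns them into indicators. One difference from the loop above: the rows come out sorted by USRID instead of in order of first appearance.

```python
import pandas as pd

# Hypothetical toy log: several rows per USRID, LBL1 is a categorical label
df_train_log = pd.DataFrame({
    "USRID": [1, 1, 2, 3, 3, 3],
    "LBL1":  ["a", "b", "a", "c", "c", "b"],
})

# Count each LBL1 value per user, then clip counts to 1 so they become 0/1 flags
piao = pd.crosstab(df_train_log["USRID"], df_train_log["LBL1"]).clip(upper=1)
piao.columns = [f"PIAO_{c}" for c in piao.columns]
# Move USRID from the index back into a regular column
df_tmp = piao.reset_index()
print(df_tmp)
```

Unlike the loop, this does one pass over the data, so it scales better when there are many users.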

2. Grouping by USRID and selecting, for each user, the most frequent value of a variable

```python
import pandas as pd

lt = []
list_max_lbl1 = []
list_max_lbl2 = []
list_max_lbl3 = []
for _, t in df_train_log.groupby('USRID'):
    # Use value_counts to find the most frequent item; idxmax() returns its label.
    # (np.argmax on a Series returns a position, not a label, in pandas >= 1.0.)
    argmx = t['EVT_LBL'].value_counts().idxmax()
    list_max_lbl1.append(t['LBL1'].value_counts().idxmax())
    list_max_lbl2.append(t['LBL2'].value_counts().idxmax())
    list_max_lbl3.append(t['LBL3'].value_counts().idxmax())

    # Keep only one row: the user's most frequent EVT_LBL
    c = t[t['EVT_LBL'] == argmx].drop_duplicates('EVT_LBL')

    # Collect it in the list
    lt.append(c)

# Build a new df with one row per USRID
df_train_log_new = pd.concat(lt)

# Three more features: the most frequent value of LBL1, LBL2 and LBL3 for each user
df_train_log_new['LBL1_MAX'] = list_max_lbl1
df_train_log_new['LBL2_MAX'] = list_max_lbl2
df_train_log_new['LBL3_MAX'] = list_max_lbl3
```
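You can also take the per-user mode with a single `groupby().agg()` call. The sketch below uses hypothetical toy data, and `most_frequent` is an illustrative helper, not a pandas function. It yields one row per user holding the most frequent value of each column. Unlike the loop above, it does not keep the rest of the original row. When two values tie, the winner depends on how `value_counts` orders them, so the toy data avoids ties.

```python
import pandas as pd

# Hypothetical toy log with one event label and one category label per row
df_train_log = pd.DataFrame({
    "USRID":   [1, 1, 1, 2, 2],
    "EVT_LBL": ["x", "y", "x", "z", "z"],
    "LBL1":    ["a", "a", "b", "c", "c"],
})

def most_frequent(s: pd.Series):
    # value_counts() counts each value; idxmax() returns the label with the highest count
    return s.value_counts().idxmax()

# Apply the helper to each column within each USRID group
modes = df_train_log.groupby("USRID")[["EVT_LBL", "LBL1"]].agg(most_frequent)
modes = modes.add_suffix("_MAX").reset_index()
print(modes)
```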

3. Deriving one-hot features for whether a user was active on a given day of the week

```python
import pandas as pd

# Temporary df: flags for Wednesday, Saturday and Sunday, all defaulting to 0
# (assuming occ_dayofweek runs from 1 = Monday to 7 = Sunday)
df_day = pd.DataFrame({'USRID': df_train_log.drop_duplicates('USRID').USRID.values})
df_day['weekday_3'] = 0
df_day['weekday_6'] = 0
df_day['weekday_7'] = 0
# Group by user: set the flag to 1 if the user was active on that day, otherwise leave 0
for uid, t in df_train_log.groupby('USRID'):
    for j in t.occ_dayofweek.value_counts().index:
        if j in (3, 6, 7):
            # Select this user's row in df_day and the matching flag column
            df_day.loc[df_day.USRID == uid, f'weekday_{int(j)}'] = 1
```

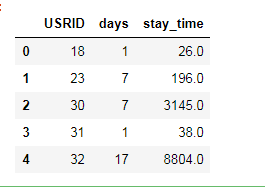

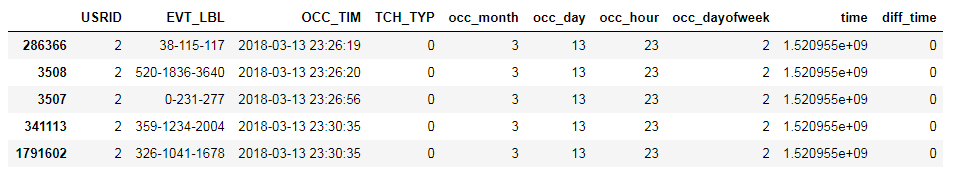
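Because `occ_dayofweek` takes only a few distinct values, `pd.crosstab` can produce these flags as well. The sketch below uses hypothetical toy data and again assumes the 1 = Monday ... 7 = Sunday encoding. `reindex` keeps only days 3, 6 and 7. It also adds an all-zero column for any of those days that never occurs, which happens to day 7 in this data.

```python
import pandas as pd

# Hypothetical toy log; occ_dayofweek is assumed to use 1 = Monday ... 7 = Sunday
df_train_log = pd.DataFrame({
    "USRID":         [1, 1, 2, 3],
    "occ_dayofweek": [3, 5, 6, 5],
})

# Count activity per user and weekday, then clip to 0/1 flags
days = pd.crosstab(df_train_log["USRID"], df_train_log["occ_dayofweek"]).clip(upper=1)
# Keep only days 3, 6 and 7; a day nobody was active on becomes an all-zero column
df_day = days.reindex(columns=[3, 6, 7], fill_value=0)
df_day.columns = [f"weekday_{d}" for d in df_day.columns]
df_day = df_day.reset_index()
print(df_day)
```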

4. Total number of seconds each user stayed in the app, and the number of days on which they opened it

```python
import datetime
import time

import pandas as pd

# First convert each date string to a Unix timestamp (seconds) and store it as a new feature
tmp_list = []
for i in df_train_log.OCC_TIM:
    d = datetime.datetime.strptime(str(i), "%Y-%m-%d %H:%M:%S")
    tmp_list.append(time.mktime(d.timetuple()))
df_train_log['time'] = tmp_list
# Sort each user's events by time, then subtract the previous row from each row
# within the same user to get the time spent in the app between two events
df_train_log = df_train_log.sort_values(['USRID', 'time'])
df_train_log['diff_time'] = df_train_log.groupby('USRID')['time'].diff()
# New DataFrame: the number of days on which each user opened the app
df_time = pd.DataFrame({'USRID': df_train_log.drop_duplicates('USRID').USRID.values})
df_time['days'] = 0
for uid, t in df_train_log.groupby('USRID'):
    df_time.loc[df_time.USRID == uid, 'days'] = t.occ_day.nunique()
# Drop abnormal gaps: 8000 s (about 2.2 hours) or more, e.g. between events on two
# different days, cannot be one stay; each user's first event (NaN gap) is dropped too
df_train_log = df_train_log[(df_train_log.diff_time > 0) & (df_train_log.diff_time < 8000)]
# Total stay time per user
group_stayTime = df_train_log['diff_time'].groupby(df_train_log['USRID']).sum()
# New df holding the stay time
df_tmp = pd.DataFrame({'USRID': group_stayTime.index.values, 'stay_time': group_stayTime.values})
# Merge the two into one df
df = pd.merge(df_time, df_tmp, on=['USRID'], how='left')
# After the merge, users with no valid stay time get 0
df['stay_time'] = df['stay_time'].fillna(0)
```
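Here is a vectorized sketch of the same two features. It runs on hypothetical toy data with only `USRID` and `OCC_TIM`. It derives `occ_day` from the timestamp as a calendar date, which is an assumption, because the original log already has an `occ_day` column whose exact definition is not given. `pd.to_datetime` replaces the per-row `strptime`/`mktime` loop. `groupby().diff()` subtracts only timestamps belonging to the same user. The 8000-second threshold is carried over from the code above.

```python
import pandas as pd

# Hypothetical toy log: one row per event
df_train_log = pd.DataFrame({
    "USRID": [1, 1, 1, 2, 2, 3],
    "OCC_TIM": [
        "2018-03-01 10:00:00", "2018-03-01 10:00:30", "2018-03-02 09:00:00",
        "2018-03-01 12:00:00", "2018-03-01 12:01:40",
        "2018-03-05 08:00:00",
    ],
})

ts = pd.to_datetime(df_train_log["OCC_TIM"])
# Assumption: occ_day is the calendar date of the event
df = df_train_log.assign(ts=ts, occ_day=ts.dt.date).sort_values(["USRID", "ts"])
# Gap to the same user's previous event, in seconds (NaN for each user's first event)
df["diff_time"] = df.groupby("USRID")["ts"].diff().dt.total_seconds()

# Same filter as above: drop NaN gaps and gaps of 8000 s or more
valid = df[(df["diff_time"] > 0) & (df["diff_time"] < 8000)]

days = df.groupby("USRID")["occ_day"].nunique().rename("days")
stay = valid.groupby("USRID")["diff_time"].sum().rename("stay_time")
# Left join keeps every user; users without a valid gap get a stay time of 0
result = days.to_frame().join(stay, how="left").fillna({"stay_time": 0}).reset_index()
print(result)
```

Here user 1's gap of almost a full day is filtered out, and user 3, who has a single event, ends up with a stay time of 0.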