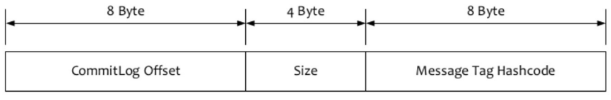

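File recovery, expired-file deletion, and flushing for the consumequeue were covered earlier, so they are not repeated in detail here. Each storage unit in a consumequeue is a fixed 20-byte entry: the first 8 bytes hold the message's physical offset in the commitlog, the next 4 bytes hold the message size, and the last 8 bytes hold the hash of the tag. A message's position in the consumequeue is therefore a logical offset, and the consumequeue acts as an index into the commitlog.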
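The 20-byte layout described above can be sketched with a plain `ByteBuffer`. This is a minimal illustration, not RocketMQ code: the class `CqEntrySketch` and its `encode`/`decode` helpers are hypothetical names, and only the field order and widths come from the text.

```java
import java.nio.ByteBuffer;

// Hypothetical helper illustrating the 20-byte consumequeue entry layout.
final class CqEntrySketch {
    static final int CQ_STORE_UNIT_SIZE = 20; // 8 + 4 + 8 bytes

    // Packs one entry: commitlog offset (8 bytes), message size (4 bytes), tag hash (8 bytes).
    static byte[] encode(long commitLogOffset, int size, long tagsCode) {
        ByteBuffer buf = ByteBuffer.allocate(CQ_STORE_UNIT_SIZE);
        buf.putLong(commitLogOffset);
        buf.putInt(size);
        buf.putLong(tagsCode);
        return buf.array();
    }

    // Unpacks an entry into {commitLogOffset, size, tagsCode}.
    static long[] decode(byte[] entry) {
        ByteBuffer buf = ByteBuffer.wrap(entry);
        return new long[] { buf.getLong(), buf.getInt(), buf.getLong() };
    }

    public static void main(String[] args) {
        byte[] entry = encode(1024L, 256, "TagA".hashCode());
        long[] fields = decode(entry);
        System.out.println(entry.length + " bytes: offset=" + fields[0]
            + ", size=" + fields[1] + ", tagsCode=" + fields[2]);
    }
}
```

Because every entry has the same width, the n-th entry of a queue always starts at byte `n * 20`, which is what makes the logical offset usable as an index.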

Storage structure

The load process

    public boolean load() {
        File dir = new File(this.storePath);
        File[] files = dir.listFiles();
        if (files != null) {
            // ascending order
            Arrays.sort(files);
            for (File file : files) {
                if (file.length() != this.mappedFileSize) {
                    log.warn(file + " " + file.length()
                        + " length not matched message store config value, ignore it");
                    return true;
                }

                try {
                    // create an mmap for every physical file
                    MappedFile mappedFile = new MappedFile(file.getPath(), mappedFileSize);
                    // initialize the positions
                    mappedFile.setWrotePosition(this.mappedFileSize);
                    mappedFile.setFlushedPosition(this.mappedFileSize);
                    mappedFile.setCommittedPosition(this.mappedFileSize);
                    this.mappedFiles.add(mappedFile);
                    log.info("load " + file.getPath() + " OK");
                } catch (IOException e) {
                    log.error("load file " + file + " error", e);
                    return false;
                }
            }
        }
        return true;
    }

The dispatch process

Dispatch happens in ReputMessageService and builds the index data for both the consumequeue and the index file. For the consumequeue, the logic lives mainly in CommitLogDispatcherBuildConsumeQueue:

    class CommitLogDispatcherBuildConsumeQueue implements CommitLogDispatcher {

        @Override
        public void dispatch(DispatchRequest request) {
            final int tranType = MessageSysFlag.getTransactionValue(request.getSysFlag());
            switch (tranType) {
                case MessageSysFlag.TRANSACTION_NOT_TYPE:
                case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
                    DefaultMessageStore.this.putMessagePositionInfo(request);
                    break;
                case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
                case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
                    break;
            }
        }
    }

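Next, the put allows up to 30 attempts when it fails. If all of them fail, the running-state flag WRITE_LOGICS_QUEUE_ERROR_BIT is set; if the write succeeds, the checkpoint is updated. The put itself, putMessagePositionInfo, looks like this: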

    private boolean putMessagePositionInfo(final long offset, final int size, final long tagsCode,
        final long cqOffset) {

        // this entry has already been dispatched
        if (offset <= this.maxPhysicOffset) {
            return true;
        }

        this.byteBufferIndex.flip();
        this.byteBufferIndex.limit(CQ_STORE_UNIT_SIZE);
        this.byteBufferIndex.putLong(offset);
        this.byteBufferIndex.putInt(size);
        this.byteBufferIndex.putLong(tagsCode);

        final long expectLogicOffset = cqOffset * CQ_STORE_UNIT_SIZE;

        // this call may create a new file
        MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile(expectLogicOffset);
        if (mappedFile != null) {

            if (mappedFile.isFirstCreateInQueue() && cqOffset != 0 && mappedFile.getWrotePosition() == 0) {
                this.minLogicOffset = expectLogicOffset;
                // set flushedWhere, which is later compared against the flush position
                this.mappedFileQueue.setFlushedWhere(expectLogicOffset);
                this.mappedFileQueue.setCommittedWhere(expectLogicOffset);
                this.fillPreBlank(mappedFile, expectLogicOffset);
                log.info("fill pre blank space " + mappedFile.getFileName() + " " + expectLogicOffset + " "
                    + mappedFile.getWrotePosition());
            }

            if (cqOffset != 0) {
                long currentLogicOffset = mappedFile.getWrotePosition() + mappedFile.getFileFromOffset();
                if (expectLogicOffset != currentLogicOffset) {
                    LOG_ERROR.warn(
                        "[BUG]logic queue order maybe wrong, expectLogicOffset: {} currentLogicOffset: {} Topic: {} QID: {} Diff: {}",
                        expectLogicOffset,
                        currentLogicOffset,
                        this.topic,
                        this.queueId,
                        expectLogicOffset - currentLogicOffset
                    );
                }
            }
            this.maxPhysicOffset = offset;
            // the actual write
            return mappedFile.appendMessage(this.byteBufferIndex.array());
        }
        return false;
    }
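The offset checks in the listing above reduce to a few lines of arithmetic, sketched here as a worked example. The class `LogicOffsetSketch` is hypothetical, and the file size of 300000 entries per file is an assumption chosen for the example rather than something stated in the text.

```java
// Hypothetical sketch of the offset arithmetic used by putMessagePositionInfo.
final class LogicOffsetSketch {
    static final int CQ_STORE_UNIT_SIZE = 20;

    // Byte offset at which entry number cqOffset is expected to start.
    static long expectLogicOffset(long cqOffset) {
        return cqOffset * CQ_STORE_UNIT_SIZE;
    }

    // Byte offset at which the next write into the last file will actually land.
    static long currentLogicOffset(long fileFromOffset, int wrotePosition) {
        return fileFromOffset + wrotePosition;
    }

    // True when the commitlog offset was already indexed, so the put is a no-op.
    static boolean alreadyDispatched(long offset, long maxPhysicOffset) {
        return offset <= maxPhysicOffset;
    }

    public static void main(String[] args) {
        int mappedFileSize = 300_000 * CQ_STORE_UNIT_SIZE; // assumed file size
        long expect = expectLogicOffset(300_001);          // second entry of the second file
        long current = currentLogicOffset(mappedFileSize, CQ_STORE_UNIT_SIZE);
        System.out.println("expect=" + expect + ", current=" + current
            + ", inOrder=" + (expect == current));
    }
}
```

When the two offsets differ, the listing only logs a warning and still appends, so the check is a diagnostic rather than a guard.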

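The actual write in appendMessage is relatively simple: it checks that the write position after the append would not exceed the file size; if it fits, it sets the fileChannel position, writes the data, and finally advances the write position.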

    public boolean appendMessage(final byte[] data) {
        int currentPos = this.wrotePosition.get();

        if ((currentPos + data.length) <= this.fileSize) {
            try {
                this.fileChannel.position(currentPos);
                this.fileChannel.write(ByteBuffer.wrap(data));
            } catch (Throwable e) {
                log.error("Error occurred when append message to mappedFile.", e);
            }
            this.wrotePosition.addAndGet(data.length);
            return true;
        }

        return false;
    }
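To try the bounded append in isolation, the following sketch applies the same steps to a temporary file. `AppendSketch` and `onTempFile` are hypothetical names. One deliberate difference from the listing is labeled in the comments: this sketch does not advance the write position when the write throws, whereas the listing logs the error and advances anyway.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for the append path of a fixed-size mapped file.
final class AppendSketch implements AutoCloseable {
    private final FileChannel channel;
    private final int fileSize;
    private final AtomicInteger wrotePosition = new AtomicInteger(0);

    private AppendSketch(FileChannel channel, int fileSize) {
        this.channel = channel;
        this.fileSize = fileSize;
    }

    // Opens a temporary file that may hold at most fileSize bytes.
    static AppendSketch onTempFile(int fileSize) {
        try {
            Path path = Files.createTempFile("cq-append-sketch", ".bin");
            path.toFile().deleteOnExit();
            return new AppendSketch(
                FileChannel.open(path, StandardOpenOption.READ, StandardOpenOption.WRITE), fileSize);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Appends data at the current write position if it still fits in the file.
    boolean append(byte[] data) {
        int currentPos = wrotePosition.get();
        if (currentPos + data.length > fileSize) {
            return false; // file is full, the caller must roll to the next file
        }
        try {
            ByteBuffer src = ByteBuffer.wrap(data);
            long pos = currentPos;
            while (src.hasRemaining()) {
                pos += channel.write(src, pos); // positional write, may be partial
            }
        } catch (IOException e) {
            return false; // assumption of this sketch: a failed write does not advance
        }
        wrotePosition.addAndGet(data.length);
        return true;
    }

    int wrotePosition() {
        return wrotePosition.get();
    }

    @Override
    public void close() {
        try {
            channel.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With a 40-byte file, two 20-byte entries fit and a third is rejected, which is the signal that the queue needs a new file.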

Next, consider mappedFileQueue.getLastMappedFile, which obtains the MappedFile that corresponds to a given logical offset.

    public MappedFile getLastMappedFile(final long startOffset, boolean needCreate) {
        long createOffset = -1;
        MappedFile mappedFileLast = getLastMappedFile();

        if (mappedFileLast == null) {
            // offset of the start of the file that contains startOffset
            createOffset = startOffset - (startOffset % this.mappedFileSize);
        }

        // if the last mappedFile is full, compute the start offset of the next file
        if (mappedFileLast != null && mappedFileLast.isFull()) {
            createOffset = mappedFileLast.getFileFromOffset() + this.mappedFileSize;
        }

        if (createOffset != -1 && needCreate) {
            String nextFilePath = this.storePath + File.separator + UtilAll.offset2FileName(createOffset);
            String nextNextFilePath = this.storePath + File.separator
                + UtilAll.offset2FileName(createOffset + this.mappedFileSize);
            MappedFile mappedFile = null;

            // includes pre-allocation of the file after next
            if (this.allocateMappedFileService != null) {
                mappedFile = this.allocateMappedFileService.putRequestAndReturnMappedFile(nextFilePath,
                    nextNextFilePath, this.mappedFileSize);
            } else {
                try {
                    // create the mmap directly
                    mappedFile = new MappedFile(nextFilePath, this.mappedFileSize);
                } catch (IOException e) {
                    log.error("create mappedFile exception", e);
                }
            }

            if (mappedFile != null) {
                if (this.mappedFiles.isEmpty()) {
                    mappedFile.setFirstCreateInQueue(true);
                }
                this.mappedFiles.add(mappedFile);
            }

            return mappedFile;
        }

        return mappedFileLast;
    }
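The two `createOffset` cases above, and the way an offset becomes a file name, can be checked with a small sketch. `FileOffsetSketch` is a hypothetical class, and the 20-digit zero-padded name is my understanding of what `UtilAll.offset2FileName` produces, so treat that format as an assumption.

```java
import java.util.Locale;

// Hypothetical sketch of how a logical offset maps to a file start offset and name.
final class FileOffsetSketch {

    // Queue is empty: round startOffset down to the start of the file that contains it.
    static long fileStartOffset(long startOffset, int mappedFileSize) {
        return startOffset - (startOffset % mappedFileSize);
    }

    // Last file is full: the next file starts right after it.
    static long nextFileStartOffset(long lastFileFromOffset, int mappedFileSize) {
        return lastFileFromOffset + mappedFileSize;
    }

    // Assumed naming scheme: the start offset, zero-padded to 20 digits.
    static String fileName(long offset) {
        return String.format(Locale.ROOT, "%020d", offset);
    }

    public static void main(String[] args) {
        int mappedFileSize = 6_000_000; // example value only
        long start = fileStartOffset(6_000_020L, mappedFileSize);
        System.out.println(fileName(start) + " then "
            + fileName(nextFileStartOffset(start, mappedFileSize)));
    }
}
```

Naming files after their start offset means a sorted directory listing is already in offset order, which is what the ascending sort in `load` relies on.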

AllocateMappedFileService#putRequestAndReturnMappedFile wraps each path in an AllocateRequest and puts it on requestQueue; a looping thread then keeps taking requests off the queue and creating the mmap for each one.

    public MappedFile putRequestAndReturnMappedFile(String nextFilePath, String nextNextFilePath, int fileSize) {
        int canSubmitRequests = 2;
        if (this.messageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
            if (this.messageStore.getMessageStoreConfig().isFastFailIfNoBufferInStorePool()
                && BrokerRole.SLAVE != this.messageStore.getMessageStoreConfig().getBrokerRole()) {
                // if broker is slave, don't fast fail even no buffer in pool
                // how many more AllocateRequests can be accepted:
                // buffers remaining in the pool minus requests already queued
                canSubmitRequests = this.messageStore.getTransientStorePool().remainBufferNumbs()
                    - this.requestQueue.size();
            }
        }

        AllocateRequest nextReq = new AllocateRequest(nextFilePath, fileSize);
        boolean nextPutOK = this.requestTable.putIfAbsent(nextFilePath, nextReq) == null;

        if (nextPutOK) {
            if (canSubmitRequests <= 0) {
                log.warn("[NOTIFYME]TransientStorePool is not enough, so create mapped file error, "
                    + "RequestQueueSize : {}, StorePoolSize: {}", this.requestQueue.size(),
                    this.messageStore.getTransientStorePool().remainBufferNumbs());
                this.requestTable.remove(nextFilePath);
                return null;
            }
            boolean offerOK = this.requestQueue.offer(nextReq);
            if (!offerOK) {
                log.warn("never expected here, add a request to preallocate queue failed");
            }
            canSubmitRequests--;
        }

        // also submit a request for the file after next
        AllocateRequest nextNextReq = new AllocateRequest(nextNextFilePath, fileSize);
        boolean nextNextPutOK = this.requestTable.putIfAbsent(nextNextFilePath, nextNextReq) == null;
        if (nextNextPutOK) {
            if (canSubmitRequests <= 0) {
                log.warn("[NOTIFYME]TransientStorePool is not enough, so skip preallocate mapped file, "
                    + "RequestQueueSize : {}, StorePoolSize: {}", this.requestQueue.size(),
                    this.messageStore.getTransientStorePool().remainBufferNumbs());
                this.requestTable.remove(nextNextFilePath);
            } else {
                boolean offerOK = this.requestQueue.offer(nextNextReq);
                if (!offerOK) {
                    log.warn("never expected here, add a request to preallocate queue failed");
                }
            }
        }

        if (hasException) {
            log.warn(this.getServiceName() + " service has exception. so return null");
            return null;
        }

        AllocateRequest result = this.requestTable.get(nextFilePath);
        try {
            if (result != null) {
                // wait synchronously for the file to be created
                boolean waitOK = result.getCountDownLatch().await(waitTimeOut, TimeUnit.MILLISECONDS);
                if (!waitOK) {
                    log.warn("create mmap timeout " + result.getFilePath() + " " + result.getFileSize());
                    return null;
                } else {
                    this.requestTable.remove(nextFilePath);
                    return result.getMappedFile();
                }
            } else {
                log.error("find preallocate mmap failed, this never happen");
            }
        } catch (InterruptedException e) {
            log.warn(this.getServiceName() + " service has exception. ", e);
        }

        return null;
    }
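Stripped of the pool accounting, the hand-off in the listing above is a request table, a queue, and a latch per request. The sketch below shows only that pattern under simplifying assumptions: `AllocateSketch` and `Request` are hypothetical, a `String` stands in for the MappedFile, and a `LinkedBlockingQueue` is used for simplicity.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of "submit two requests, wait for the first" pre-allocation.
final class AllocateSketch {
    static final class Request {
        final String path;
        final CountDownLatch done = new CountDownLatch(1);
        volatile String result; // stands in for the created MappedFile

        Request(String path) {
            this.path = path;
        }
    }

    private final ConcurrentMap<String, Request> requestTable = new ConcurrentHashMap<>();
    private final BlockingQueue<Request> requestQueue = new LinkedBlockingQueue<>();

    AllocateSketch() {
        Thread worker = new Thread(() -> {
            try {
                while (true) {
                    Request req = requestQueue.take();
                    if (requestTable.get(req.path) != req) {
                        continue; // request expired or replaced, skip it
                    }
                    req.result = "mapped:" + req.path; // the real service creates the mmap here
                    req.done.countDown();
                }
            } catch (InterruptedException e) {
                // interrupted, treat as shutdown
            }
        });
        worker.setDaemon(true);
        worker.start();
    }

    // Queues both files, then blocks until the first one is ready or the timeout expires.
    String putRequestAndWait(String nextPath, String nextNextPath, long timeoutMs) {
        submit(nextPath);
        submit(nextNextPath);
        Request req = requestTable.get(nextPath);
        try {
            if (req != null && req.done.await(timeoutMs, TimeUnit.MILLISECONDS)) {
                requestTable.remove(nextPath);
                return req.result;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return null;
    }

    private void submit(String path) {
        Request req = new Request(path);
        if (requestTable.putIfAbsent(path, req) == null) {
            requestQueue.offer(req);
        }
    }
}
```

The benefit shows on the second call: when the caller later asks for the file that was queued as "next next", its request is already in the table and usually already completed, so the wait returns almost immediately.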

Inside the run method, mmapOperation is executed repeatedly:

    private boolean mmapOperation() {
        boolean isSuccess = false;
        AllocateRequest req = null;
        try {
            // take a request from the queue
            req = this.requestQueue.take();
            AllocateRequest expectedRequest = this.requestTable.get(req.getFilePath());
            if (null == expectedRequest) {
                log.warn("this mmap request expired, maybe cause timeout " + req.getFilePath() + " "
                    + req.getFileSize());
                return true;
            }
            if (expectedRequest != req) {
                log.warn("never expected here, maybe cause timeout " + req.getFilePath() + " "
                    + req.getFileSize() + ", req:" + req + ", expectedRequest:" + expectedRequest);
                return true;
            }

            if (req.getMappedFile() == null) {
                long beginTime = System.currentTimeMillis();

                MappedFile mappedFile;
                if (messageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
                    try {
                        mappedFile = ServiceLoader.load(MappedFile.class).iterator().next();
                        // back the mapped file with the off-heap memory pool
                        mappedFile.init(req.getFilePath(), req.getFileSize(), messageStore.getTransientStorePool());
                    } catch (RuntimeException e) {
                        log.warn("Use default implementation.");
                        mappedFile = new MappedFile(req.getFilePath(), req.getFileSize(), messageStore.getTransientStorePool());
                    }
                } else {
                    mappedFile = new MappedFile(req.getFilePath(), req.getFileSize());
                }

                long eclipseTime = UtilAll.computeEclipseTimeMilliseconds(beginTime);
                if (eclipseTime > 10) {
                    int queueSize = this.requestQueue.size();
                    log.warn("create mappedFile spent time(ms) " + eclipseTime + " queue size " + queueSize
                        + " " + req.getFilePath() + " " + req.getFileSize());
                }

                // warm up the commitlog: one byte is written into every page of the file
                if (mappedFile.getFileSize() >= this.messageStore.getMessageStoreConfig()
                    .getMapedFileSizeCommitLog()
                    &&
                    this.messageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
                    mappedFile.warmMappedFile(this.messageStore.getMessageStoreConfig().getFlushDiskType(),
                        this.messageStore.getMessageStoreConfig().getFlushLeastPagesWhenWarmMapedFile());
                }

                req.setMappedFile(mappedFile);
                this.hasException = false;
                isSuccess = true;
            }
        } catch (InterruptedException e) {
            log.warn(this.getServiceName() + " interrupted, possibly by shutdown.");
            this.hasException = true;
            return false;
        } catch (IOException e) {
            log.warn(this.getServiceName() + " service has exception. ", e);
            this.hasException = true;
            if (null != req) {
                requestQueue.offer(req);
                try {
                    Thread.sleep(1);
                } catch (InterruptedException ignored) {
                }
            }
        } finally {
            if (req != null && isSuccess)
                req.getCountDownLatch().countDown();
        }
        return true;
    }

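Two things matter in mmapOperation: it decides whether the mapped file is backed by the off-heap memory pool (TransientStorePool) or is a plain mmap, and it warms up the file. The warm-up only runs for files at least as large as the configured commitlog file size, and only when isWarmMapedFileEnable() is true.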

    /**
     * Warm-up, used when putting data.
     * TODO: data is written in advance here.
     *
     * @param type flush disk type
     * @param pages number of pages touched between forced flushes
     */
    public void warmMappedFile(FlushDiskType type, int pages) {
        long beginTime = System.currentTimeMillis();
        ByteBuffer byteBuffer = this.mappedByteBuffer.slice();
        int flush = 0;
        long time = System.currentTimeMillis();
        for (int i = 0, j = 0; i < this.fileSize; i += MappedFile.OS_PAGE_SIZE, j++) {
            // write one byte at the start of every 4K page
            byteBuffer.put(i, (byte) 0);
            // force flush when flush disk type is sync:
            // once at least `pages` pages have been touched since the last flush
            if (type == FlushDiskType.SYNC_FLUSH) {
                if ((i / OS_PAGE_SIZE) - (flush / OS_PAGE_SIZE) >= pages) {
                    flush = i;
                    mappedByteBuffer.force();
                }
            }

            // prevent gc
            if (j % 1000 == 0) {
                log.info("j={}, costTime={}", j, System.currentTimeMillis() - time);
                time = System.currentTimeMillis();
                try {
                    Thread.sleep(0);
                } catch (InterruptedException e) {
                    log.error("Interrupted", e);
                }
            }
        }

        // force flush when prepare load finished
        if (type == FlushDiskType.SYNC_FLUSH) {
            log.info("mapped file warm-up done, force to disk, mappedFile={}, costTime={}",
                this.getFileName(), System.currentTimeMillis() - beginTime);
            mappedByteBuffer.force();
        }
        log.info("mapped file warm-up done. mappedFile={}, costTime={}", this.getFileName(),
            System.currentTimeMillis() - beginTime);

        // mlock pins the mappedByteBuffer memory at the operating-system level so that
        // it is not reclaimed and handed out again; internally it calls LibC.INSTANCE.mlock
        this.mlock();
    }
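The page-touching loop can be tried on a temporary file with nothing but the JDK. This is a rough sketch under stated assumptions: `WarmUpSketch` and `warmTempFile` are hypothetical names, the 4 KB page size is assumed, and the `mlock` step is omitted because it needs a native binding.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical sketch of warming a memory-mapped file by touching every page.
final class WarmUpSketch {
    static final int OS_PAGE_SIZE = 4 * 1024; // assumed page size

    // Writes one byte per page and returns the number of pages touched.
    static int warm(MappedByteBuffer buffer, int fileSize, boolean syncFlush, int flushEveryPages) {
        int touched = 0;
        int lastFlushed = 0;
        for (int i = 0; i < fileSize; i += OS_PAGE_SIZE) {
            buffer.put(i, (byte) 0); // touching the page makes the OS back it with memory
            touched++;
            if (syncFlush && (i / OS_PAGE_SIZE) - (lastFlushed / OS_PAGE_SIZE) >= flushEveryPages) {
                lastFlushed = i;
                buffer.force(); // push the touched pages to disk in batches
            }
        }
        if (syncFlush) {
            buffer.force(); // final flush once every page has been touched
        }
        return touched;
    }

    // Maps a temporary file of fileSize bytes and warms it.
    static int warmTempFile(int fileSize) {
        try {
            Path path = Files.createTempFile("warm-sketch", ".bin");
            path.toFile().deleteOnExit();
            try (FileChannel channel = FileChannel.open(path,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
                return warm(buffer, fileSize, true, 4);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("pages touched: " + warmTempFile(16 * OS_PAGE_SIZE));
    }
}
```

Touching the pages up front moves the page-fault cost out of the message write path, and batching `force()` every few pages keeps the synchronous flush from being issued once per page.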