Data structures

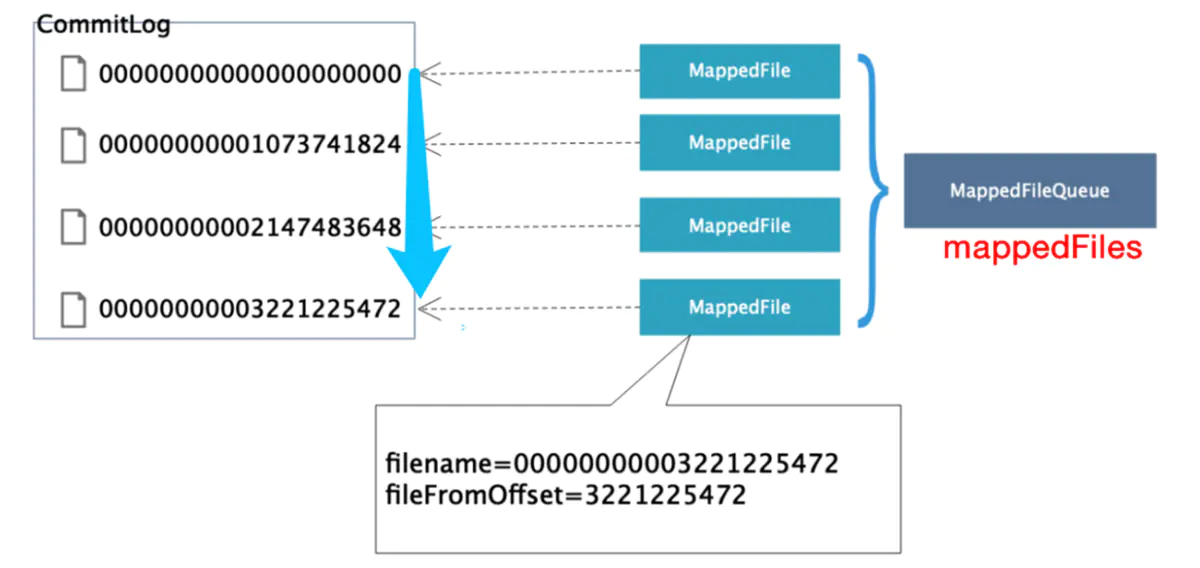

CommitLog maintains a group of MappedFile instances through a field of type MappedFileQueue. Let's look at the fields that class contains.

    public class MappedFileQueue {
        private static final Logger log = LoggerFactory.getLogger(LoggerName.STORE_LOGGER_NAME);
        private static final Logger LOG_ERROR = LoggerFactory.getLogger(LoggerName.STORE_ERROR_LOGGER_NAME);

        private static final int DELETE_FILES_BATCH_MAX = 10;

        // Directory that holds the files
        private final String storePath;
        // Size of each memory-mapped file
        private final int mappedFileSize;
        // The MappedFile instances managed by this queue
        private final CopyOnWriteArrayList<MappedFile> mappedFiles = new CopyOnWriteArrayList<MappedFile>();
        private final AllocateMappedFileService allocateMappedFileService;
        // Offset up to which data has been flushed from the page cache to disk
        private long flushedWhere = 0;
        // Offset up to which data has been committed from the write buffer to the page cache
        private long committedWhere = 0;
        // Timestamp of the last store operation
        private volatile long storeTimestamp = 0;
        // ...
    }
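To make the roles of mappedFileSize and the file list more concrete, here is a minimal sketch of how a global commitlog offset could be located inside a queue of fixed-size files. `OffsetLocator` and its methods are hypothetical names, not RocketMQ API. The sketch assumes that every file has the same size, that each file is identified by its starting offset, and that starting offsets are multiples of the file size; the 20-digit zero-padded file name is also an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: locates a global offset inside a queue of fixed-size files. */
final class OffsetLocator {
    private final int fileSize;
    // Starting offsets of the files, ascending and contiguous (assumption)
    private final List<Long> fileFromOffsets = new ArrayList<>();

    OffsetLocator(int fileSize) {
        this.fileSize = fileSize;
    }

    void addFile(long fileFromOffset) {
        fileFromOffsets.add(fileFromOffset);
    }

    /** Returns the list index of the file that contains the offset, or -1 if none does. */
    int indexOf(long offset) {
        if (fileFromOffsets.isEmpty()) {
            return -1;
        }
        long first = fileFromOffsets.get(0);
        long end = fileFromOffsets.get(fileFromOffsets.size() - 1) + fileSize;
        if (offset < first || offset >= end) {
            return -1;
        }
        return (int) ((offset - first) / fileSize);
    }

    /** Position of the offset inside its file; valid when file offsets are multiples of fileSize. */
    int positionInFile(long offset) {
        return (int) (offset % fileSize);
    }

    /** Assumed naming scheme: the starting offset, zero-padded to 20 digits. */
    static String fileName(long fileFromOffset) {
        return String.format("%020d", fileFromOffset);
    }
}
```

Because the files are contiguous and equally sized, the lookup is plain arithmetic and needs no search over the list.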

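Initialization

When the CommitLog is constructed it creates the MappedFileQueue and fixes the size of every MappedFile. Depending on the configuration it creates either the synchronous flush service GroupCommitService or the asynchronous flush service FlushRealTimeService, and it also creates the asynchronous commit service CommitRealTimeService.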

    public CommitLog(final DefaultMessageStore defaultMessageStore) {
        this.mappedFileQueue = new MappedFileQueue(defaultMessageStore.getMessageStoreConfig().getStorePathCommitLog(),
            defaultMessageStore.getMessageStoreConfig().getMapedFileSizeCommitLog(),
            defaultMessageStore.getAllocateMappedFileService());
        this.defaultMessageStore = defaultMessageStore;

        if (FlushDiskType.SYNC_FLUSH == defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {
            this.flushCommitLogService = new GroupCommitService(); // synchronous flush
        } else {
            this.flushCommitLogService = new FlushRealTimeService(); // asynchronous flush: page cache -> disk
        }

        this.commitLogService = new CommitRealTimeService(); // asynchronous commit service: direct memory -> page cache

        // Callback that appends a message to the file
        this.appendMessageCallback = new DefaultAppendMessageCallback(defaultMessageStore.getMessageStoreConfig().getMaxMessageSize());
        // Per-thread encoder for batch messages
        batchEncoderThreadLocal = new ThreadLocal<MessageExtBatchEncoder>() {
            @Override
            protected MessageExtBatchEncoder initialValue() {
                return new MessageExtBatchEncoder(defaultMessageStore.getMessageStoreConfig().getMaxMessageSize());
            }
        };
        // Use a ReentrantLock, or RocketMQ's own lock built on a CAS loop
        this.putMessageLock = defaultMessageStore.getMessageStoreConfig().isUseReentrantLockWhenPutMessage()
            ? new PutMessageReentrantLock() : new PutMessageSpinLock();
    }
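The last line of the constructor chooses between a ReentrantLock and a lock built on a CAS loop. As an illustration of the second option, here is a minimal sketch of a spin lock on top of `AtomicBoolean`. `SpinLockSketch` and `contendedCount` are hypothetical names; this is not the source of PutMessageSpinLock, only one plausible shape of a CAS-loop lock.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical spin lock built on a CAS loop; not the RocketMQ class itself. */
final class SpinLockSketch {
    // true means the lock is free, false means some thread holds it
    private final AtomicBoolean free = new AtomicBoolean(true);

    void lock() {
        // Retry the CAS until this thread flips the flag from free to held
        while (!free.compareAndSet(true, false)) {
            // busy-wait; nothing to do between attempts
        }
    }

    void unlock() {
        free.set(true);
    }

    /** Demo: several threads increment a plain counter under the lock and the total is returned. */
    static int contendedCount(int threads, int incrementsPerThread) {
        SpinLockSketch lock = new SpinLockSketch();
        int[] counter = {0};
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int n = 0; n < incrementsPerThread; n++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }
}
```

A spinning lock avoids parking and waking threads, which tends to pay off only when the critical section is very short; under heavy contention a blocking lock is usually the safer choice.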

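Loading

Loading the commitlog really means calling load() on the MappedFileQueue, which memory-maps every commitlog file and adds it to the list. load() is shown first; the mapping itself is defined in MappedFile's init method, shown after the two questions below.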

    public boolean load() {
        File dir = new File(this.storePath);
        File[] files = dir.listFiles();
        if (files != null) {
            // ascending order
            Arrays.sort(files);
            for (File file : files) {

                if (file.length() != this.mappedFileSize) {
                    log.warn(file + " " + file.length()
                        + " length not matched message store config value, ignore it");
                    return true;
                }

                try {
                    MappedFile mappedFile = new MappedFile(file.getPath(), mappedFileSize);

                    mappedFile.setWrotePosition(this.mappedFileSize);
                    mappedFile.setFlushedPosition(this.mappedFileSize);
                    mappedFile.setCommittedPosition(this.mappedFileSize);
                    this.mappedFiles.add(mappedFile);
                    log.info("load " + file.getPath() + " OK");
                } catch (IOException e) {
                    log.error("load file " + file + " error", e);
                    return false;
                }
            }
        }

        return true;
    }

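This may raise two questions, since the last file may not be full yet:

1. Why are wrotePosition, flushedPosition and committedPosition all initialized to mappedFileSize?

2. Where are the MappedFileQueue's flushedWhere and committedWhere assigned?

Both answers lie in the recover logic that runs after loading: recovery scans the most recent files, finds the end of the last valid message, sets flushedWhere and committedWhere to that offset, and resets the positions of the file that contains it.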
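The recovery step can be modelled in a few lines. The sketch below is a simplified model under stated assumptions, not the RocketMQ source: `RecoverSketch`, `FileState` and `recoverTo` are hypothetical names, and the scan that finds the end of the last valid message is replaced by a `validEnd` parameter.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of recovery: fix up positions once the last valid offset is known. */
final class RecoverSketch {
    /** Stand-in for a mapped file: only its starting offset and its three positions. */
    static final class FileState {
        final long fileFromOffset;
        int wrotePosition;
        int committedPosition;
        int flushedPosition;

        FileState(long fileFromOffset, int fileSize) {
            this.fileFromOffset = fileFromOffset;
            // Same as load(): every file is assumed full until recovery says otherwise
            this.wrotePosition = fileSize;
            this.committedPosition = fileSize;
            this.flushedPosition = fileSize;
        }
    }

    final int fileSize;
    final List<FileState> files = new ArrayList<>();
    long flushedWhere = 0;
    long committedWhere = 0;

    RecoverSketch(int fileSize, int fileCount) {
        this.fileSize = fileSize;
        for (int i = 0; i < fileCount; i++) {
            files.add(new FileState((long) i * fileSize, fileSize));
        }
    }

    /** validEnd is the offset just past the last valid message found by scanning. */
    void recoverTo(long validEnd) {
        flushedWhere = validEnd;
        committedWhere = validEnd;
        List<FileState> dirty = new ArrayList<>();
        for (FileState f : files) {
            long tail = f.fileFromOffset + fileSize;
            if (tail > validEnd) {
                if (validEnd >= f.fileFromOffset) {
                    // The file that contains validEnd: pull its positions back
                    int pos = (int) (validEnd % fileSize);
                    f.wrotePosition = pos;
                    f.committedPosition = pos;
                    f.flushedPosition = pos;
                } else {
                    // The file lies entirely beyond the valid data
                    dirty.add(f);
                }
            }
        }
        files.removeAll(dirty);
    }
}
```

With three 1024-byte files and a valid end of 1500, the first file stays full, the second ends up with all positions at 476, and the third is dropped.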

    private void init(final String fileName, final int fileSize) throws IOException {
        this.fileName = fileName;
        this.fileSize = fileSize;
        this.file = new File(fileName);
        this.fileFromOffset = Long.parseLong(this.file.getName());
        boolean ok = false;

        ensureDirOK(this.file.getParent());

        try {
            this.fileChannel = new RandomAccessFile(this.file, "rw").getChannel();
            this.mappedByteBuffer = this.fileChannel.map(MapMode.READ_WRITE, 0, fileSize);
            TOTAL_MAPPED_VIRTUAL_MEMORY.addAndGet(fileSize);
            TOTAL_MAPPED_FILES.incrementAndGet();
            ok = true;
        } catch (FileNotFoundException e) {
            log.error("create file channel " + this.fileName + " Failed. ", e);
            throw e;
        } catch (IOException e) {
            log.error("map file " + this.fileName + " Failed. ", e);
            throw e;
        } finally {
            if (!ok && this.fileChannel != null) {
                this.fileChannel.close();
            }
        }
    }
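The two key steps of init, deriving fileFromOffset from the file name and mapping the file into memory, can be tried in isolation with plain JDK classes. The following is a minimal sketch: `MmapSketch` and its methods are hypothetical names, the temporary directory and the 20-digit file name are assumptions for the demo, and the file is sized explicitly before it is mapped.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;

/** Hypothetical demo of mapping a fixed-size file whose name is its starting offset. */
final class MmapSketch {
    /** Writes a value through a mapping of a temp file and reads it back with plain file I/O. */
    static long writeThroughMapping(long fileFromOffset, int fileSize, long value) {
        try {
            File dir = Files.createTempDirectory("mmap-sketch").toFile();
            dir.deleteOnExit();
            File file = new File(dir, String.format("%020d", fileFromOffset));
            file.deleteOnExit();

            try (RandomAccessFile raf = new RandomAccessFile(file, "rw");
                 FileChannel channel = raf.getChannel()) {
                // Size the file first, then map the whole of it read-write
                raf.setLength(fileSize);
                MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
                buffer.putLong(0, value);
                // Push the dirty page to the storage device
                buffer.force();
            }

            try (RandomAccessFile raf = new RandomAccessFile(file, "r")) {
                return raf.readLong();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Mirrors the fileFromOffset line of init: the file name is parsed as a long. */
    static long parseFileFromOffset(File file) {
        return Long.parseLong(file.getName());
    }
}
```

For example, `MmapSketch.writeThroughMapping(1024L, 4096, 42L)` creates a 4096-byte file, writes 42 through the mapping, and reads the same value back through a separate file handle.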

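Startup

Starting the commitlog starts the flush service and, when the transient store pool is enabled, the asynchronous commit service.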

    public void start() {
        this.flushCommitLogService.start();

        if (defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
            this.commitLogService.start();
        }
    }

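Flushing to disk

Whether flushing is synchronous or asynchronous is determined by the flushDiskType parameter; the default is asynchronous.

The flush code differs somewhat between the latest master and version 4.2.0, so it is worth a closer look: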

putMessage

The entry point is processRequest in the broker's SendMessageProcessor.

    @Override
    public RemotingCommand processRequest(ChannelHandlerContext ctx,
                                          RemotingCommand request) throws RemotingCommandException {
        SendMessageContext mqtraceContext;
        switch (request.getCode()) {
            case RequestCode.CONSUMER_SEND_MSG_BACK:
                // Handle the consumer's send-back (ACK) request
                return this.consumerSendMsgBack(ctx, request);
            default:
                SendMessageRequestHeader requestHeader = parseRequestHeader(request);
                if (requestHeader == null) {
                    return null;
                }

                mqtraceContext = buildMsgContext(ctx, requestHeader);
                // No SendMessageHook is registered for now
                this.executeSendMessageHookBefore(ctx, request, mqtraceContext);

                RemotingCommand response;
                if (requestHeader.isBatch()) {
                    response = this.sendBatchMessage(ctx, request, mqtraceContext, requestHeader);
                } else {
                    response = this.sendMessage(ctx, request, mqtraceContext, requestHeader);
                }

                // No SendMessageHook is registered for now; sendMessage also updates the fields of mqtraceContext
                this.executeSendMessageHookAfter(response, mqtraceContext);
                return response;
        }
    }

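sendMessage first checks that the broker is configured as writable, that the topic name is not the default topic, and that the topic config exists; if it does not, a topicConfig is created from the default configuration (in msgCheck). It then checks that queueId is consistent with the read and write queue counts, and finally whether transactions are supported.

It then calls: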

PutMessageResult putMessageResult = this.brokerController.getMessageStore().putMessage(msgInner)

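1) Check whether the store module has been shut down

2) Reject the write if this broker is a slave, since a slave does not accept writes

3) Check the module's running flags

4) Check the length of the topic

5) Check the length of the extended properties

6) If another putMessage call has not finished yet and has already taken more than 1s, the OS page cache is considered busy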
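The six checks above can be sketched as a single guard function. `PutMessagePrecheck`, its `Status` enum and its parameters are hypothetical, not the DefaultMessageStore API. The two length limits (127 for the topic, 32767 for the properties) are assumptions chosen for illustration, and `beginTimeInLock == 0` is assumed to mean that no append is currently in progress.

```java
/** Hypothetical guard that mirrors the six pre-checks in order. */
final class PutMessagePrecheck {
    enum Status { OK, SERVICE_NOT_AVAILABLE, MESSAGE_ILLEGAL, PROPERTIES_SIZE_EXCEEDED, OS_PAGECACHE_BUSY }

    // Assumed limits, for illustration only
    static final int MAX_TOPIC_LENGTH = Byte.MAX_VALUE;
    static final int MAX_PROPERTIES_LENGTH = Short.MAX_VALUE;
    static final long PAGE_CACHE_BUSY_MILLIS = 1000;

    static Status check(boolean shutdown, boolean slave, boolean writeable,
                        String topic, String properties,
                        long beginTimeInLock, long nowMillis) {
        if (shutdown) {
            return Status.SERVICE_NOT_AVAILABLE; // 1) store already shut down
        }
        if (slave) {
            return Status.SERVICE_NOT_AVAILABLE; // 2) a slave does not accept writes
        }
        if (!writeable) {
            return Status.SERVICE_NOT_AVAILABLE; // 3) running flags forbid writing
        }
        if (topic.length() > MAX_TOPIC_LENGTH) {
            return Status.MESSAGE_ILLEGAL; // 4) topic too long
        }
        if (properties != null && properties.length() > MAX_PROPERTIES_LENGTH) {
            return Status.PROPERTIES_SIZE_EXCEEDED; // 5) properties too long
        }
        // 6) an append that started more than one second ago is still holding the lock
        if (beginTimeInLock > 0 && nowMillis - beginTimeInLock > PAGE_CACHE_BUSY_MILLIS) {
            return Status.OS_PAGECACHE_BUSY;
        }
        return Status.OK;
    }
}
```

Failing fast on the cheap checks keeps a stalled page cache from piling up even more writers behind the append lock.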

Next it calls:

PutMessageResult result = this.commitLog.putMessage(msg)

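1) Set the message's store time to the current timestamp and set its CRC checksum

2) For a delayed message, change the topic to SCHEDULE_TOPIC_XXXX and back up the original topic and queueId

3) Get the last MappedFile of the commitlog, then take the message write lock

4) If that MappedFile is full, create a new one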
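Steps 1 and 2 can be sketched on a small message class. Everything here is hypothetical and simplified: `DelaySketch` and `Msg` are illustration-only names, the property keys `REAL_TOPIC` and `REAL_QID` and the mapping from delay level to queueId are assumptions, and the checksum is a plain `java.util.zip.CRC32` over the body.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.zip.CRC32;

/** Hypothetical sketch of stamping a message and rerouting it when it is delayed. */
final class DelaySketch {
    static final String SCHEDULE_TOPIC = "SCHEDULE_TOPIC_XXXX";

    static final class Msg {
        String topic;
        int queueId;
        final int delayLevel;
        final byte[] body;
        long storeTimestamp;
        long bodyCrc;
        final Map<String, String> properties = new HashMap<>();

        Msg(String topic, int queueId, int delayLevel, byte[] body) {
            this.topic = topic;
            this.queueId = queueId;
            this.delayLevel = delayLevel;
            this.body = body;
        }
    }

    static void prepare(Msg msg, long nowMillis) {
        // Step 1: store time and checksum of the body
        msg.storeTimestamp = nowMillis;
        CRC32 crc = new CRC32();
        crc.update(msg.body);
        msg.bodyCrc = crc.getValue();

        // Step 2: a delayed message is parked under the schedule topic
        if (msg.delayLevel > 0) {
            // Back up the real destination so it can be restored later (assumed keys)
            msg.properties.put("REAL_TOPIC", msg.topic);
            msg.properties.put("REAL_QID", String.valueOf(msg.queueId));
            msg.topic = SCHEDULE_TOPIC;
            // Assumption: one schedule queue per delay level
            msg.queueId = msg.delayLevel - 1;
        }
    }
}
```

Keeping the original topic and queueId in the properties is what lets the message be delivered to its real destination once the delay has elapsed.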

Next the message is written into the page cache: result = mappedFile.appendMessage(msg, this.appendMessageCallback);

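1) Compute the physical offset at which the message will be written: long wroteOffset = fileFromOffset + byteBuffer.position();

2) Transactional messages are handled separately

3) Put the message into byteBuffer, which places it in the page cache; if the commit service is enabled, the data waits for a commit

4) Increment the logical offset of the consume queue identified by topic + queueId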
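Steps 1, 3 and 4 fit into a small append routine. This is a minimal sketch under stated assumptions: `AppendSketch` is a hypothetical class, the record layout (total size, queue offset, physical offset, body) is a simplification and not the real message format, a heap `ByteBuffer` stands in for the mapped buffer, and the `topic-queueId` map key is an assumption.

```java
import java.nio.ByteBuffer;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical append routine for one fixed-size file. */
final class AppendSketch {
    private final long fileFromOffset;
    private final ByteBuffer byteBuffer;
    // Next logical offset for each "topic-queueId" key
    private final Map<String, Long> topicQueueTable = new HashMap<>();

    AppendSketch(long fileFromOffset, int fileSize) {
        this.fileFromOffset = fileFromOffset;
        this.byteBuffer = ByteBuffer.allocate(fileSize);
    }

    /** Returns the physical offset the record was written at, or -1 if the file has no room. */
    long append(String topic, int queueId, byte[] body) {
        int totalSize = 4 + 8 + 8 + body.length;
        if (byteBuffer.remaining() < totalSize) {
            return -1; // the caller would roll over to a new file
        }
        // Step 1: physical offset = start of the file + current write position
        long wroteOffset = fileFromOffset + byteBuffer.position();

        String key = topic + "-" + queueId;
        long queueOffset = topicQueueTable.getOrDefault(key, 0L);

        // Step 3: write the record into the buffer
        byteBuffer.putInt(totalSize);
        byteBuffer.putLong(queueOffset);
        byteBuffer.putLong(wroteOffset);
        byteBuffer.put(body);

        // Step 4: the next message of this queue gets the next logical offset
        topicQueueTable.put(key, queueOffset + 1);
        return wroteOffset;
    }

    long nextQueueOffset(String topic, int queueId) {
        return topicQueueTable.getOrDefault(topic + "-" + queueId, 0L);
    }
}
```

The physical offset is global across all files, while the logical offset counts messages per queue, which is why the two are tracked separately.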

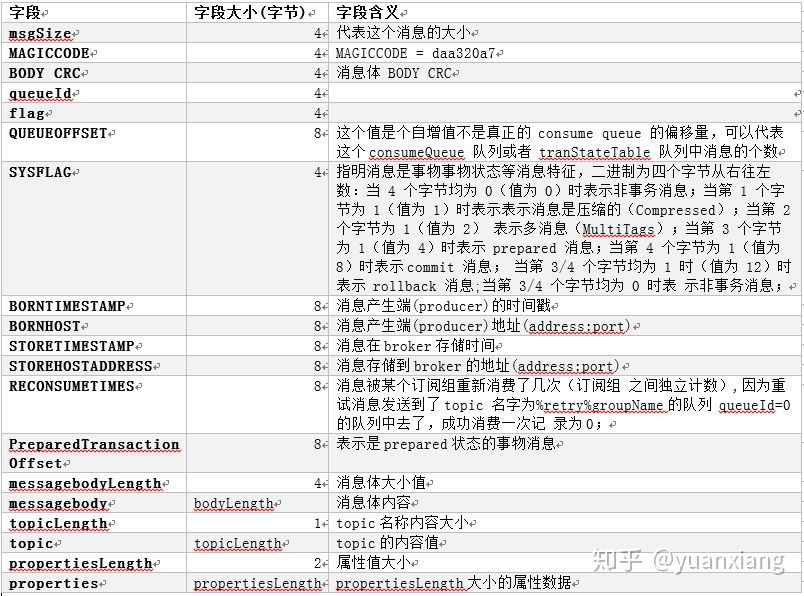

The message storage layout is attached here.

getMessage will be covered in the consumeQueue chapter.

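Dispatching commitlog data to the consume queue and index files

ReputMessageService periodically pulls data from the commitlog, wraps it into dispatch requests, and writes it to the consume queue and the index.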

    private void doReput() {
        // Loop until reputFromOffset reaches the max offset
        for (boolean doNext = true; this.isCommitLogAvailable() && doNext; ) {

            if (DefaultMessageStore.this.getMessageStoreConfig().isDuplicationEnable()
                && this.reputFromOffset >= DefaultMessageStore.this.getConfirmOffset()) {
                break;
            }

            // Fetch the commitlog data that follows reputFromOffset
            SelectMappedBufferResult result = DefaultMessageStore.this.commitLog.getData(reputFromOffset);
            if (result != null) {
                try {
                    this.reputFromOffset = result.getStartOffset();

                    for (int readSize = 0; readSize < result.getSize() && doNext; ) {
                        DispatchRequest dispatchRequest =
                            DefaultMessageStore.this.commitLog.checkMessageAndReturnSize(result.getByteBuffer(), false, false);
                        // Size of the valid message
                        int size = dispatchRequest.getMsgSize();

                        if (dispatchRequest.isSuccess()) {
                            if (size > 0) {
                                // dispatcherList holds CommitLogDispatcherBuildConsumeQueue and
                                // CommitLogDispatcherBuildIndex, which write the consume queue and the index
                                DefaultMessageStore.this.doDispatch(dispatchRequest);
                                // Message-arrival notification; covered later together with long polling
                                if (BrokerRole.SLAVE != DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole()
                                    && DefaultMessageStore.this.brokerConfig.isLongPollingEnable()) {
                                    DefaultMessageStore.this.messageArrivingListener.arriving(dispatchRequest.getTopic(),
                                        dispatchRequest.getQueueId(), dispatchRequest.getConsumeQueueOffset() + 1,
                                        dispatchRequest.getTagsCode(), dispatchRequest.getStoreTimestamp(),
                                        dispatchRequest.getBitMap(), dispatchRequest.getPropertiesMap());
                                }
                                // FIXED BUG By shijia
                                this.reputFromOffset += size;
                                readSize += size;
                                // Statistics
                                if (DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE) {
                                    DefaultMessageStore.this.storeStatsService
                                        .getSinglePutMessageTopicTimesTotal(dispatchRequest.getTopic()).incrementAndGet();
                                    DefaultMessageStore.this.storeStatsService
                                        .getSinglePutMessageTopicSizeTotal(dispatchRequest.getTopic())
                                        .addAndGet(dispatchRequest.getMsgSize());
                                }
                            } else if (size == 0) {
                                // End of the file reached: take the start offset of the next file
                                // and assign it to reputFromOffset
                                this.reputFromOffset = DefaultMessageStore.this.commitLog.rollNextFile(this.reputFromOffset);
                                readSize = result.getSize();
                            }
                        } else if (!dispatchRequest.isSuccess()) {

                            if (size > 0) {
                                log.error("[BUG]read total count not equals msg total size. reputFromOffset={}", reputFromOffset);
                                this.reputFromOffset += size;
                            } else {
                                doNext = false;
                                if (DefaultMessageStore.this.brokerConfig.getBrokerId() == MixAll.MASTER_ID) {
                                    log.error("[BUG]the master dispatch message to consume queue error, COMMITLOG OFFSET: {}",
                                        this.reputFromOffset);

                                    this.reputFromOffset += result.getSize() - readSize;
                                }
                            }
                        }
                    }
                } finally {
                    result.release();
                }
            } else {
                doNext = false;
            }
        }
    }
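The core of doReput is a scan over length-prefixed records that jumps to the next file when it hits the end of the data. The sketch below isolates that loop. `ReputSketch` and `scan` are hypothetical names, a zero length prefix stands in for the end-of-file marker (a simplification of the real format), and `rollNextFile` is written here as the arithmetic that rounds an offset up to the start of the next file, which is an assumption about what the real method computes.

```java
import java.nio.ByteBuffer;
import java.util.List;

/** Hypothetical scan loop over length-prefixed records in one file. */
final class ReputSketch {
    /** Start offset of the file that follows the one containing the given offset. */
    static long rollNextFile(long offset, int fileSize) {
        return offset + fileSize - offset % fileSize;
    }

    /**
     * Scans records from the buffer's current position, collects the size of every
     * record that would be dispatched, and returns the offset to continue from.
     */
    static long scan(ByteBuffer data, long reputFromOffset, int fileSize, List<Integer> dispatched) {
        while (data.remaining() >= 4) {
            int size = data.getInt(data.position());
            if (size > 0 && size <= data.remaining()) {
                // A complete record: dispatch it and move past it
                dispatched.add(size);
                data.position(data.position() + size);
                reputFromOffset += size;
            } else if (size == 0) {
                // No more records in this file: continue at the start of the next one
                return rollNextFile(reputFromOffset, fileSize);
            } else {
                // Corrupt or truncated record: stop and keep the current offset
                break;
            }
        }
        return reputFromOffset;
    }
}
```

For a 64-byte file that starts at offset 128 and holds two records of 16 and 24 bytes, the scan dispatches both records and returns 192, the start of the next file.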

The dispatch process for consumequeue and index, as well as the creation and warm-up of the commitlog's MappedFile, will be covered in their own chapters.

References

https://www.cnblogs.com/allenwas3/p/12218235.html