Deploying nfs-provisioner (external-storage-nfs)

- Create the working directory

```
$ mkdir -p /opt/k8s/nfs/data
```

- Pull the nfs-provisioner image and push it to your own private registry

```
$ docker pull fishchen/nfs-provisioner:v2.2.2
$ docker tag fishchen/nfs-provisioner:v2.2.2 192.168.0.107/k8s/nfs-provisioner:v2.2.2
$ docker push 192.168.0.107/k8s/nfs-provisioner:v2.2.2
```

- Write the deploy.yml manifest that starts nfs-provisioner

```
$ cd /opt/k8s/nfs
$ cat > deploy.yml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: Service
apiVersion: v1
metadata:
  name: nfs-provisioner
  labels:
    app: nfs-provisioner
spec:
  ports:
    - name: nfs
      port: 2049
    - name: mountd
      port: 20048
    - name: rpcbind
      port: 111
    - name: rpcbind-udp
      port: 111
      protocol: UDP
  selector:
    app: nfs-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: 192.168.0.107/k8s/nfs-provisioner:v2.2.2
          ports:
            - name: nfs
              containerPort: 2049
            - name: mountd
              containerPort: 20048
            - name: rpcbind
              containerPort: 111
            - name: rpcbind-udp
              containerPort: 111
              protocol: UDP
          securityContext:
            capabilities:
              add:
                - DAC_READ_SEARCH
                - SYS_RESOURCE
          args:
            - "-provisioner=myprovisioner.kubernetes.io/nfs"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: SERVICE_NAME
              value: nfs-provisioner
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: export-volume
              mountPath: /export
      volumes:
        - name: export-volume
          hostPath:
            path: /opt/k8s/nfs/data
EOF
```

* `volumes.hostPath` points at the data directory created above, which serves as the NFS export directory. Any Linux directory can be used here.

* `args: - "-provisioner=myprovisioner.kubernetes.io/nfs"` sets the provisioner name. It must match the `provisioner` value in the StorageClass created later.

- Write the RBAC manifest that lets the provisioner create PVs automatically

```
$ cd /opt/k8s/nfs
$ cat > rbac.yml << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io
EOF
```

- Write the manifest that creates the StorageClass

```
$ cd /opt/k8s/nfs
$ cat > class.yml << EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
provisioner: myprovisioner.kubernetes.io/nfs
mountOptions:
  - vers=4.1
EOF
```

* The `provisioner` value must be identical to the one passed via `-provisioner=` in deploy.yml above.
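Because the provisioner name has to agree between deploy.yml and class.yml, a small check before applying the manifests can catch a typo early. The following is only a sketch: the function names are made up for illustration, and it assumes the manifests are laid out as in this article (a quoted `-provisioner=` argument in deploy.yml and a top-level `provisioner:` key in class.yml).

```shell
# Extract the value passed to -provisioner= in a Deployment manifest.
# Assumes the argument is written as a double-quoted string.
provisioner_from_deploy() {
  sed -n 's/.*-provisioner=\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Extract the top-level "provisioner:" value from a StorageClass manifest.
provisioner_from_class() {
  sed -n 's/^provisioner:[[:space:]]*//p' "$1" | head -n 1
}

# Succeed only when both manifests name the same, non-empty provisioner.
check_provisioner_match() {
  d=$(provisioner_from_deploy "$1")
  c=$(provisioner_from_class "$2")
  if [ -n "$d" ] && [ "$d" = "$c" ]; then
    echo "OK: $d"
  else
    echo "MISMATCH: deploy='$d' class='$c'" >&2
    return 1
  fi
}
```

After defining the functions, run `check_provisioner_match deploy.yml class.yml` from /opt/k8s/nfs.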

- Start nfs-provisioner

```
$ kubectl create -f deploy.yml -f rbac.yml -f class.yml
```
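Before moving on, it can help to confirm that the Deployment actually rolled out and that the StorageClass exists. A minimal sketch, assuming the resource names from the manifests above and the default namespace; the function name and the 120-second timeout are arbitrary choices, not something the original steps prescribe.

```shell
# Wait for the provisioner Deployment to finish rolling out,
# then list the pod and the StorageClass created above.
verify_provisioner() {
  kubectl rollout status deployment/nfs-provisioner --timeout=120s || return 1
  kubectl get pods -l app=nfs-provisioner -o wide
  kubectl get storageclass example-nfs
}
```

Call `verify_provisioner` after `kubectl create`. If the rollout does not complete, `kubectl logs deployment/nfs-provisioner` is usually the next place to look.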

Verifying and using nfs-provisioner

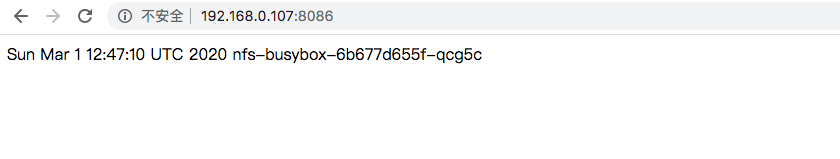

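The following simple example verifies the nfs-provisioner just created. It consists of two applications, busybox and a web server, that mount the same PVC: busybox writes content to the shared storage, and the web application reads that content and serves it as a page.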

- Write the PVC manifest

```
$ cd /opt/k8s/nfs
$ cat > claim.yml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs
spec:
  storageClassName: example-nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
EOF
```

* `storageClassName` is a field of `spec` and names the StorageClass created earlier.

* `accessModes: ReadWriteMany` allows multiple nodes to mount the volume for reading and writing.

* The claim requests 100Mi of storage.

- Create the PVC and check that the matching PV is created automatically

```
$ kubectl create -f claim.yml
$ kubectl get pvc
NAME   STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs    Bound    pvc-10a1a98c-2d0f-4324-8617-618cf03944fe   100Mi      RWX            example-nfs    11s
$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM         STORAGECLASS   REASON   AGE
pvc-10a1a98c-2d0f-4324-8617-618cf03944fe   100Mi      RWX            Delete           Bound    default/nfs   example-nfs             18s
```

* A PV named pvc-10a1a98c-2d0f-4324-8617-618cf03944fe was created automatically. Its STORAGECLASS is example-nfs and its claim is default/nfs.
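Provisioning takes a few seconds, so a script that creates the claim and immediately uses it may see the PVC still in `Pending`. One way to handle that is to poll the claim's phase until it reports `Bound`. This is a sketch only: the function name, retry count, and delay are illustrative assumptions.

```shell
# Poll a PVC until its phase is Bound.
# $1 = claim name, $2 = number of checks (default 30), $3 = seconds between checks (default 2)
wait_for_bound() {
  claim=$1
  tries=${2:-30}
  delay=${3:-2}
  i=0
  phase=""
  while [ "$i" -lt "$tries" ]; do
    phase=$(kubectl get pvc "$claim" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "claim $claim still in phase '$phase' after $tries checks" >&2
  return 1
}
```

For the claim in this article, `wait_for_bound nfs` returns once the PV shown above has been created. If it times out, the provisioner log is the place to check.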

- Start a busybox application that mounts the shared volume and writes data to it

  - Write the manifest

```
$ cd /opt/k8s/nfs
$ cat > deploy-busybox.yml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-busybox
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nfs-busybox
  template:
    metadata:
      labels:
        name: nfs-busybox
    spec:
      containers:
        - image: busybox
          command:
            - sh
            - -c
            - 'while true; do date > /mnt/index.html; hostname >> /mnt/index.html; sleep 20; done'
          imagePullPolicy: IfNotPresent
          name: busybox
          volumeMounts:
            # name must match the volume name below
            - name: nfs
              mountPath: "/mnt"
      volumes:
        - name: nfs
          persistentVolumeClaim:
            claimName: nfs
EOF
```

* `volumes.persistentVolumeClaim.claimName` is set to the PVC created above.

  - Start busybox

```
$ cd /opt/k8s/nfs
$ kubectl create -f deploy-busybox.yml
```

Check that index.html was created in the directory of the corresponding PV:

```
$ cd /opt/k8s/nfs
$ ls data/pvc-10a1a98c-2d0f-4324-8617-618cf03944fe/
index.html
$ cat data/pvc-10a1a98c-2d0f-4324-8617-618cf03944fe/index.html
Sun Mar 1 12:51:30 UTC 2020
nfs-busybox-6b677d655f-qcg5c
```

* The file was created under the PV's directory and the content was written correctly.
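The PV name is generated, so rather than copying it by hand it can be looked up from the claim. The sketch below assumes, as the listing above suggests, that the provisioner creates one subdirectory per PV under the export directory, and that the command runs on the node hosting /opt/k8s/nfs/data. The function names are hypothetical.

```shell
# Print the name of the PV bound to a PVC (empty until the claim is Bound).
pv_for_claim() {
  kubectl get pvc "$1" -o jsonpath='{.spec.volumeName}'
}

# Print the host directory backing a claim.
# $1 = claim name, $2 = export directory (defaults to the one used in this article)
claim_data_dir() {
  pv=$(pv_for_claim "$1") || return 1
  if [ -z "$pv" ]; then
    echo "claim $1 is not bound yet" >&2
    return 1
  fi
  echo "${2:-/opt/k8s/nfs/data}/$pv"
}
```

With these defined, `cat "$(claim_data_dir nfs)/index.html"` shows the same file as the commands above.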

- Start the web application (nginx) that reads the content of the shared mount

  - Write the manifest

```
$ cd /opt/k8s/nfs
$ cat > deploy-web.yml << EOF
apiVersion: v1
kind: Service
metadata:
  name: nfs-web
spec:
  type: NodePort
  selector:
    role: web-frontend
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 8086
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-web
spec:
  replicas: 2
  selector:
    matchLabels:
      role: web-frontend
  template:
    metadata:
      labels:
        role: web-frontend
    spec:
      containers:
        - name: web
          image: nginx:1.9.1
          ports:
            - name: web
              containerPort: 80
          volumeMounts:
            # name must match the volume name below
            - name: nfs
              mountPath: "/usr/share/nginx/html"
      volumes:
        - name: nfs
          persistentVolumeClaim:
            claimName: nfs
EOF
```

* `volumes.persistentVolumeClaim.claimName` is set to the PVC created above.

* `nodePort: 8086` lies outside the default NodePort range (30000-32767), so it is only accepted if the API server's `--service-node-port-range` has been widened to include it. Otherwise pick a port inside the default range.

  - Start the web application

```
$ cd /opt/k8s/nfs
$ kubectl create -f deploy-web.yml
```

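  - Open the page

* The content is read correctly. busybox rewrites the file every 20 seconds, so if you keep watching, the time shown on the page updates.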
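The same check can be done from a terminal by fetching the page twice, a little more than one write interval apart, and comparing the results. This is a sketch: the function name is made up, the 25-second default is an assumption chosen to exceed the 20-second write loop, and the node address in the usage line is a placeholder for one of your own nodes.

```shell
# Fetch a URL twice and succeed only if the body changed in between.
# $1 = URL, $2 = seconds to wait between fetches (default 25)
page_refreshes() {
  url=$1
  interval=${2:-25}
  first=$(curl -fsS "$url") || return 1
  sleep "$interval"
  second=$(curl -fsS "$url") || return 1
  printf '%s\n---\n%s\n' "$first" "$second"
  [ "$first" != "$second" ]
}
```

Usage, with `NODE_IP` standing in for the address of any cluster node: `page_refreshes "http://$NODE_IP:8086/"`.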

- Problem encountered

When following the steps on GitHub, no PV was created after the PVC was created. The nfs-provisioner log showed this error:

```
error syncing claim "20eddcd8-1771-44dc-b185-b1225e060c9d": failed to provision volume with StorageClass "example-nfs": error getting NFS server IP for volume: service SERVICE_NAME=nfs-provisioner is not valid; check that it has for ports map[{111 TCP}:true {111 UDP}:true {2049 TCP}:true {20048 TCP}:true] exactly one endpoint, this pod's IP POD_IP=172.30.22.3
```

The cause is described in issues/1262. After keeping only the ports named in the error message and removing all other ports from the manifest, provisioning worked.
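To see whether a Service matches what the error message asks for, its ports can be listed and compared against the four ports named in the message. The sketch below is an assumption-laden helper rather than anything shipped with nfs-provisioner: the function and variable names are made up, and the expected list is copied from the error text above.

```shell
# Ports the provisioner expects on its Service, taken from the error message,
# written as port/protocol and sorted the way `LC_ALL=C sort` orders them.
EXPECTED_PORTS="111/TCP
111/UDP
20048/TCP
2049/TCP"

# List a Service's ports as port/protocol, one per line, sorted.
service_ports() {
  kubectl get service "$1" \
    -o jsonpath='{range .spec.ports[*]}{.port}/{.protocol}{"\n"}{end}' | LC_ALL=C sort
}

# Succeed only if the Service exposes exactly the expected ports.
check_service_ports() {
  actual=$(service_ports "$1")
  if [ "$actual" = "$EXPECTED_PORTS" ]; then
    echo "ports OK"
  else
    echo "unexpected ports:" >&2
    echo "$actual" >&2
    return 1
  fi
}
```

Run `check_service_ports nfs-provisioner`. The message also requires exactly one endpoint with the pod's own IP, which `kubectl get endpoints nfs-provisioner` shows.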