Paper

Megastore: Providing Scalable, Highly Available Storage for Interactive Services

http://blog.sciencenet.cn/blog-449420-444736.html

1. INTRODUCTION

Interactive online services are forcing the storage community to meet new demands as desktop applications migrate to the cloud. Services like email, collaborative documents, and social networking have been growing exponentially and are testing the limits of existing infrastructure. Meeting these services' storage demands is challenging due to a number of conflicting requirements.

First, the Internet brings a huge audience of potential users, so the applications must be highly scalable. A service can be built rapidly using MySQL [10] as its datastore, but scaling the service to millions of users requires a complete redesign of its storage infrastructure.

Second, services must compete for users. This requires rapid development of features and fast time-to-market.

Third, the service must be responsive; hence, the storage system must have low latency.

Fourth, the service should provide the user with a consistent view of the data - the result of an update should be visible immediately and durably. Seeing edits to a cloud-hosted spreadsheet vanish, however briefly, is a poor user experience.

Finally, users have come to expect Internet services to be up 24/7, so the service must be highly available. The service must be resilient to many kinds of faults ranging from the failure of individual disks, machines, or routers all the way up to large-scale outages affecting entire datacenters.

These requirements are in conflict.

Relational databases provide a rich set of features for easily building applications, but they are difficult to scale to hundreds of millions of users.

NoSQL datastores such as Google's Bigtable [15], Apache Hadoop's HBase [1], or Facebook's Cassandra [6] are highly scalable, but their limited API and loose consistency models complicate application development.

Replicating data across distant datacenters while providing low latency is challenging, as is guaranteeing a consistent view of replicated data, especially during faults.

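The popularity of interactive services places new demands on existing storage systems, namely the five points above. A system that met all of them would be perfect.

Unfortunately, by the CAP theorem no existing system can satisfy every requirement. That is why so many different NoSQL systems have emerged: different scenarios call for different balances, and no storage system is one-size-fits-all.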

Megastore is a storage system developed to meet the storage requirements of today's interactive online services. It is novel in that it blends the scalability of a NoSQL datastore with the convenience of a traditional RDBMS. It uses synchronous replication to achieve high availability and a consistent view of the data. In brief, it provides fully serializable ACID semantics over distant replicas with low enough latencies to support interactive applications.

We accomplish this by taking a middle ground in the RDBMS vs. NoSQL design space: we partition the datastore and replicate each partition separately, providing full ACID semantics within partitions, but only limited consistency guarantees across them. We provide traditional database features, such as secondary indexes, but only those features that can scale within user-tolerable latency limits, and only with the semantics that our partitioning scheme can support. We contend that the data for most Internet services can be suitably partitioned (e.g., by user) to make this approach viable, and that a small, but not spartan, set of features can substantially ease the burden of developing cloud applications.

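Megastore is a new-generation storage system that Google developed for interactive services. It cannot meet all of the requirements above either, nor can it escape the CAP theorem; it simply finds a new balance between NoSQL and RDBMS.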

Contrary to conventional wisdom [24, 28], we were able to use Paxos [27] to build a highly available system that provides reasonable latencies for interactive applications while synchronously replicating writes across geographically distributed datacenters.

While many systems use Paxos solely for locking, master election, or replication of metadata and configurations, we believe that Megastore is the largest system deployed that uses Paxos to replicate primary user data across datacenters on every write. Megastore has been widely deployed within Google for several years [20]. It handles more than three billion write and 20 billion read transactions daily and stores nearly a petabyte of primary data across many global datacenters.

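Paxos is commonly used for distributed locking, master election, and replicating metadata and configuration. Megastore is, according to the authors, the largest deployed system that uses Paxos, and it uses Paxos to replicate every data write across datacenters.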

The key contributions of this paper are:

1. the design of a data model and storage system that allows rapid development of interactive applications where high availability and scalability are built-in from the start;

2. an implementation of the Paxos replication and consensus algorithm optimized for low-latency operation across geographically distributed datacenters to provide high availability for the system;

3. a report on our experience with a large-scale deployment of Megastore at Google.

2. TOWARD AVAILABILITY AND SCALE

In contrast to our need for a storage platform that is global, reliable, and arbitrarily large in scale, our hardware building blocks are geographically confined, failure-prone, and suffer limited capacity. We must bind these components into a unified ensemble offering greater throughput and reliability.

To do so, we have taken a two-pronged approach:

- for availability, we implemented a synchronous, fault-tolerant log replicator optimized for long-distance links;

- for scale, we partitioned data into a vast space of small databases, each with its own replicated log stored in a per-replica NoSQL datastore.

2.1 Replication

Replicating data across hosts within a single datacenter improves availability by overcoming host-specific failures, but with diminishing returns. We still must confront the networks that connect them to the outside world and the infrastructure that powers, cools, and houses them. Economically constructed sites risk some level of facility-wide outages [25] and are vulnerable to regional disasters. For cloud storage to meet availability demands, service providers must replicate data over a wide geographic area.

2.1.1 Strategies

We evaluated common strategies for wide-area replication:

Asynchronous Master/Slave

A master node replicates write-ahead log entries to at least one slave. Log appends are acknowledged at the master in parallel with transmission to slaves. The master can support fast ACID transactions but risks downtime or data loss during failover to a slave. A consensus protocol is required to mediate mastership.

Synchronous Master/Slave

A master waits for changes to be mirrored to slaves before acknowledging them, allowing failover without data loss. Master and slave failures need timely detection by an external system.

Optimistic Replication

Any member of a homogeneous replica group can accept mutations [23], which are asynchronously propagated through the group. Availability and latency are excellent. However, the global mutation ordering is not known at commit time, so transactions are impossible.

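There are essentially three approaches to replica synchronization, as listed above: asynchronous master/slave, synchronous master/slave, and optimistic replication.

In a master/slave architecture, all writes go through the master, so there are no write-write conflicts; the drawbacks are heavy load on the master and a single point of failure. The main concern is therefore write-read conflicts.

With asynchronous master/slave, the master returns as soon as its own write succeeds and the slaves are synchronized asynchronously. This gives low latency, but write-read conflicts are severe, and asynchronous synchronization can lose data.

Synchronous master/slave ensures that every replica is written successfully, which avoids write-read conflicts but inevitably raises latency.

Optimistic replication is the Dynamo architecture: there is no master, any replica can accept updates, and updates propagate via a gossip protocol. The problem is that it achieves only eventual consistency and suffers read-modify-write conflicts, that is, write-write conflicts.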
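To make the data-loss difference between the two master/slave strategies concrete, here is a minimal Python sketch. The `Replica` class and the `async_write` / `sync_write` helpers are hypothetical names invented for illustration; they model only when a write is acknowledged, not any real replication protocol.

```python
class Replica:
    """A node holding an ordered write-ahead log (illustrative only)."""
    def __init__(self):
        self.log = []


def async_write(master, pending, entry):
    """Acknowledge as soon as the master has the entry.

    The entry is queued in `pending` for later shipment to the slave.
    """
    master.log.append(entry)
    pending.append(entry)
    return True  # acknowledged before the slave has seen the entry


def sync_write(master, slave, entry):
    """Acknowledge only after the slave has mirrored the entry."""
    master.log.append(entry)
    slave.log.append(entry)
    return True


# Asynchronous case: the master fails before the pending entry is shipped,
# so the acknowledged write is missing from the slave that takes over.
m1, s1, pending = Replica(), Replica(), []
async_write(m1, pending, "x=1")
lost_async = [e for e in m1.log if e not in s1.log]

# Synchronous case: every acknowledged write is already on the slave.
m2, s2 = Replica(), Replica()
sync_write(m2, s2, "x=1")
lost_sync = [e for e in m2.log if e not in s2.log]
```

In the asynchronous run, `lost_async` contains the acknowledged write that never reached the slave; in the synchronous run, `lost_sync` is empty, at the cost of waiting for the slave on every write.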

We avoided strategies which could lose data on failures, which are common in large-scale systems.

We also discarded strategies that do not permit ACID transactions. Despite the operational advantages of eventually consistent systems, it is currently too difficult to give up the read-modify-write idiom in rapid application development.

We also discarded options with a heavyweight master. Failover requires a series of high-latency stages often causing a user-visible outage, and there is still a huge amount of complexity. Why build a fault-tolerant system to arbitrate mastership and failover workflows if we could avoid distinguished masters altogether?

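This first rules out asynchronous master/slave and optimistic replication; since a heavyweight master must also be avoided, what remains is masterless synchronous replication.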

2.1.2 Enter Paxos

We decided to use Paxos, a proven, optimal, fault-tolerant consensus algorithm with no requirement for a distinguished master [14, 27]. We replicate a write-ahead log over a group of symmetric peers. Any node can initiate reads and writes.

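Paxos is a consensus algorithm that needs no master. When Megastore uses it to synchronize replicas, any node can initiate reads and writes.

For background on this section, see the article "Nosql数据一致性技术概要" (An Overview of NoSQL Data Consistency Techniques).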

Each log append blocks on acknowledgments from a majority of replicas, and replicas in the minority catch up as they are able - the algorithm's inherent fault tolerance eliminates the need for a distinguished “failed” state. A novel extension to Paxos, detailed in Section 4.4.1, allows local reads at any up-to-date replica. Another extension permits single-roundtrip writes.
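The majority-acknowledgment rule in the paragraph above can be sketched as follows. This is not Paxos: it omits proposal numbers, the prepare/accept phases, and the handling of entries written to only a minority of replicas. The dictionary layout and the function names `append_with_majority` and `catch_up` are assumptions made for illustration.

```python
def append_with_majority(replicas, position, entry):
    """Write `entry` at `position` on every reachable replica.

    Returns True only if a strict majority of all replicas acknowledged.
    Each replica is a dict: {"up": bool, "log": {position: entry}}.
    """
    acks = 0
    for replica in replicas:
        if replica["up"]:
            replica["log"][position] = entry
            acks += 1
    return acks > len(replicas) // 2


def catch_up(lagging, current):
    """Copy log entries the lagging replica is missing from a current one."""
    for position, entry in current["log"].items():
        lagging["log"].setdefault(position, entry)


# Three replicas, one unreachable: the append still commits.
replicas = [
    {"up": True, "log": {}},
    {"up": True, "log": {}},
    {"up": False, "log": {}},
]
committed = append_with_majority(replicas, 0, "put k=v")

# The minority replica later comes back and catches up on its own schedule.
replicas[2]["up"] = True
catch_up(replicas[2], replicas[0])
```

The point of the sketch is that the lagging replica never enters a special "failed" state: it simply misses an append and fills the gap later.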

Even with fault tolerance from Paxos, there are limitations to using a single log. With replicas spread over a wide area, communication latencies limit overall throughput. Moreover, progress is impeded when no replica is current or a majority fail to acknowledge writes. In a traditional SQL database hosting thousands or millions of users, using a synchronously replicated log would risk interruptions of widespread impact [11]. So to improve availability and throughput we use multiple replicated logs, each governing its own partition of the data set.

2.2 Partitioning and Locality

To scale our replication scheme and maximize performance of the underlying datastore, we give applications fine-grained control over their data's partitioning and locality.

2.2.1 Entity Groups

To scale throughput and localize outages, we partition our data into a collection of entity groups [24], each independently and synchronously replicated over a wide area.

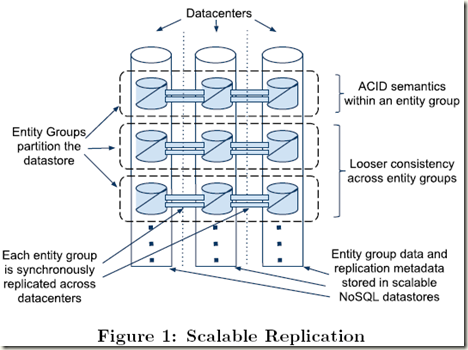

The underlying data is stored in a scalable NoSQL datastore in each datacenter (see Figure 1).

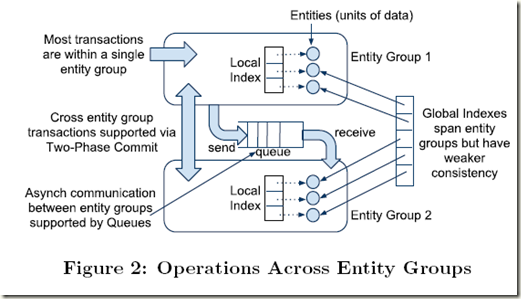

Entities within an entity group are mutated with single-phase ACID transactions (for which the commit record is replicated via Paxos).

Operations across entity groups could rely on expensive two-phase commits, but typically leverage Megastore's efficient asynchronous messaging.

A transaction in a sending entity group places one or more messages in a queue; transactions in receiving entity groups atomically consume those messages and apply ensuing mutations.

Note that we use asynchronous messaging between logically distant entity groups, not physically distant replicas.

All network traffic between datacenters is from replicated operations, which are synchronous and consistent.

Indexes local to an entity group obey ACID semantics; those across entity groups have looser consistency. See Figure 2 for the various operations on and between entity groups.
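The asynchronous messaging pattern between entity groups can be sketched in a few lines of Python. The `EntityGroup` class, its `inbox` queue, and the two transaction functions are hypothetical; where Megastore actually stores the queue and how it guarantees atomicity are not modeled here.

```python
from collections import deque


class EntityGroup:
    """A partition with its own data and a queue of incoming messages."""
    def __init__(self, name):
        self.name = name
        self.data = {}
        self.inbox = deque()


def send_transaction(sender, receiver, key, value, message):
    """One transaction in the sending group.

    Applies a local mutation and enqueues a (key, value) message
    for the receiving group.
    """
    sender.data[key] = value
    receiver.inbox.append(message)


def receive_transaction(receiver):
    """One transaction in the receiving group.

    Consumes all queued messages and applies the mutations they carry.
    Returns the number of messages applied.
    """
    applied = 0
    while receiver.inbox:
        key, value = receiver.inbox.popleft()
        receiver.data[key] = value
        applied += 1
    return applied


profile = EntityGroup("profile:alice")
blog = EntityGroup("blog:travel")

send_transaction(profile, blog, "last_post", "hello", ("latest_author", "alice"))
blog_before = dict(blog.data)   # the receiver has not yet seen the change
applied = receive_transaction(blog)
```

Between the two transactions the receiving group is stale (`blog_before` is empty), which is the looser cross-group consistency the text describes.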

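The key to Megastore is the entity group (EG), which serves as the basic unit of strong consistency.

For any Paxos-based strong-consistency scheme, response time is bound to be a major problem, so strong consistency cannot be provided for all data; a balance is needed.

The strategy is partitioning: split the large data set into smaller EGs, guarantee synchronous strong consistency only within an EG, and settle for weaker, asynchronous consistency between EGs. Cross-EG operations use two-phase commit or message queues, so consistency across EGs can take longer to reach.

Indexes work the same way: a local index inside an EG obeys ACID semantics, while global indexes across EGs offer only weak consistency.

The discussion above of operations within and between EGs concerns a single data center. A distributed system must of course also keep multiple replicas across multiple data centers.

Synchronization of any EG across its replicas in multiple data centers is always strongly consistent, enforced by Paxos.

See Figure 1: although the replicas sit in different data centers, they conceptually form the same EG, so they must be kept synchronously and strongly consistent.

Updates that span multiple EGs are asynchronous and may take a long time to complete, but for each EG involved, all of its replicas are still updated synchronously.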

2.2.2 Selecting Entity Group Boundaries

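Choosing EG boundaries is therefore very important. If an EG is too large, a lot of unrelated data must be updated serially, which greatly reduces throughput.

If EGs are too small, there are many cross-EG operations, and Megastore's strengths go unused.

Some web applications have good natural boundaries. In email, for example, each email account becomes one EG with synchronous strong consistency. Between EGs, for instance when one user sends mail to another, asynchronous consistency is used, and a short delay is perfectly acceptable.

For blogs and maps, dividing data into EGs is more complicated, but the criterion is the same: high cohesion, low coupling.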

The entity group defines the a priori grouping of data for fast operations. Boundaries that are too fine-grained force excessive cross-group operations, but placing too much unrelated data in a single group serializes unrelated writes, which degrades throughput.

The following examples show ways applications can work within these constraints:

Email: Each email account forms a natural entity group.

Operations within an account are transactional and consistent: a user who sends or labels a message is guaranteed to observe the change despite possible fail-over to another replica. External mail routers handle communication between accounts.

Blogs: A blogging application would be modeled with multiple classes of entity groups.

Each user has a profile, which is naturally its own entity group.

However, blogs are collaborative and have no single permanent owner. We create a second class of entity groups to hold the posts and metadata for each blog.

A third class keys off the unique name claimed by each blog. The application relies on asynchronous messaging when a single user operation affects both blogs and profiles.

For a lower-traffic operation like creating a new blog and claiming its unique name, two-phase commit is more convenient and performs adequately.

Maps: Geographic data has no natural granularity of any consistent or convenient size.

A mapping application can create entity groups by dividing the globe into non-overlapping patches. For mutations that span patches, the application uses two-phase commit to make them atomic. Patches must be large enough that two-phase transactions are uncommon, but small enough that each patch requires only a small write throughput. Unlike the previous examples, the number of entity groups does not grow with increased usage, so enough patches must be created initially for sufficient aggregate throughput at later scale.
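One way to picture the patch scheme is a fixed latitude/longitude grid, sketched below. The paper does not say how patches are shaped, so the grid, the `patch_degrees` parameter, and both function names are assumptions for illustration only.

```python
import math


def patch_key(lat, lon, patch_degrees=1.0):
    """Map a coordinate to the (row, col) of the grid patch containing it.

    Patches are non-overlapping squares of `patch_degrees` on a side.
    """
    row = math.floor((lat + 90.0) / patch_degrees)
    col = math.floor((lon + 180.0) / patch_degrees)
    return (row, col)


def needs_two_phase_commit(points, patch_degrees=1.0):
    """True if a mutation touching `points` spans more than one patch."""
    keys = {patch_key(lat, lon, patch_degrees) for lat, lon in points}
    return len(keys) > 1


# Two points inside the same one-degree patch: a single-group transaction.
same_patch = needs_two_phase_commit([(0.5, 0.5), (0.5, 0.7)])

# Two points in adjacent patches: the mutation spans entity groups.
cross_patch = needs_two_phase_commit([(0.5, 0.5), (1.5, 0.5)])
```

Raising `patch_degrees` makes cross-patch mutations rarer but concentrates more writes in each entity group, which is the trade-off the paragraph above describes.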

Nearly all applications built on Megastore have found natural ways to draw entity group boundaries.

2.2.3 Physical Layout

We use Google's Bigtable [15] for scalable fault-tolerant storage within a single datacenter, allowing us to support arbitrary read and write throughput by spreading operations across multiple rows.

Transaction Mechanism of the Google Megastore System

http://www.dedecms.com/knowledge/data-base/nosql/2012/0820/8962.html

Comparing Google Megastore

http://glinden.blogspot.com/2011/02/comparing-google-megastore.html


A small pile of Googlers recently presented a paper, "Megastore: Providing Scalable, Highly Available Storage for Interactive Services" (PDF) that details the architecture of a major distributed database used at Google.

Megastore "has been widely deployed within Google for several years ... handles more than three billion write and 20 billion read transactions daily ... [and] stores nearly a petabyte ... across many datacenters."

Others have already summarized the paper ([1] [2]), so I'll focus on my reaction to it. What I found surprising about Megastore, especially when comparing to other large scale databases, is that it favors consistency over performance.

For consistency, Megastore provides "full ACID semantics within partitions", "supports two-phase commit across entity groups", guarantees that reads always see the last write, uses Paxos for confirming consensus among replicas, and uses distributed locks between "coordinator processes" as part of detecting failures. This is all unusually strong compared to the more casual eventual consistency offered by databases like Amazon's Dynamo, Yahoo's HBase, and Facebook's Cassandra.

The problem with providing Megastore's level of consistency is performance. The paper mostly describes Megastore's performance in sunny terms, but, when you look at the details, it does not compare favorably with other databases. Megastore has "average read latencies of tens of milliseconds" and "average write latencies of 100-400 milliseconds". In addition, Megastore has a limit of "a few writes per second per entity group" because higher write rates will cause conflicts, retries, and even worse performance.

By comparison, Facebook expects 4ms reads and 5ms writes on their database, so Megastore is an order of magnitude or two slower than what Facebook developers appear to be willing to tolerate.

Google application developers seem to find the latency to be a hassle as well. The paper says that Googlers find the latency "tolerable" but often have to "hide write latency from users" and "choose entity groups carefully".

This makes me wonder if Google has made the right tradeoff here. Is it really easier for application developers to deal with high latency all of the time than to almost always have low latency but have to worry more about consistency issues? Most large scale databases have made the choice the other way. Quite surprising.

Update: Googler DeWitt Clinton writes with a good point: "We build on top of Megastore when we require some of those characteristics (availability, consistency), and to Bigtable directly when we require low latency and high throughput instead. So it's up to the application to decide what tradeoffs to make, definitely not one-size-fits-all."

Google Megastore - 3 Billion Writes and 20 Billion Read Transactions Daily

Google Megastore: The Data Engine Behind GAE

http://perspectives.mvdirona.com/2011/01/09/GoogleMegastoreTheDataEngineBehindGAE.aspx