要解决的问题就是对于云原生数据库,越来越大的meta应该怎么管理

传统的数据库,都是将catalog存在系统表里面

大数据系统,比如Colossus将meta存在Big table里面;Hadoop生态有Hive metastore

Delta lake用事务log的方式来记录meta

并且对于AP场景,meta不仅仅记录catalog,而记录统计数据来支持data skip,这导致meta大幅度的膨胀

针对上述各种方案的限制,本文想提出的是一种大规模的元数据管理方案

RELATED WORK

The idea of storing metadata inside the system itself has been employed by many storage and database systems.

Databases such as SQL Server, Oracle, Postgres all maintain a system catalog which is a set of tables that contain information about objects, constraints, data types and configuration settings.

Google’s distributed file system, Colossus [15] stores its metadata in BigTable [5], a key value store built on top of it.

The idea of using min-max values for partition elimination was proposed in [16].

Most databases such as Vertica [12], SQLServer [2], Netezza, SAP Hana, MonetDB, and Vectorwise use this technique.

DB2 BLU [19] creates internal tables called synopsis tables that store some column level metadata about the tables.

Our system tables are similar in spirit to this, yet it stores much richer column level metadata beyond min-max values.

More importantly, their system relies on the synopsis table being in memory for performance acceleration.

In contrast, our approach works for large amounts of metadata needed to support arbitrarily large tables.

Our approach doesn’t require metadata to be as compact, since it is not dependent on in-memory storage.

Metadata scalability has been a topic of interest in open source systems.

Hive metastore [9], a metadata repository for Hive [4] tables and partitions, can be configured to run on various relational databases.

Varying scalability limits exist around the number of partitions that can be queried in a single query in order to prevent overload.

Our approach uses distributed processing to avoid any scalability bottlenecks and single points of coordination for reading metadata during query processing.

Delta lake [3] uses a metadata management system that implements a transaction log compacted into Parquet format.

Our system is similar to it in terms of columnar metadata layout.

Our system intertwines the access of metadata into the query by simply treating it like another data table.

这里给出Bigquery的一个例子,对于生成的物理计划是动态的,

这个查询会分成3个stage,每个stage的cost决定了物理Operator的选择,而cost又依赖于meta中的统计数据。

Query plan can be described as a DAG (directed acyclic graph) of stages,

where each stage is replicated across a number of workers which run the same set of operators over different pieces of data.

The number of workers running for the given stage is the stage’s degree of parallelism.

Physical query plans in BigQuery are dynamic.

The Query Coordinator builds an initial plan, but as the query starts execution, the query plan starts changing based on the actual data statistics observed during the execution.

These statistics include: total amount of data flowing between the stages, number of rows for each table, data distribution and skew etc.

These statistics affect both stage’s degree of parallelism and choice of physical operators, e.g. shuffled vs. broadcast join.

这里进一步分析Meta,分为

逻辑Meta,就是传统的Catalog,这部分比较小。

物理Meta,row counts,列统计数据等,会很大,可能达到几十TB级别。 这是我们研究的对象

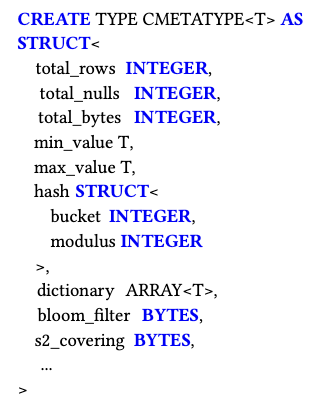

论文中称为,CMeta,包含range constraints,hash contraints,字典等。

We classify storage metadata into two broad categories: Logical metadata and Physical metadata.

Logical metadata is information about the table that is generally directly visible to the user.

Some examples of such metadata are: Table schema, Partitioning and Clustering specifications, column and row level ACLs.

This information is generally small in size and lends itself to quick access.

Physical metadata is information about the table’s storage that BigQuery maintains internally in order to map a table name to its actual data.

Examples of such information are: Locations of the blocks in the file system, row counts, lineage of data in the blocks,

MVCC information, statistics and properties of column values within each block.

If C is the number of columns in a table and N is the number of blocks, the cardinality of column metadata is O(C × N).

With C = 10K and N being of the order of millions, it is possible to have tens of TB of metadata.

It is clear that physical metadata, while being extremely valuable for query execution, is not easily accessible.

In the rest of this paper, we focus on physical metadata, which we simply refer to as “metadata”.

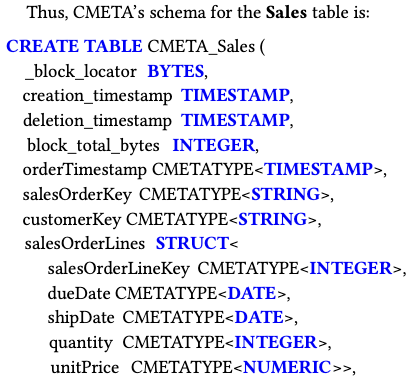

To solve this, we organize the physical metadata of each table as a set of system tables that are derived from the original table.

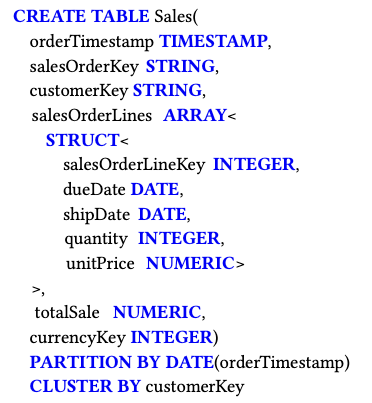

To illustrate our idea, we describe the metadata layout using one such system table

(hereafter referred to as CMETA) that stores column level information about the min/max values (called range constraints),

hash bucket and modulus values (called hash constraints) and a dictionary of column values.

Other variants of system tables include those that store posting lists of column values.

Query optimizer chooses one or more such system tables for planning and executing the query.

CMETA的定义可以如下,包含一堆的统计数据,一个CMETA对应于一个column,

那么之前的这张表就可以建立对应的Meta表,

记录了,block locator,时间戳,block total bytes,还有每个column对应的CMeta

首先变更的单位是block,block中有数据被更新,就会生成新的block,并删除旧的block

Meta更新会记录change log到高可靠的存储中,后台进程会以LSM的方式,不断的Merge这些deltas到baseline

任何读时刻,table的meta都可以通过baseline+delta得到

这个方案,和DeltaLake几乎一样

Mutations (such as DML) that write to the original table, create new blocks of data and/or mutate existing blocks of data.

Thus, in order for CMETA to be the source of truth for a table’s metadata, it needs to be updated whenever the table is mutated.

For simplicity of exposition, we consider the block as the unit of mutation.

In other words, when data is modified, it is rewritten at block granularity.

Modification of any rows inside a block will cause a new block to be created with the unmodified and modified rows while simultaneously marking the old block as deleted.

In reality, BigQuery contains blocks where only a subset of rows may be active at any given timestamp.

It is easy to see that our design below works for partial blocks as well.

A metadata change log is used to journal the history of mutations and additions of blocks.

When a new block is created, we gather the properties of the block, assign a creation timestamp

(this is the commit timestamp of the operation that is responsible for creation of this block) and write an entry to the metadata change log.

When a block needs to be deleted, we write a log entry with the deletion timestamp to the log.

This change log is written to a highly available, durable replicated storage system.

Operations on table may create and/or mutate millions of blocks, and the metadata change log guarantees ACID properties for these mutations.

A background process constantly performs LSM[17] style merges on the change log to produce baselines and deltas of changes.

These baselines and deltas are produced as columnar capacitor blocks with the aforementioned schema.

Note that baselines and deltas are merged incrementally based on load and write rate to the change log.

At any given read timestamp, the table’s metadata can be constructed by reading the baseline available at that timestamp and any deltas from the baseline up to the read timestamp.

Our incremental generation also works with high throughput streaming ingestion as well.

Data is received by ingestion servers and persisted to a replicated write ahead log in Colossus.

The most recently streamed data is optimized by compacting into capacitor blocks continuously.

Fine grained metadata for rows that have not yet been compacted into capacitor blocks is maintained in the memory of ingestion servers.

然后在查询的时候,可以直接将对原表的查询和Meta表的查询进行join

这个看着很牛逼,

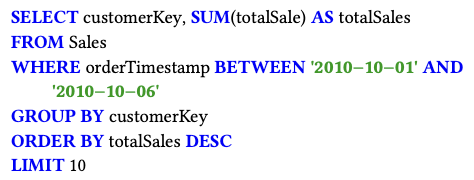

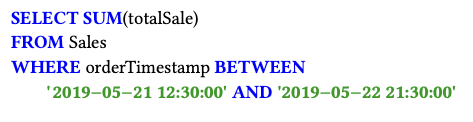

比如对于这样的查询,

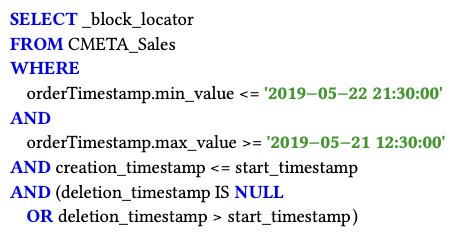

对应的查META的SQL,

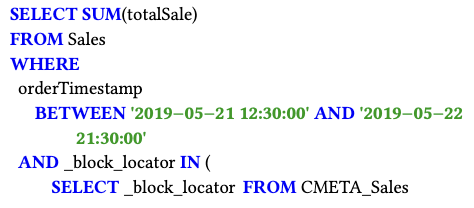

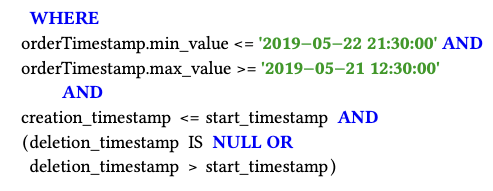

合一块,就这样,

这里提出了一种概念“falsifiable expression”,当这个expression为true时,这个block可以不用scan

并且提出,两种标准来衡量这个expression,复杂度和tightness

啥意思?底下给个例子,对于x=c这个条件,可以生成各种falsifiable expression

需要挑选,复杂度尽量高的,tightness范围尽量精确的

然后提出这样的标准后,论文后面给出大段的内容如何从filter condition推导出相应的falsifiable expression,这里就省略了

Partition elimination is a popular technique to improve query performance,

by inspecting the filter condition and eliminating scan of the partitions which cannot possibly satisfy the filter condition [16].

Typically, partition elimination is limited to simple comparison predicates between single column and constants and checking constants against min/max values of the block.

In this section we are going to present a generalized framework that applies to a rich set of complex expressions, and can take advantage of a variety of column statistics.

Our approach is based on the notion of a falsifiable expression which is a boolean expression derived from the query filters. It satisfies the following property:

For a given block, if the falsifiable expression evaluates to true, that block does not need to be scanned by the original query.

For any given filter condition, there exist many possible falsifiable expressions. We determine the “quality" of falsifiable expressions using the following two criteria:

-

Complexity of expression: The formal definition of expression complexity is beyond the scope of this paper, but informally x = ‘a’ is simpler than x LIKE ‘a%’

-

Tightness: Falsifiable expression may (and in practice will) have false negatives.

i.e., there will be values for which it will evaluate to - causing the block to be scanned.

However, subsequent application of the filter condition will return for all values in the block.

Falsifiable expression which doesn’t have false negatives is tight, while a falsifiable expression with false positives is loose.

Our algorithm prefers falsifiable expressions which are less complex but tighter.

As an example, consider filter condition = , and block containing values 1, 2, ..., .

Then the falsifiable expression <> 1 AND <> 2 AND...AND <> is tight, but potentially very complex for high values of N.

The falsifiable expression c NOT IN bloom_filter(1, ... ) is simpler, but less tight.

The falsifiable expression c > max(1, ..., ) OR c < min(1, ..., ) is potentially even less complex, but is looser.