参考,https://matt33.com/2019/03/17/apache-calcite-planner/

Volcano模型使用,分为下面几个步骤,

//1. 初始化 VolcanoPlanner planner = new VolcanoPlanner(); //2.addRelTrait planner.addRelTraitDef(ConventionTraitDef.INSTANCE); planner.addRelTraitDef(RelDistributionTraitDef.INSTANCE); //3.添加rule, logic to logic planner.addRule(FilterJoinRule.FilterIntoJoinRule.FILTER_ON_JOIN); planner.addRule(ReduceExpressionsRule.PROJECT_INSTANCE); //4.添加ConverterRule, logic to physical planner.addRule(EnumerableRules.ENUMERABLE_MERGE_JOIN_RULE); planner.addRule(EnumerableRules.ENUMERABLE_SORT_RULE); //5. setRoot 方法注册相应的RelNode planner.setRoot(relNode); //6. find best plan relNode = planner.findBestExp();

1和2 初始化

addRelTraitDef,就是把traitDef加到这个结构里面

/** * Holds the currently registered RelTraitDefs. */ private final List<RelTraitDef> traitDefs = new ArrayList<>();

3. 增加Rule

public boolean addRule(RelOptRule rule) { //加到ruleSet final boolean added = ruleSet.add(rule); mapRuleDescription(rule); // Each of this rule's operands is an 'entry point' for a rule call. // Register each operand against all concrete sub-classes that could match // it. for (RelOptRuleOperand operand : rule.getOperands()) { for (Class<? extends RelNode> subClass : subClasses(operand.getMatchedClass())) { classOperands.put(subClass, operand); } } // If this is a converter rule, check that it operates on one of the // kinds of trait we are interested in, and if so, register the rule // with the trait. if (rule instanceof ConverterRule) { ConverterRule converterRule = (ConverterRule) rule; final RelTrait ruleTrait = converterRule.getInTrait(); final RelTraitDef ruleTraitDef = ruleTrait.getTraitDef(); if (traitDefs.contains(ruleTraitDef)) { ruleTraitDef.registerConverterRule(this, converterRule); } } return true; }

a. 更新classOperands

记录Relnode和Rule的match关系,

multimap,一个relnode可以对应于多条rule的operand

/** * Operands that apply to a given class of {@link RelNode}. * * <p>Any operand can be an 'entry point' to a rule call, when a RelNode is * registered which matches the operand. This map allows us to narrow down * operands based on the class of the RelNode.</p> */ private final Multimap<Class<? extends RelNode>, RelOptRuleOperand> classOperands = LinkedListMultimap.create();

首先取出rule的operands,

operands包含所有rule中的operand,以flatten的方式

/** * Flattened list of operands. */ public final List<RelOptRuleOperand> operands; /** * Creates a flattened list of this operand and its descendants in prefix * order. * * @param rootOperand Root operand * @return Flattened list of operands */ private List<RelOptRuleOperand> flattenOperands( RelOptRuleOperand rootOperand) { final List<RelOptRuleOperand> operandList = new ArrayList<>(); // Flatten the operands into a list. rootOperand.setRule(this); rootOperand.setParent(null); rootOperand.ordinalInParent = 0; rootOperand.ordinalInRule = operandList.size(); operandList.add(rootOperand); flattenRecurse(operandList, rootOperand); return ImmutableList.copyOf(operandList); } /** * Adds the operand and its descendants to the list in prefix order. * * @param operandList Flattened list of operands * @param parentOperand Parent of this operand */ private void flattenRecurse( List<RelOptRuleOperand> operandList, RelOptRuleOperand parentOperand) { int k = 0; for (RelOptRuleOperand operand : parentOperand.getChildOperands()) { operand.setRule(this); operand.setParent(parentOperand); operand.ordinalInParent = k++; operand.ordinalInRule = operandList.size(); operandList.add(operand); flattenRecurse(operandList, operand); } }

subClasses(operand.getMatchedClass()

取到operand自身的class,比如join

//classes保存plan中的RelNode的class private final Set<Class<? extends RelNode>> classes = new HashSet<>(); public Iterable<Class<? extends RelNode>> subClasses( final Class<? extends RelNode> clazz) { return Util.filter(classes, clazz::isAssignableFrom); }

所以这里的逻辑是,找出哪些Rule中operands的class和plan中的RelNode的class是可以匹配上的

把这个对应关系加到classOperands,有了这个关系,我们后面在遍历的时候,就知道有哪些rule和这个RelNode可能会match上,缩小搜索空间

b. 将conversion rule注册到RelTraitDef

5. SetRoot

public void setRoot(RelNode rel) { // We're registered all the rules, and therefore RelNode classes, // we're interested in, and have not yet started calling metadata providers. // So now is a good time to tell the metadata layer what to expect. registerMetadataRels(); this.root = registerImpl(rel, null); if (this.originalRoot == null) { this.originalRoot = rel; } // Making a node the root changes its importance. this.ruleQueue.recompute(this.root); ensureRootConverters(); }

核心调用是 registerImpl

/** * Registers a new expression <code>exp</code> and queues up rule matches. * If <code>set</code> is not null, makes the expression part of that * equivalence set. If an identical expression is already registered, we * don't need to register this one and nor should we queue up rule matches. * * @param rel relational expression to register. Must be either a * {@link RelSubset}, or an unregistered {@link RelNode} * @param set set that rel belongs to, or <code>null</code> * @return the equivalence-set */ private RelSubset registerImpl( RelNode rel, RelSet set) { //如果已经注册过,直接合并返回 if (rel instanceof RelSubset) { return registerSubset(set, (RelSubset) rel); } // Ensure that its sub-expressions are registered. // 1. 递归注册 rel = rel.onRegister(this); // 2. 记录下该RelNode是由哪个Rule Call产生的 if (ruleCallStack.isEmpty()) { provenanceMap.put(rel, Provenance.EMPTY); } else { final VolcanoRuleCall ruleCall = ruleCallStack.peek(); provenanceMap.put( rel, new RuleProvenance( ruleCall.rule, ImmutableList.copyOf(ruleCall.rels), ruleCall.id)); } // 3. 注册RelNode树的class和trait registerClass(rel); registerCount++; //4. 注册RelNode到RelSet final int subsetBeforeCount = set.subsets.size(); RelSubset subset = addRelToSet(rel, set); final RelNode xx = mapDigestToRel.put(key, rel); // 5. 更新importance if (rel == this.root) { ruleQueue.subsetImportances.put( subset, 1.0); // root的importance固定为1 } //把inputs也加入到RelSubset里面 for (RelNode input : rel.getInputs()) { RelSubset childSubset = (RelSubset) input; childSubset.set.parents.add(rel); // 由于调整了RelSubset结构,重新计算importance ruleQueue.recompute(childSubset); } // 6. Fire rules fireRules(rel, true); // It's a new subset. if (set.subsets.size() > subsetBeforeCount) { fireRules(subset, true); } return subset; }

5.1 rel = rel.onRegister(this)

onRegister,目的就是递归的对RelNode树上的每个节点调用registerImpl

取出RelNode的inputs,这里bottom up的,join的inputs就是left,right children

然后对于每个input,调用ensureRegistered

public RelNode onRegister(RelOptPlanner planner) { List<RelNode> oldInputs = getInputs(); List<RelNode> inputs = new ArrayList<>(oldInputs.size()); for (final RelNode input : oldInputs) { RelNode e = planner.ensureRegistered(input, null); } inputs.add(e); } RelNode r = this; if (!Util.equalShallow(oldInputs, inputs)) { r = copy(getTraitSet(), inputs); } r.recomputeDigest(); return r; }

ensureRegistered

public RelSubset ensureRegistered(RelNode rel, RelNode equivRel) { final RelSubset subset = getSubset(rel); if (subset != null) { if (equivRel != null) { final RelSubset equivSubset = getSubset(equivRel); if (subset.set != equivSubset.set) { merge(equivSubset.set, subset.set); } } return subset; } else { return register(rel, equivRel); } }

public RelSubset register( RelNode rel, RelNode equivRel) { final RelSet set; if (equivRel == null) { set = null; } else { set = getSet(equivRel); } //递归调用registerImpl final RelSubset subset = registerImpl(rel, set); return subset; }

5.2 RuleProvenance

记录下由那个Rule Call,产生这个RelNode;RuleCall可能为null

final Map<RelNode, Provenance> provenanceMap;

/** * A RelNode that came via the firing of a rule. */ static class RuleProvenance extends Provenance { final RelOptRule rule; final ImmutableList<RelNode> rels; final int callId; RuleProvenance(RelOptRule rule, ImmutableList<RelNode> rels, int callId) { this.rule = rule; this.rels = rels; this.callId = callId; } }

5.3 registerClass

将Relnode的Class和traits注册到相应的机构中,记录planner包含何种RelNode和Traits

private final Set<Class<? extends RelNode>> classes = new HashSet<>();

private final Set<RelTrait> traits = new HashSet<>();

public void registerClass(RelNode node) { final Class<? extends RelNode> clazz = node.getClass(); if (classes.add(clazz)) { onNewClass(node); } for (RelTrait trait : node.getTraitSet()) { if (traits.add(trait)) { trait.register(this); } } }

5.4 addRelToSet

RelSubset subset = addRelToSet(rel, set);

private RelSubset addRelToSet(RelNode rel, RelSet set) { RelSubset subset = set.add(rel); mapRel2Subset.put(rel, subset); // While a tree of RelNodes is being registered, sometimes nodes' costs // improve and the subset doesn't hear about it. You can end up with // a subset with a single rel of cost 99 which thinks its best cost is // 100. We think this happens because the back-links to parents are // not established. So, give the subset another change to figure out // its cost. final RelMetadataQuery mq = rel.getCluster().getMetadataQuery(); subset.propagateCostImprovements(this, mq, rel, new HashSet<>()); return subset; }

主要就是注册RelNode所对应的RelSubset

注意这里是IdentityHashMap,所以比较的是RelNode的reference,而不是hashcode,不同的RelNode对象,就会对应各自不同的RelSubset

因为一个RelNode对象一定在一个RelSet中,但是不同的RelSet中可能包含相同RelNode对象实例,比如都有join对象

/** * Map each registered expression ({@link RelNode}) to its equivalence set * ({@link RelSubset}). * * <p>We use an {@link IdentityHashMap} to simplify the process of merging * {@link RelSet} objects. Most {@link RelNode} objects are identified by * their digest, which involves the set that their child relational * expressions belong to. If those children belong to the same set, we have * to be careful, otherwise it gets incestuous.</p> */ private final IdentityHashMap<RelNode, RelSubset> mapRel2Subset = new IdentityHashMap<>();

propagateCostImprovements,因为RelSet发生变化,可能产生新的best cost,所以把当前的change告诉其他的节点,更新cost,看看是否产生新的best cost

final RelNode xx = mapDigestToRel.put(key, rel);

表示这个RelNode,已经完成注册,因为前面是通过这个Digest来判断是否注册过的

5.5 importance

importance用于表示RelSubset的优先级,优先级越高,越先进行优化

在RuleQueue里面,用这个结构来保存各个subset的importance

/** * The importance of each subset. */ final Map<RelSubset, Double> subsetImportances = new HashMap<>();

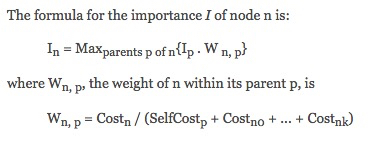

importance的计算方法如下,

Computes the importance of a node. Importance is defined as follows:

the root RelSubset has an importance of 1

其实很简单,

比如root cost 3,两个child的cost,2,5;而root的importance为1

那么两个child的importance就是,0.2和0.5

所以越top的节点,importance越大,cost越大的节点,importance越大

5.6 fireRules

这里的fireRules,一般都是选择DeferringRuleCall,所以不是马上执行rule的,因为那样比较低效,而是等真正需要的时候才去执行

/** * Fires all rules matched by a relational expression. * * @param rel Relational expression which has just been created (or maybe * from the queue) * @param deferred If true, each time a rule matches, just add an entry to * the queue. */ void fireRules( RelNode rel, boolean deferred) { for (RelOptRuleOperand operand : classOperands.get(rel.getClass())) { if (operand.matches(rel)) { final VolcanoRuleCall ruleCall; if (deferred) { ruleCall = new DeferringRuleCall(this, operand); } else { ruleCall = new VolcanoRuleCall(this, operand); } ruleCall.match(rel); } } }

classOperands里面保存,每个RelNode所匹配到的所有的RuleOperand

classOperands只是说明当前Operands和RelNode匹配,但是当前RelNode子树是否匹配Rule,需要进一步看

可以看到这里会Recurse的match,match的逻辑很长,这里就不看了

每匹配一次,solve+1,当solve == operands.size(),说明对整个Rule完成匹配

会调用onMatch

/** * Applies this rule, with a given relational expression in the first slot. */ void match(RelNode rel) { assert getOperand0().matches(rel) : "precondition"; final int solve = 0; int operandOrdinal = getOperand0().solveOrder[solve]; this.rels[operandOrdinal] = rel; matchRecurse(solve + 1); } /** * Recursively matches operands above a given solve order. * * @param solve Solve order of operand (> 0 and ≤ the operand count) */ private void matchRecurse(int solve) { assert solve > 0; assert solve <= rule.operands.size(); final List<RelOptRuleOperand> operands = getRule().operands; if (solve == operands.size()) { // We have matched all operands. Now ask the rule whether it // matches; this gives the rule chance to apply side-conditions. // If the side-conditions are satisfied, we have a match. if (getRule().matches(this)) { onMatch(); } } else {......}}

对于DeferringRuleCall,

onMatch的逻辑,就是封装成VolcanoRuleMatch,并丢到RuleQueue里面去

并没有真正的执行Rule的onMatch,这就是Deferring

其实RuleQueue,RuleMatch, Importance 这些概念都是为了实现Deferring创造出来的,如果直接fire,机制就很简单

/** * Rather than invoking the rule (as the base method does), creates a * {@link VolcanoRuleMatch} which can be invoked later. */ protected void onMatch() { final VolcanoRuleMatch match = new VolcanoRuleMatch( volcanoPlanner, getOperand0(), rels, nodeInputs); volcanoPlanner.ruleQueue.addMatch(match); }

6. findBestExp

public RelNode findBestExp() { int cumulativeTicks = 0; //总步数,tick代表优化一次,触发一个RuleMatch //这个for只会执行一次,因为只有Optimize phase里面加了RuleMatch,其他都是空的 //RuleQueue.addMatch中,phaseRuleSet != ALL_RULES 会过滤到其他的phase for (VolcanoPlannerPhase phase : VolcanoPlannerPhase.values()) { setInitialImportance(); //初始化impoartance RelOptCost targetCost = costFactory.makeHugeCost(); //目标cost,设为Huge int tick = 0; //如果for执行一次,等同于cumulativeTicks int firstFiniteTick = -1; //第一次找到可执行plan用的tick数 int giveUpTick = Integer.MAX_VALUE; //放弃优化的tick数 while (true) { ++tick; //开始一次优化,tick+1 ++cumulativeTicks; if (root.bestCost.isLe(targetCost)) { //如果bestcost < targetCost,说明找到可执行的计划 if (firstFiniteTick < 0) { //如果是第一次找到 firstFiniteTick = cumulativeTicks; //更新firstFiniteTick clearImportanceBoost(); //清除ImportanceBoost,RelSubset中有个field,boolean boosted,表示是否被boost } if (ambitious) { // 会试图找到更优的计划 targetCost = root.bestCost.multiplyBy(0.9); //适当降低targetCost //如果impatient,需要设置giveUpTick //giveUpTick初始MAX_VALUE,当成功找到一个计划后,才会设置成相应的值 if (impatient) { if (firstFiniteTick < 10) { //如果第一次找到计划,步数小于10 //下一轮如果25步找不到更优计划,放弃 giveUpTick = cumulativeTicks + 25; } else { //如果计划比较复杂,步数放宽些 giveUpTick = cumulativeTicks + Math.max(firstFiniteTick / 10, 25); } } } else { break; //非ambitious,有可用的计划就行,结束 } } else if (cumulativeTicks > giveUpTick) { //放弃优化 // We haven't made progress recently. Take the current best. break; } else if (root.bestCost.isInfinite() && ((tick % 10) == 0)) { //步数为整10,仍然没有找到可用的计划 //bestCost的初始值就是Infinite injectImportanceBoost(); //提高某些RelSubSet的Importance,加快cost降低 } VolcanoRuleMatch match = ruleQueue.popMatch(phase); //从RuleQueue中找到importance最大的Match if (match == null) { break; } match.onMatch(); //触发match // The root may have been merged with another // subset. Find the new root subset. root = canonize(root); } ruleQueue.phaseCompleted(phase); } RelNode cheapest = root.buildCheapestPlan(this); return cheapest; }

injectImportanceBoost

把仅仅包含Convention.NONE的RelSubSets的Importance提升,意思就让这些RelSubsets先被优化

/** * Finds RelSubsets in the plan that contain only rels of * {@link Convention#NONE} and boosts their importance by 25%. */ private void injectImportanceBoost() { final Set<RelSubset> requireBoost = new HashSet<>(); SUBSET_LOOP: for (RelSubset subset : ruleQueue.subsetImportances.keySet()) { for (RelNode rel : subset.getRels()) { if (rel.getConvention() != Convention.NONE) { continue SUBSET_LOOP; } } requireBoost.add(subset); } ruleQueue.boostImportance(requireBoost, 1.25); }

Convention.NONE,都是infinite cost,所以先优化他们会更有效的降低cost

public interface Convention extends RelTrait { /** * Convention that for a relational expression that does not support any * convention. It is not implementable, and has to be transformed to * something else in order to be implemented. * * <p>Relational expressions generally start off in this form.</p> * * <p>Such expressions always have infinite cost.</p> */ Convention NONE = new Impl("NONE", RelNode.class);

PopMatch

找出importance最大的match,并且返回

/** * Removes the rule match with the highest importance, and returns it. * * <p>Returns {@code null} if there are no more matches.</p> * * <p>Note that the VolcanoPlanner may still decide to reject rule matches * which have become invalid, say if one of their operands belongs to an * obsolete set or has importance=0. * * @throws java.lang.AssertionError if this method is called with a phase * previously marked as completed via * {@link #phaseCompleted(VolcanoPlannerPhase)}. */ VolcanoRuleMatch popMatch(VolcanoPlannerPhase phase) { PhaseMatchList phaseMatchList = matchListMap.get(phase); final List<VolcanoRuleMatch> matchList = phaseMatchList.list; VolcanoRuleMatch match; for (;;) { if (matchList.isEmpty()) { return null; } if (LOGGER.isTraceEnabled()) { //... } else { match = null; int bestPos = -1; int i = -1; //找出importance最大的match for (VolcanoRuleMatch match2 : matchList) { ++i; if (match == null || MATCH_COMPARATOR.compare(match2, match) < 0) { bestPos = i; match = match2; } } match = matchList.remove(bestPos); } if (skipMatch(match)) { LOGGER.debug("Skip match: {}", match); } else { break; } } // A rule match's digest is composed of the operand RelNodes' digests, // which may have changed if sets have merged since the rule match was // enqueued. match.recomputeDigest(); phaseMatchList.matchMap.remove( planner.getSubset(match.rels[0]), match); return match; }

onMatch

/** * Called when all operands have matched. */ protected void onMatch() { volcanoPlanner.ruleCallStack.push(this); try { getRule().onMatch(this); } finally { volcanoPlanner.ruleCallStack.pop(); } }

ruleCallStack记录当前在执行的RuleCall

最终调用到具体Rule的onMatch函数,做具体的转换

buildCheapestPlan

/** * Recursively builds a tree consisting of the cheapest plan at each node. */ RelNode buildCheapestPlan(VolcanoPlanner planner) { CheapestPlanReplacer replacer = new CheapestPlanReplacer(planner); final RelNode cheapest = replacer.visit(this, -1, null); return cheapest; }

可以看到逻辑其实比较简单,就是遍历RelSubSet树,然后从上到下都选best的RelNode形成新的树

/** * Visitor which walks over a tree of {@link RelSet}s, replacing each node * with the cheapest implementation of the expression. */ static class CheapestPlanReplacer { VolcanoPlanner planner; CheapestPlanReplacer(VolcanoPlanner planner) { super(); this.planner = planner; } public RelNode visit( RelNode p, int ordinal, RelNode parent) { if (p instanceof RelSubset) { RelSubset subset = (RelSubset) p; RelNode cheapest = subset.best; //取出SubSet中的best p = cheapest; //替换 } List<RelNode> oldInputs = p.getInputs(); List<RelNode> inputs = new ArrayList<>(); for (int i = 0; i < oldInputs.size(); i++) { RelNode oldInput = oldInputs.get(i); RelNode input = visit(oldInput, i, p); //递归执行visit inputs.add(input); //新的input } if (!inputs.equals(oldInputs)) { final RelNode pOld = p; p = p.copy(p.getTraitSet(), inputs); //生成新的p planner.provenanceMap.put( p, new VolcanoPlanner.DirectProvenance(pOld)); } return p; } } }

BestCost是如何变化的?

每个RelSubSet都会记录,

bestCost和bestPlan

/** * cost of best known plan (it may have improved since) */ RelOptCost bestCost; /** * The set this subset belongs to. */ final RelSet set; /** * best known plan */ RelNode best;

初始化

首先RelSubSet初始化的时候,会执行computeBestCost

private void computeBestCost(RelOptPlanner planner) { bestCost = planner.getCostFactory().makeInfiniteCost(); //bestCost初始化成,Double.POSITIVE_INFINITY final RelMetadataQuery mq = getCluster().getMetadataQuery(); for (RelNode rel : getRels()) { final RelOptCost cost = planner.getCost(rel, mq); if (cost.isLt(bestCost)) { bestCost = cost; best = rel; } } }

getCost

public RelOptCost getCost(RelNode rel, RelMetadataQuery mq) { if (rel instanceof RelSubset) { return ((RelSubset) rel).bestCost; //如果是RelSubSet直接返回结果,因为动态规划,重用之前的结果,不用反复算 } if (noneConventionHasInfiniteCost //Convention.NONE的cost为InfiniteCost,返回 && rel.getTraitSet().getTrait(ConventionTraitDef.INSTANCE) == Convention.NONE) { return costFactory.makeInfiniteCost(); } RelOptCost cost = mq.getNonCumulativeCost(rel); //算cost if (!zeroCost.isLt(cost)) { // cost must be positive, so nudge it cost = costFactory.makeTinyCost(); //如果算出负的cost,用TinyCost替代,1.0 } for (RelNode input : rel.getInputs()) { cost = cost.plus(getCost(input, mq)); //递归把整个数的cost都加到root } return cost; }

getNonCumulativeCost

/** * Estimates the cost of executing a relational expression, not counting the * cost of its inputs. (However, the non-cumulative cost is still usually * dependent on the row counts of the inputs.) The default implementation * for this query asks the rel itself via {@link RelNode#computeSelfCost}, * but metadata providers can override this with their own cost models. * * @return estimated cost, or null if no reliable estimate can be * determined */ RelOptCost getNonCumulativeCost(); /** Handler API. */ interface Handler extends MetadataHandler<NonCumulativeCost> { RelOptCost getNonCumulativeCost(RelNode r, RelMetadataQuery mq); }

getNonCumulativeCost最终调用的是RelNode#computeSelfCost

这是个抽象接口,每个RelNode的实现不同,看下比较简单的Filter的实现,

@Override public RelOptCost computeSelfCost(RelOptPlanner planner, RelMetadataQuery mq) { double dRows = mq.getRowCount(this); double dCpu = mq.getRowCount(getInput()); double dIo = 0; return planner.getCostFactory().makeCost(dRows, dCpu, dIo); }

这里的实现就是单纯用rowCount来表示cost

makeCost也是直接封装成VolcanoCost对象

节点变更

各个地方当产生新的RelNode时,会调用Register,ensureRegistered,或registerImpl进行注册

public RelSubset register( RelNode rel, RelNode equivRel) { final RelSet set; if (equivRel == null) { set = null; } else { set = getSet(equivRel); } final RelSubset subset = registerImpl(rel, set); return subset; }

public RelSubset ensureRegistered(RelNode rel, RelNode equivRel) { final RelSubset subset = getSubset(rel); if (subset != null) { if (equivRel != null) { final RelSubset equivSubset = getSubset(equivRel); if (subset.set != equivSubset.set) { merge(equivSubset.set, subset.set); } } return subset; } else { return register(rel, equivRel); } }

registerImpl调用addRelToSet,registerImpl的实现前面有

private RelSubset addRelToSet(RelNode rel, RelSet set) { RelSubset subset = set.add(rel); mapRel2Subset.put(rel, subset); // While a tree of RelNodes is being registered, sometimes nodes' costs // improve and the subset doesn't hear about it. You can end up with // a subset with a single rel of cost 99 which thinks its best cost is // 100. We think this happens because the back-links to parents are // not established. So, give the subset another change to figure out // its cost. final RelMetadataQuery mq = rel.getCluster().getMetadataQuery(); subset.propagateCostImprovements(this, mq, rel, new HashSet<>()); return subset; }

propagateCostImprovements

/** * Checks whether a relexp has made its subset cheaper, and if it so, * recursively checks whether that subset's parents have gotten cheaper. * * @param planner Planner * @param mq Metadata query * @param rel Relational expression whose cost has improved * @param activeSet Set of active subsets, for cycle detection */ void propagateCostImprovements(VolcanoPlanner planner, RelMetadataQuery mq, RelNode rel, Set<RelSubset> activeSet) { for (RelSubset subset : set.subsets) { if (rel.getTraitSet().satisfies(subset.traitSet)) { subset.propagateCostImprovements0(planner, mq, rel, activeSet); } } }

void propagateCostImprovements0(VolcanoPlanner planner, RelMetadataQuery mq, RelNode rel, Set<RelSubset> activeSet) { ++timestamp; if (!activeSet.add(this)) { //检测到环 // This subset is already in the chain being propagated to. This // means that the graph is cyclic, and therefore the cost of this // relational expression - not this subset - must be infinite. LOGGER.trace("cyclic: {}", this); return; } try { final RelOptCost cost = planner.getCost(rel, mq); //获取cost if (cost.isLt(bestCost)) { bestCost = cost; best = rel; // Lower cost means lower importance. Other nodes will change // too, but we'll get to them later. planner.ruleQueue.recompute(this); //cost变了,所以importance要重新算 //递归的执行propagateCostImprovements for (RelNode parent : getParents()) { final RelSubset parentSubset = planner.getSubset(parent); parentSubset.propagateCostImprovements(planner, mq, parent, activeSet); } planner.checkForSatisfiedConverters(set, rel); } } finally { activeSet.remove(this); } }