1. Load balancing Tomcat with Nginx using Docker Compose

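- How the Nginx reverse proxy works

  A reverse proxy server accepts connection requests from the Internet, forwards them to servers on the internal network, and returns the results from those servers to the Internet clients that made the requests. From the outside, the proxy server appears to be the server itself.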
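The forwarding idea described above can be modelled in a few lines of Python. This is only a toy, in-process sketch and not a real network server: `ReverseProxy` and `internal_tomcat` are hypothetical names made up for the illustration, and the "request" is reduced to a path string.

```python
from typing import Callable

# A backend is modelled as a function from a request path to a response body.
Backend = Callable[[str], str]


class ReverseProxy:
    """Toy in-process model of a reverse proxy (not a real network server)."""

    def __init__(self, backend: Backend) -> None:
        self._backend = backend  # internal server, hidden from clients

    def handle(self, path: str) -> str:
        # Accept the client's request, forward it to the internal server,
        # and hand the server's answer back to the client unchanged.
        return self._backend(path)


def internal_tomcat(path: str) -> str:
    # Hypothetical internal server; clients never call this directly.
    return f"tomcat served {path}"


proxy = ReverseProxy(internal_tomcat)
print(proxy.handle("/index.html"))
```

The client only ever talks to `proxy`, which is the point of the pattern: the internal server can be replaced or multiplied without the client noticing.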

- Proxying a Tomcat cluster with Nginx

- Project structure

- docker-compose.yml

      version: "3"
      services:
        nginx:
          image: nginx
          container_name: "nginx-tomcat"
          ports:
            - "80:8086"
          volumes:
            - ./nginx/default.conf:/etc/nginx/conf.d/default.conf  # mount the configuration file
          depends_on:
            - tomcat01
            - tomcat02
            - tomcat03
        tomcat01:
          image: tomcat
          container_name: "tomcat01"
          volumes:
            - ./tomcat1:/usr/local/tomcat/webapps/ROOT  # mount the web directory
        tomcat02:
          image: tomcat
          container_name: "tomcat02"
          volumes:
            - ./tomcat2:/usr/local/tomcat/webapps/ROOT
        tomcat03:
          image: tomcat
          container_name: "tomcat03"
          volumes:
            - ./tomcat3:/usr/local/tomcat/webapps/ROOT

- default.conf

      upstream tomcats {
          server tomcat01:8080;
          server tomcat02:8080;
          server tomcat03:8080;
      }

      server {
          listen 8086;
          server_name localhost;

          location / {
              proxy_pass http://tomcats;  # forward requests to the tomcats upstream
          }
      }

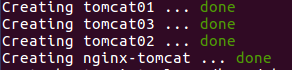

- Run docker-compose

      docker-compose up -d

- Check the containers

- Check the web page

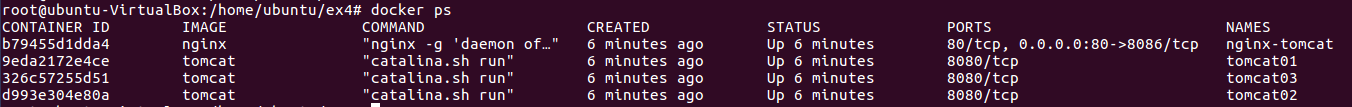

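Load-balancing strategies

- Round-robin strategy

      import requests

      # Send 12 requests through Nginx and print each response body
      url = "http://127.0.0.1"
      for i in range(12):
          response = requests.get(url)
          print(response.text)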
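To read the result of the round-robin test more easily, the response bodies can be tallied instead of printed one by one. The sketch below assumes that each Tomcat's ROOT page returns a body that identifies the server; `fetch_bodies` and `tally` are hypothetical helper names, and the standard-library `urllib` is used here in place of `requests` so the block has no third-party dependency.

```python
from collections import Counter
from typing import Iterable, List
from urllib.request import urlopen


def fetch_bodies(url: str, count: int) -> List[str]:
    """Request `url` `count` times and return the response bodies."""
    bodies = []
    for _ in range(count):
        with urlopen(url) as response:
            bodies.append(response.read().decode("utf-8").strip())
    return bodies


def tally(bodies: Iterable[str]) -> Counter:
    """Count how many times each distinct response body was seen."""
    return Counter(bodies)


# Offline illustration with made-up bodies; against the running stack one
# would call tally(fetch_bodies("http://127.0.0.1", 12)) instead.
sample = ["tomcat01", "tomcat02", "tomcat03"] * 4
print(tally(sample))
```

With plain round-robin and three healthy backends, the twelve requests would be expected to split evenly, four per server.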

- Weighted strategy

- Modify default.conf

      upstream tomcats {
          server tomcat01:8080;            # weight defaults to 1
          server tomcat02:8080 weight=2;
          server tomcat03:8080 weight=3;
      }

      server {
          listen 8086;
          server_name localhost;

          location / {
              proxy_pass http://tomcats;  # forward requests to the tomcats upstream
          }
      }

- Restart the containers and run the Python code again
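To get a feel for what the weights 1, 2 and 3 should do to the distribution, the selection can be simulated offline. The sketch below uses a smooth weighted round-robin scheme, which is how Nginx's weighted round-robin is commonly described; the real implementation also tracks failures and effective weights, so treat this as an approximation of the idea rather than Nginx's code. The function name is hypothetical.

```python
from collections import Counter
from typing import Dict, List


def smooth_weighted_round_robin(weights: Dict[str, int], count: int) -> List[str]:
    """Return the servers picked for `count` consecutive requests."""
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    picks = []
    for _ in range(count):
        # Every server gains its configured weight...
        for name, weight in weights.items():
            current[name] += weight
        # ...the server with the highest running score is chosen
        # (the first one wins on a tie)...
        chosen = max(current, key=current.get)
        # ...and pays back the total weight so the others can catch up.
        current[chosen] -= total
        picks.append(chosen)
    return picks


weights = {"tomcat01": 1, "tomcat02": 2, "tomcat03": 3}
picks = smooth_weighted_round_robin(weights, 12)
print(Counter(picks))
```

Over 12 requests the simulated split is 2, 4 and 6, that is, proportional to the weights. With all weights equal, the same function degenerates into plain round-robin.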


2. Deploying a Java web runtime environment with Docker Compose

- docker-compose.yml

      version: "3"                      # Compose file version
      services:                         # service definitions
        tomcat00:                       # Tomcat service
          image: tomcat                 # image
          hostname: hostname            # container hostname
          container_name: tomcat00      # container name
          ports:                        # port mapping
            - "5050:8080"
          volumes:                      # volumes
            - "./webapps:/usr/local/tomcat/webapps"
            - ./wait-for-it.sh:/wait-for-it.sh
          networks:                     # assign a static IP on the network
            webnet:
              ipv4_address: 15.22.0.15
        tomcat01:                       # Tomcat service
          image: tomcat                 # image
          hostname: hostname            # container hostname
          container_name: tomcat01      # container name
          ports:                        # port mapping
            - "5055:8080"
          volumes:                      # volumes
            - "./webapps:/usr/local/tomcat/webapps"
            - ./wait-for-it.sh:/wait-for-it.sh
          networks:                     # assign a static IP on the network
            webnet:
              ipv4_address: 15.22.0.16
        mymysql:                        # MySQL service
          build: .                      # build from the MySQL Dockerfile
          image: mymysql:test
          container_name: mymysql
          ports:
            # If the host port (left side) is kept at 3306, stop the MySQL
            # service on the Linux host first (service mysql stop);
            # otherwise map a different host port, as done here.
            - "3309:3306"
          command: [
            '--character-set-server=utf8mb4',
            '--collation-server=utf8mb4_unicode_ci'
          ]
          environment:
            MYSQL_ROOT_PASSWORD: "123456"
          networks:
            webnet:
              ipv4_address: 15.22.0.6
        nginx:
          image: nginx
          container_name: "nginx-tomcat"
          ports:
            - "8080:8080"
          volumes:
            - ./default.conf:/etc/nginx/conf.d/default.conf  # mount the configuration file
          tty: true
          stdin_open: true
          depends_on:
            - tomcat00
            - tomcat01
          networks:
            webnet:
              ipv4_address: 15.22.0.7
      networks:                         # network settings
        webnet:
          driver: bridge                # bridge mode
          ipam:
            config:
              - subnet: 15.22.0.0/24    # subnet

- docker-entrypoint.sh

      #!/bin/bash
      # The "<< EOF" here-document is required
      mysql -uroot -p123456 << EOF
      source /usr/local/grogshop.sql;
      EOF

- Dockerfile

      # Dockerfile that builds the MySQL image
      FROM registry.saas.hand-china.com/tools/mysql:5.7.17

      # MySQL working location
      ENV WORK_PATH /usr/local/

      # Directory whose scripts the container runs automatically
      ENV AUTO_RUN_DIR /docker-entrypoint-initdb.d

      # Copy grogshop.sql to /usr/local
      COPY grogshop.sql /usr/local/

      # Put the shell script into /docker-entrypoint-initdb.d/ so the container runs it automatically
      COPY docker-entrypoint.sh $AUTO_RUN_DIR/

      # Make the script executable
      RUN chmod a+x $AUTO_RUN_DIR/docker-entrypoint.sh

      # Command to run when the container starts
      #CMD ["sh", "/docker-entrypoint-initdb.d/import.sh"]

- default.conf

      upstream tomcat123 {
          server tomcat00:8080;
          server tomcat01:8080;
      }

      server {
          listen 8080;
          server_name localhost;

          location / {
              proxy_pass http://tomcat123;
          }
      }

- Change the IP used for the database connection

      driverClassName=com.mysql.jdbc.Driver
      url=jdbc:mysql://192.168.133.132:3306/grogshop?useUnicode=true&characterEncoding=utf-8
      username=root
      password=123456

- Start the containers

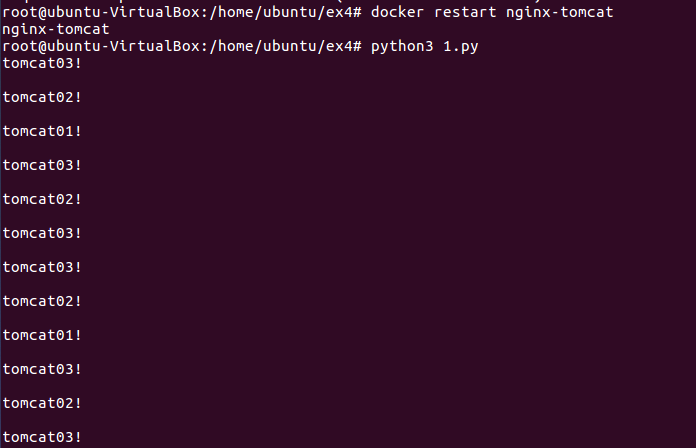

- Open the front-end page in a browser

- Add a product

- Edit a product

- Test load balancing across the two Tomcat servers
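The docker-compose.yml in this section assigns a static `ipv4_address` to every service, and each of those addresses has to lie inside the `webnet` subnet and be unique. A quick offline check with Python's standard `ipaddress` module might look like the sketch below; the values are copied from the compose file above, while the helper names are hypothetical.

```python
import ipaddress

# Values copied from the docker-compose.yml of this section.
SUBNET = ipaddress.ip_network("15.22.0.0/24")
STATIC_ADDRESSES = {
    "tomcat00": "15.22.0.15",
    "tomcat01": "15.22.0.16",
    "mymysql": "15.22.0.6",
    "nginx": "15.22.0.7",
}


def addresses_outside(subnet, addresses):
    """Return the service names whose address is not inside `subnet`."""
    return [
        name
        for name, address in addresses.items()
        if ipaddress.ip_address(address) not in subnet
    ]


def duplicated_addresses(addresses):
    """Return addresses that are assigned to more than one service."""
    seen, duplicates = set(), set()
    for address in addresses.values():
        (duplicates if address in seen else seen).add(address)
    return sorted(duplicates)


print(addresses_outside(SUBNET, STATIC_ADDRESSES))
print(duplicated_addresses(STATIC_ADDRESSES))
```

Both lists come back empty for the configuration shown, which is what one would want to see before running `docker-compose up`.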

3. Building a big-data cluster environment with Docker

- Set up the Hadoop environment

- Directory tree

- Dockerfile

      # Base image
      FROM ubuntu:18.04

      # Maintainer information
      MAINTAINER y00

      COPY ./sources.list /etc/apt/sources.list

- sources.list

  The entries below name the `focal` release (Ubuntu 20.04), although the base image is `ubuntu:18.04` (`bionic`); replacing `focal` with `bionic` keeps the package sources consistent with the base image.

      # Source-package mirrors are commented out by default to speed up apt update; uncomment them if needed
      deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
      # deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
      deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
      # deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
      deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
      # deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
      deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
      # deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

      # Pre-release source, not recommended
      # deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
      # deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

- Build the image and run a container

      docker build -t ubuntu:18.04 .
      docker run -it --name ubuntu ubuntu:18.04

- Initialize the container

- Install the required tools

      apt-get update
      apt-get install vim      # for editing configuration files
      apt-get install ssh      # distributed Hadoop connects over ssh
      /etc/init.d/ssh start    # start the sshd server
      vim ~/.bashrc            # append "/etc/init.d/ssh start" at the end so sshd starts automatically with the shell

- Set up passwordless ssh login

      ssh-keygen -t rsa
      cd ~/.ssh
      cat id_rsa.pub >> authorized_keys

- Install the JDK

      apt-get install openjdk-8-jdk

- Install Hadoop

      # Run on the host: copy the archive into the container
      docker cp ./build/hadoop-3.2.1.tar.gz <container ID>:/root/hadoop-3.2.1.tar.gz
      # Run inside the container: unpack it
      cd /root
      tar -zxvf hadoop-3.2.1.tar.gz -C /usr/local

- Configure the environment

      vim ~/.bashrc

- Add the following lines

      export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
      export PATH=$PATH:$JAVA_HOME/bin
      export HADOOP_HOME=/usr/local/hadoop-3.2.1
      export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib
      export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$JAVA_HOME/bin

- Apply the environment configuration

      source ~/.bashrc


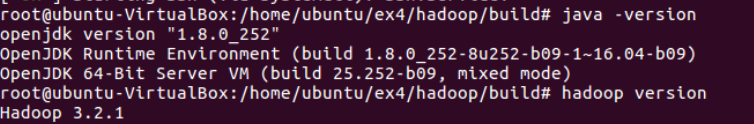

- Verify the installation

      java -version
      hadoop version


- Configure the Hadoop cluster

- Go to the configuration directory

      cd /usr/local/hadoop-3.2.1/etc/hadoop

- hadoop-env.sh

      export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/   # can be added anywhere in the file

- core-site.xml

      <?xml version="1.0" encoding="UTF-8" ?>
      <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?>
      <configuration>
        <property>
          <name>hadoop.tmp.dir</name>
          <value>file:/usr/local/hadoop-3.2.1/tmp</value>
          <description>Abase for other temporary directories.</description>
        </property>
        <property>
          <name>fs.defaultFS</name>
          <value>hdfs://master:9000</value>
        </property>
      </configuration>

- hdfs-site.xml

      <?xml version="1.0" encoding="UTF-8" ?>
      <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?>
      <configuration>
        <property>
          <name>dfs.replication</name>
          <value>1</value>
        </property>
        <property>
          <name>dfs.namenode.name.dir</name>
          <value>file:/usr/local/hadoop-3.2.1/tmp/dfs/name</value>
        </property>
        <property>
          <name>dfs.datanode.data.dir</name>
          <value>file:/usr/local/hadoop-3.2.1/tmp/dfs/data</value>
        </property>
        <property>
          <name>dfs.permissions.enabled</name>
          <value>false</value>
        </property>
      </configuration>

- mapred-site.xml

      <?xml version="1.0" ?>
      <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?>
      <configuration>
        <property>
          <name>mapreduce.framework.name</name>
          <value>yarn</value>
        </property>
        <property>
          <name>yarn.app.mapreduce.am.env</name>
          <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value>
        </property>
        <property>
          <name>mapreduce.map.env</name>
          <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value>
        </property>
        <property>
          <name>mapreduce.reduce.env</name>
          <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value>
        </property>
      </configuration>

- yarn-site.xml

      <?xml version="1.0" ?>
      <configuration>
        <!-- Site specific YARN configuration properties -->
        <property>
          <name>yarn.nodemanager.aux-services</name>
          <value>mapreduce_shuffle</value>
        </property>
        <property>
          <name>yarn.resourcemanager.hostname</name>
          <value>master</value>
        </property>
        <!-- Ratio of virtual to physical memory; without it the programs may fail to run -->
        <property>
          <name>yarn.nodemanager.vmem-pmem-ratio</name>
          <value>2.5</value>
        </property>
      </configuration>


- Go to the script directory

      cd /usr/local/hadoop-3.2.1/sbin

- In start-dfs.sh and stop-dfs.sh, add the following parameters

      HDFS_DATANODE_USER=root
      HADOOP_SECURE_DN_USER=hdfs
      HDFS_NAMENODE_USER=root
      HDFS_SECONDARYNAMENODE_USER=root

- In start-yarn.sh and stop-yarn.sh, add the following parameters

      YARN_RESOURCEMANAGER_USER=root
      HADOOP_SECURE_DN_USER=yarn
      YARN_NODEMANAGER_USER=root
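The `*-site.xml` files above all share the same `<configuration>` / `<property>` / `<name>` / `<value>` layout, so they can be generated or checked from a plain mapping instead of being typed by hand. The following is a minimal sketch using the standard-library `xml.etree.ElementTree`; `build_site_xml` and `read_site_xml` are hypothetical helper names, and the example values are the ones from core-site.xml.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def build_site_xml(properties: Dict[str, str]) -> str:
    """Render name/value pairs as a Hadoop-style <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def read_site_xml(xml_text: str) -> Dict[str, str]:
    """Parse a <configuration> document back into a name -> value mapping."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


core_site = build_site_xml({
    "hadoop.tmp.dir": "file:/usr/local/hadoop-3.2.1/tmp",
    "fs.defaultFS": "hdfs://master:9000",
})
print(read_site_xml(core_site)["fs.defaultFS"])
```

Reading a file back with `read_site_xml` is a cheap way to spot a typo in a property name or an inconsistent hostname before starting the cluster.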


- Build the image

      docker commit <container ID> ubuntu/hadoop

- Run the hosts from the newly built image

- Open three terminals and run one command in each

      docker run -it -h master --name master ubuntu/hadoop
      docker run -it -h slave01 --name slave01 ubuntu/hadoop
      docker run -it -h slave02 --name slave02 ubuntu/hadoop

- Edit /etc/hosts on each host

- Test ssh

      ssh slave01
      ssh slave02
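For `ssh slave01` and `ssh slave02` to resolve, the /etc/hosts file on every node needs one line per cluster member. The original entries are not shown, so the sketch below only illustrates the format: the IP addresses are hypothetical placeholders (look up each container's real address first, for example with `docker inspect`), and `hosts_lines` is a made-up helper name.

```python
from typing import Dict


def hosts_lines(nodes: Dict[str, str]) -> str:
    """Format hostname -> IP pairs as /etc/hosts lines ("IP<TAB>hostname")."""
    return "\n".join(f"{ip}\t{name}" for name, ip in nodes.items())


# Hypothetical addresses for illustration only; replace them with the
# addresses the three containers actually received.
EXAMPLE_NODES = {
    "master": "172.17.0.2",
    "slave01": "172.17.0.3",
    "slave02": "172.17.0.4",
}
print(hosts_lines(EXAMPLE_NODES))
```

The same three lines would be appended to /etc/hosts on master, slave01 and slave02.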

- Edit the workers file on master

      vim /usr/local/hadoop-3.2.1/etc/hadoop/workers

- Replace localhost with the hostnames of the two slaves

      slave01
      slave02

- Start the services

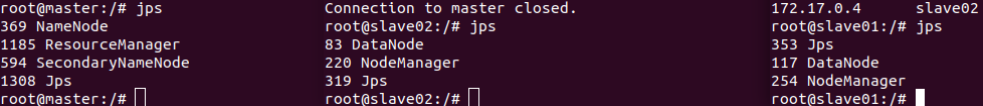

      start-dfs.sh
      start-yarn.sh

- Use jps to check that the services started successfully
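The `jps` check can also be scripted. The sketch below parses `jps` output (one "<pid> <name>" pair per line) and reports which expected daemons are missing. The expected sets are an assumption about this layout, with the workers file listing only the two slaves, and are not taken from the original post; the sample output is made up.

```python
from typing import Iterable, Set

# Daemons one would typically expect after start-dfs.sh and start-yarn.sh
# (an assumption about this layout, not taken from the original post).
MASTER_DAEMONS = {"NameNode", "SecondaryNameNode", "ResourceManager"}
WORKER_DAEMONS = {"DataNode", "NodeManager"}


def running_processes(jps_output: str) -> Set[str]:
    """Extract process names from `jps` output ("<pid> <name>" per line)."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected: Iterable[str]) -> Set[str]:
    """Return the expected daemons that do not appear in the jps output."""
    return set(expected) - running_processes(jps_output)


# Made-up sample output for illustration only.
sample = "1024 NameNode\n1290 SecondaryNameNode\n1777 Jps\n"
print(missing_daemons(sample, MASTER_DAEMONS))
```

For the made-up sample, `ResourceManager` is reported as missing, which would point at `start-yarn.sh` not having run or having failed.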


- Run the Hadoop example programs

- grep test

      hdfs namenode -format                   # format the file system (once, before HDFS is started for the first time)
      hdfs dfs -mkdir -p /user/root/input     # create the input directory
      hdfs dfs -put /usr/local/hadoop-3.2.1/etc/hadoop/*s-site.xml input   # put some files into the input directory
      hadoop jar /usr/local/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'   # run the grep example
      hdfs dfs -cat output/*                  # view the result
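To see what the grep example computes without a cluster, the same idea can be reproduced locally: find every match of the regular expression `dfs[a-z.]+` and count how often each distinct match occurs. This is a single-process sketch of the result, not of the MapReduce job itself; `grep_counts` is a hypothetical helper and the sample lines are made up.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple


def grep_counts(lines: Iterable[str], pattern: str) -> List[Tuple[int, str]]:
    """Count every regex match and return (count, match) pairs, most frequent first."""
    regex = re.compile(pattern)
    counts = Counter(match for line in lines for match in regex.findall(line))
    return [(count, match) for match, count in counts.most_common()]


# Made-up input lines in the style of hdfs-site.xml.
sample_lines = [
    "<name>dfs.replication</name>",
    "<name>dfs.namenode.name.dir</name>",
    "<name>dfs.replication</name>",
]
for count, match in grep_counts(sample_lines, r"dfs[a-z.]+"):
    print(count, match)
```

Comparing this kind of local count against `hdfs dfs -cat output/*` is a simple sanity check that the job processed the files that were uploaded.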

- wordcount test

      hdfs dfs -rm -r /user/root              # delete the input and output of the previous run
      hdfs dfs -mkdir -p /user/root/input     # create the input directory
      vim txt1.txt                            # create txt1.txt in the current directory
      vim txt2.txt                            # create txt2.txt in the current directory
      hdfs dfs -put ./*.txt input             # put the new text files into the input directory
      hadoop jar /usr/local/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount input output   # run the wordcount example
      hdfs dfs -cat output/*                  # view the result
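The expected wordcount output can be predicted locally in the same way: split each file on whitespace and count every word. The sketch below stands in for the job's result only; `word_count` is a hypothetical helper and the two strings are made-up contents for txt1.txt and txt2.txt.

```python
from collections import Counter
from typing import Dict, Iterable


def word_count(texts: Iterable[str]) -> Dict[str, int]:
    """Split each text on whitespace and count the occurrences of every word."""
    counts = Counter()
    for text in texts:
        counts.update(text.split())
    # Sort by word so the listing is easy to compare with the job output.
    return dict(sorted(counts.items()))


# Made-up contents standing in for txt1.txt and txt2.txt.
txt1 = "hello hadoop hello docker"
txt2 = "hello yarn"
for word, count in word_count([txt1, txt2]).items():
    print(f"{word}\t{count}")
```

Each output line pairs a word with its total across both files, which is the shape of result the wordcount example writes to the output directory.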


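4. Main problems and solutions

- Deploying the Java web environment with docker-compose failed

- Configuring a gateway in the network settings caused the error

- Deleting that line allowed the deployment to succeed

5. Time spent

This lab was much harder than the previous one; it took more than ten hours to finish.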