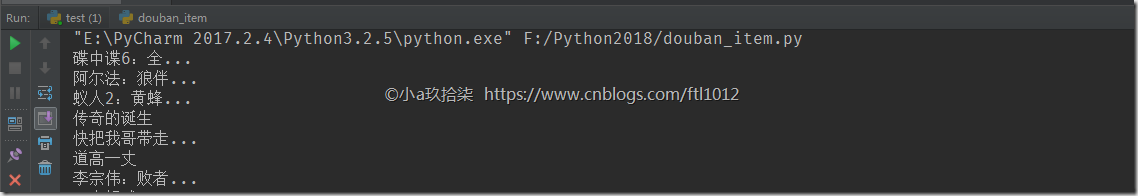

Douban

# coding: utf-8
from urllib.request import urlopen

from bs4 import BeautifulSoup

html = urlopen("https://movie.douban.com/")
bsObj = BeautifulSoup(html, "lxml")  # Convert the HTML response into a BeautifulSoup object
liList = bsObj.find_all("li", {"class": "title"})  # Find every <li> tag whose class is "title"
for li in liList:
    name = li.a.get_text()  # Get the text inside the <a> tag
    print(name)
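The class-based tag lookup above can be tried offline before pointing it at a live site. The following is a minimal sketch under stated assumptions: `SAMPLE_HTML` and `extract_titles` are hypothetical names, the markup is invented and is not Douban's real page structure, and the built-in `html.parser` is used so that `lxml` is not required. It also skips any `<li>` that has no `<a>` child, a case in which `li.a.get_text()` would raise an `AttributeError`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page (not Douban's real HTML).
SAMPLE_HTML = """
<ul>
  <li class="title"><a href="/subject/1/">Movie A</a></li>
  <li class="title"><a href="/subject/2/">Movie B</a></li>
  <li class="poster"><a href="/subject/3/">Not a title</a></li>
  <li class="title">No link here</li>
</ul>
"""


def extract_titles(html):
    """Return the link text of every <li class="title"> that contains an <a>."""
    soup = BeautifulSoup(html, "html.parser")
    names = []
    for li in soup.find_all("li", {"class": "title"}):
        if li.a is None:
            # li.a is None when the <li> has no <a> child; skip it.
            continue
        names.append(li.a.get_text(strip=True))
    return names


print(extract_titles(SAMPLE_HTML))
```

Filtering on the `class` attribute keeps the third item out, and the `None` check keeps the fourth from crashing the loop.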

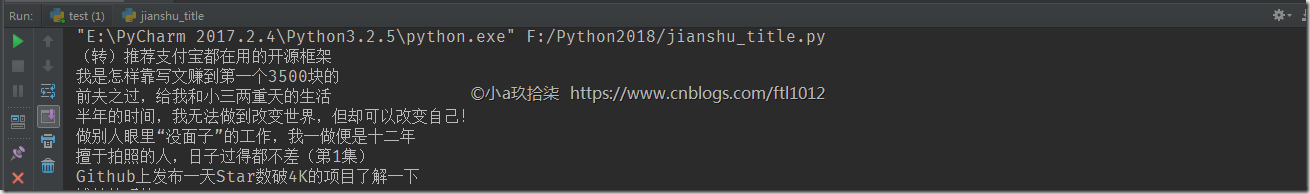

Jianshu

# -*- coding: utf-8 -*-
from urllib import request

from bs4 import BeautifulSoup

url = r'http://www.jianshu.com'
# Send a browser-like User-Agent so the request looks like a real browser visit
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'}
page = request.Request(url, headers=headers)
page_info = request.urlopen(page).read()
page_info = page_info.decode('utf-8')

# Convert the fetched content into a BeautifulSoup object, using lxml as the parser
soup = BeautifulSoup(page_info, 'lxml')
# Print the HTML in a formatted (pretty-printed) form
# print(soup.prettify())

titles = soup.find_all('a', 'title')  # Find all <a> tags with class='title'
# Print the string of each <a> tag found
for title in titles:
    print(title.string)
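The header setup above can be checked without touching the network. This is a small sketch, assuming hypothetical helper names `build_request` and `fetch_text` and a shortened placeholder User-Agent string. It shows that `urllib.request.Request` stores the header when the object is built, and how the response's declared charset could be used for decoding instead of hard-coding `'utf-8'`.

```python
from urllib import request


def build_request(url, user_agent):
    """Create a Request that carries a browser-like User-Agent header."""
    return request.Request(url, headers={"User-Agent": user_agent})


def fetch_text(req, timeout=10):
    """Open the request and decode the body with the charset the server declares.

    Falls back to UTF-8 when the Content-Type header names no charset.
    """
    with request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


# Placeholder User-Agent value, for illustration only.
req = build_request("http://www.jianshu.com", "Mozilla/5.0 (example)")

# urllib stores header names via str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
print(req.full_url)
```

`fetch_text(req)` is the only part that performs network I/O, so the request can be inspected first and fetched afterwards.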

Kugou

# Excerpt: getInfo is a method of a crawler class (the class itself is not shown);
# self.header holds the request headers.
import requests
from bs4 import BeautifulSoup

def getInfo(self, url):
    html = requests.get(url, headers=self.header)
    soup = BeautifulSoup(html.text, 'html.parser')
    # print(soup.prettify())
    ranks = soup.select('.pc_temp_num')
    titles = soup.select('.pc_temp_songlist > ul > li > a')  # Walk down the tags level by level
    times = soup.select('.pc_temp_time')
    for rank, title, songTime in zip(ranks, titles, times):
        data = {
            # Printing rank itself would include the HTML tags, so take only its text
            'rank': rank.get_text().strip(),
            'title': title.get_text().split('-')[1].strip(),
            'singer': title.get_text().split('-')[0].strip(),
            'songTime': songTime.get_text().strip()
        }
        s = str(data)
        print('rank:%2s ' % data['rank'], 'title:%2s ' % data['title'], 'singer:%2s ' % data['singer'], 'songTime:%2s ' % data['songTime'])
        # Append each record to the output file, one per line
        with open('hhh.txt', 'a', encoding='utf8') as f:
            f.write(s + '\n')
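Splitting the link text on every `-` and taking indexes 0 and 1 cuts off any song title that itself contains a hyphen, and raises an `IndexError` when there is no hyphen at all. The sketch below shows one possible alternative using `str.partition`, which splits only at the first hyphen. The helper names `split_song` and `to_line` are hypothetical, the sample strings are invented, and writing each record as JSON rather than `str(data)` is a swapped-in choice, not something the original code does.

```python
import json


def split_song(text):
    """Split 'singer - title' at the first hyphen only.

    Returns (singer, title); singer is '' when the text has no hyphen.
    """
    singer, sep, title = text.partition("-")
    if not sep:
        return "", text.strip()
    return singer.strip(), title.strip()


def to_line(record):
    """Serialize one record as a single JSON line, keeping non-ASCII text readable."""
    return json.dumps(record, ensure_ascii=False)


# Invented sample strings, for illustration only.
for raw in ["Singer A - Song One", "Singer B - B-side Story", "Untitled"]:
    singer, title = split_song(raw)
    print(to_line({"singer": singer, "title": title}))
```

Lines written this way can be read back with `json.loads`, which is harder to do reliably with the `str()` form of a dict.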