Project 1: Fetching the Kugou TOP 100

http://www.kugou.com/yy/rank/home/1-8888.html

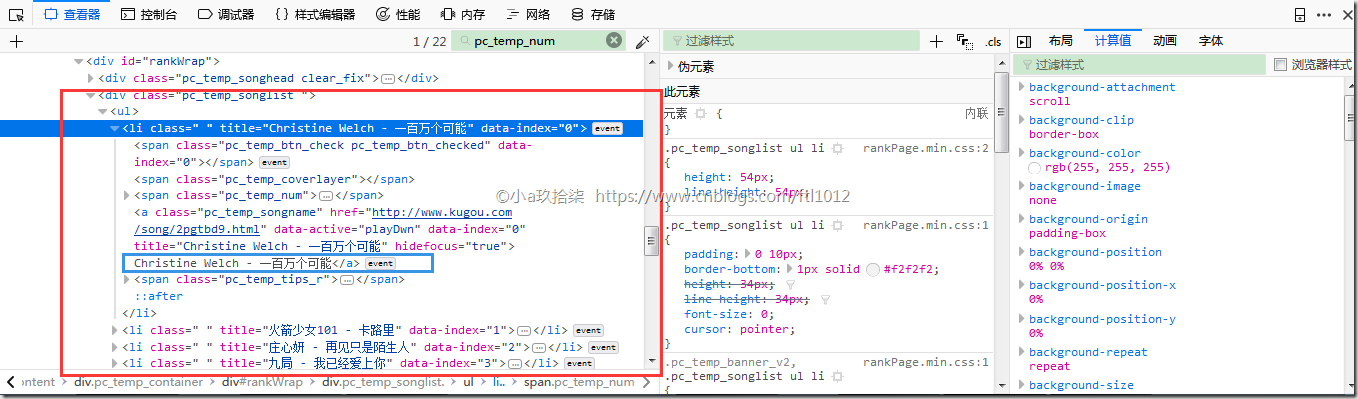

Rank

Song && singer

Duration

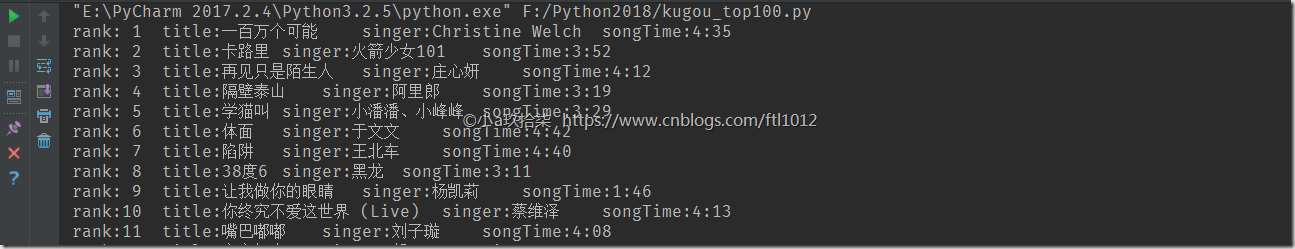

Result:

Source code:

    import time

    import requests
    from bs4 import BeautifulSoup


    class Kugou(object):
        def __init__(self):
            self.header = {
                "User-Agent": 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:60.0) Gecko/20100101 Firefox/60.0'
            }

        def getInfo(self, url):
            html = requests.get(url, headers=self.header)
            soup = BeautifulSoup(html.text, 'html.parser')
            # print(soup.prettify())
            ranks = soup.select('.pc_temp_num')
            titles = soup.select('.pc_temp_songlist > ul > li > a')  # walk down the nested tags
            times = soup.select('.pc_temp_time')
            for rank, title, songTime in zip(ranks, titles, times):
                data = {
                    # Printing rank directly would include its HTML tags, so take the text
                    'rank': rank.get_text().strip(),
                    # The link text has the form "singer - title"
                    'title': title.get_text().split('-')[1].strip(),
                    'singer': title.get_text().split('-')[0].strip(),
                    'songTime': songTime.get_text().strip()
                }
                s = str(data)
                print('rank:%2s ' % data['rank'], 'title:%2s ' % data['title'], 'singer:%2s ' % data['singer'], 'songTime:%2s ' % data['songTime'])
                # Append one record per line
                with open('hhh.txt', 'a', encoding='utf8') as f:
                    f.write(s + '\n')


    if __name__ == '__main__':
        # Page numbers in the URL start at 1 (see the URL at the top of this section)
        urls = [
            'http://www.kugou.com/yy/rank/home/{}-8888.html'.format(str(i)) for i in range(1, 30)
        ]
        kugou = Kugou()
        for url in urls:
            kugou.getInfo(url)
            time.sleep(1)  # pause between requests
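The script writes each record to `hhh.txt` as `str(data)`, which is awkward to read back later. A minimal sketch of one alternative, assuming one JSON object per line is acceptable; the helper names `append_record` and `load_records` and the file name in the usage note are hypothetical, not part of the original script:

```python
import json


def append_record(path, record):
    """Append one record to `path` as a single JSON line."""
    with open(path, 'a', encoding='utf8') as f:
        # ensure_ascii=False keeps Chinese text readable in the file
        f.write(json.dumps(record, ensure_ascii=False) + '\n')


def load_records(path):
    """Read back every record written by append_record."""
    with open(path, encoding='utf8') as f:
        return [json.loads(line) for line in f if line.strip()]
```

Inside `getInfo`, `append_record('songs.jsonl', data)` could stand in for the `open`/`write` pair; `load_records('songs.jsonl')` then returns the list of dictionaries.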

Walkthrough of selected code

--------------------------------------------------------------------

    urls = ['http://www.kugou.com/yy/rank/home/{}-8888.html'.format(str(i)) for i in range(1, 5)]
    for i in urls:
        print(i)

Output:

    http://www.kugou.com/yy/rank/home/1-8888.html
    http://www.kugou.com/yy/rank/home/2-8888.html
    http://www.kugou.com/yy/rank/home/3-8888.html
    http://www.kugou.com/yy/rank/home/4-8888.html

--------------------------------------------------------------------

    for rank, title, songTime in zip(ranks, titles, times):
        data = {
            # get_text() drops the HTML tags and keeps only the text
            'rank': rank.get_text().strip(),
            # The link text is "singer - title": index 0 is the singer, index 1 the title
            'title': title.get_text().split('-')[1].strip(),
            'singer': title.get_text().split('-')[0].strip(),
            'songTime': songTime.get_text().strip()
        }
        print(data['rank'])
        print(data['title'])
        print(data['singer'])
        print(data['songTime'])

Output:

    1
    飞驰于你
    许嵩
    4:04
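Splitting on every `-` goes wrong as soon as a singer or title contains a hyphen of its own. A small sketch of a more defensive split, assuming the link text always uses " - " (space, hyphen, space) as the separator, as in the sample "许嵩 - 飞驰于你"; the function name `split_singer_title` is hypothetical:

```python
def split_singer_title(text):
    """Split 'singer - title' at the first ' - ' separator only."""
    singer, sep, title = text.partition(' - ')
    if not sep:
        # No separator found: treat the whole string as the title
        return '', text.strip()
    return singer.strip(), title.strip()
```

In the dictionary above, `singer, song = split_singer_title(title.get_text())` would replace the two `split('-')` calls.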

--------------------------------------------------------------------

    for rank, title, songTime in zip(ranks, titles, times):
        data = {
            # Without get_text(), each value is the whole tag, HTML included
            'rank': rank,
            'title': title,
            'songTime': songTime
        }
        print(data['rank'])
        print(data['title'])
        print(data['songTime'])

Output:

    <span class="pc_temp_num">
    <strong>1</strong>
    </span>
    <a class="pc_temp_songname" data-active="playDwn" data-index="0" hidefocus="true" href="http://www.kugou.com/song/pjn5xaa.html" title="许嵩 - 飞驰于你">许嵩 - 飞驰于你</a>
    <span class="pc_temp_time"> 4:04 </span>

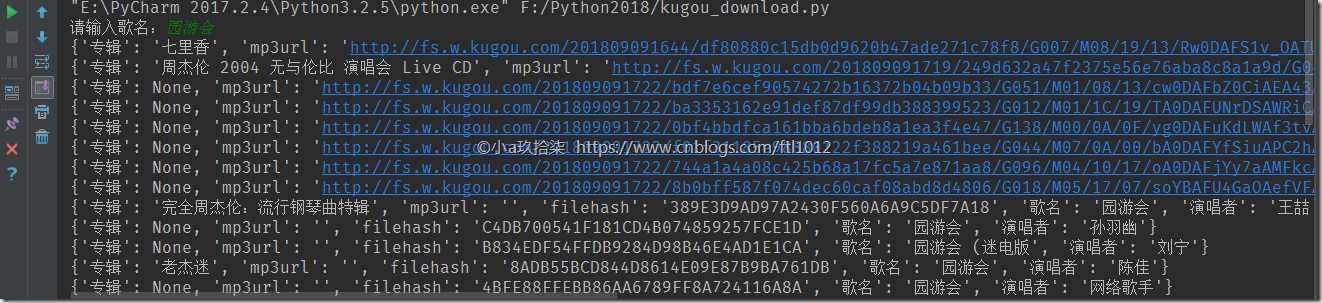
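One thing to watch with `zip(ranks, titles, times)`: `zip` stops at the shortest input, so if one selector matches fewer elements than the others, rows are dropped silently. A minimal sketch of a length check before zipping; the helper name `zip_checked` is hypothetical:

```python
def zip_checked(*columns):
    """zip() the columns, but fail loudly if their lengths differ."""
    lengths = [len(c) for c in columns]
    if len(set(lengths)) > 1:
        raise ValueError('column lengths differ: %s' % lengths)
    return list(zip(*columns))


# Plain zip() truncates to the shortest input without any warning
truncated = list(zip([1, 2, 3], ['a', 'b']))
```

Here `truncated` holds only two pairs, while `zip_checked([1, 2, 3], ['a', 'b'])` raises `ValueError`.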

Project 2: Searching for a track and getting its URL

Results of a keyword search:

    # encoding=utf-8
    # Time : 2018/4/27
    # Email : z2615@163.com
    # Software: PyCharm
    # Language: Python 3
    import requests
    import json


    class KgDownLoader(object):
        def __init__(self):
            self.search_url = 'http://songsearch.kugou.com/song_search_v2?callback=jQuery191034642999175022426_1489023388639&keyword={}&page=1&pagesize=30&userid=-1&clientver=&platform=WebFilter&tag=em&filter=2&iscorrection=1&privilege_filter=0&_=1489023388641'
            # .format('园游会')
            self.play_url = 'http://www.kugou.com/yy/index.php?r=play/getdata&hash={}'
            self.song_info = {
                '歌名': None,      # song title
                '演唱者': None,    # singer
                '专辑': None,      # album
                'filehash': None,
                'mp3url': None
            }

        def get_search_data(self, keys):
            search_file = requests.get(self.search_url.format(keys))
            text = search_file.content.decode()
            # Strip the JSONP wrapper "callback( ... )": keep what lies between the
            # first "(" and the last ")" so parentheses inside the data survive
            search_html = text[text.index('(') + 1:text.rindex(')')]
            views = json.loads(search_html)
            for view in views['data']['lists']:
                # Matched keywords come back wrapped in <em> tags; remove them
                song_name = view['SongName'].replace('<em>', '').replace('</em>', '')
                album_name = view['AlbumName'].replace('<em>', '').replace('</em>', '')
                sing_name = view['SingerName'].replace('<em>', '').replace('</em>', '')
                file_hash = view['FileHash']
                new_info = {
                    '歌名': song_name,
                    '演唱者': sing_name,
                    '专辑': album_name if album_name else None,
                    'filehash': file_hash,
                    'mp3url': None
                }
                self.song_info.update(new_info)
                yield self.song_info

        def get_mp3_url(self, filehash):
            mp3_file = requests.get(self.play_url.format(filehash)).content.decode()
            mp3_json = json.loads(mp3_file)
            real_url = mp3_json['data']['play_url']
            self.song_info['mp3url'] = real_url
            yield self.song_info

        def save_mp3(self, song_name, real_url):
            with open(song_name + ".mp3", "wb") as fp:
                fp.write(requests.get(real_url).content)


    if __name__ == '__main__':
        kg = KgDownLoader()
        mp3_info = kg.get_search_data(input('请输入歌名:'))  # prompt: "Enter a song name:"
        for x in mp3_info:
            mp3info = kg.get_mp3_url(x['filehash'])
            for i in mp3info:
                print(i)
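The search endpoint returns JSONP, that is, JSON wrapped in a `callback(...)` call, so the wrapper has to come off before `json.loads` can parse it. A minimal sketch of a reusable unwrapping helper that does not depend on the exact callback name; the function name `parse_jsonp` and the sample string in the usage note are made up for illustration:

```python
import json
import re


def parse_jsonp(text):
    """Return the JSON payload of a 'callback(...)' JSONP response."""
    # Greedy (.*) runs to the LAST ")", so parentheses inside the data are kept
    match = re.match(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', text, re.S)
    if match is None:
        raise ValueError('not a JSONP response')
    return json.loads(match.group(1))
```

For example, `parse_jsonp('cb({"data": {"lists": []}})')` returns the dictionary `{'data': {'lists': []}}`.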

Project 3: Searching for and downloading a song

This code is for learning and reference only.

    import urllib.request

    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.webdriver.common.action_chains import ActionChains
    from selenium.webdriver.common.by import By

    input_string = input('>>>please input the search key:')
    # input_string = "你就不要想起我"
    driver = webdriver.Chrome()
    driver.get('http://www.kugou.com/')
    # Type the search text into the search box
    a = driver.find_element(By.XPATH, '/html/body/div[1]/div[1]/div[1]/div[1]/input')
    a.send_keys(input_string)
    # Click the search button
    driver.find_element(By.XPATH, '/html/body/div[1]/div[1]/div[1]/div[1]/div/i').click()
    # Method 2: looping over every handle leaves the driver on the newest window,
    # so the loop has to be repeated each time a new window opens
    for handle in driver.window_handles:
        driver.switch_to.window(handle)
    # result_url = driver.current_url
    # driver = webdriver.Firefox()
    # driver.maximize_window()
    # driver.get(result_url)
    # Test: read the title of one result
    # j = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div[2]/ul[2]/li[2]/div[1]/a').get_attribute('title')
    # print(j)
    soup = BeautifulSoup(driver.page_source, 'lxml')
    PageAll = len(soup.select('ul.list_content.clearfix > li'))  # number of search results
    print(PageAll)
    # List the search results with their index
    for i in range(1, PageAll + 1):
        j = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div[2]/ul[2]/li[%d]/div[1]/a' % i).get_attribute('title')
        print('%d.' % i + j)
    choice = input("请输入你要下载的歌曲(输入序号):")  # prompt: "Enter the number of the song to download:"
    a = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div[2]/ul[2]/li[%s]/div[1]/a' % choice)  # locate the chosen result
    b = a.get_attribute('title')  # song name
    actions = ActionChains(driver)  # a class defined by selenium
    actions.move_to_element(a)  # move the mouse to the element
    actions.click(a)  # click it
    actions.perform()
    # Alternative to the loop below:
    # windows = driver.window_handles
    # driver.switch_to.window(windows[-1])
    # Method 2 again: switch to the newly opened player window
    for handle in driver.window_handles:
        driver.switch_to.window(handle)
    Local = driver.find_element(By.XPATH, '//*[@id="myAudio"]').get_attribute('src')
    print(Local)


    def cbk(a, b, c):
        # Progress callback: a = blocks so far, b = block size, c = total size
        per = 100.0 * a * b / c
        if per > 100:
            per = 100
        print('%.2f%%' % per)


    soup = BeautifulSoup(b, 'lxml')  # strip any markup from the title
    name = soup.get_text()
    path = r'D:\%s.mp3' % name
    urllib.request.urlretrieve(Local, path, cbk)
    print('finish downloading %s.mp3' % name + '\n')
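The progress callback passed to `urllib.request.urlretrieve` receives the number of blocks transferred so far, the block size, and the total file size, and the total may be reported as -1 when the server does not send it. A small sketch of a callback that tolerates an unknown or zero total; the names `percent_done` and `report` are hypothetical:

```python
def percent_done(block_num, block_size, total_size):
    """Return progress as a percentage, or None if the total size is unknown."""
    if total_size <= 0:
        return None
    # The last block is usually partial, so cap the value at 100
    return min(100.0, 100.0 * block_num * block_size / total_size)


def report(block_num, block_size, total_size):
    """Progress hook with the (blocks, block size, total size) signature."""
    pct = percent_done(block_num, block_size, total_size)
    if pct is None:
        print('%d bytes downloaded' % (block_num * block_size))
    else:
        print('%.2f%%' % pct)
```

`report` can be passed as the third argument of `urlretrieve` in the same position as `cbk`.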

[Further reading] https://blog.csdn.net/abc_123456___/article/details/81101845