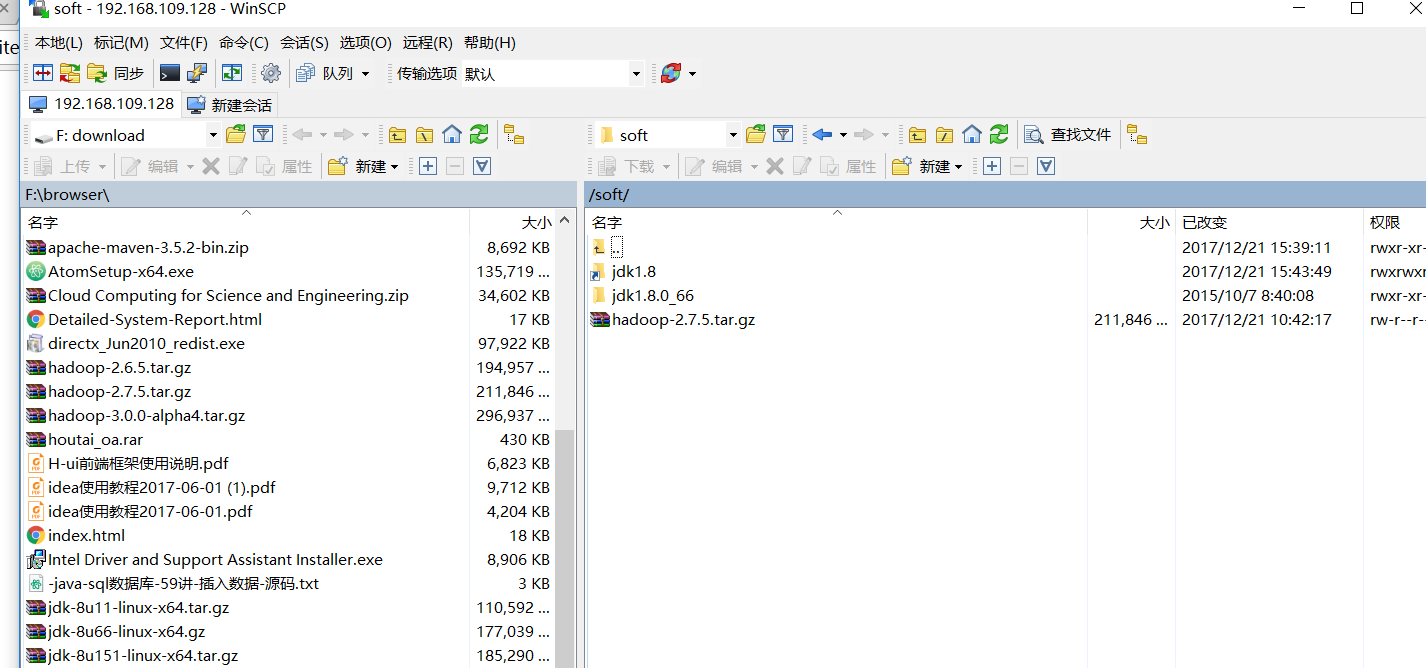

- Copy the installation package to the /soft directory

- Extract the archive

[hadoop@localhost soft]$ sudo tar -zxvf hadoop-2.7.5.tar.gz

- Delete the installation package

[hadoop@localhost soft]$ sudo rm -rf hadoop-2.7.5.tar.gz

- Create a symbolic link

[hadoop@localhost soft]$ sudo ln -s hadoop-2.7.5 hadoop2.7

[hadoop@localhost soft]$ ll

total 8

lrwxrwxrwx. 1 root root 12 Dec 21 03:02 hadoop2.7 -> hadoop-2.7.5

drwxr-xr-x. 9 20415 101 4096 Dec 15 20:12 hadoop-2.7.5

lrwxrwxrwx. 1 root root 11 Dec 21 02:43 jdk1.8 -> jdk1.8.0_66

drwxr-xr-x. 8 10 143 4096 Oct 6 2015 jdk1.8.0_66
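The copy, extract, delete, and link steps above can be collected into one shell function. This is a minimal sketch: `install_tarball` is a hypothetical name, and it assumes the archive unpacks into a single top-level directory named after the archive (hadoop-2.7.5.tar.gz into hadoop-2.7.5), which matches the listing above. Run it as a user that can write to /soft (for example from a root shell), because sudo cannot call a shell function directly.

```bash
# Sketch: extract a tarball next to itself, delete the archive, and point a
# version-independent symlink at the extracted directory.
# Assumption: the archive unpacks into one top-level directory named after it
# (hadoop-2.7.5.tar.gz -> hadoop-2.7.5), as in the listing above.
install_tarball() {
  local tarball="$1"    # e.g. /soft/hadoop-2.7.5.tar.gz
  local link_name="$2"  # e.g. hadoop2.7
  local dir base
  dir="$(dirname "$tarball")"
  base="$(basename "$tarball" .tar.gz)"

  # Same as tar -zxvf, minus the verbose file listing
  tar -zxf "$tarball" -C "$dir" || return 1
  [ -d "$dir/$base" ] || { echo "expected $dir/$base after extraction" >&2; return 1; }
  # Delete the installation package
  rm -f -- "$tarball"
  # -s symbolic, -f replace an existing link, -n do not follow an existing link to a directory
  ln -sfn -- "$base" "$dir/$link_name"
}
```

For example, `install_tarball /soft/hadoop-2.7.5.tar.gz hadoop2.7` leaves `/soft/hadoop2.7 -> hadoop-2.7.5`. Pointing configuration at the version-independent link means a later upgrade only needs the link repointed.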

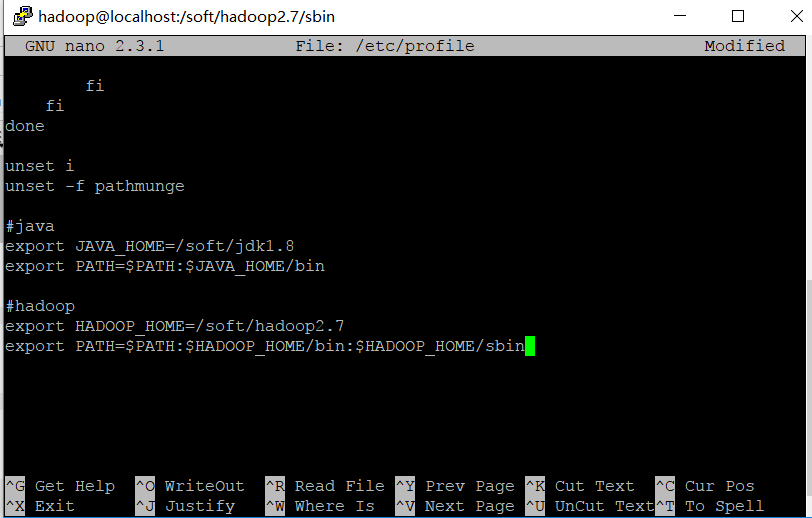

[hadoop@localhost soft]$

- Edit the environment variables

[hadoop@localhost sbin]$ sudo nano /etc/profile
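The post shows the lines added to /etc/profile only in a screenshot. A typical set, assumed here rather than copied from the post, exports JAVA_HOME and HADOOP_HOME using the /soft symlinks created above and puts Hadoop's bin and sbin directories on PATH (later steps run `hadoop`, `start-all.sh`, and `hadoop-daemon.sh` without a path). The sketch below appends them once, guarded by a marker comment so that repeated runs do not duplicate them; `add_hadoop_env` is a hypothetical name.

```bash
# Sketch: append Hadoop environment variables to a profile file exactly once.
# Assumed values: JAVA_HOME=/soft/jdk1.8 and HADOOP_HOME=/soft/hadoop2.7 (the symlinks above).
add_hadoop_env() {
  local profile="$1"   # e.g. /etc/profile (needs root) or ~/.bashrc
  # The marker line shows the block was already added; skip to avoid duplicates.
  if grep -q '^# >>> hadoop env >>>$' "$profile" 2>/dev/null; then
    return 0
  fi
  # Quoted 'EOF' keeps $PATH etc. literal, so they expand at login time, not now.
  cat >> "$profile" <<'EOF'
# >>> hadoop env >>>
export JAVA_HOME=/soft/jdk1.8
export HADOOP_HOME=/soft/hadoop2.7
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
# <<< hadoop env <<<
EOF
}
```

After `add_hadoop_env /etc/profile` (as root), `source /etc/profile` applies the variables to the current shell, as in the next step.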

- Apply the environment variables and check whether Hadoop was installed successfully

[hadoop@localhost sbin]$ source /etc/profile

[hadoop@localhost sbin]$ hadoop version

Hadoop 2.7.5

Subversion https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r 18065c2b6806ed4aa6a3187d77cbe21bb3dba075

Compiled by kshvachk on 2017-12-16T01:06Z

Compiled with protoc 2.5.0

From source with checksum 9f118f95f47043332d51891e37f736e9

This command was run using /soft/hadoop-2.7.5/share/hadoop/common/hadoop-common-2.7.5.jar

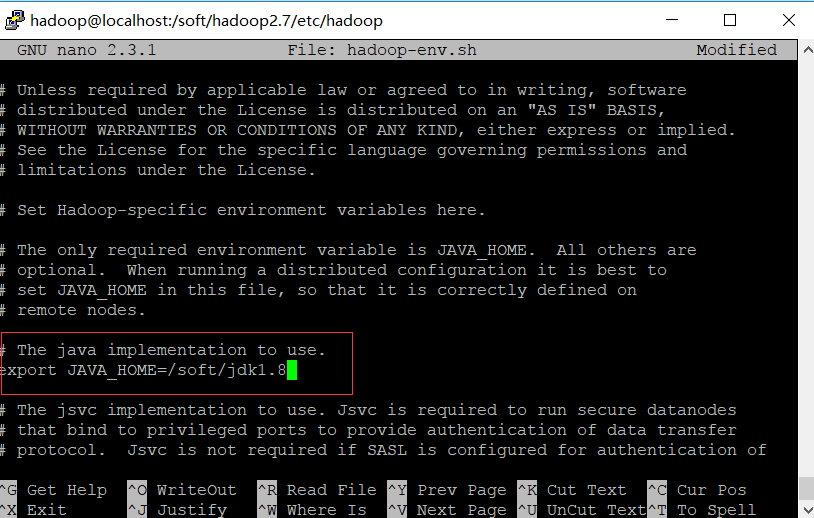

- Go to the etc/hadoop directory

[hadoop@localhost hadoop2.7]$ cd etc/hadoop

- Configure hadoop-env.sh (set up the Java environment)

[hadoop@localhost hadoop]$ nano hadoop-env.sh

Reference blog: click to open the link
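The referenced post is not preserved here. The usual change in hadoop-env.sh is to replace `export JAVA_HOME=${JAVA_HOME}` with an absolute path, because daemons that the start scripts launch over ssh run in a non-login shell and may not see the JAVA_HOME set in /etc/profile. A minimal sketch, assuming the /soft/jdk1.8 symlink listed earlier and GNU sed; `set_java_home` is a hypothetical name.

```bash
# Sketch: pin JAVA_HOME in hadoop-env.sh to an absolute path.
# Assumes GNU sed (-i without a suffix argument).
set_java_home() {
  local env_file="$1" java_home="$2"   # e.g. etc/hadoop/hadoop-env.sh /soft/jdk1.8
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Rewrite the existing export line in place; '|' as delimiter because paths contain '/'
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    # No export line yet: append one
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}
```

Usage from the etc/hadoop directory: `set_java_home hadoop-env.sh /soft/jdk1.8`.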

- Configure core-site.xml: specify the HDFS address (that is, the NameNode) and the directory where Hadoop stores the files it generates at runtime

[hadoop@localhost hadoop]$ sudo nano core-site.xml

[sudo] password for hadoop:

Add the following:

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/soft/hadoop2.7/tmp</value>

</property>

- Configure hdfs-site.xml: specify the number of HDFS replicas

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

- Configure mapred-site.xml: run MapReduce on YARN

[hadoop@localhost hadoop]$ sudo cp mapred-site.xml.template mapred-site.xml  # create mapred-site.xml from the template

[hadoop@localhost hadoop]$ sudo nano mapred-site.xml

[hadoop@localhost hadoop]$

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>hadoop:9001</value>

</property>
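Hand-editing the property blocks makes it easy to leave a `<property>` unclosed or nested inside another one. A minimal sketch, using a hypothetical helper `write_site_xml`, writes a complete site file from name=value pairs so that each property is closed before the next one opens. It assumes the values need no XML escaping, and it overwrites the target file, so the full property list must be passed on every call.

```bash
# Sketch: write a complete Hadoop *-site.xml from name=value arguments.
# write_site_xml is a hypothetical helper, not part of Hadoop.
write_site_xml() {
  local out="$1"; shift
  local pair
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n'
    printf '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>\n'
    printf '<configuration>\n'
    for pair in "$@"; do
      # Name is everything before the first '=', value everything after it.
      # Values are written verbatim (no XML escaping).
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    printf '</configuration>\n'
  } > "$out"
}
```

For example, `write_site_xml hdfs-site.xml dfs.replication=1 dfs.permissions=false` produces the two hdfs-site.xml properties above, and `write_site_xml mapred-site.xml mapreduce.framework.name=yarn` the YARN setting.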

- Configure yarn-site.xml: specify the address of the YARN ResourceManager and how reducers fetch data

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop</value>

</property>

... requested address. After I removed this property, it worked.

- Start configuring SSH

- Check whether the SSH-related packages are installed

[hadoop@localhost hadoop]$ yum list installed|grep ssh

libssh2.x86_64 1.4.3-8.el7 @anaconda

openssh.x86_64 6.4p1-8.el7 @anaconda

openssh-clients.x86_64 6.4p1-8.el7 @anaconda

openssh-server.x86_64 6.4p1-8.el7 @anaconda

[hadoop@localhost hadoop]$

- Check whether the sshd process is running

[hadoop@localhost hadoop]$ ps -Af |grep sshd

root 2208 1 0 02:22 ? 00:00:00 /usr/sbin/sshd -D

root 14509 2208 0 02:28 ? 00:00:00 sshd: hadoop [priv]

hadoop 14574 14509 0 02:29 ? 00:00:00 sshd: hadoop@pts/1

root 16620 2208 0 02:40 ? 00:00:03 sshd: root@notty

root 45692 2208 0 03:31 ? 00:00:00 sshd: hadoop [priv]

hadoop 45704 45692 0 03:31 ? 00:00:00 sshd: hadoop@pts/2

hadoop 45985 16486 0 03:48 pts/1 00:00:00 grep --color=auto sshd

[hadoop@localhost hadoop]$

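- Generate a public/private key pair on the client

[hadoop@localhost hadoop]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa  # -P '' sets an empty passphrase; -f writes the private key to ~/.ssh/id_rsa and the public key to ~/.ssh/id_rsa.pub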

Generating public/private rsa key pair.

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

36:db:ae:21:48:b6:e4:ed:5e:80:cf:1c:cc:47:db:d9 hadoop@localhost.localdomain

The key's randomart image is:

+--[ RSA 2048]----+

| |

| |

| . |

| + . o o |

| = = S o E |

| = B = + |

| + * + . |

| . o o |

| .o ... |

+-----------------+

- View the key pair (public and private keys)

[hadoop@localhost ~]$ cd .ssh

[hadoop@localhost .ssh]$ ls

id_rsa id_rsa.pub

- Append the public key to the server's authorized_keys file

[hadoop@localhost .ssh]$ cat id_rsa.pub>>authorized_keys

- Set the permissions of authorized_keys to 644

[hadoop@localhost .ssh]$ chmod 644 authorized_keys
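Running the `cat id_rsa.pub>>authorized_keys` step twice adds the key twice. A minimal sketch that makes the append safe to repeat, using a hypothetical `authorize_key` function that takes the .ssh directory as a parameter (on the real host, ~/.ssh). It sets 700 on the directory and 600 on authorized_keys, which is stricter than the 644 above; sshd's default StrictModes check accepts either, as long as neither is writable by group or others.

```bash
# Sketch: add id_rsa.pub to authorized_keys only if it is not already listed,
# then tighten permissions. authorize_key is a hypothetical name.
authorize_key() {
  local ssh_dir="$1"   # e.g. ~/.ssh
  local pub="$ssh_dir/id_rsa.pub" auth="$ssh_dir/authorized_keys"
  [ -s "$pub" ] || { echo "missing $pub (run ssh-keygen first)" >&2; return 1; }
  touch "$auth"
  # -x whole-line match, -F fixed string: compare the key text literally
  grep -qxF -- "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

Usage: `authorize_key ~/.ssh`, run as the hadoop user.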

- Test whether, after exiting the SSH session, you can log in again without a password

[hadoop@localhost .ssh]$ ssh localhost

The authenticity of host 'localhost (::1)' can't be established.

ECDSA key fingerprint is a7:5b:2c:55:73:e9:9a:2e:8d:48:a5:8b:98:dd:f8:05.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

Last login: Thu Dec 21 03:31:45 2017 from 192.168.109.1

[hadoop@localhost ~]$ exit

logout

Connection to localhost closed.

[hadoop@localhost .ssh]$ ssh localhost

Last login: Thu Dec 21 04:01:32 2017 from localhost

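[hadoop@localhost ~]$

- Hadoop fully distributed mode

- Switch between local mode, pseudo-distributed mode, and fully distributed mode

- Make a copy of the configuration for local mode, named local

[hadoop@localhost etc]$ sudo cp -r hadoop local

- Clear the settings just added to the configuration files (core-site.xml, mapred-site.xml, ...)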

[hadoop@localhost local]$ nano core-site.xml

[hadoop@localhost local]$ nano mapred-site.xml

[hadoop@localhost local]$ nano hdfs-site.xml

[hadoop@localhost local]$ nano yarn-site.xml

[hadoop@localhost local]$

- Make copies for pseudo-distributed mode (pseudo) and fully distributed mode (full)

[hadoop@localhost etc]$ sudo cp -r hadoop pseudo

[hadoop@localhost etc]$ ls

hadoop local pseudo

[hadoop@localhost etc]$ sudo cp -r hadoop full

[hadoop@localhost etc]$ ls

full hadoop local pseudo

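[hadoop@localhost etc]$

- Delete the hadoop folder and create a symbolic link in its place, so that the mode is switched by changing what the link points to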

[hadoop@localhost etc]$ sudo rm -rf hadoop

[hadoop@localhost etc]$ sudo ln -s pseudo hadoop

[hadoop@localhost etc]$ ll

total 12

drwxr-xr-x. 2 root root 4096 Dec 21 04:28 full

lrwxrwxrwx. 1 root root 6 Dec 21 04:30 hadoop -> pseudo

drwxr-xr-x. 2 hadoop hadoop 4096 Dec 21 04:10 local

drwxr-xr-x. 2 root root 4096 Dec 21 04:28 pseudo
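The symlink swap above can be wrapped in a small function. A minimal sketch: `switch_hadoop_mode` is a hypothetical name, and it assumes the local, pseudo, and full directories sit next to the link in etc/. `ln -sfn` replaces an existing link in one command instead of requiring `rm` first, and the function refuses to touch etc/hadoop while it is still a real directory.

```bash
# Sketch: point etc/hadoop at one of the local/pseudo/full config directories.
switch_hadoop_mode() {
  local etc_dir="$1" mode="$2"   # e.g. /soft/hadoop2.7/etc pseudo
  case "$mode" in
    local|pseudo|full) ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  [ -d "$etc_dir/$mode" ] || { echo "no such config dir: $etc_dir/$mode" >&2; return 1; }
  # Only an existing symlink may be replaced; never clobber a real directory.
  if [ -e "$etc_dir/hadoop" ] && [ ! -L "$etc_dir/hadoop" ]; then
    echo "$etc_dir/hadoop is a real directory; move it aside first" >&2
    return 1
  fi
  # Relative target, matching "hadoop -> pseudo" in the listing above
  ln -sfn "$mode" "$etc_dir/hadoop"
}
```

Usage: `switch_hadoop_mode /soft/hadoop2.7/etc pseudo`, run as a user with write access to etc/.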

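- Now that we have switched to pseudo-distributed mode, we need to start the Hadoop processes. Before starting them, initialize (format) the HDFS file system

[hadoop@localhost:/soft/hadoop2.7/bin]hdfs namenode -format

- Start all processes. Running start-all.sh as the hadoop user kept failing here. Many sources said SSH was not running, but my sshd had started successfully, and I still don't know the cause.

The errors were: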

hadoop: ssh: connect to host hadoop port 22: Connection refused

localhost: chown: changing ownership of ‘/soft/hadoop-2.7.5/logs’: Operation not permitted

localhost: starting datanode, logging to /soft/hadoop-2.7.5/logs/hadoop-hadoop-datanode-localhost.localdomain.out

Check the SSH packages and processes:

[hadoop@localhost:/home/hadoop]yum list installed|grep ssh

libssh2.x86_64 1.4.3-8.el7 @anaconda

openssh.x86_64 6.4p1-8.el7 @anaconda

openssh-clients.x86_64 6.4p1-8.el7 @anaconda

openssh-server.x86_64 6.4p1-8.el7 @anaconda

[hadoop@localhost:/home/hadoop]ps -Af|grep sshd

root 1712 1 0 06:51 ? 00:00:00 /usr/sbin/sshd -D //here sshd runs as the root user; I guessed this was the problem and that the hadoop user needed to be added to sshd -D

root 12975 1712 0 06:53 ? 00:00:00 sshd: hadoop [priv]

hadoop 12981 12975 0 06:53 ? 00:00:00 sshd: hadoop@pts/1

root 13876 1712 0 07:04 ? 00:00:00 sshd: hadoop [priv]

hadoop 13879 13876 0 07:04 ? 00:00:00 sshd: hadoop@pts/2

hadoop 18834 18788 0 07:37 pts/2 00:00:00 grep --color=auto sshd
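The first error above means ssh found an address for the host name hadoop but nothing accepted the connection on port 22. start-all.sh connects over ssh to the NameNode host taken from the configuration (here hadoop, from fs.defaultFS), not only to localhost, so a plausible cause, not confirmed in the post, is that hadoop resolved to an address other than this machine; the later switch to hdfs://localhost:9000 is consistent with that. The sshd master process running as root is normal. The chown error is a separate issue: the Hadoop directory was extracted with sudo, so the hadoop user cannot write its logs directory, and giving that user ownership (for example `sudo chown -R hadoop:hadoop /soft/hadoop-2.7.5`) is the usual remedy. The helper below, `resolve_host` (a hypothetical name), prints what a host name resolves to so it can be compared with this machine's own addresses.

```bash
# Sketch: print the address(es) a host name resolves to.
# Uses the system resolver via getent when available, otherwise /etc/hosts only.
resolve_host() {
  local name="$1"
  if command -v getent >/dev/null 2>&1; then
    getent hosts "$name" | awk '{ print $1 }'
  else
    # Fallback: scan /etc/hosts, skipping comment lines
    awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) print $1 }' /etc/hosts
  fi
}
```

Usage: `resolve_host hadoop` prints nothing if the name does not resolve. `ssh -o BatchMode=yes hadoop true` then checks key-based login to that name without ever prompting for a password.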

[hadoop@localhost:/home/hadoop]ssh localhost

[root@localhost:/soft/hadoop2.7/etc]start-all.sh

[root@localhost:/soft/hadoop2.7/etc]jps

18579 NodeManager

18691 Jps

18423 ResourceManager

18249 SecondaryNameNode

18043 DataNode

NameNode is not in the list, so start the namenode process separately:

[root@localhost:/soft/hadoop2.7/sbin]hadoop-daemon.sh start namenode
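The jps listing above has every daemon except NameNode, which is why it had to be started by hand. A minimal sketch of a check, with a hypothetical `missing_daemons` function that reads jps output on stdin and prints each expected daemon that is absent; the expected list is an assumption for the single-node HDFS plus YARN setup configured above.

```bash
# Sketch: list expected Hadoop daemons that do not appear in jps output (read from stdin).
missing_daemons() {
  local jps_out d
  jps_out="$(cat)"
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column exactly, so "NameNode" does not match "SecondaryNameNode"
    printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}
```

Usage: `jps | missing_daemons` prints nothing when all five daemons are running; for the listing above it prints `NameNode`.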

- View the HDFS file system

This problem showed up again, which was a real headache:

Call From localhost/127.0.0.1 to hadoop:9000 failed on connection exception: java.net.ConnectException: Connection refused

I changed the configuration as follows:

[root@localhost:/soft/hadoop2.7/etc/hadoop]cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value><!-- changed hadoop to localhost -->

</property>

<property>

<name>hadoop.tmp.dir</name>

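<value>/root/hadoop_tmp</value><!-- created a new folder in the home directory and used it as Hadoop's tmp directory; see my other blog post http://blog.csdn.net/xiaoqiu_cr/article/details/78868420. This is also mainly related to the NameNode always having to be started manually every time I ran start-all.sh -->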

</property>

</configuration>

[root@localhost:/soft/hadoop2.7/etc/hadoop]hadoop fs -ls

- Create a directory

[root@localhost:/soft/hadoop2.7/etc/hadoop]hadoop fs -mkdir -p /user/centos/hadoop

[root@localhost:/soft/hadoop2.7/etc/hadoop]hadoop fs -lsr /

lsr: DEPRECATED: Please use 'ls -R' instead.

drwxr-xr-x - root supergroup 0 2017-12-21 09:38 /user

drwxr-xr-x - root supergroup 0 2017-12-21 09:38 /user/centos

drwxr-xr-x - root supergroup 0 2017-12-21 09:38 /user/centos/hadoop

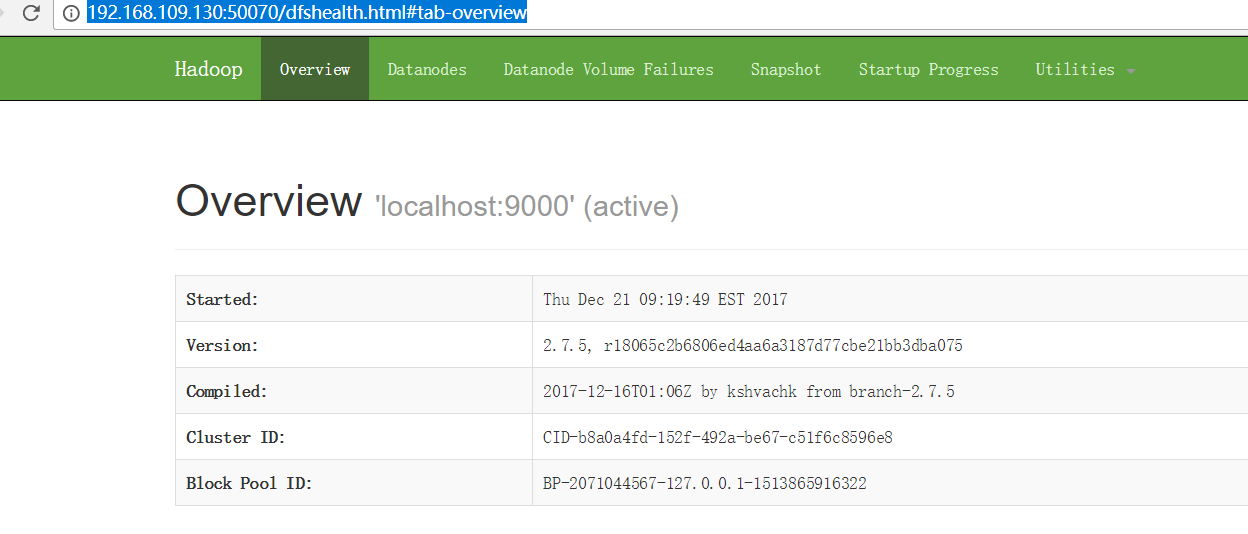

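- Access the web UI on port 50070

The access failed again; nothing was going my way. It later turned out the firewall was the cause.

First, stop the firewall:

[root@localhost:/soft/hadoop2.7/etc/hadoop]systemctl stop firewalld.service

- Access http://192.168.109.130:50070 again

Finally, the long-awaited page appeared.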
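Stopping firewalld works on a test machine, but it opens every port, and the firewall starts again at the next boot unless it is also disabled. A narrower alternative is to open only the ports that are needed. A minimal sketch: `open_hadoop_ports` and the `FIREWALL_CMD` override are hypothetical names (the override exists only so the commands can be dry-run with `echo`), and port 8088 for the ResourceManager web UI is the Hadoop 2.x default rather than something configured in this post.

```bash
# Sketch: open the given TCP ports in firewalld permanently, then reload.
# FIREWALL_CMD is a hypothetical override for dry runs (e.g. FIREWALL_CMD=echo).
open_hadoop_ports() {
  local fw="${FIREWALL_CMD:-firewall-cmd}" port
  for port in "$@"; do
    # --permanent writes the rule to the saved configuration
    $fw --permanent --add-port="${port}/tcp" || return 1
  done
  # --reload applies the saved configuration to the running firewall
  $fw --reload
}
```

Usage as root: `open_hadoop_ports 50070 8088`, after which http://192.168.109.130:50070 should be reachable without stopping firewalld.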