HDFS是配合Hadoop使用的分布式文件系统,分为

namenode: nn1.hadoop nn2.hadoop

datanode: s1.hadoop s2.hadoop s3.hadoop

(看不明白这5台虚拟机的请看前面 01前期准备 )

解压配置文件

[hadoop@nn1 hadoop_base_op]$ ./ssh_all.sh mv /usr/local/hadoop/etc/hadoop /usr/local/hadoop/etc/hadoop_back

[hadoop@nn1 hadoop_base_op]$ ./scp_all.sh ../up/hadoop.tar.gz /tmp/

[hadoop@nn1 hadoop_base_op]$ #批量将自定义配置 压缩包解压到/usr/local/hadoop/etc/

#批量检查配置是否正确解压

[hadoop@nn1 hadoop_base_op]$ ./ssh_all.sh head /usr/local/hadoop/etc/hadoop/hadoop-env.sh

[hadoop@nn1 hadoop_base_op]$ ./ssh_root.sh chmown -R hadoop:hadoop /usr/local/hadoop/etc/hadoop

[hadoop@nn1 hadoop_base_op]$ ./ssh_root.sh chmod -R 770 /usr/local/hadoop/etc/hadoop

初始化HDFS

流程:

- 启动zookeeper

- 启动journalnode

- 启动zookeeper客户端,初始化HA的zookeeper信息

- 对nn1上的namenode进行格式化

- 启动nn1上的namenode

- 在nn2上启动同步namenode

- 启动nn2上的namenode

- 启动ZKFC

- 启动dataname

1.查看zookeeper状态

[hadoop@nn1 zk_op]$ ./zk_ssh_all.sh /usr/local/zookeeper/bin/zkServer.sh status

ssh hadoop@"nn1.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status"

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: follower

OK!

ssh hadoop@"nn2.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status"

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: leader

OK!

ssh hadoop@"s1.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status"

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: follower

OK!

看到两个follower和一个leader说明正常运行,如果没有,就用下边的命令启动

[hadoop@nn1 zk_op]$ ./zk_ssh_all.sh /usr/local/zookeeper/bin/zkServer.sh start

2.启动journalnode

这个玩意就是namenode的同步器。

#在nn1上启动journalnode

[hadoop@nn1 zk_op]$ hadoop-daemon.sh start journalnode

#在nn2上启动journalnode

[hadoop@nn1 zk_op]$ hadoop-daemon.sh start journalnode

#可以分别打开log来查看启动状态

[hadoop@nn1 zk_op]$ tail /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-nn1.hadoop.log

2019-07-22 17:15:54,164 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 8485

2019-07-22 17:15:54,190 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2019-07-22 17:15:54,191 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 8485: starting

#发现IPC通信已经建立起来了,journalnode进程在8485

3.初始化HA信息(仅第一次运行,以后不需要)

[hadoop@nn1 zk_op]$ hdfs zkfc -formatZK

[hadoop@nn1 zk_op]$ /usr/local/zookeeper/bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper, hadoop-ha]

[zk: localhost:2181(CONNECTED) 1] quit

Quitting...

4.对nn1上的namenode进行格式化(仅第一次运行,以后不需要)

[hadoop@nn1 zk_op]$ hadoop namenode -format

#出现下边的说明初始化成功

#19/07/22 17:23:09 INFO common.Storage: Storage directory /data/dfsname has been successfully formatted.

5.启动nn1的namenode

[hadoop@nn1 zk_op]$ hadoop-daemon.sh start namenode

[hadoop@nn1 zk_op]$ tail /usr/local/hadoop/logs/hadoop-hadoop-namenode-nn1.hadoop.log

#

#2019-07-22 17:24:57,321 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

#2019-07-22 17:24:57,322 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 9000: starting

#2019-07-22 17:24:57,385 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: NameNode RPC up at: nn1.hadoop/192.168.10.6:9000

#2019-07-22 17:24:57,385 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Starting services required for standby state

#2019-07-22 17:24:57,388 INFO org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Will roll logs on active node at nn2.hadoop/192.168.10.7:9000 every 120 seconds.

#2019-07-22 17:24:57,394 INFO org.apache.hadoop.hdfs.server.namenode.ha.StandbyCheckpointer: Starting standby checkpoint thread...

#Checkpointing active NN at http://nn2.hadoop:50070

#Serving checkpoints at http://nn1.hadoop:50070

6.在nn2机器上同步nn1的namenode状态(仅第一次运行,以后不需要)

我们来到nn2的控制台!

###########一定要在nn2机器上运行这个!!!!############

[hadoop@nn2 ~]$ hadoop namenode -bootstrapStandby

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: ns1

Other Namenode ID: nn1

Other NN's HTTP address: http://nn1.hadoop:50070

Other NN's IPC address: nn1.hadoop/192.168.10.6:9000

Namespace ID: 1728347664

Block pool ID: BP-581543280-192.168.10.6-1563787389190

Cluster ID: CID-42d2124d-9f54-4902-aa31-948fb0233943

Layout version: -63

isUpgradeFinalized: true

=====================================================

19/07/22 17:30:24 INFO common.Storage: Storage directory /data/dfsname has been successfully formatted.

7.启动nn2的namenode

还是在nn2控制台运行!!

[hadoop@nn2 ~]$ hadoop-daemon.sh start namenode

#查看log来看看有没有启动成功

[hadoop@nn2 ~]$ tail /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-namenode-nn2.hadoop.log

8.启动ZKFC

这时候在nn1和nn2分别启动ZKFC,这时候两台机器的namenode,一个变成active一个变成standby!!ZKFC实现了HA高可用的自动切换!!

#############在nn1运行#################

[hadoop@nn1 zk_op]$ hadoop-daemon.sh start zkfc

#############在nn2运行####################

[hadoop@nn2 zk_op]$ hadoop-daemon.sh start zkfc

这时候在浏览器输入地址访问两台机器的hadoop界面

http://192.168.10.6:50070/dfshealth.html#tab-overview

http://192.168.10.7:50070/dfshealth.html#tab-overview

这两个有一个active有一个是standby状态。

9.启动dataname就是启动后三台机器

########首先确定slaves文件里存放了需要配置谁为datanode

[hadoop@nn1 hadoop]$ cat slaves

s1.hadoop

s2.hadoop

s3.hadoop

###########在显示为active的机器上运行##############

[hadoop@nn1 zk_op]$ hadoop-daemons.sh start datanode

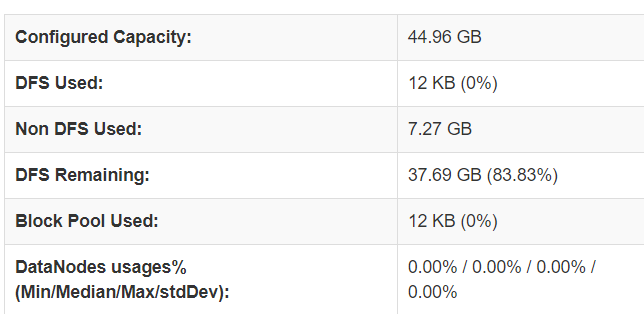

10.查看硬盘容量

打开刚才hadoop网页,查看hdfs的硬盘格式化好了没有。

这里是HDFS系统为每台实体机器的硬盘默认预留了2G(可以在配置文件hdfs-site.xml里更改),然后实际用来做hdfs的是每台机器15G,所以三台一共45G。

如图成功配置好HDFS。

之前写的文章在这里: