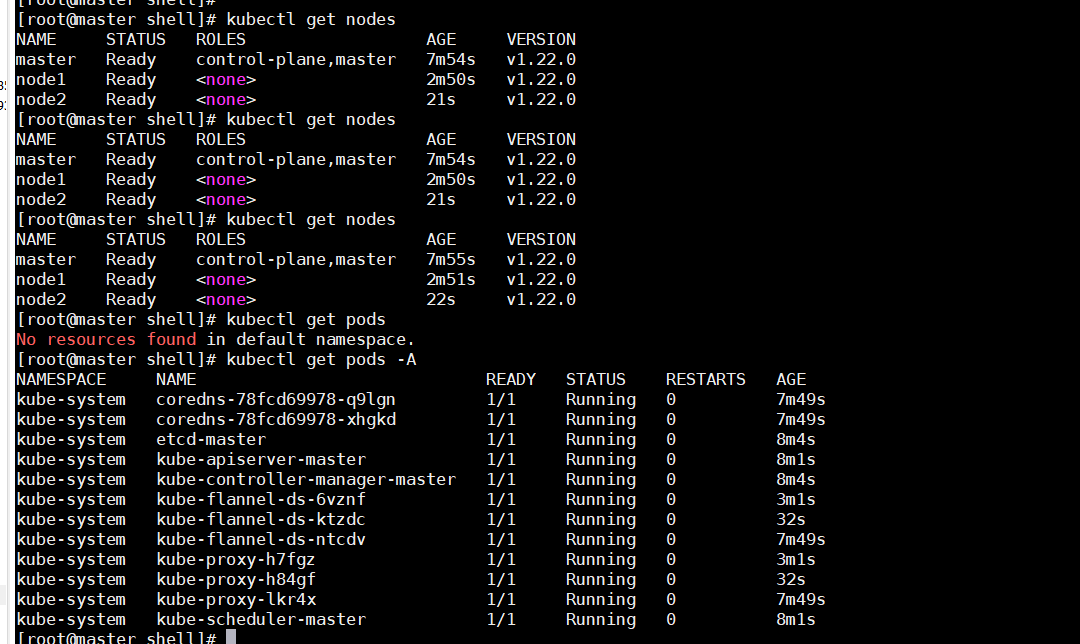

Step 1: You need a working k8s cluster. Installing one is not covered here.

Step 2: Set up NFS storage and export the /share directory.

    [root@master active_pvc]# vim /etc/exports
    /share *(insecure,rw,sync,fsid=0,crossmnt,no_subtree_check,anonuid=666,anongid=666,no_root_squash)
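The export entry can also be generated from a script rather than typed in an editor. The following is only a minimal sketch: the helper name `export_line` is hypothetical, and the commented-out usage assumes a host where the nfs-utils package provides `exportfs`.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/exports entry that exports a
# directory to all clients (*) with the given comma-separated options.
export_line() {
    printf '%s *(%s)\n' "$1" "$2"
}

# Usage sketch, run as root on the NFS server. It is commented out so that
# sourcing this file does not change the system:
#   mkdir -p /share
#   export_line /share "insecure,rw,sync,no_subtree_check,no_root_squash" >> /etc/exports
#   exportfs -r    # re-read /etc/exports without restarting the service
```

Generating the line keeps the option list in one place if several directories need to be exported with the same settings.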

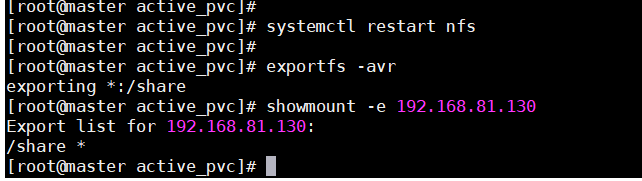

Step 3: Restart the NFS service, then verify the export.

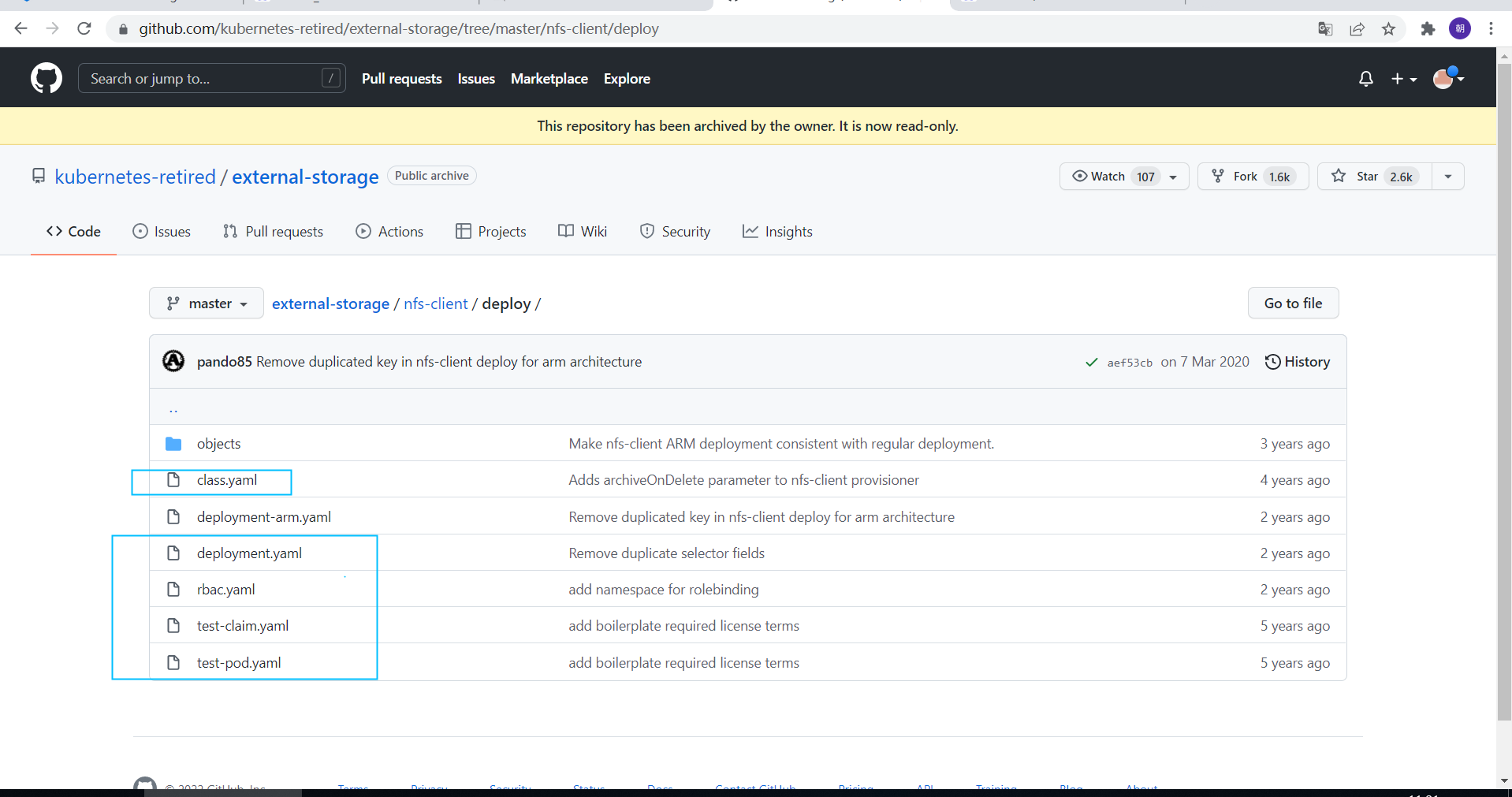

Step 4: My k8s cluster uses NFS storage, which does not support dynamic provisioning on its own. Dynamic provisioning requires a plugin: nfs-client-provisioner.

URL: https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client/deploy

The deployment needs these k8s manifests, so download them now. If downloading from GitHub is slow, you can search for external-storage on gitee instead, which is fast.

    [root@master active_pvc]# uri="https://raw.githubusercontent.com/kubernetes-retired/external-storage/master/nfs-client/deploy/"
    [root@master active_pvc]# for i in class.yaml deployment.yaml rbac.yaml test-claim.yaml test-pod.yaml; do wget -c "$uri$i"; done

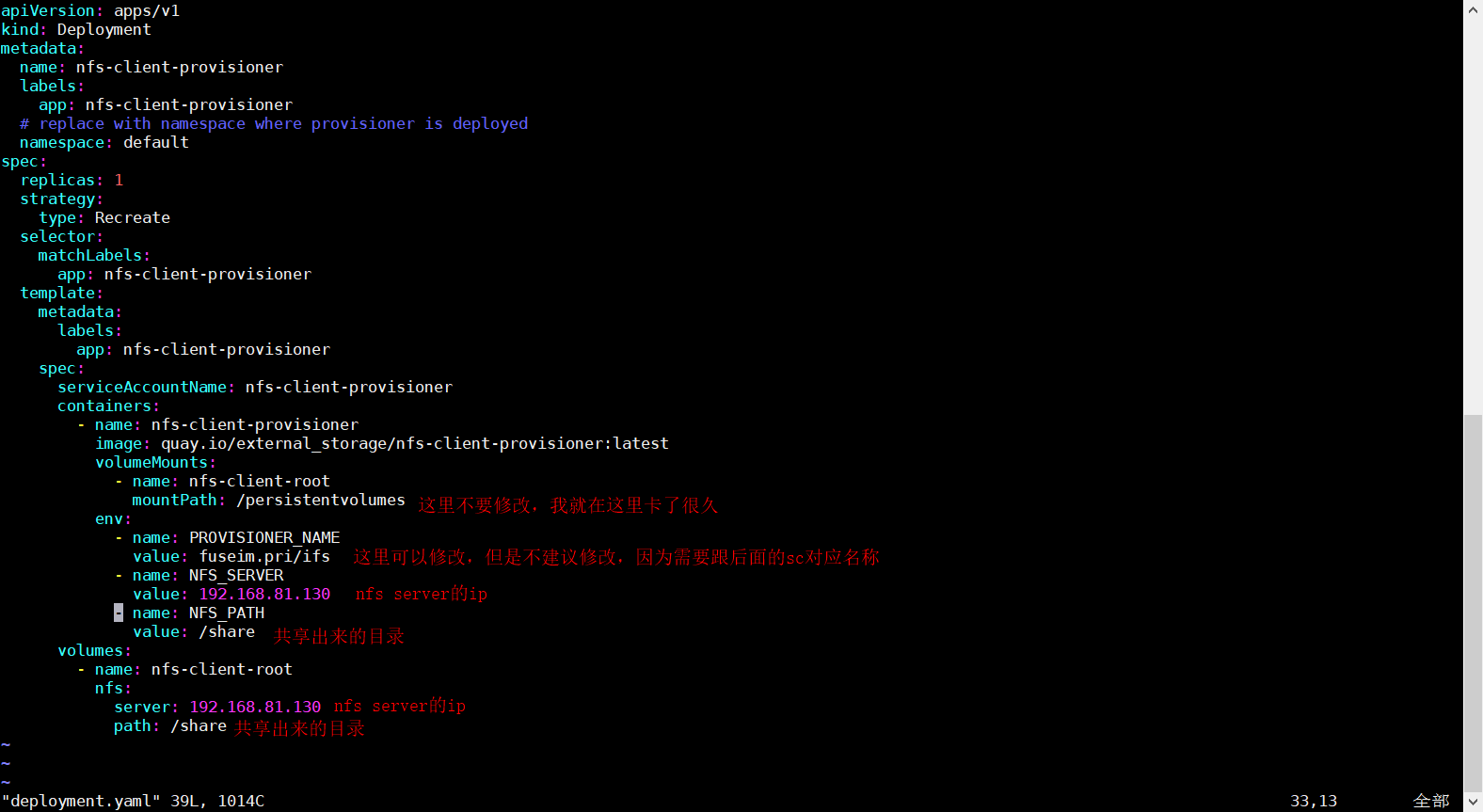

Step 5: Edit deployment.yaml
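The edit mainly points the provisioner at your own NFS server and exported directory. A minimal sketch of doing that with GNU `sed` follows. It assumes the downloaded file still contains the upstream placeholder values `10.10.10.60` and `/ifs/kubernetes`; the helper name `set_nfs_target` and the address `192.168.1.10` are hypothetical, so check the file by hand if your copy differs.

```shell
#!/bin/sh
# Hypothetical helper: replace the placeholder NFS server address and
# export path in a copy of deployment.yaml (edits the file in place).
# Assumes GNU sed and the upstream placeholders 10.10.10.60 and
# /ifs/kubernetes.
set_nfs_target() {
    file=$1
    server=$2
    path=$3
    sed -i \
        -e "s|10\.10\.10\.60|${server}|g" \
        -e "s|/ifs/kubernetes|${path}|g" \
        "$file"
}

# Example (hypothetical server address):
#   set_nfs_target deployment.yaml 192.168.1.10 /share
```

Both the `NFS_SERVER`/`NFS_PATH` environment variables and the `nfs` volume at the bottom of the file use the same two values, which is why a global replacement is enough here.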

Apply the deployment

    [root@master active_pvc]# kubectl apply -f deployment.yaml
    deployment.apps/nfs-client-provisioner created

Apply the RBAC rules. The deployment needs these permissions; otherwise the pod cannot be created.

    [root@master active_pvc]# kubectl apply -f rbac.yaml
    serviceaccount/nfs-client-provisioner created
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

Apply the sc (StorageClass)

    [root@master active_pvc]# kubectl apply -f class.yaml
    storageclass.storage.k8s.io/managed-nfs-storage created

The test-claim and test-pod manifests are optional. Instead, here is a real-world example: an elasticsearch cluster.

es-cluster.yaml

    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: es
      namespace: bigdata
      labels:
        app: elasticsearch
    spec:
      selector:
        app: elasticsearch
      type: NodePort
      ports:
      - port: 9200
        nodePort: 30080
        name: rest
      - port: 9300
        nodePort: 30070
        name: inter-node
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: es-cluster
      namespace: bigdata
    spec:
      serviceName: es
      replicas: 3
      selector:
        matchLabels:
          app: elasticsearch
      template:
        metadata:
          labels:
            app: elasticsearch
        spec:
          # Anti-affinity: at most one elasticsearch pod per node
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                    - elasticsearch
                  - key: "kubernetes.io/hostname"
                    operator: NotIn
                    values:
                    - master
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: elasticsearch
            image: elasticsearch:7.2.0
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
            volumeMounts:
            # Must match the volumeClaimTemplates name below
            - name: pvc01
              mountPath: /usr/share/elasticsearch/data
            env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.es,es-cluster-1.es,es-cluster-2.es"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
          initContainers:
          # Give the elasticsearch user (uid 1000) ownership of the data directory
          - name: fix-permissions
            image: busybox
            imagePullPolicy: IfNotPresent
            command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
            securityContext:
              privileged: true
            volumeMounts:
            - name: pvc01
              mountPath: /usr/share/elasticsearch/data
          - name: increase-vm-max-map
            image: busybox
            imagePullPolicy: IfNotPresent
            command: ["sysctl", "-w", "vm.max_map_count=262144"]
            securityContext:
              privileged: true
          - name: increase-fd-ulimit
            image: busybox
            imagePullPolicy: IfNotPresent
            command: ["sh", "-c", "ulimit -n 65536"]
            securityContext:
              privileged: true
      volumeClaimTemplates:
      - metadata:
          name: pvc01
          labels:
            app: elasticsearch
        spec:
          accessModes: [ "ReadWriteMany" ]
          # Must be the name of an existing StorageClass; class.yaml above creates managed-nfs-storage
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 10Gi

redis-cluster.yaml

    [root@master active_pvc]# cat redis-cluster.yaml
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: redis
      name: redis-cluster
    spec:
      clusterIP: None
      ports:
      - port: 6379
        targetPort: 6379
        name: client
      - port: 16379
        targetPort: 16379
        name: gossip
      selector:
        app: redis-cluster
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      namespace: redis
      name: redis-cluster
    spec:
      serviceName: redis-cluster
      podManagementPolicy: OrderedReady
      replicas: 6
      selector:
        matchLabels:
          app: redis-cluster
      template:
        metadata:
          labels:
            app: redis-cluster
        spec:
          containers:
          - name: redis
            image: redis:5.0.0
            ports:
            - containerPort: 6379
              name: client
            - containerPort: 16379
              name: gossip
            command: ["/etc/redis/fix-ip.sh", "redis-server", "/etc/redis/redis.conf"]
            env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            volumeMounts:
            - name: conf
              mountPath: /etc/redis/
              readOnly: false
            - name: data
              mountPath: /data
              readOnly: false
          volumes:
          - name: conf
            configMap:
              name: redis-cluster
              defaultMode: 0755
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          storageClassName: managed-nfs-storage
          accessModes:
          - ReadWriteMany
          resources:
            requests:
              storage: 1Gi

redis-configmap.yaml

    [root@master active_pvc]# cat redis-configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-cluster
      namespace: redis
    data:
      fix-ip.sh: |
        #!/bin/sh
        CLUSTER_CONFIG="/data/nodes.conf"
        if [ -f ${CLUSTER_CONFIG} ]; then
          if [ -z "${POD_IP}" ]; then
            echo "Unable to determine Pod IP address!"
            exit 1
          fi
          echo "Updating my IP to ${POD_IP} in ${CLUSTER_CONFIG}"
          sed -i.bak -e '/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/'${POD_IP}'/' ${CLUSTER_CONFIG}
        fi
        exec "$@"
      redis.conf: |
        cluster-enabled yes
        cluster-config-file /data/nodes.conf
        cluster-node-timeout 10000
        protected-mode no
        daemonize no
        pidfile /var/run/redis.pid
        port 6379
        tcp-backlog 511
        bind 0.0.0.0
        timeout 3600
        tcp-keepalive 1
        loglevel verbose
        logfile /data/redis.log
        databases 16
        save 900 1
        save 300 10
        save 60 10000
        stop-writes-on-bgsave-error yes
        rdbcompression yes
        rdbchecksum yes
        dbfilename dump.rdb
        dir /data
        appendonly yes
        appendfilename "appendonly.aof"
        appendfsync everysec
        no-appendfsync-on-rewrite no
        auto-aof-rewrite-percentage 100
        auto-aof-rewrite-min-size 64mb
        lua-time-limit 20000
        slowlog-log-slower-than 10000
        slowlog-max-len 128
        latency-monitor-threshold 0
        notify-keyspace-events ""
        hash-max-ziplist-entries 512
        hash-max-ziplist-value 64
        list-max-ziplist-entries 512
        list-max-ziplist-value 64
        set-max-intset-entries 512
        zset-max-ziplist-entries 128
        zset-max-ziplist-value 64
        hll-sparse-max-bytes 3000
        activerehashing yes
        client-output-buffer-limit normal 0 0 0
        client-output-buffer-limit slave 256mb 64mb 60
        client-output-buffer-limit pubsub 32mb 8mb 60
        hz 10
        aof-rewrite-incremental-fsync yes

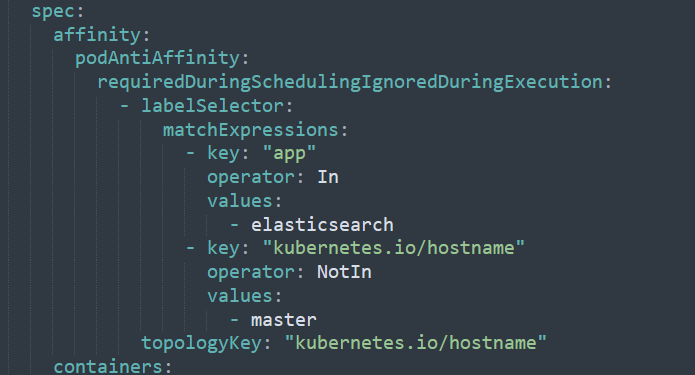

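Pay attention to the affinity section here. With fewer than 3 worker nodes, one of the pods will stay Pending. If you want to run the 3-member es cluster on 2 nodes, delete the affinity section.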

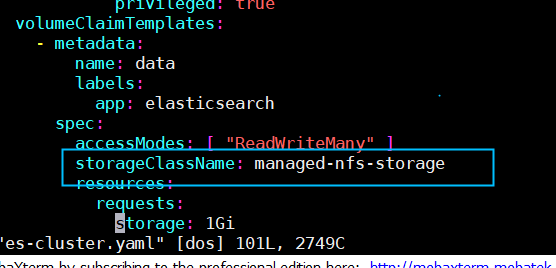

Note that the blue box must contain the name of the sc (StorageClass); otherwise the PV and PVC cannot be allocated.
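One way to catch a wrong StorageClass name before applying is to list the names a manifest refers to and compare them with what the cluster reports. This is only a sketch; the helper name `manifest_storage_classes` is hypothetical and it relies on plain text matching, not on a YAML parser.

```shell
#!/bin/sh
# Hypothetical helper: print the distinct storageClassName values found in
# a manifest file. Trailing "# comments" are stripped before matching.
manifest_storage_classes() {
    sed -n \
        -e 's/[[:space:]]*#.*$//' \
        -e 's/^[[:space:]]*storageClassName:[[:space:]]*//p' \
        "$1" | sort -u
}

# Example:
#   manifest_storage_classes es-cluster.yaml
#   kubectl get storageclass    # every name printed above should appear here
```

If a name printed by the helper is missing from the `kubectl get storageclass` output, the claims created from that template would be expected to stay Pending.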

Apply es-cluster.yaml

    [root@master active_pvc]# kubectl apply -f es-cluster.yaml
    service/es created
    statefulset.apps/es-cluster created

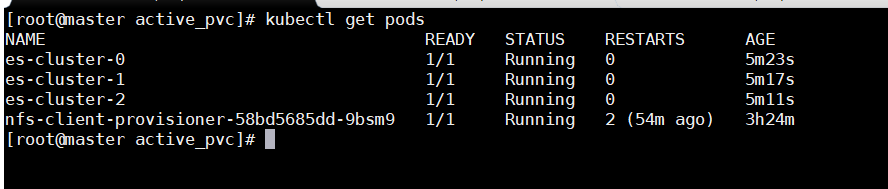

In the end, all 3 es pods are up and running.

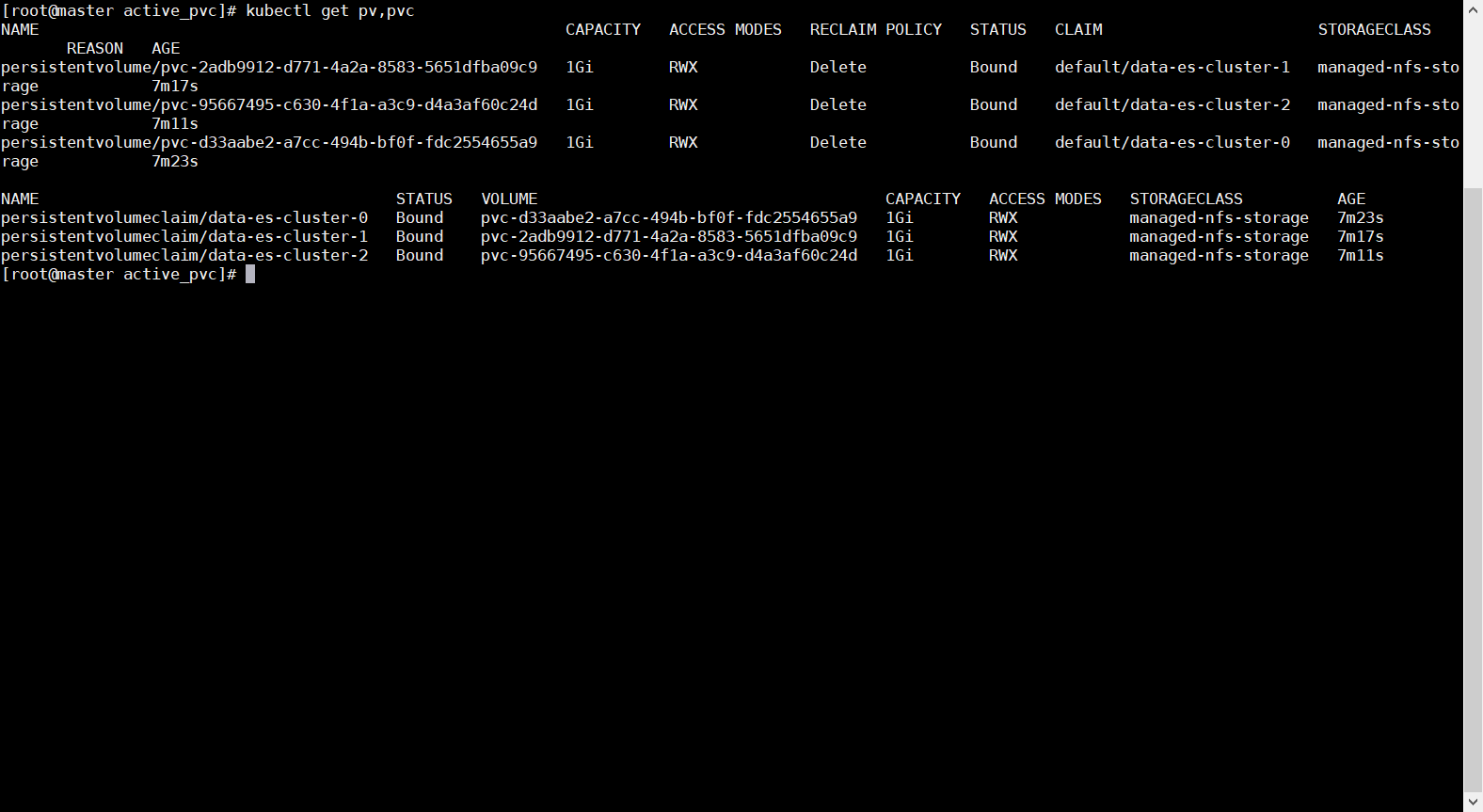

Next, check whether the PVs and PVCs were created and bound automatically.

As you can see, everything works.
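The same check can be scripted instead of read off the screen. A minimal sketch, assuming the default column layout of `kubectl get pvc` (NAME first, STATUS second); the helper name `unbound_claims` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pvc` output on stdin and print the
# names of claims whose STATUS column is anything other than "Bound".
# The first line is skipped because it is the column header.
unbound_claims() {
    awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

# Example:
#   kubectl get pvc -n bigdata | unbound_claims
# No output means every claim in the namespace is bound.
```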

Additional notes

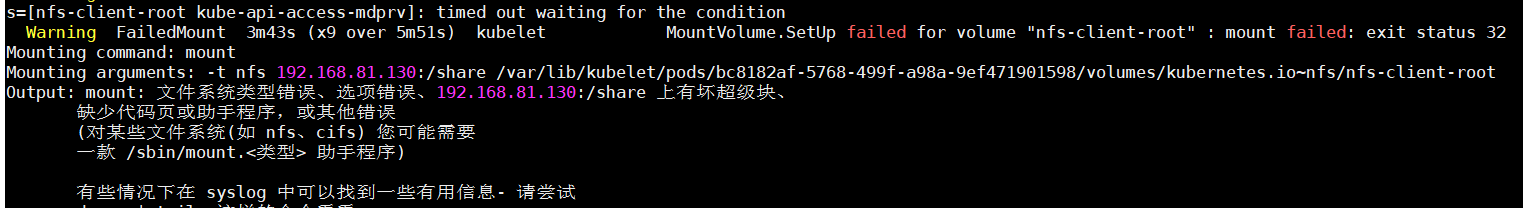

If you run into this error,

install the nfs-utils package on the node where the pod is scheduled. Ideally, install it on every node.

Monitoring

    [root@master active_pvc]# cat components.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes/metrics
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            - --kubelet-insecure-tls
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.1
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              initialDelaySeconds: 20
              periodSeconds: 10
            resources:
              requests:
                cpu: 100m
                memory: 200Mi
            securityContext:
              allowPrivilegeEscalation: false
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100