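Two very large log files are stored on HDFS.

The login log has the format: user,ip,time,oper (an enum: 1 = login, 2 = logout).

The visit log has the format: ip,time,url. Assume that the login log contains complete login/logout records, and that each IP is used by only one user during any single login-to-logout period.

Compute the 10 URLs in the visit log with the most distinct users, using MapReduce.

Hints:

1. Getting the top 10 takes two steps: the first step performs the join and computes the number of distinct users per URL; the second step picks the top 10.

2. To join two large tables, feed both inputs into the same job.

3. The join is on the ip field, so partition by ip.

4. Compute the top 10.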
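Before moving to MapReduce, it can help to pin down the expected result with a small in-memory reference. The sketch below is plain Java, not Hadoop code; the class name `TopUrlsSketch` and the method `topUrls` are hypothetical names chosen for illustration. It pairs logins with logouts, joins visits to sessions on ip plus time window, counts distinct users per URL, and keeps the first n.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** In-memory reference for the two-step computation (illustrative only). */
class TopUrlsSketch {

    /** Returns up to n "url=count" strings, highest distinct-user count first. */
    static List<String> topUrls(List<String> loginLines, List<String> visitLines, int n) {
        // Step 1a: collect login and logout times per "user,ip".
        Map<String, List<String>> logins = new HashMap<>();
        Map<String, List<String>> logouts = new HashMap<>();
        for (String line : loginLines) {
            String[] f = line.split(",");                 // user,ip,time,oper
            Map<String, List<String>> target = f[3].equals("1") ? logins : logouts;
            target.computeIfAbsent(f[0] + "," + f[1], k -> new ArrayList<>()).add(f[2]);
        }
        // Step 1b: pair the i-th login with the i-th logout; index sessions by ip.
        // Each session is {user, loginTime, logoutTime}.
        Map<String, List<String[]>> sessionsByIp = new HashMap<>();
        for (Map.Entry<String, List<String>> e : logins.entrySet()) {
            String[] userIp = e.getKey().split(",");
            List<String> in = e.getValue();
            List<String> out = logouts.getOrDefault(e.getKey(), new ArrayList<>());
            Collections.sort(in);
            Collections.sort(out);
            for (int i = 0; i < Math.min(in.size(), out.size()); i++) {
                sessionsByIp.computeIfAbsent(userIp[1], k -> new ArrayList<>())
                        .add(new String[] {userIp[0], in.get(i), out.get(i)});
            }
        }
        // Step 1c: join visits to sessions on ip + time window; collect users per url.
        Map<String, Set<String>> usersByUrl = new TreeMap<>();
        for (String line : visitLines) {
            String[] f = line.split(",");                 // ip,time,url
            for (String[] s : sessionsByIp.getOrDefault(f[0], new ArrayList<>())) {
                // "yyyy-MM-dd HH:mm" strings order chronologically as plain strings.
                if (f[1].compareTo(s[1]) >= 0 && f[1].compareTo(s[2]) <= 0) {
                    usersByUrl.computeIfAbsent(f[2], k -> new HashSet<>()).add(s[0]);
                }
            }
        }
        // Step 2: sort urls by distinct-user count, descending, and keep the first n.
        List<Map.Entry<String, Set<String>>> entries = new ArrayList<>(usersByUrl.entrySet());
        entries.sort((a, b) -> Integer.compare(b.getValue().size(), a.getValue().size()));
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(n, entries.size()); i++) {
            result.add(entries.get(i).getKey() + "=" + entries.get(i).getValue().size());
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> logins = Arrays.asList(
                "tom,192.168.1.11,2017-11-20 10:00,1",
                "tom,192.168.1.11,2017-11-20 11:00,2",
                "lala,192.168.1.11,2017-11-20 11:01,1",
                "lala,192.168.1.11,2017-11-20 11:30,2",
                "sua,192.168.1.12,2017-11-20 10:01,1",
                "sua,192.168.1.12,2017-11-20 10:30,2");
        List<String> visits = Arrays.asList(
                "192.168.1.11,2017-11-20 10:02,url1",
                "192.168.1.11,2017-11-20 10:04,url1",
                "192.168.1.11,2017-11-20 11:02,url1",
                "192.168.1.12,2017-11-20 10:02,url1",
                "192.168.1.11,2017-11-20 10:03,url2",
                "192.168.1.12,2017-11-20 10:03,url2");
        System.out.println(topUrls(logins, visits, 10)); // prints [url1=3, url2=2]
    }
}
```

This only works when everything fits in memory, which is exactly what the problem rules out; its value is as an oracle for checking the MapReduce output on small samples.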

Data:

login.log

tom,192.168.1.11,2017-11-20 10:00,1

tom,192.168.1.11,2017-11-20 11:00,2

sua,192.168.1.12,2017-11-20 10:01,1

sua,192.168.1.12,2017-11-20 10:30,2

lala,192.168.1.11,2017-11-20 11:01,1

lala,192.168.1.11,2017-11-20 11:30,2

tom,192.168.1.14,2017-11-20 11:01,1

tom,192.168.1.14,2017-11-20 11:40,2

jer,192.168.1.15,2017-11-20 10:00,1

jer,192.168.1.15,2017-11-20 10:40,2

sua,192.168.1.16,2017-11-20 11:00,1

sua,192.168.1.16,2017-11-20 12:00,2

visit.log

192.168.1.11,2017-11-20 10:02,url1

192.168.1.11,2017-11-20 10:04,url1

192.168.1.14,2017-11-20 11:02,url1

192.168.1.12,2017-11-20 10:02,url1

192.168.1.11,2017-11-20 11:02,url1

192.168.1.15,2017-11-20 10:02,url1

192.168.1.16,2017-11-20 11:02,url1

192.168.1.11,2017-11-20 10:03,url2

192.168.1.14,2017-11-20 11:03,url2

192.168.1.12,2017-11-20 10:03,url2

192.168.1.15,2017-11-20 10:03,url2

192.168.1.16,2017-11-20 11:03,url2

192.168.1.12,2017-11-20 10:15,url3

192.168.1.15,2017-11-20 10:16,url3

192.168.1.16,2017-11-20 11:02,url3

Implementation:

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.cyf</groupId>
        <artifactId>TwoLog</artifactId>
        <packaging>jar</packaging>
        <version>1.0-SNAPSHOT</version>
        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.6.4</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>2.6.4</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.6.4</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-mapreduce-client-core</artifactId>
                <version>2.6.4</version>
            </dependency>
        </dependencies>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-jar-plugin</artifactId>
                    <version>2.4</version>
                    <configuration>
                        <archive>
                            <manifest>
                                <addClasspath>true</addClasspath>
                                <classpathPrefix>lib/</classpathPrefix>
                                <mainClass>com.cyf.LoginlogFormatMP</mainClass>
                            </manifest>
                        </archive>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>

IpJoinBean.java

    package log;

    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    /**
     * Created by mac on 17/11/24.
     * Output value used when joining the login table and the visit table by IP.
     */
    public class IpJoinBean implements WritableComparable<IpJoinBean> {

        // Fields default to "" because DataOutput.writeUTF does not accept null.
        private String user = "";
        private String ip = "";
        private String loginTime = "";
        private String logoutTime = "";
        private String type = "";       // "login" or "visit"
        private String url = "";
        private String visitTime = "";

        public IpJoinBean() {
            super();
        }

        public IpJoinBean(String ip, String user, String url,
                          String loginTime, String logoutTime, String visitTime) {
            this.ip = ip;
            this.user = user;
            this.url = url;
            this.loginTime = loginTime;
            this.logoutTime = logoutTime;
            this.visitTime = visitTime;
        }

        public String getUser() { return user; }
        public void setUser(String user) { this.user = user; }
        public String getIp() { return ip; }
        public void setIp(String ip) { this.ip = ip; }
        public String getLoginTime() { return loginTime; }
        public void setLoginTime(String loginTime) { this.loginTime = loginTime; }
        public String getLogoutTime() { return logoutTime; }
        public void setLogoutTime(String logoutTime) { this.logoutTime = logoutTime; }
        public String getType() { return type; }
        public void setType(String type) { this.type = type; }
        public String getUrl() { return url; }
        public void setUrl(String url) { this.url = url; }
        public String getVisitTime() { return visitTime; }
        public void setVisitTime(String visitTime) { this.visitTime = visitTime; }

        // write and readFields must handle the fields in the same order.
        @Override
        public void write(DataOutput dataOutput) throws IOException {
            dataOutput.writeUTF(type);
            dataOutput.writeUTF(ip);
            dataOutput.writeUTF(user);
            dataOutput.writeUTF(url);
            dataOutput.writeUTF(loginTime);
            dataOutput.writeUTF(logoutTime);
            dataOutput.writeUTF(visitTime);
        }

        @Override
        public void readFields(DataInput dataInput) throws IOException {
            this.type = dataInput.readUTF();
            this.ip = dataInput.readUTF();
            this.user = dataInput.readUTF();
            this.url = dataInput.readUTF();
            this.loginTime = dataInput.readUTF();
            this.logoutTime = dataInput.readUTF();
            this.visitTime = dataInput.readUTF();
        }

        /** True when this visit falls inside the given login session (bounds inclusive). */
        public boolean visitMatchLogin(IpJoinBean login) {
            // "yyyy-MM-dd HH:mm" strings compare chronologically as plain strings.
            return this.visitTime.compareTo(login.loginTime) >= 0
                    && this.visitTime.compareTo(login.logoutTime) <= 0;
        }

        @Override
        public int compareTo(IpJoinBean o) {
            // Returns 0 for equal IPs so that the compareTo contract holds.
            return this.ip.compareTo(o.ip);
        }

        @Override
        public String toString() {
            return "ip:" + ip + " user:" + user + " url:" + url
                    + " loginTime:" + loginTime + " logouttime:" + logoutTime
                    + " visitTime:" + visitTime;
        }
    }

ReversBean.java

    package log;

    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    /**
     * Created by mac on 17/11/27.
     * Key wrapper that sorts counts in descending order.
     */
    public class ReversBean implements WritableComparable<ReversBean> {

        private String count;

        public ReversBean() {
        }

        public ReversBean(String count) {
            this.count = count;
        }

        @Override
        public int compareTo(ReversBean o) {
            // Compare numerically: as strings, "9" would sort above "10".
            // The arguments are swapped to get descending order.
            return Integer.compare(Integer.parseInt(o.count), Integer.parseInt(this.count));
        }

        @Override
        public void write(DataOutput dataOutput) throws IOException {
            dataOutput.writeUTF(count);
        }

        @Override
        public void readFields(DataInput dataInput) throws IOException {
            this.count = dataInput.readUTF();
        }

        public String getCount() { return count; }
        public void setCount(String count) { this.count = count; }
    }
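Since `ReversBean` carries the count as a `String`, the sort order depends on whether the comparison is textual or numeric. The following plain-Java sketch contrasts the two; `CountOrderingSketch` and its method names are hypothetical and exist only for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Contrasts descending order by String comparison with descending numeric order. */
class CountOrderingSketch {

    /** Descending order using plain String comparison. */
    static List<String> sortAsStrings(List<String> counts) {
        List<String> copy = new ArrayList<>(counts);
        copy.sort((a, b) -> b.compareTo(a));
        return copy;
    }

    /** Descending order after parsing each count as an int. */
    static List<String> sortAsNumbers(List<String> counts) {
        List<String> copy = new ArrayList<>(counts);
        copy.sort((a, b) -> Integer.compare(Integer.parseInt(b), Integer.parseInt(a)));
        return copy;
    }

    public static void main(String[] args) {
        List<String> counts = Arrays.asList("9", "10", "2", "100");
        System.out.println(sortAsStrings(counts)); // prints [9, 2, 100, 10]
        System.out.println(sortAsNumbers(counts)); // prints [100, 10, 9, 2]
    }
}
```

With the sample data every count is a single digit, so both orders agree; the difference only shows up once a URL reaches 10 or more distinct users.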

AllToOneGroupingComparator.java

    package log;

    import org.apache.hadoop.io.WritableComparable;
    import org.apache.hadoop.io.WritableComparator;

    /**
     * Created by mac on 17/11/25.
     * Grouping comparator that treats every key as equal, so that all records
     * of a partition reach a single reduce() call in sorted key order.
     */
    public class AllToOneGroupingComparator extends WritableComparator {

        protected AllToOneGroupingComparator() {
            super(ReversBean.class, true);
        }

        @Override
        public int compare(WritableComparable a, WritableComparable b) {
            return 0;
        }
    }

LoginlogFormatMP.java

    package com.cyf;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.Collections;
    import java.util.List;

    /**
     * Created by mac on 17/11/24.
     * Reshapes the login log into lines of the form user_ip_loginTime_logoutTime.
     */
    public class LoginlogFormatMP {

        public static class readFilesMapper extends Mapper<LongWritable, Text, Text, Text> {
            private final Text outKey = new Text();
            private final Text outValue = new Text();

            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Input line: user,ip,time,oper
                String[] line = value.toString().split(",");
                String user = line[0];
                String ip = line[1];
                String time = line[2];
                String opr = line[3];
                // Group all login/logout events of one user on one ip together.
                outKey.set(user + "_" + ip);
                outValue.set(time + "_" + opr);
                context.write(outKey, outValue);
            }
        }

        public static class timeConcatReducer extends Reducer<Text, Text, Text, NullWritable> {
            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                List<String> loginTimes = new ArrayList<String>();
                List<String> logoutTimes = new ArrayList<String>();
                for (Text value : values) {
                    String[] parts = value.toString().split("_");
                    String time = parts[0];
                    String opr = parts[1];
                    if (opr.equals("1")) {
                        loginTimes.add(time);
                    } else if (opr.equals("2")) {
                        logoutTimes.add(time);
                    }
                }
                Collections.sort(loginTimes);
                Collections.sort(logoutTimes);
                // Assumes login and logout records are complete, so the i-th login
                // pairs with the i-th logout.
                for (int i = 0; i < loginTimes.size(); i++) {
                    context.write(
                            new Text(key + "_" + loginTimes.get(i) + "_" + logoutTimes.get(i)),
                            NullWritable.get());
                }
            }
        }

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            job.setJarByClass(LoginlogFormatMP.class);
            job.setMapperClass(readFilesMapper.class);
            job.setReducerClass(timeConcatReducer.class);
            job.setInputFormatClass(TextInputFormat.class);
            job.setNumReduceTasks(1);
            job.setMapOutputKeyClass(Text.class);
            // The original called setMapOutputKeyClass twice; the second call
            // was meant to set the map output value class.
            job.setMapOutputValueClass(Text.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);
            FileInputFormat.addInputPath(job, new Path("/logs/input/login.log"));
            Path outPath = new Path("/logs/output/out");
            FileOutputFormat.setOutputPath(job, outPath);
            job.waitForCompletion(true);
        }
    }
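The reducer pairs the i-th login with the i-th logout, which silently relies on the "complete records" assumption. The plain-Java sketch below isolates that pairing step and adds a check that fails loudly when the counts differ; the check is an addition for illustration, not part of the original job, and `SessionPairingSketch` and `pairSessions` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Pairs login and logout events of one user_ip key into sessions. */
class SessionPairingSketch {

    /**
     * @param events strings of the form "time_oper", oper being 1 (login) or 2 (logout)
     * @return sessions of the form "loginTime_logoutTime", in chronological order
     */
    static List<String> pairSessions(List<String> events) {
        List<String> logins = new ArrayList<>();
        List<String> logouts = new ArrayList<>();
        for (String event : events) {
            String[] parts = event.split("_");
            if (parts[1].equals("1")) {
                logins.add(parts[0]);
            } else if (parts[1].equals("2")) {
                logouts.add(parts[0]);
            }
        }
        // Added safeguard: index-based pairing is only meaningful for complete logs.
        if (logins.size() != logouts.size()) {
            throw new IllegalStateException(
                    logins.size() + " logins but " + logouts.size() + " logouts");
        }
        Collections.sort(logins);
        Collections.sort(logouts);
        List<String> sessions = new ArrayList<>();
        for (int i = 0; i < logins.size(); i++) {
            sessions.add(logins.get(i) + "_" + logouts.get(i));
        }
        return sessions;
    }

    public static void main(String[] args) {
        // Events may arrive in any order; sorting restores the pairing.
        System.out.println(pairSessions(Arrays.asList(
                "2017-11-20 11:00_2", "2017-11-20 10:00_1")));
        // prints [2017-11-20 10:00_2017-11-20 11:00]
    }
}
```

In a real job, whether to throw, skip the key, or count the mismatch is a design choice that depends on how trustworthy the logs are.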

JoinWithIpMp.java

    package com.cyf;

    import log.IpJoinBean;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    /**
     * Created by mac on 17/11/25.
     * Joins the reshaped login log with the visit log by IP.
     */
    public class JoinWithIpMp {

        public static class readFilesMapper extends Mapper<LongWritable, Text, Text, IpJoinBean> {
            private String fileName;
            private final IpJoinBean outPutValue = new IpJoinBean();

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                // Each split belongs to one file; its name tells the two inputs apart.
                FileSplit input = (FileSplit) context.getInputSplit();
                fileName = input.getPath().getName();
            }

            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Use the ip as key so that records with the same ip from both logs
                // land in the same partition and group, then join them in reduce.
                if (fileName.startsWith("visit")) {
                    // Visit log line: ip,time,url
                    String[] parms = value.toString().split(",");
                    outPutValue.setIp(parms[0]);
                    outPutValue.setVisitTime(parms[1]);
                    outPutValue.setUrl(parms[2]);
                    outPutValue.setType("visit");
                } else {
                    // Reshaped login line: user_ip_loginTime_logoutTime
                    String[] parms = value.toString().split("_");
                    outPutValue.setUser(parms[0]);
                    outPutValue.setIp(parms[1]);
                    outPutValue.setLoginTime(parms[2]);
                    outPutValue.setLogoutTime(parms[3]);
                    outPutValue.setType("login");
                }
                context.write(new Text(outPutValue.getIp()), outPutValue);
            }
        }

        public static class countClicksReducer extends Reducer<Text, IpJoinBean, Text, NullWritable> {
            private final List<IpJoinBean> loginData = new ArrayList<IpJoinBean>();
            private final List<IpJoinBean> visitData = new ArrayList<IpJoinBean>();

            @Override
            protected void reduce(Text key, Iterable<IpJoinBean> values, Context context)
                    throws IOException, InterruptedException {
                for (IpJoinBean value : values) {
                    // Hadoop reuses the value object while iterating, so copy it.
                    IpJoinBean copy = new IpJoinBean(value.getIp(), value.getUser(), value.getUrl(),
                            value.getLoginTime(), value.getLogoutTime(), value.getVisitTime());
                    if (value.getType().equals("visit")) {
                        visitData.add(copy);
                    } else if (value.getType().equals("login")) {
                        loginData.add(copy);
                    }
                }
                // Emit ip_user_url for every visit whose time falls inside a login session.
                for (IpJoinBean visit : visitData) {
                    for (IpJoinBean login : loginData) {
                        if (visit.visitMatchLogin(login)) {
                            context.write(
                                    new Text(visit.getIp() + "_" + login.getUser() + "_" + visit.getUrl()),
                                    NullWritable.get());
                        }
                    }
                }
                loginData.clear();
                visitData.clear();
            }
        }

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            job.setJarByClass(JoinWithIpMp.class);
            job.setMapperClass(readFilesMapper.class);
            job.setReducerClass(countClicksReducer.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IpJoinBean.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);
            job.setNumReduceTasks(1);
            // Two inputs in one job: the output of LoginlogFormatMP and the raw visit log.
            Path[] inPaths = new Path[2];
            inPaths[0] = new Path("/logs/output/out/");
            inPaths[1] = new Path("/logs/input/visit.log");
            FileInputFormat.setInputPaths(job, inPaths);
            Path outPath = new Path("/logs/joinOut");
            FileOutputFormat.setOutputPath(job, outPath);
            job.waitForCompletion(true);
        }
    }

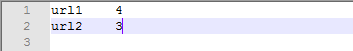
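The join condition in `visitMatchLogin` compares timestamps as strings, which is valid here because the fixed-width `yyyy-MM-dd HH:mm` format sorts chronologically. If the logs could contain other formats, parsing the timestamps first is a safer alternative. The sketch below shows that variant using `java.time`; it is not what the job above does, and `VisitWindowSketch` and `withinSession` are hypothetical names.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Time-window check on parsed timestamps instead of raw strings. */
class VisitWindowSketch {

    // Assumed format, taken from the sample data in this post.
    private static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm");

    /** True when visit lies between login and logout, both bounds inclusive. */
    static boolean withinSession(String visit, String login, String logout) {
        LocalDateTime v = LocalDateTime.parse(visit, FMT);
        LocalDateTime in = LocalDateTime.parse(login, FMT);
        LocalDateTime out = LocalDateTime.parse(logout, FMT);
        return !v.isBefore(in) && !v.isAfter(out);
    }

    public static void main(String[] args) {
        // tom is logged in on 192.168.1.11 from 10:00 to 11:00.
        System.out.println(withinSession(
                "2017-11-20 10:02", "2017-11-20 10:00", "2017-11-20 11:00")); // prints true
        System.out.println(withinSession(
                "2017-11-20 11:02", "2017-11-20 10:00", "2017-11-20 11:00")); // prints false
    }
}
```

Parsing also rejects malformed timestamps with a `DateTimeParseException`, whereas string comparison would quietly produce a wrong match.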

CountUVMP.java

    package com.cyf;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;
    import java.util.HashSet;
    import java.util.Set;

    /**
     * Created by mac on 17/11/25.
     * Counts the distinct visitors (UV) of each url.
     */
    public class CountUVMP {

        public static class readFilesMapper extends Mapper<LongWritable, Text, Text, Text> {
            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Input line from the join step: ip_user_url
                String[] line = value.toString().split("_");
                String user = line[1];
                String url = line[2];
                context.write(new Text(url), new Text(user));
            }
        }

        public static class timeConcatReducer extends Reducer<Text, Text, Text, NullWritable> {
            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                // A set drops duplicate users without the linear scan of List.contains.
                Set<String> users = new HashSet<String>();
                for (Text value : values) {
                    users.add(value.toString());
                }
                // Output line: url_count
                context.write(new Text(key + "_" + users.size()), NullWritable.get());
            }
        }

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            job.setJarByClass(CountUVMP.class);
            job.setMapperClass(readFilesMapper.class);
            job.setReducerClass(timeConcatReducer.class);
            job.setInputFormatClass(TextInputFormat.class);
            job.setNumReduceTasks(1);
            job.setMapOutputKeyClass(Text.class);
            // The original called setMapOutputKeyClass twice; the second call
            // was meant to set the map output value class.
            job.setMapOutputValueClass(Text.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);
            FileInputFormat.addInputPath(job, new Path("/logs/joinOut"));
            Path outPath = new Path("/logs/uvResult");
            FileOutputFormat.setOutputPath(job, outPath);
            job.waitForCompletion(true);
        }
    }
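The counting step has to treat a user as one visitor per URL no matter how many IPs or visits that user has. A minimal plain-Java sketch of that rule, operating on `ip_user_url` lines as produced by the join step, could look like this; `DistinctUserCountSketch` and `countDistinctUsers` are hypothetical names.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Counts distinct users per url from "ip_user_url" lines. */
class DistinctUserCountSketch {

    static Map<String, Integer> countDistinctUsers(List<String> joinedLines) {
        Map<String, Set<String>> usersByUrl = new TreeMap<>();
        for (String line : joinedLines) {
            String[] f = line.split("_");   // ip, user, url
            // The ip is ignored on purpose: the same user on two ips counts once.
            usersByUrl.computeIfAbsent(f[2], k -> new HashSet<>()).add(f[1]);
        }
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, Set<String>> e : usersByUrl.entrySet()) {
            counts.put(e.getKey(), e.getValue().size());
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> joined = Arrays.asList(
                "192.168.1.11_tom_url1",
                "192.168.1.14_tom_url1",   // same user, different ip
                "192.168.1.12_sua_url1",
                "192.168.1.11_tom_url2");
        System.out.println(countDistinctUsers(joined)); // prints {url1=2, url2=1}
    }
}
```

Note that splitting on `_` assumes user names and URLs never contain an underscore; real URLs often do, so a different separator would be needed in practice.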

Top10MP.java

    package com.cyf;

    import java.io.IOException;

    import log.AllToOneGroupingComparator;
    import log.ReversBean;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    /**
     * Created by mac on 17/11/25.
     * Finds the urls with the highest UV.
     */
    public class Top10MP {

        public static class TopOneMap extends Mapper<LongWritable, Text, ReversBean, Text> {
            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Input line: url_count. Use the visitor count as the key so that
                // the shuffle sorts the records in descending order of count.
                String[] values = value.toString().split("_");
                String count = values[1];
                context.write(new ReversBean(count), value);
            }
        }

        public static class TopReduce extends Reducer<ReversBean, Text, Text, IntWritable> {
            private int emitted = 0;
            private int top = 0;

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                Configuration conf = context.getConfiguration();
                top = Integer.parseInt(conf.get("top"));
            }

            // All keys in a partition form a single group, sorted by UV count in
            // descending order; taking the first `top` values of the group is enough.
            @Override
            protected void reduce(ReversBean key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                for (Text value : values) {
                    if (emitted >= top) {
                        return;
                    }
                    emitted++;
                    String[] countresults = value.toString().split("_");
                    String url = countresults[0];
                    int uv = Integer.parseInt(countresults[1]);
                    context.write(new Text(url), new IntWritable(uv));
                }
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // The task asks for the top 10. The original set this to "2", which is
            // handy when testing against the three-url sample data.
            conf.set("top", "10");
            /* Optionally delete an existing output directory first:
            FileSystem fileSystem = FileSystem.get(conf);
            Path dr = new Path("/Users/mac/Test/uvTop10");
            if (fileSystem.exists(dr)) {
                fileSystem.delete(dr, true);
            }*/
            Job job = Job.getInstance(conf);
            job.setJarByClass(Top10MP.class);
            job.setMapperClass(TopOneMap.class);
            job.setReducerClass(TopReduce.class);
            job.setMapOutputKeyClass(ReversBean.class);
            job.setMapOutputValueClass(Text.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            job.setGroupingComparatorClass(AllToOneGroupingComparator.class);
            FileInputFormat.setInputPaths(job, new Path("/logs/uvResult/"));
            FileOutputFormat.setOutputPath(job, new Path("/logs/uvTop10"));
            // Depends on the data volume: if it is too large, split the work into two
            // passes, the first with as many partitions as practical and the second
            // with a single partition to obtain the global top N.
            job.setNumReduceTasks(1);
            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }
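The job above gets the top N by fully sorting every `url_count` record and reading off the first few. A different technique, not used in this post, is to keep only N candidates in a bounded min-heap, which avoids sorting everything and suits the "local top N per partition, then global top N" split mentioned in the code comment. The plain-Java sketch below illustrates it; `TopNSketch`, `topN` and `countOf` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Top-N selection over "url_count" lines using a bounded min-heap. */
class TopNSketch {

    /** Extracts the numeric count after the last underscore. */
    static int countOf(String line) {
        return Integer.parseInt(line.substring(line.lastIndexOf('_') + 1));
    }

    /** Returns at most n lines with the largest counts, largest first. */
    static List<String> topN(List<String> lines, int n) {
        Comparator<String> byCount = Comparator.comparingInt(TopNSketch::countOf);
        // Min-heap: the smallest of the current candidates sits at the head.
        PriorityQueue<String> heap = new PriorityQueue<>(byCount);
        for (String line : lines) {
            heap.add(line);
            if (heap.size() > n) {
                heap.poll();    // evict the smallest so at most n remain
            }
        }
        List<String> result = new ArrayList<>(heap);
        result.sort(byCount.reversed());
        return result;
    }

    public static void main(String[] args) {
        List<String> uv = Arrays.asList("url3_2", "url1_4", "url2_3");
        System.out.println(topN(uv, 2)); // prints [url1_4, url2_3]
    }
}
```

The heap never holds more than n + 1 entries, so memory stays bounded by N rather than by the number of URLs; the order among lines with equal counts is unspecified.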

Modify pom.xml: set mainClass to the driver class of the job being built, one of:

<mainClass>com.cyf.LoginlogFormatMP</mainClass>

<mainClass>com.cyf.JoinWithIpMp</mainClass>

<mainClass>com.cyf.CountUVMP</mainClass>

<mainClass>com.cyf.Top10MP</mainClass>

Run commands

hadoop jar TwoLog-1.0.jar com.cyf.LoginlogFormatMP

hadoop jar TwoLog-2.0.jar com.cyf.JoinWithIpMp

hadoop jar TwoLog-3.0.jar com.cyf.CountUVMP

hadoop jar TwoLog-4.0.jar com.cyf.Top10MP