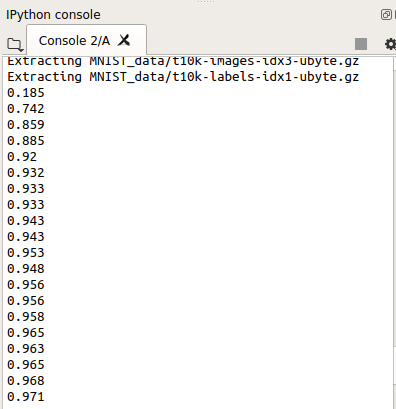

CNN implementation

    #!/usr/bin/env python2
    # -*- coding: utf-8 -*-
    """
    Created on Mon Apr  8 02:46:09 2019

    @author: xiexj
    """
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)


    def compute_accuracy(v_xs, v_ys):
        # global prediction
        y_pre = sess.run(prediction, feed_dict={xs: v_xs, keep_prob: 1})
        correct_prediction = tf.equal(tf.argmax(y_pre, 1), tf.argmax(v_ys, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        result = sess.run(accuracy, feed_dict={xs: v_xs, ys: v_ys, keep_prob: 1})
        return result


    def weight_variable(shape):
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)


    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)


    def conv2d(x, W):
        # stride 1 in every dimension; SAME padding keeps the spatial size
        return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


    def max_pooling_2x2(x):
        # 2x2 window with stride 2 halves the spatial size
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


    # define placeholder for inputs to network
    xs = tf.placeholder(tf.float32, [None, 784])
    ys = tf.placeholder(tf.float32, [None, 10])
    keep_prob = tf.placeholder(tf.float32)
    x_image = tf.reshape(xs, [-1, 28, 28, 1])  # [n_samples, 28,28,1]

    ## conv1 layer ##
    W_conv1 = weight_variable([5, 5, 1, 32])
    b_conv1 = bias_variable([32])
    h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
    h_pool1 = max_pooling_2x2(h_conv1)

    ## conv2 layer ##
    W_conv2 = weight_variable([5, 5, 32, 64])
    b_conv2 = bias_variable([64])
    h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
    h_pool2 = max_pooling_2x2(h_conv2)

    ## fc1 layer ##
    W_fc1 = weight_variable([7*7*64, 1024])
    b_fc1 = bias_variable([1024])
    h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
    h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
    h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

    ## fc2 layer ##
    W_fc2 = weight_variable([1024, 10])
    b_fc2 = bias_variable([10])
    prediction = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

    # the error between prediction and real data
    cross_entropy = tf.reduce_mean(
        -tf.reduce_sum(ys * tf.log(prediction), reduction_indices=[1]))
    train_step = tf.train.AdamOptimizer(0.0001).minimize(cross_entropy)

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        for i in range(1000):
            batch_xs, batch_ys = mnist.train.next_batch(100)
            sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys, keep_prob: 0.5})
            if i % 50 == 0:
                print(compute_accuracy(mnist.test.images[:1000], mnist.test.labels[:1000]))
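The `7*7*64` input size of the first fully connected layer follows from the layer settings in the CNN code: with `padding='SAME'`, each spatial dimension of the output is ceil(input / stride), so the stride-1 convolutions keep the size and each 2x2 pooling with stride 2 halves it. A minimal sketch of that arithmetic in plain Python (no TensorFlow needed); the helper name `same_padding_output` is made up for illustration:

```python
import math


def same_padding_output(size, stride):
    """Spatial output size under 'SAME' padding: ceil(size / stride)."""
    return int(math.ceil(size / float(stride)))


size = 28    # MNIST images are 28x28
shapes = []  # (height, width, channels) after each conv + pool stage
for out_channels in (32, 64):
    size = same_padding_output(size, 1)  # 5x5 convolution, stride 1, SAME
    size = same_padding_output(size, 2)  # 2x2 max pooling, stride 2, SAME
    shapes.append((size, size, out_channels))

height, width, channels = shapes[-1]
flat_features = height * width * channels  # length of each row in h_pool2_flat
print(shapes, flat_features)
```

The last tuple is the shape of `h_pool2` per sample, and `flat_features` is the first dimension of `W_fc1`.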

Saving and restoring with Saver

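In the workflow used here, only the Variables are saved and restored, not the network structure, so the structure has to be defined again before the saved Variables are loaded into it and training resumes.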

    import tensorflow as tf
    import numpy as np

    W = tf.Variable([[1, 2, 3], [3, 4, 5]], dtype=tf.float32, name='weight')
    b = tf.Variable([[1, 2, 3]], dtype=tf.float32, name='biases')

    init = tf.global_variables_initializer()
    saver = tf.train.Saver()

    with tf.Session() as sess:
        sess.run(init)
        save_path = saver.save(sess, "my_net/save_net.ckpt")
        print("Save to path:", save_path)

    import tensorflow as tf
    import numpy as np

    # restore variables
    tf.reset_default_graph()
    W = tf.Variable(np.arange(6).reshape((2, 3)), dtype=tf.float32, name='weight')
    b = tf.Variable(np.arange(3).reshape((1, 3)), dtype=tf.float32, name='biases')

    saver = tf.train.Saver()

    with tf.Session() as sess:
        saver.restore(sess, "my_net/save_net.ckpt")
        print("weight:", sess.run(W))
        print("biases:", sess.run(b))
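The restore script depends on the redefined variables having the same names and shapes as the saved ones, because the saved values are matched to variables by name. The following is a toy model of that idea in plain Python, not TensorFlow code: the functions `save_variables` and `restore_variables` and the dict-based "checkpoint" are hypothetical stand-ins chosen only for illustration.

```python
# Toy model of a name-keyed checkpoint. This is NOT how TensorFlow stores data;
# it only illustrates "redefine the structure, then load values by name".

def save_variables(variables):
    """Return a 'checkpoint': a dict mapping variable name -> copy of its values."""
    return {name: [row[:] for row in value] for name, value in variables.items()}


def restore_variables(checkpoint, variables):
    """Overwrite each variable in place with the checkpoint entry of the same name."""
    for name, value in variables.items():
        if name not in checkpoint:
            raise KeyError('Tensor name "%s" not found in checkpoint' % name)
        saved = checkpoint[name]
        if (len(saved), len(saved[0])) != (len(value), len(value[0])):
            raise ValueError("shape mismatch for %s" % name)
        value[:] = [row[:] for row in saved]


trained = {"weight": [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]],
           "biases": [[1.0, 2.0, 3.0]]}
checkpoint = save_variables(trained)

# Redefine the structure with placeholder values of the same shape, then restore.
redefined = {"weight": [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]],
             "biases": [[0.0, 1.0, 2.0]]}
restore_variables(checkpoint, redefined)
print(redefined["weight"])
print(redefined["biases"])
```

In this toy, a variable whose name is missing from the checkpoint raises an error instead of silently keeping its placeholder values.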

Writing the code exactly as in the tutorial produces the following error: NotFoundError: Tensor name "weight_1" not found in checkpoint files mynet/save_net.ckpt

Fix: add one line before the variables are redefined: tf.reset_default_graph()  # clears the default graph stack and resets the global default graph

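Cause:

The real cause is that my code ran the save and the load one after the other in the same session of the interpreter, so the following was defined twice: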

W = tf.Variable(xxx,name="weight")

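This amounts to creating a variable with name = "weight" twice in the same TensorFlow graph. The second (n-th) one actually gets the name "weight_1" ("weight_n-1"), so on restore the checkpoint is searched for "weight_n-1" rather than "weight", and the lookup fails.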
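The renaming can be mimicked with a few lines of plain Python. This is a simplified toy, not TensorFlow's implementation: the class `ToyGraph` and its method `unique_name` are invented for illustration, and creating a fresh `ToyGraph` plays the role that tf.reset_default_graph() plays in the restore script.

```python
class ToyGraph(object):
    """Toy stand-in for a graph that hands out unique names (an assumption,
    simplified; real TensorFlow name handling covers more cases)."""

    def __init__(self):
        self._names_in_use = {}  # requested name -> how many times it was requested

    def unique_name(self, name):
        count = self._names_in_use.get(name, 0)
        self._names_in_use[name] = count + 1
        # First request keeps the name; later requests get a numeric suffix.
        return name if count == 0 else "%s_%d" % (name, count)


graph = ToyGraph()
first = graph.unique_name("weight")   # defined by the save code
second = graph.unique_name("weight")  # defined again by the restore code
third = graph.unique_name("weight")   # a third run in the same kernel

graph = ToyGraph()                    # start over with an empty graph
after_reset = graph.unique_name("weight")

print(first, second, third, after_reset)
```

Only the first and the post-reset definitions carry the plain name "weight", which is the name stored when the checkpoint was written.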

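In normal use a model is not loaded immediately after it is saved (or within the same program), so the problem does not arise. Alternatively, restart the kernel (in Spyder) after saving and then load the parameters.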

Reference blog post: Tensorflow Saver & restore and the error NotFoundError: "x_x" not found in checkpoint