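Because OpenShift 4.1 discourages logging in to the cluster hosts directly, much of the day-to-day work has to be done from an external client VM. Colleagues who run RHEL worker nodes can, of course, keep working the way they are used to.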

Here are some problems I run into regularly:

-

How to find the password

When a 4.1 cluster finishes installing, the installer prints something like this:

    INFO Creating infrastructure resources...
    *********************************************************************************************
    INFO Waiting up to 30m0s for the Kubernetes API at https://api.cluster-8447.sandbox.opentlc.com:6443...
    INFO API v1.13.4+3a25c9b up
    INFO Waiting up to 30m0s for bootstrapping to complete...
    INFO Destroying the bootstrap resources...
    INFO Waiting up to 30m0s for the cluster at https://api.cluster.sandbox.opentlc.com:6443 to initialize...
    INFO Waiting up to 10m0s for the openshift-console route to be created...
    INFO Install complete!
    INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/root/cluster/auth/kubeconfig'
    INFO Access the OpenShift web-console here: https://console-openshift-console.apps.cluster.sandbox.opentlc.com
    INFO Login to the console with user: kubeadmin, password: TyCzM-ShJPQ-cgepT-dkDwq

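Make sure you copy that password somewhere... And if you didn't, where else can you find it?

Look in the installation directory (the one named after the cluster): the file .openshift_install.log contains it.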
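Rather than scrolling through the log by hand, a grep does the job. This is a minimal sketch, assuming the log records the same "password: <value>" text the installer printed above and that the password consists of letters, digits and hyphens like the example; find_kubeadmin_password is a hypothetical helper name, not part of any OpenShift tooling.

```shell
# Hypothetical helper: print the kubeadmin password recorded in the installer
# log. $1 is the installation directory (the directory named after the cluster).
find_kubeadmin_password() {
    # -o prints only the matching text; the character class stops at the
    # closing quote that the log puts around its msg field.
    # If the directory was used for several installs, keep the last match.
    grep -o 'password: [A-Za-z0-9-]*' "$1/.openshift_install.log" \
        | tail -n 1 \
        | sed 's/^password: //'
}

# Example: find_kubeadmin_password "$HOME/cluster-${GUID}"
```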

-

Setting up cluster access

export KUBECONFIG=$HOME/cluster-${GUID}/auth/kubeconfig

echo "export KUBECONFIG=$HOME/cluster-${GUID}/auth/kubeconfig" >>$HOME/.bashrc
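Running the echo line above a second time appends a duplicate export to .bashrc. A small sketch of an idempotent variant; persist_kubeconfig is a hypothetical name, and the rc file defaults to ~/.bashrc as in the original command.

```shell
# Hypothetical helper: export KUBECONFIG for the current shell and persist it
# in an rc file only once, so re-running the setup adds no duplicate lines.
# $1 = kubeconfig path, $2 = rc file (defaults to ~/.bashrc).
persist_kubeconfig() {
    kc="$1"
    rc="${2:-$HOME/.bashrc}"
    line="export KUBECONFIG=$kc"
    export KUBECONFIG="$kc"
    # Make sure the file exists so grep does not fail on a missing file.
    touch "$rc"
    # -q quiet, -x whole-line match, -F fixed string (no regex).
    grep -qxF "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
}

# Example: persist_kubeconfig "$HOME/cluster-${GUID}/auth/kubeconfig"
```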

-

Pushing images to the internal registry

Expose the image-registry route. By default there is no route; only the image-registry.openshift-image-registry.svc service is exposed:

    [root@clientvm 0 ~]# oc get svc -n openshift-image-registry
    NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    image-registry   ClusterIP   172.30.134.180   <none>        5000/TCP   5h2m

oc patch configs.imageregistry.operator.openshift.io/cluster --patch '{"spec":{"defaultRoute":true}}' --type=merge

Logging in with Podman

oc login -u kubeadmin

HOST=$(oc get route default-route -n openshift-image-registry --template='{{ .spec.host }}')

podman login -u kubeadmin -p $(oc whoami -t) --tls-verify=false $HOST

I threw together a quick Dockerfile and built it:

podman build -t default-route-openshift-image-registry.apps.cluster-8447.sandbox452.opentlc.com/myproject/mytomcat:slim .

    [root@clientvm 127 ~/cluster-8447]# podman images
    REPOSITORY                                                                                          TAG     IMAGE ID       CREATED              SIZE
    default-route-openshift-image-registry.apps.cluster-8447.sandbox452.opentlc.com/myproject/mytomcat  slim    ec32b2cdbea2   About a minute ago   518 MB
    <none>                                                                                              <none>  0426c1689356   5 minutes ago        500 MB
    docker.io/library/openjdk                                                                           8-jdk   08ded5f856cc   6 days ago           500 MB
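The tag decides where a pushed image lands: after the route host comes the project (myproject here), then the image name and tag. A tiny sketch that composes such a reference; external_image_ref is a hypothetical helper, and the commented example assumes the route and the podman login from the previous steps.

```shell
# Hypothetical helper: compose the reference podman must tag an image with so
# that a push through the exposed route ends up as <project>/<image>:<tag>.
# $1 = route host, $2 = project (namespace), $3 = image name, $4 = tag.
external_image_ref() {
    printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Example (needs the exposed route and a logged-in oc session):
#   HOST=$(oc get route default-route -n openshift-image-registry --template='{{ .spec.host }}')
#   podman build -t "$(external_image_ref "$HOST" myproject mytomcat slim)" .
```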

Then push the image. Remember to pass --tls-verify=false:

    [root@clientvm 125 ~]# podman push default-route-openshift-image-registry.apps.cluster-d60b.sandbox509.opentlc.com/myproject/mytomcat:slim --tls-verify=false
    Getting image source signatures
    Copying blob ea23cfa0bea9 done
    Copying blob 2bf534399aca done
    Copying blob eb25e0278d41 done
    Copying blob 46ff59048438 done
    Copying blob f613cd1e50cc done
    Copying blob 1c95c77433e8 done
    Copying blob 6d520b2e1077 done
    Copying config 7670309228 done
    Writing manifest to image destination
    Copying config 7670309228 done
    Writing manifest to image destination
    Storing signatures
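Build and push can be wrapped together. This is a minimal sketch, not a tested script: build_and_push and run are hypothetical names, and DRY_RUN=1 makes it print the podman commands instead of executing them, which is handy for checking the image reference first.

```shell
# Hypothetical wrapper: print the command when DRY_RUN is set, run it otherwise.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: build the Dockerfile in $2 (default: current directory)
# and push it through the exposed registry route.
# $1 = full image reference (route-host/project/image:tag).
build_and_push() {
    ref="$1"
    context="${2:-.}"
    run podman build -t "$ref" "$context" || return 1
    # --tls-verify=false as in the post; assumption: the client VM does not
    # trust the certificate served on the registry route.
    run podman push --tls-verify=false "$ref"
}

# Example: build_and_push "$HOST/myproject/mytomcat:slim" .
```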

After the push, an image stream shows up in the project.

Creating the application

    [root@clientvm 0 ~/cluster-8447]# oc new-app mytomcat:slim
    --> Found image ec32b2c (6 minutes old) in image stream "myproject/mytomcat" under tag "slim" for "mytomcat:slim"
        * This image will be deployed in deployment config "mytomcat"
        * Port 8080/tcp will be load balanced by service "mytomcat"
          * Other containers can access this service through the hostname "mytomcat"
        * WARNING: Image "myproject/mytomcat:slim" runs as the 'root' user which may not be permitted by your cluster administrator
    --> Creating resources ...
        deploymentconfig.apps.openshift.io "mytomcat" created
        service "mytomcat" created
    --> Success
        Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:
         'oc expose svc/mytomcat'
        Run 'oc status' to view your app.

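Deploying with oc new-app works directly, but if the image field in deployment.yaml is written as just myproject/mytomcat, the deployment fails, because that short name is not resolved against the internal registry.

If you write your own template or deploy from a YAML file, the image field must use the full path, for example: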

image-registry.openshift-image-registry.svc:5000/openshift/mytomcat:8-slim
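For a YAML-based deployment, here is a minimal sketch that prints a Deployment using the full internal path and can be piped to oc apply. It assumes the image stream lives in the given project, that the pod's service account may pull from it, and that the app listens on 8080 as the new-app output above reports; render_deployment is a hypothetical helper.

```shell
# Hypothetical helper: print a minimal Deployment whose image field uses the
# full in-cluster registry path (service DNS name, port 5000, project/image:tag).
# $1 = project holding the image stream, $2 = image name, $3 = tag.
render_deployment() {
    image="image-registry.openshift-image-registry.svc:5000/$1/$2:$3"
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: $2
  template:
    metadata:
      labels:
        app: $2
    spec:
      containers:
      - name: $2
        image: $image
        ports:
        - containerPort: 8080
EOF
}

# Example: render_deployment myproject mytomcat slim | oc apply -n myproject -f -
```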

-

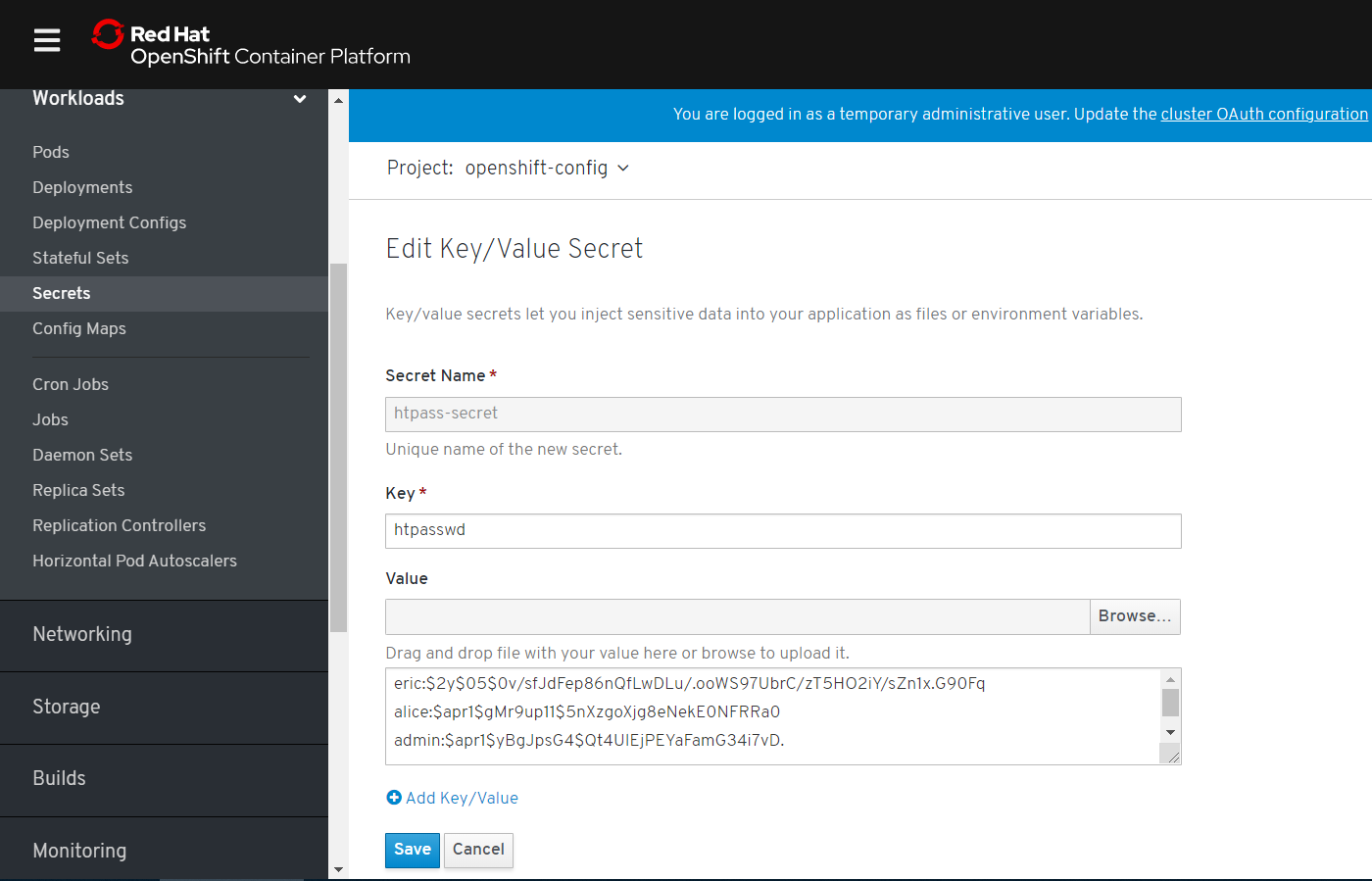

Adding users

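User authentication in OpenShift 4.1 is also implemented with an Operator. The big difference from 3.11 is that 3.11 configured it in master-config.yaml, with HTPasswd as the default, whereas 4.1 comes with no identity provider at all; one has to be configured through the authentication CR (the cluster OAuth resource).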

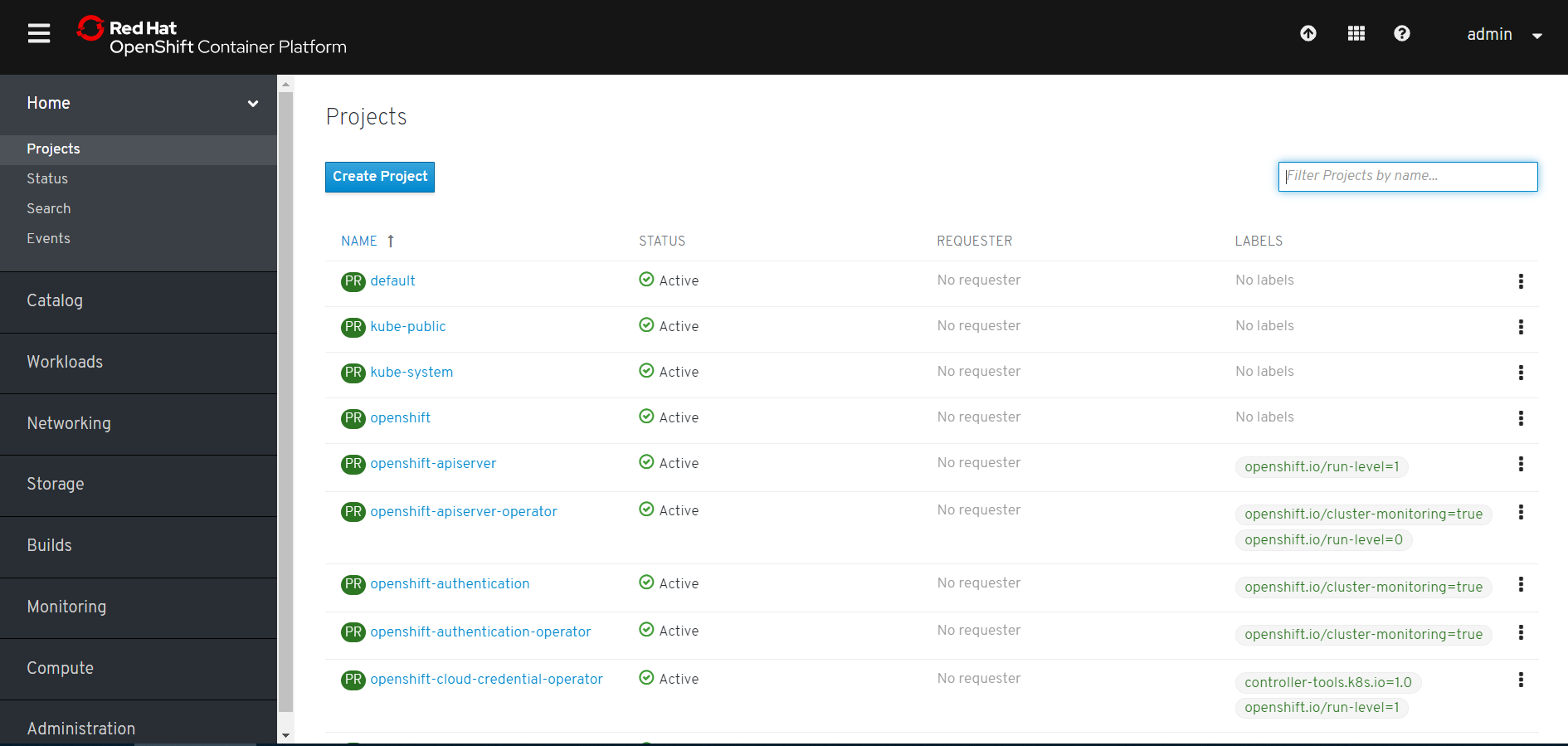

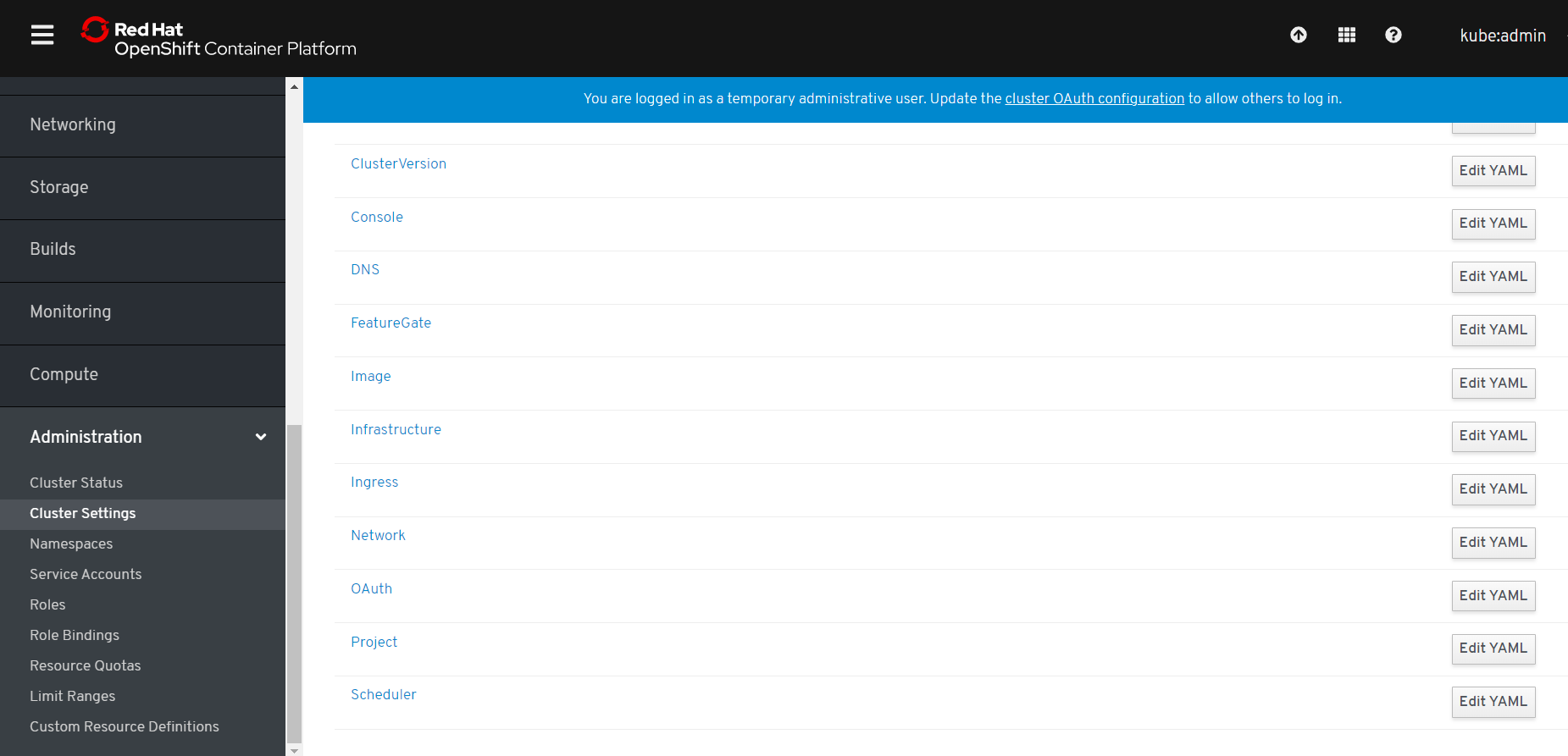

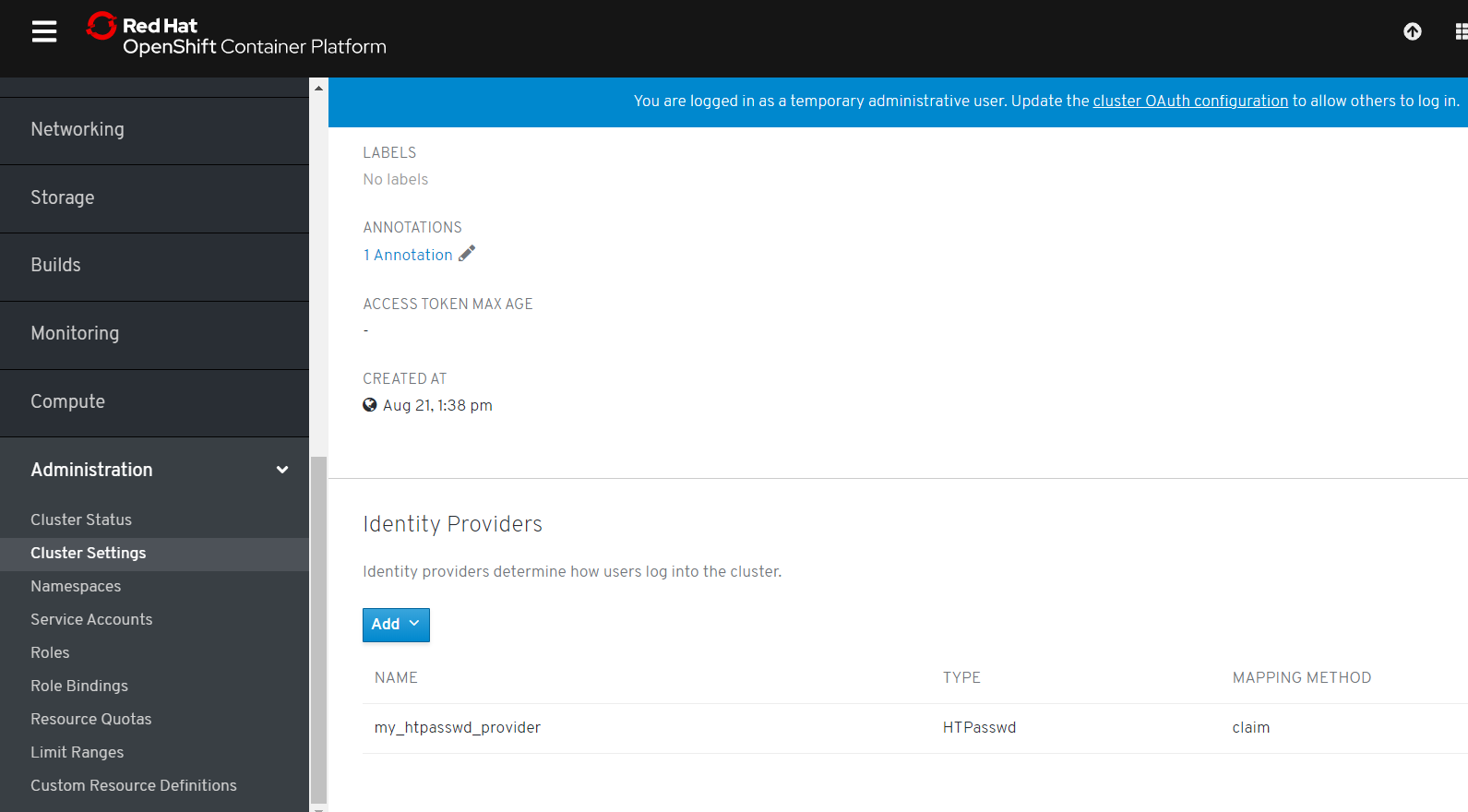

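Under Cluster Settings > Global Configuration you can find the OAuth entry.

Click it and you will see that the list of identity providers is empty.

By default only kubeadmin can log in. To add users, you first have to configure an identity provider in this CR (Custom Resource).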

If we stick with the familiar HTPasswd approach, the steps are:

1. On the client, create the users.htpasswd file and write the first user into it:

htpasswd -c -B -b users.htpasswd admin welcome1

To add more users, run:

htpasswd -b users.htpasswd eric welcome1

htpasswd -b users.htpasswd alice welcome1

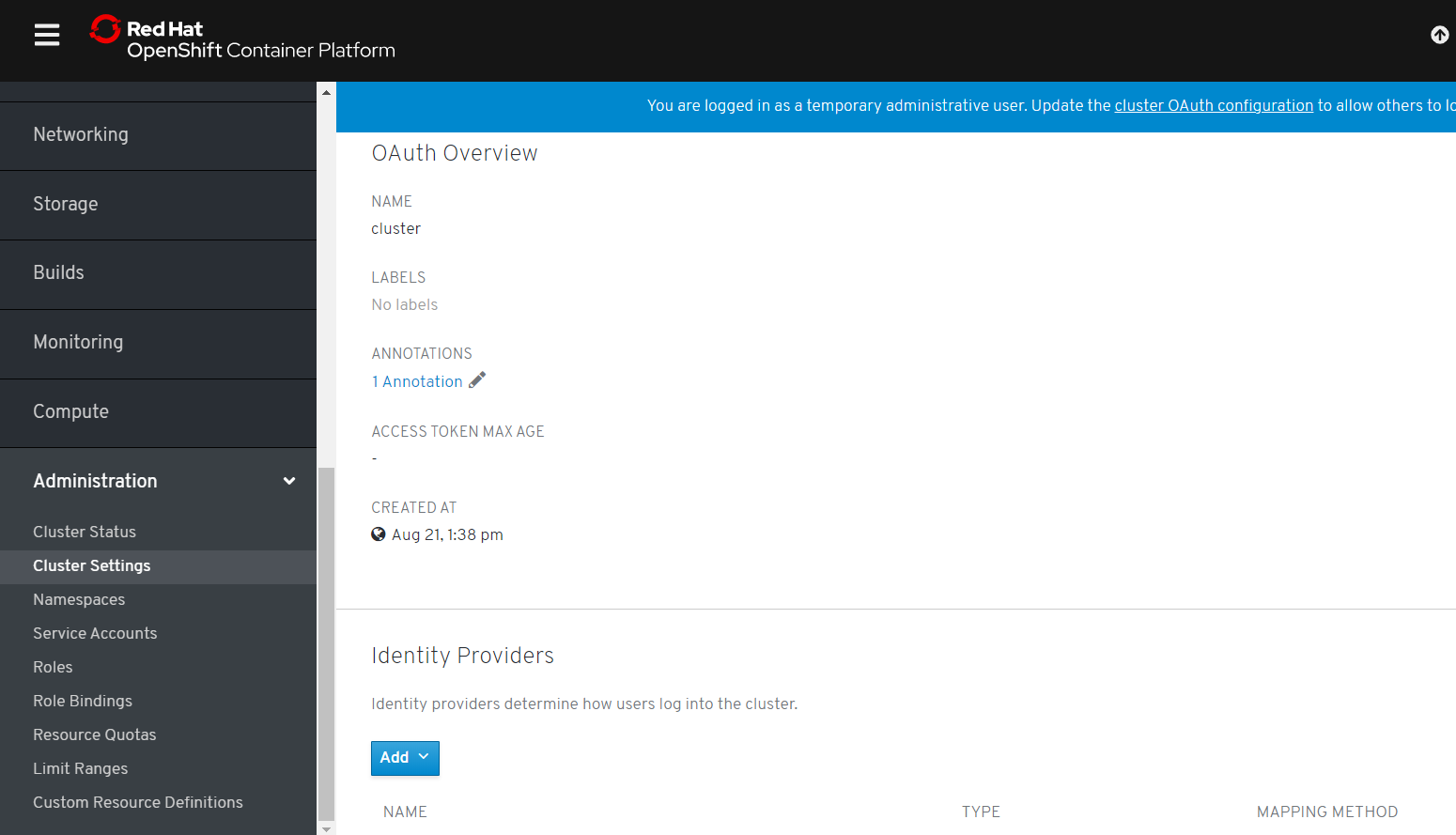
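With more users, a loop saves typing. A minimal sketch, assuming htpasswd (from the httpd-tools package on RHEL) is installed; it passes -c only when the file does not exist yet, so existing entries are kept, and uses -B (bcrypt) for every user, unlike the commands above, which use it only for the first. add_htpasswd_users is a hypothetical name, and a shared password like welcome1 is only acceptable in a lab.

```shell
# Hypothetical helper: add several users to an htpasswd file.
# $1 = file, $2 = password shared by all users (lab use only), rest = user names.
# Set DRY_RUN=1 to print the commands instead of running them.
add_htpasswd_users() {
    file="$1"
    pass="$2"
    shift 2
    # -c (create) would overwrite an existing file, so use it only if missing.
    new=""
    [ -e "$file" ] || new="-c"
    for user in "$@"; do
        if [ -n "${DRY_RUN:-}" ]; then
            echo htpasswd $new -B -b "$file" "$user" "$pass"
        else
            # -B: bcrypt hash, -b: take the password from the command line.
            htpasswd $new -B -b "$file" "$user" "$pass" || return 1
        fi
        # Only the first command may create the file.
        new=""
    done
}

# Example: add_htpasswd_users /root/users.htpasswd welcome1 admin eric alice
```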

2. Create a secret in the openshift-config namespace:

oc create secret generic htpass-secret --from-file=htpasswd=/root/users.htpasswd -n openshift-config

If you add more users to the file later, update the secret with:

oc create secret generic htpass-secret --from-file=htpasswd=/root/users.htpasswd -n openshift-config --dry-run -o yaml | oc apply -f -

Afterwards the secret shows up in openshift-config; choose Edit Secret to see the user names it contains.

3. Update the CR by writing a YAML file (this step can also be done in the web console):

    [root@clientvm 0 ~]# cat htpass.yaml
    apiVersion: config.openshift.io/v1
    kind: OAuth
    metadata:
      name: cluster
    spec:
      identityProviders:
      - name: my_htpasswd_provider
        mappingMethod: claim
        type: HTPasswd
        htpasswd:
          fileData:
            name: htpass-secret

The cluster CR already exists, so update it with apply (the warning in the output is harmless):

    [root@clientvm 0 ~]# oc apply -f htpass.yaml
    Warning: oc apply should be used on resource created by either oc create --save-config or oc apply
    oauth.config.openshift.io/cluster configured

Afterwards OAuth lists my_htpasswd_provider as an identity provider.

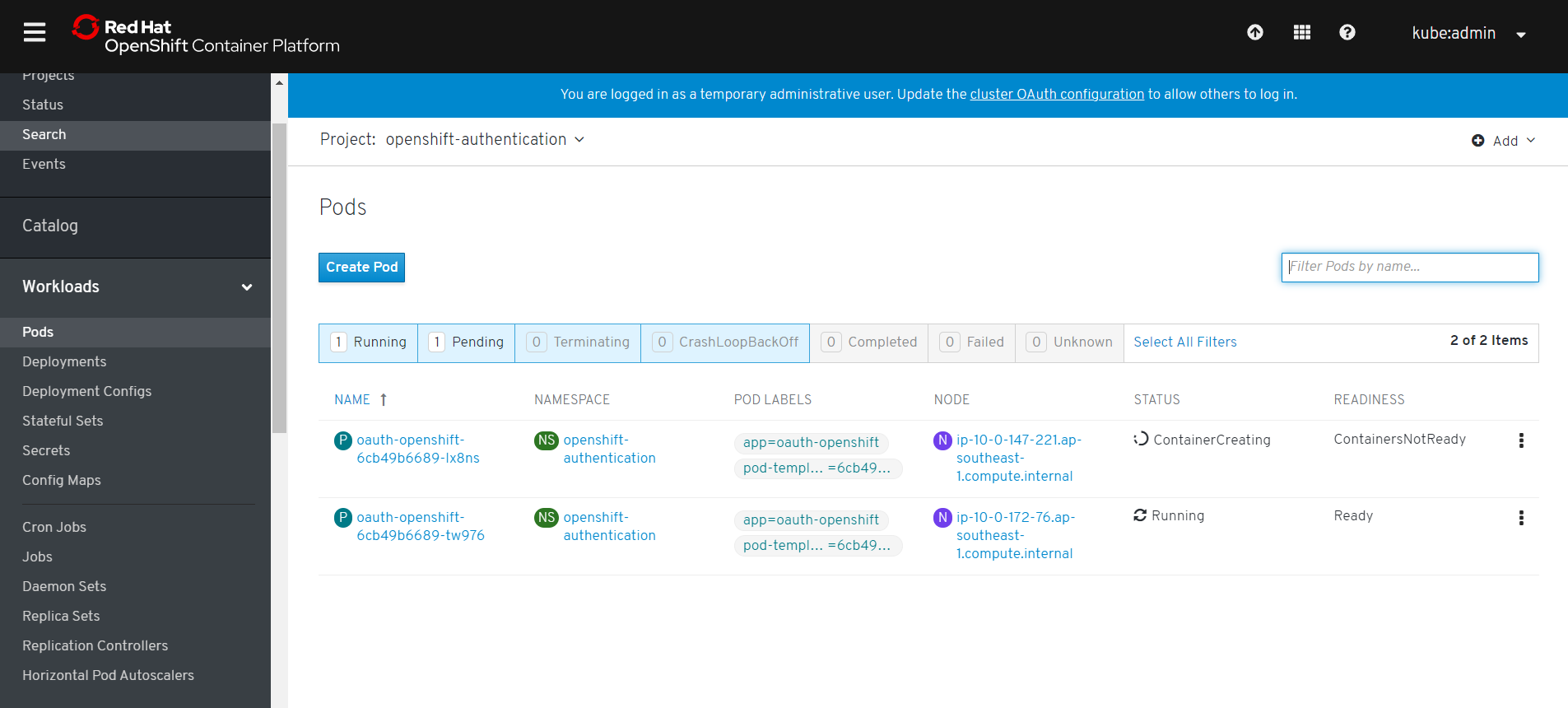
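If you would rather not keep htpass.yaml around, the same CR can be generated on the fly and piped to oc apply. A minimal sketch; render_oauth_htpasswd is a hypothetical helper whose two parameters are simply the provider and secret names used above.

```shell
# Hypothetical helper: print the cluster OAuth CR with one HTPasswd provider.
# $1 = identity provider name, $2 = secret name in openshift-config.
render_oauth_htpasswd() {
    cat <<EOF
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster
spec:
  identityProviders:
  - name: $1
    mappingMethod: claim
    type: HTPasswd
    htpasswd:
      fileData:
        name: $2
EOF
}

# Example: render_oauth_htpasswd my_htpasswd_provider htpass-secret | oc apply -f -
```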

Check the status of the pods in the openshift-authentication project. If they have not been redeployed with the new configuration, delete them manually so they reload it.
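The check and the manual restart look roughly like this. A sketch, assuming it is acceptable in your environment to delete every pod in openshift-authentication (their owning Deployment recreates them); restart_oauth_pods is a hypothetical name and DRY_RUN=1 only prints the commands.

```shell
# Hypothetical helper: show the OAuth server pods, then delete them so they
# come back up with the updated identity provider configuration.
# Set DRY_RUN=1 to print the commands instead of running them.
restart_oauth_pods() {
    ns=openshift-authentication
    if [ -n "${DRY_RUN:-}" ]; then
        echo "oc get pods -n $ns"
        echo "oc delete pods --all -n $ns"
        return 0
    fi
    oc get pods -n "$ns"
    oc delete pods --all -n "$ns"
}
```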

Run oc get users and... nothing at all? Here is a gotcha: a user only appears after logging in for the first time, so just log in:

    [root@clientvm 0 ~]# oc login -u eric
    Authentication required for https://api.cluster-8447.sandbox452.opentlc.com:6443 (openshift)
    Username: eric
    Password:
    Login successful.
    You don't have any projects. You can try to create a new project, by running
        oc new-project <projectname>

Switch back to the kubeadmin user and the users are listed:

    [root@clientvm 0 ~]# oc get users
    NAME    UID                                    FULL NAME   IDENTITIES
    admin   463b2706-c3d9-11e9-b6ad-0a580a81001f               my_htpasswd_provider:admin
    alice   d73b3e6f-c3db-11e9-ba6d-0a580a80001a               my_htpasswd_provider:alice
    eric    4c8b7952-c3de-11e9-ab5a-0a580a82001b               my_htpasswd_provider:eric

Granting cluster-admin

oc adm policy add-cluster-role-to-user cluster-admin admin
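To grant several users at once and confirm the result, a sketch like the following can help. It assumes you are logged in as kubeadmin, which as cluster-admin may impersonate other users for oc auth can-i --as; grant_cluster_admin and run are hypothetical names, and DRY_RUN=1 only prints the commands.

```shell
# Hypothetical wrapper: print the command when DRY_RUN is set, run it otherwise.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: bind cluster-admin to each user given as an argument,
# then ask the API whether that user can now do everything ('*' on '*').
grant_cluster_admin() {
    for user in "$@"; do
        run oc adm policy add-cluster-role-to-user cluster-admin "$user" || return 1
        # Impersonate the user to check that the grant took effect.
        run oc auth can-i '*' '*' --as="$user"
    done
}

# Example: grant_cluster_admin admin
```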

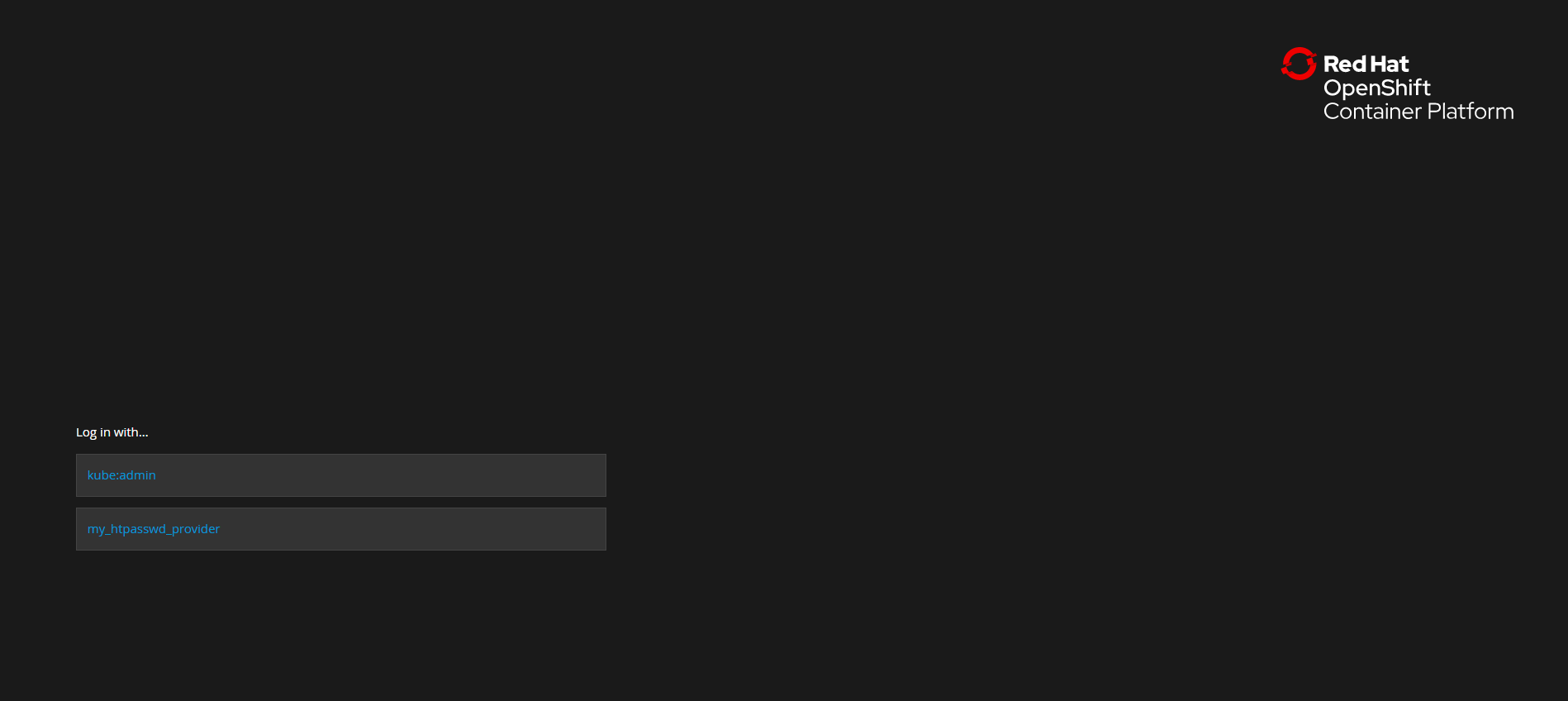

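Logging out of the console

On the login page, click my_htpasswd_provider. Be sure to pick this one: the option above it will not let these users log in. Then log in with the user name and password.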