This article was written almost 2 years ago; its content may not reflect the latest state of the code which is currently available.

Please check https://net7mma.codeplex.com/ for the latest information and downloads.

https://net7mma.codeplex.com/SourceControl/latest

Preface

I will say before I start the main article that Audio data support seems to be fairly well covered in .NET. If a developer wanted to perform various Audio tasks such as Decoding, Encoding and Transcoding, there are definitely enough resources, both information and libraries with complete implementations, to do that. Things get utterly confusing when you start to deal with video, which is the real problem domain, both encoding and decoding it. This is for multiple reasons, with the biggest myth being that managed code is just not fast enough for working with video data.

The fact is that each codec is vastly different from one another and the compression / decompression utilized in the codec is usually encumbered by some type of patent. This unfortunately means someone has to pay royalties for the code in use in that codec. The other problem is that the decoding of video is usually standardized, but the encoding is not. This means you are free to encode in any way as long as the end result conforms to the specification for decoding the stream. This yields a lot of freedom to developers, but also provides such individuals with many ways to encode the data. However, some are more efficient than others and results are variable in amount of time used as well as the results produced.

There are a few libraries that can help, such as VLC or FFMPEG, both of which use LibAvCodec. However, that library is written in C and there are some considerations to take into account with the license of the library - not to mention it will introduce external dependencies and platform invocation into your code.

In short, if you need a quick analogy you can compare video decoding and encoding to zipping and unzipping a file: when you decode you unzip the data, and when you encode you zip the data. It is all Compression and Decompression, and just as Zip compression is not the same as Rar compression, MPEG4 compression is not H264 compression.

This is also true with Audio encoding / decoding for, say, "mp3" vs "wav" formats. The big difference between audio and video is that audio data is far less complex than video data, in the sense that there are far fewer bytes to parse. This introduces a separate problem: a small 'glitch' or error in audio data will result in a larger problem while decoding, whereas a 'glitch' or error in video data will usually NOT result in any substantial artifacts during playback.

If you are interested in the format of wave audio and how to manipulate it, check out this article on CodeProject which has a bunch of great examples.

If you are interested in how audio and video data are similar yet different on a high level, check out this presentation.

Typically the biggest factor in decoding video for display on a computer screen is the color space conversion from YUV to RGB, which has to be performed for every pixel in the resulting video. This means that at Quarter Common Intermediate Format, or more simply QCIF, resolution (which is 176x144) this calculation and conversion needs to be performed about 25,000 times for every decoded frame (once for every pixel, 176 * 144 = 25,344), or a bit less if you use a few tricks.
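To make the per-pixel cost concrete, here is a minimal Python sketch of the conversion for a single pixel. It assumes full-range (JFIF style) BT.601 coefficients and QCIF's 176 x 144 resolution; real decoders may use other coefficients, video-range scaling, integer math or SIMD, and the function name is purely illustrative and not part of this library.

```python
def yuv_to_rgb(y, u, v):
    """Convert one full-range YUV sample (0-255 each) to an (r, g, b) tuple."""
    d = u - 128  # blue-difference chroma, centred on zero
    e = v - 128  # red-difference chroma, centred on zero
    r = y + 1.402 * e
    g = y - 0.344136 * d - 0.714136 * e
    b = y + 1.772 * d

    def clamp(x):
        # Round to the nearest integer and keep the result inside 0..255.
        return max(0, min(255, int(round(x))))

    return clamp(r), clamp(g), clamp(b)


# One conversion per pixel, per frame, at QCIF resolution.
QCIF_WIDTH, QCIF_HEIGHT = 176, 144
conversions_per_frame = QCIF_WIDTH * QCIF_HEIGHT
```

With neutral chroma (u = v = 128) the output is simply a grey level equal to y, which is a handy sanity check when experimenting with the coefficients.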

Single images are far less complex than series of images or videos due to the fact that you have BMP, GIF, JPEG, PNG, etc. at your side to do the work for you. Each one has its own list of pros and cons, and each has a place and purpose.

The JPEG image format is not as encumbered by such intellectual properties (anymore). Also, it is no longer the optimal way to transmit or even store pictures. Nonetheless, due to its wide adoption over the past 20-something years, JPEG files are quite accessible in the sense that there are a lot of resources explaining how to work with JPEG files and their contained data.

MPEG (among JPEG2000 and many other candidates) succeeds JPEG in the sense that it allows the data transmitted to be far smaller, and thus uses less bandwidth. This increases the complexity required to compress and finally view the image. Unfortunately this results in higher CPU utilization if you want to convert the compressed data completely to a format like RGB. It is also encumbered by patents for the latest versions.

Typically conversion to RGB is performed because that is the format used for displaying images on a computer screen. In most set top boxes or televisions this is not the case; far less information is converted to RGB and the image is simply rendered in the native format for that platform (e.g. YUV), which is why the processors of such devices can be far less powerful than a modern or even an old computer.

Some Graphics Processing Units (GPU) or even some modern Central Processing Units (CPU) can accelerate the processing by using advanced math and processor intrinsics known as SIMD or Parallel Execution. Others can work directly with YUV encoded data, or throw multiple cores at the decoding process which have been highly optimized to work against such data. In some cases specific codecs which require such advanced routines use specialized hardware, sort of how the Networking Stack in your own computer utilizes the NIC processor to perform Checksum Verification and other operations when allowed to by the operating system, and subsequently achieves better performance.

This article and library don't have much to do with encoding, decoding or transcoding, and they are not centered around Audio or Video, so let's find out exactly what this article is all about...

Introduction

This library provides packet for packet Rtp aggregation agnostic of the underlying video or audio formats utilized over Rtp. This means it does not depend on or expose details about the underlying media beyond what is obtained in the dialog necessary to play the media, it also makes it easy to derive the given classes to support application specific features such as QOS and throttling or other features which are required by your Video On Demand Server.

It can be used to source a single (low bandwidth) Media over Tcp/Udp to hundreds of users through the included RtspServer by aggregating the packets. (Rtcp packets are not aggregated and will be calculated independently and sent appropriately for each Rtsp/Rtp session in the server.) When I use the term aggregated I mean repeated (not forwarded) to another client with the modifications necessary to relay the data to another EndPoint besides the one it was destined to.

It utilizes RFC2326 and RFC3550 compliant processes to provide this functionality among many others.

It can also be used if you want to broadcast from a device such as a Set Top Box without opening it up to the internet: connect to it through the LAN, establish a SourceMedia (at which point you could also add a password), and the server then broadcasts the stream OnDemand to anyone who connects.

This means that you can have many different types of source streams such as JPEG, MPEG4, H263, H264 etc and your clients will all receive the same video as the source stream is transmitting.

This also means that you can use popular tools such as FFMPEG, VLC, Quicktime, Live555, Darwin Streaming Media Server, etc. with this library's included RtspServer.

This enables a developer to playback / transcode streams or save them to a file or even extract frames using those tools on the included RtspServer so you do not have to bog down the bandwidth or CPU of your actual source streams / devices.

You could also add a 3rd tier - for example: separate this process and do the work on a different server without having to worry about interop between libraries. Just use this library to create the RtspServer and source the stream. Additionally, from another process / server use AForge or another wrapper library to communicate to the RtspServer. The RtspServer then communicates to the device. Run your processing and then create a RFC2435 frame for every image required in the stream. After you have completed that step, send it out via Rtp using the RtspServer.

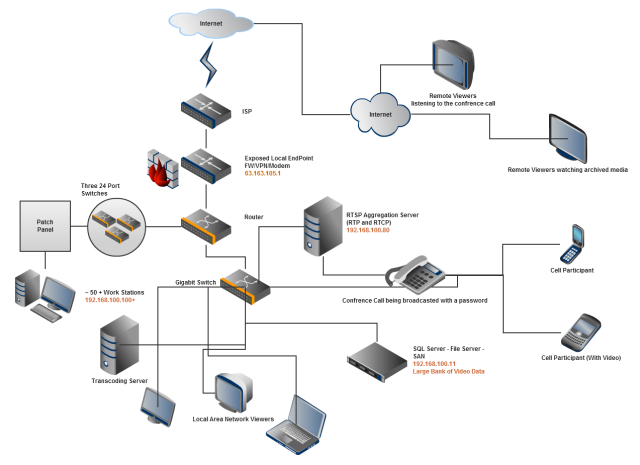

This library also gives you added scaling and customization because your transcoding and transport are done on two separate servers. If that didn't make sense, hopefully the diagram above will aid you in understanding.

Besides providing a RtspServer, it also provides an RtspClient and RtpClient, allowing a developer to connect to any RtspServer or EndPoint for which a SessionDescription is available and giving developers an easy way to consume a stream.

An Example in Brief

In the above diagram you will notice that the conference call is being participated in by several cell participants (some local callers). This is fine and usually requires no more than the hardware which is already present to send and receive the calls. However, if you add the interesting twist that remote users need to be able to view the call as well, you suddenly increase the complexity of the problem 10 fold due to the bandwidth and processing requirements for the added remote users.

Immediately you think (or should think), "How can this small device handle more than the people who have participated in this session?" The answer is this: the device's processor can only handle so many viewers per session, and only so much bandwidth is available for sending to participants. Not to mention any end users...

You can replace the call medium in the above analogy with any other device, such as a web camera, and the topology still applies.

You may be thinking, "Why do I need to do this? My camera can already support X number of users..." I would reply that if your current needs are met today and suddenly tomorrow you grow exponentially, then your user requirements are going to change, and it is better to have more padding than not - especially when delivering media to end users.

This is where the RtspServer comes in and provides a free, fast, and standards compliant implementation which allows you to repeat the call to the public optionally, at which point you could add a password. It would also allow outside users to participate in the session as well if required.

Whereas a load balancer device would redirect network load, this software enables a server to act as a centralized source for consuming media and then has the capability to re-produce it elsewhere, thus removing the load from the end device and allowing the processing to be aggregated to as many tiers as desired.

If you're saying, "I will never need to do anything like this, I will just use VLC or this software or that software," then this article is not for you.

You may also be thinking you could write such a client / server, as I said I could, and then all of a sudden realize there was a lot more to the standard(s) required to implement it than initially caught your eye... in that case this article will probably help you.

You might also have come here because you have hit some type of barrier with another library and are hoping to find something more flexible to replace your current implementation. If this is the case, then this article will also help you!

Let's get some background on the problem domain...

Problem Domain

I needed to provide a way for multiple users to view Rtsp streams coming from a low bandwidth link such as modem or cell phone.

The sources already supported the Rtsp protocol, but when multiple users connected to view the resources the bandwidth was not sufficient to support them; for each user that connected to the camera the bandwidth was subsequently halved, and thus this was not sufficient to support more than 2 or 3 users at most - even with the quality setting tweaked.

The only solution was to aggregate the source media using a Media Server. This is because the Media Server would have a better processor and more bandwidth to utilize, which would allow the source stream to only have to be consumed by a single connection (The Media Server), and when clients wanted to consume the stream instead of connecting to the source media they would connect to the Media Server.

I researched around for a bit and found that there are other existing solutions such as DarwinStreamingServer, Live555, VideoLan or FFMPEG. Still, they are all written in C++ and are rather heavy weight for this type of project. Plus, they would require external dependencies from the managed code which I did not really want, not to mention possible licensing issues involved with that scenario.

I then came up with a crazy idea to build my own RtspServer which would take in the source Rtsp streams and deliver the stream data to RtspClients using the RtspServer. After all, I was familiar with socket communication and I had already built an HttpServer (among many others), so I knew I had the experience to do it. I just needed to actually start.

Before reading anything at all I searched around to see if there were any existing libraries out there that I could utilize and I found some partial implementations of Rtsp and Rtp, but nothing in C# that I could just take and use as it was... Fortunately, there were a few useful methods and concepts in the following libraries:

The problem with these implementations is that they either are not cross platform (will not work on Linux), or they are made for a specific purpose and utilize a proprietary video codec which makes them incompatible with a majority of standards based players and they are made to only interface with specific systems e.g. VoIP systems.

Having a task at hand and being confident in my abilities, I decided to go ahead and dissect the standard... I knew in advance that the video decoding and encoding would be the hardest part, if only due to lack of domain experience, but I also realized that a good transport stack should be agnostic of such variances and thus it was my duty to build a stack that would be reusable under all circumstances... One transport stack to rule them all! (Using 100% Managed Code, specifically C#)

I read up on RFC2326 which describes the Real Time Streaming Protocol or Rtsp. This is where everything starts, the Rtsp Protocol’s purpose is to get the details about the underlying media such as the format and how it will be sent back to the client. It also enables the client to control the state of the stream such as if it is playing or recording.

It turns out Rtsp requests are similar to Http by design but are not directly compatible with Http. (Unless tunneled over Http appropriately)

Take for example this 'OPTIONS' Rtsp request

C->S: OPTIONS rtsp://example.com/media.mp4 RTSP/1.0

CSeq: 1

Require: implicit-play

Proxy-Require: gzipped-messages

S->C: RTSP/1.0 200 OK

CSeq: 1

Public: DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE

Some of the status codes may be familiar and also how the data is formatted. You can see that this protocol is not much more different / difficult than working with Http.
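Because the wire format is so close to Http, a few lines of Python are enough to pull an Rtsp message apart. The sketch below only illustrates the text format shown in the 'OPTIONS' exchange above; `parse_rtsp_message` is a hypothetical helper, not this library's parser, and it assumes the whole message head is already in memory and ignores any message body.

```python
def parse_rtsp_message(text):
    """Split an Rtsp request or response into (start_line_parts, headers)."""
    # Everything up to the first empty line is the message head.
    head = text.replace("\r\n", "\n").split("\n\n", 1)[0]
    lines = [line for line in head.split("\n") if line.strip()]
    # Request: METHOD URI VERSION. Response: VERSION STATUS REASON.
    start_line = lines[0].split(" ", 2)
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return start_line, headers


request = (
    "OPTIONS rtsp://example.com/media.mp4 RTSP/1.0\r\n"
    "CSeq: 1\r\n"
    "Require: implicit-play\r\n"
    "Proxy-Require: gzipped-messages\r\n"
    "\r\n"
)
response = (
    "RTSP/1.0 200 OK\r\n"
    "CSeq: 1\r\n"
    "Public: DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE\r\n"
    "\r\n"
)

method, uri, version = parse_rtsp_message(request)[0]
status_line, response_headers = parse_rtsp_message(response)
```

Matching the 'CSeq' header of a response against the request that carried the same value is how a client pairs the two up.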

If you can implement an Http Server then you can implement an Rtsp Server. The main difference is that all Rtsp requests usually require some type of 'State', whereas some Http requests do not.

In fact, if you want to support all variations of Rtsp you must support Http, because Rtsp requests can be tunneled over Http. The included RtspServer supports Rtsp over Http, but if you want to know more about how it is tunneled you can check out this developer page from Apple.

Now, back to 'State'. When I say state I mean things like session variables in Http that persist with the connection even after close. In Rtsp an example of this would be the SessionId which is assigned to clients from the Server during the 'SETUP' Rtsp request.

C->S: SETUP rtsp://example.com/media.mp4/streamid=0 RTSP/1.0

CSeq: 3

Transport: RTP/AVP;unicast;client_port=8000-8001

S->C: RTSP/1.0 200 OK

CSeq: 3

Transport: RTP/AVP;unicast;client_port=8000-8001;server_port=9000-9001

Session: 12345678
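The 'Transport' header in the 'SETUP' exchange above is a semicolon separated list, and the 'Session' value is the key under which a server would keep per-client state. The following Python sketch shows one way to read both; the function names and the `sessions` dictionary are hypothetical and only illustrate the idea, they are not how the included RtspServer stores its sessions.

```python
def parse_transport(value):
    """Parse an Rtsp Transport header value into (spec, flags, params)."""
    parts = [p.strip() for p in value.split(";") if p.strip()]
    spec = parts[0]            # e.g. "RTP/AVP"
    flags, params = [], {}
    for part in parts[1:]:
        if "=" in part:
            key, val = part.split("=", 1)
            params[key] = val  # e.g. client_port -> "8000-8001"
        else:
            flags.append(part)  # e.g. "unicast"
    return spec, flags, params


def port_pair(text):
    """Turn "8000-8001" into (8000, 8001): the Rtp port, then the Rtcp port."""
    low, high = text.split("-")
    return int(low), int(high)


# Hypothetical per-session state, keyed by the Session header value.
sessions = {}
spec, flags, params = parse_transport(
    "RTP/AVP;unicast;client_port=8000-8001;server_port=9000-9001"
)
sessions["12345678"] = {
    "client_ports": port_pair(params["client_port"]),
    "server_ports": port_pair(params["server_port"]),
}
```

Every later request that carries 'Session: 12345678' can then be matched to the ports negotiated here.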

The other main difference is that the requests can come over Udp or Tcp, requiring the Server to have 2 or more listening sockets. The default port for Rtsp is 554 over both Tcp and Udp.

The Uri scheme for Tcp is 'rtsp://' and for Udp 'rtspu://'

The semantics of each scheme are the same, the only difference is the transport protocol being TCP or UDP underlying the IP Header.

While getting through Rtsp I discovered that I needed another protocol: RFC2326 also references the RFC for the Session Description Protocol or Sdp.

Sdp is one of the smallest parts of this project, however it is equally important to understand in order to get a compliant server functioning. It is only used in the 'DESCRIBE' request of the Rtsp Communication from server to client, but it is essential in providing the information necessary to decode the data in the stream.

C->S: DESCRIBE rtsp://example.com/media.mp4 RTSP/1.0

CSeq: 2

S->C: RTSP/1.0 200 OK

CSeq: 2

Content-Base: rtsp://example.com/media.mp4

Content-Type: application/sdp

Content-Length: 460

m=video 0 RTP/AVP 96

a=control:streamid=0

a=range:npt=0-7.741000

a=length:npt=7.741000

a=rtpmap:96 MP4V-ES/5544

a=mimetype:string;"video/MP4V-ES"

a=AvgBitRate:integer;304018

a=StreamName:string;"hinted video track"

m=audio 0 RTP/AVP 97

a=control:streamid=1

a=range:npt=0-7.712000

a=length:npt=7.712000

a=rtpmap:97 mpeg4-generic/32000/2

a=mimetype:string;"audio/mpeg4-generic"

a=AvgBitRate:integer;65790

a=StreamName:string;"hinted audio track"

RFC4566 – The Session Description Protocol, it is used in many other places besides Rtsp and is responsible for describing media. It usually provides information on the streams which are available and the information required to start decoding them.

In my opinion this protocol is a bit weird in the sense that I believe that XML would have served a better purpose for what it does and how to validate it however that is not relevant and the format is required for streaming media so it must be implemented as per the standard. (XML Probably wasn't mature enough at the time and it required more characters in the output and thus more bandwidth which may not be desired). Another approach would have been to encapsulate this data in the SourceDescription RtcpPackets however for whatever reason it was chosen to be what it is and must be implemented.

Sdp was designed as a "Line" based protocol which was also meant to be basically human readable and easy to parse for underlying tokens which provide information which is usually determined by the first character in a 'Line'. Some "Line" items can carry a specific encoding which is different than the others in the document but the included parser handles this well.
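The line based design is easy to see in code. This Python sketch groups the lines of a description by their first character and starts a new media section at every 'm=' line. It is a simplified illustration (it does not deal with per-line character encodings or validate line order), and `parse_sdp` and `attributes` are hypothetical names rather than the included parser's API.

```python
def parse_sdp(text):
    """Return (session_lines, media_sections) for an Sdp document."""
    session, media = [], []
    current = session  # lines before the first "m=" describe the session
    for raw in text.splitlines():
        line = raw.strip()
        if len(line) < 2 or line[1] != "=":
            continue  # not a "<type>=<value>" line
        kind, value = line[0], line[2:]
        if kind == "m":
            name, port, proto, formats = value.split(" ", 3)
            current = []  # following lines belong to this media section
            media.append({
                "media": name,
                "port": int(port),
                "proto": proto,
                "formats": formats.split(),
                "lines": current,
            })
        else:
            current.append((kind, value))
    return session, media


def attributes(lines):
    """Collect "a=name:value" lines of one section into a dictionary."""
    result = {}
    for kind, value in lines:
        if kind == "a":
            name, _, val = value.partition(":")
            result[name] = val
    return result


sdp = """m=video 0 RTP/AVP 96
a=control:streamid=0
a=rtpmap:96 MP4V-ES/5544
m=audio 0 RTP/AVP 97
a=control:streamid=1
a=rtpmap:97 mpeg4-generic/32000/2
"""
session_lines, tracks = parse_sdp(sdp)
```

The 'control' attribute of each track is what a client appends to the Content-Base when it issues the 'SETUP' request for that track.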

Regardless of how you establish the Rtsp connection with the RtspServer e.g., TCP, HTTP, or UDP, the RtspServer you are connecting to must send the Media data using yet another protocol...

Real-time Transport Protocol or Rtp a.k.a / RFC3550

Again this is not a complex protocol, it defines packets and a frame construct and various algorithms used to transmit them and calculate loss in the transmissions as well as what ports to use. It also outlines how the stream data can be played out by the receiver.

The main format of an RTP Packet is as follows (Thanks to Wikipedia)

| Bit offset | 0-1 | 2 | 3 | 4-7 | 8 | 9-15 | 16-31 |
|---|---|---|---|---|---|---|---|
| 0 | Version | P | X | CC | M | PT | Sequence Number |
| 32 | Timestamp (bits 0-31) | | | | | | |
| 64 | SSRC identifier (bits 0-31) | | | | | | |
| 96 | CSRC identifiers ... | | | | | | |
| 96+32×CC | Profile-specific extension header ID (bits 0-15) | | | | | | Extension header length |
| 128+32×CC | Extension header ... | | | | | | |

The RTP header has a minimum size of 12 bytes. After the header, optional header extensions may be present. This is followed by the RTP payload, the format of which is determined by the particular class of application. The fields in the header are as follows:

- Version: (2 bits) Indicates the version of the protocol. Current version is 2.

- P (Padding): (1 bit) Used to indicate if there are extra padding bytes at the end of the RTP packet. A padding might be used to fill up a block of certain size, for example as required by an encryption algorithm. The last byte of the padding contains the number of how many padding bytes were added (including itself).

- X (Extension): (1 bit) Indicates presence of an Extension header between standard header and payload data. This is application or profile specific.

- CC (CSRC Count): (4 bits) Contains the number of CSRC identifiers (defined below) that follow the fixed header.

- M (Marker): (1 bit) Used at the application level and defined by a profile. If it is set, it means that the current data has some special relevance for the application.

- PT (Payload Type): (7 bits) Indicates the format of the payload and determines its interpretation by the application. This is specified by an RTP profile. For example, see RTP Profile for audio and video conferences with minimal control (RFC 3551).

- Sequence Number: (16 bits) The sequence number is incremented by one for each RTP data packet sent and is to be used by the receiver to detect packet loss and to restore packet sequence. The RTP does not specify any action on packet loss; it is left to the application to take appropriate action. For example, video applications may play the last known frame in place of the missing frame. According to RFC 3550, the initial value of the sequence number should be random to make known-plaintext attacks on encryption more difficult. RTP provides no guarantee of delivery, but the presence of sequence numbers makes it possible to detect missing packets.

- Timestamp: (32 bits) Used to enable the receiver to play back the received samples at appropriate intervals. When several media streams are present, the timestamps are independent in each stream, and may not be relied upon for media synchronization. The granularity of the timing is application specific.

- SSRC: (32 bits) Synchronization source identifier uniquely identifies the source of a stream. The synchronization sources within the same RTP session will be unique. *This one is really important*

- CSRC: Contributing source IDs enumerate contributing sources to a stream which has been generated from multiple sources.

- Extension header: (optional) The first 32-bit word contains a profile-specific identifier (16 bits) and a length specifier (16 bits) that indicates the length of the extension (EHL=extension header length) in 32-bit units, excluding the 32 bits of the extension header.
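The field list above maps directly onto a few bit masks. Here is a minimal Python sketch that decodes the fixed 12 byte header, any CSRC identifiers, and skips over an extension header if one is present. It follows the layout described above; `parse_rtp_header` is an illustrative name, not this library's RtpPacket class, and the sketch does not strip padding from the payload.

```python
import struct


def parse_rtp_header(packet):
    """Decode the RTP header of `packet` (bytes) into a dictionary."""
    if len(packet) < 12:
        raise ValueError("an RTP packet needs at least 12 header bytes")
    # Network byte order: two single bytes, 16 bit sequence, two 32 bit words.
    b0, b1, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    csrc_count = b0 & 0x0F
    header = {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": csrc_count,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence_number": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }
    offset = 12 + 4 * csrc_count
    header["csrc"] = list(struct.unpack("!%dI" % csrc_count, packet[12:offset]))
    if header["extension"]:
        # 16 bit profile-specific id, then the length in 32 bit words.
        _ext_id, ext_words = struct.unpack("!HH", packet[offset:offset + 4])
        offset += 4 + 4 * ext_words
    header["payload_offset"] = offset  # where the payload starts
    return header


sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 1000, 972948234, 0x12345678) + b"payload"
fields = parse_rtp_header(sample)
```

Here `sample` is a hand built version 2 packet with the marker bit set and dynamic payload type 96, so `fields["payload_offset"]` points just past the 12 byte header.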

After the RTP header there is a variable number of payload bytes. On most networks the whole packet, including the IP and TCP / UDP headers, is limited to 1500 bytes; the packet size can exceed 1500 bytes only when there is adequate support on the network.

These bytes make up the payload of the RtpPacket, also known as the Coefficients.

The important part of the standard is obviously the packet structure and frame concept however there are also terms like JitterBuffer and Lip-synch which I will briefly explain.

A JitterBuffer and Lip-synch are just fancy words for ensuring there are no gaps in your RtpFrames, by making sure the sequence numbers of the contained RtpPackets (in a RtpFrame) increment one by one without skipping, and that they are played with the proper delay. In a Translator, Mixer or Proxy implementation there is not much use for such.

This is not to minimize the work done in this area by others. These mechanisms matter a great deal when encoding or decoding the data for use, but they are much less relevant in the transport area. For example, when encoding or decoding, the time-stamps are used to ensure audio and video packets are within reasonable playing distance of each other, resulting in the lips being synced or, in other words, the audio matching the video with very little drift or lag.

I am not going to delve into all of these internals I just wanted to explain how they work at a high level, you can read the RFC's if you are interested further.

The main thing to take away when continuing is that every RtpPacket has a field called the 'Ssrc'. The fully typed name of this field is SynchronizationSourceIdentifier and it identifies the stream AND who the stream is being sent from / to. This is an important distinction to make as you will read below.

After becoming equipped with my understanding of the protocols and having gotten as far as being able to create streams myself, I started next where any other reverse engineer would and went through a few WireShark dumps of existing players working with existing servers.

My goal was to compare the traffic of my client to other players to determine what exactly changes per client who connects to a server.

After some analysis of the dumps I realized that my client's traffic was almost exactly the same and that the stream data was not changing; the only thing that changed was the single field 'Ssrc' in the RtspServer's 'SETUP' response and subsequently the 'Ssrc' field in all RtcpPackets and RtpPackets going out.

I realized that since the stream data (RTP Payload) was not changing nor any bytes inside it... only the ‘Ssrc’ field (which represented the stream and who the Packet was being sent to) that this was going to be an easy task; I just needed to modify that field to effectively re-target the packet when sending back out from the server to the client by using a different 'Ssrc'.
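Since the 'Ssrc' always occupies bytes 8 to 11 of the RTP header, re-targeting a packet amounts to overwriting four bytes and leaving everything else alone. The Python sketch below illustrates that idea only; `retarget_rtp` is a hypothetical name and the library's own classes are not shown here.

```python
import struct


def retarget_rtp(packet, new_ssrc):
    """Return a copy of `packet` (bytes) with its Ssrc field replaced."""
    if len(packet) < 12:
        raise ValueError("an RTP packet needs at least 12 header bytes")
    # Bytes 0-7: flags, payload type, sequence number and timestamp.
    # Bytes 8-11: the Ssrc, written in network byte order.
    return packet[:8] + struct.pack("!I", new_ssrc) + packet[12:]


original = struct.pack("!BBHII", 0x80, 96, 7, 1234, 0x11111111) + b"data"
relayed = retarget_rtp(original, 0x22222222)
```

The sequence number, timestamp and payload are untouched, which is why every client sees the same media as the source.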

I could have also kept the old 'ssrc' by adding it to the 'ContributingSources' of the outgoing packet, but I leave this as an optional thing to do for people creating mixers with a specific purpose, because it increases the packet size by a few bytes for each RtpPacket you send out.

I was originally going to do the same with RtcpPackets, however the overhead to calculate their reports according to the standard was small, plus there was existing code in the other projects I could reference and utilize in my implementation, so I added the ability for my RtpClient to generate and respond with its own RtcpPackets per the standard.

The result was efficient and pleasing and is compatible with VLC, Quicktime, FFMPEG and any other standards compliant player. The code works as follows...

Any clients consuming a stream from the included RtspServer get an exact copy of the Video / Audio streams being transmitted in the exact same Format and at the exact same Frames Per Second.

If your source stream drops a packet then all clients consuming that stream will likely drop a packet.

If a session drops a packet it will not affect other sessions on the server. Finally, no matter how many clients connect to the included server, the source stream only has the bandwidth utilized as if a single client is consuming it; the rest of the bandwidth is utilized by the RtspServer and the clients who connect to it.

Each RtpClient instance has a single Thread to handle all Audio and Video Transport which occurs, and thus the life of the buffer is shared between audio and video streams. Packet instances are short lived and usually are complete in the buffer, however if a packet is larger than what is allocated in the buffer it will be completed accurately based on the information required in the header fields.

Now I was at the home stretch, I would have been at home plate had it not have been for Rtp over Tcp being slightly different from Rtp over Udp and to complicate matters we had to deal with Rtsp concurrently (all on the same socket in TCP). It was with a bit of research I came across the following RFC as it is not directly referenced by RFC 2326 for some reason.

When video is transmitted over the internet to home users or users in a workplace it usually has problems due to Network Address Translation. A common example of this is a firewall or router blocking incoming traffic on certain UDP ports, which results in the player not receiving video. The only way to work around this issue is to have the player tunnel the connection through TCP hole punching. This allows the firewall to allow the traffic to and from the client and server if it is on a designated port.

In such a case there cannot be different ports for Rtp and Rtcp traffic, this additionally complicates matters because we are already using Rtsp to communicate on the TCP socket.

Enter RFC4571 / Independent TCP

RFC4571 - "Framing Real-time Transport Protocol (RTP) and RTP Control Protocol (RTCP) Packets over Connection-Oriented Transport" or, more succinctly, "Interleaving"

This RFC explains how data can be sent and received with RTP / RTCP communication when using TCP oriented sockets. It specifies that there should be a 2 byte length field preceding the Rtp or Rtcp packet.

Figure 1 defines the framing method.

     0                   1                   2                   3
     0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
    +---------------------------------------------------------------+
    |             LENGTH            |  RTP or RTCP packet ...       |
    +---------------------------------------------------------------+

Combine this with Section 10.12 of RFC2326, which adds to this mechanism by placing a framing character and a channel character before the length field to delimit Rtsp and Rtp / Rtcp traffic.

Take this example:

C->S: PLAY rtsp://foo.com/bar.file RTSP/1.0

CSeq: 3

Session: 12345678

S->C: RTSP/1.0 200 OK

CSeq: 3

Session: 12345678

Date: 05 Jun 1997 18:59:15 GMT

RTP-Info: url=rtsp://foo.com/bar.file;

seq=232433;rtptime=972948234

S->C: $\000{2 byte length}{"length" bytes data, w/RTCP header}

S->C: $\000{2 byte length}{"length" bytes data, w/RTP header}

C->S: GET_PARAMETER rtsp://foo.com/bar.file RTSP/1.0

CSeq: 5

Session: 12345678

S->C: RTSP/1.0 200 OK

CSeq: 5

Session: 12345678

S->C: $\000{2 byte length}{"length" bytes data, w/RTP header}

S->C: $\000{2 byte length}{"length" bytes data, w/RTP header}

As you can see, the example given => '$\000{2 byte length}{"length" bytes data, w/RTP header}' was not really diagrammed out in the RFC, so I will do a bit of explaining for you here and give you a real world example.

When Rtp is being 'interleaved' on the same socket that Rtsp communication is sent and received on, we need a way to differentiate the start and end of the Rtp data and the Rtsp data within a contiguous allocation of memory (a buffer).

This is where RFC2326 adds the magic character '$' as a framing control character to indicate RTP Data is coming on the socket.

When '$' is encountered the channel and length follow along with the actual RTP data packet.

$ - is the control character.

\000 - is the channel identifier (an octal escape for a single byte, in this case channel 0)

{2 byte length} - is the length of the {data} which follows

So if we had a real packet its frame header might look like this:

0x24,0x01,{2 byte length}

Where

0x24 - is the control character ($)

0x01 - is the channel identifier

And the length would follow in network byte order.
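Putting the pieces together, an interleaved frame is four header bytes followed by the packet itself. The Python sketch below builds and reads such frames; note that '$' is byte 0x24 (decimal 36) in ASCII. The function names are illustrative assumptions and this is not the RtpClient's actual receive loop.

```python
import struct

MAGIC = 0x24  # ASCII '$', the framing control character


def frame_interleaved(channel, data):
    """Prefix `data` (bytes) with '$', the channel and a 2 byte length."""
    if not 0 <= channel <= 255:
        raise ValueError("the channel must fit in one byte")
    if len(data) > 0xFFFF:
        raise ValueError("the length must fit in two bytes")
    # "!" selects network byte order for the 16 bit length.
    return struct.pack("!BBH", MAGIC, channel, len(data)) + data


def read_interleaved(buffer):
    """Return (channel, data, remaining) or None if no complete frame is ready."""
    if len(buffer) < 4 or buffer[0] != MAGIC:
        return None
    _magic, channel, length = struct.unpack("!BBH", buffer[:4])
    if len(buffer) < 4 + length:
        return None  # the rest of the frame has not arrived yet
    return channel, buffer[4:4 + length], buffer[4 + length:]


framed = frame_interleaved(1, b"abc")
channel, data, remaining = read_interleaved(framed + b"$")
```

Returning the unread remainder lets the caller keep feeding the same buffer back in until `None` signals that more bytes are needed from the socket.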

If there is not a '$' character, the data can be determined to be Rtp or Rtcp by inspecting the 'PayloadType' field of the Rtp or Rtcp Packet, which is usually at offset 5 from where the '$' should be (1 for the control character + 1 for the channel + 2 for the length + 1 for the first byte of the Rtp / Rtcp header).

In RtpPackets this will be the PayloadType and in RtcpPackets this will be the RtcpPacketType. I could have just inspected the common version byte however that would not have identified the packet as Rtp or Rtcp so the PayloadType/PacketType (which shares the same offset in both packets) was used.

These checks can also be performed if there is no channel character or the channel character corresponds to a channel which is unknown to the sender / receiver.

If the PayloadType is not recognized as a Rtp PayloadType and is not in the range of an RtcpPacketType then the packet is either Rtsp or another layer's data, and it extends until the next time the control character occurs in the data.

Once the type of packet is determined to be compatible with the underlying channel the packet may then be processed by its handler.
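The check described above could look roughly like the following. It is a sketch under stated assumptions, not the library's implementation: `PacketKind`, `PacketClassifier` and the `knownRtpPayloadTypes` parameter are hypothetical, and the Rtcp range used is the 200 to 204 block of packet types defined in RFC 3550.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical names for illustration only.
public enum PacketKind { Unknown, Rtp, Rtcp }

public static class PacketClassifier
{
    // offset points at the first byte of the packet, i.e. after any 4 byte frame header.
    public static PacketKind Classify(byte[] buffer, int offset, ICollection<int> knownRtpPayloadTypes)
    {
        if (buffer == null || offset < 0 || buffer.Length - offset < 2) return PacketKind.Unknown;

        // Rtp and Rtcp both carry version 2 in the top two bits of the first byte,
        // so the version alone cannot tell them apart.
        if ((buffer[offset] >> 6) != 2) return PacketKind.Unknown;

        byte second = buffer[offset + 1];

        // Rtcp: the whole second byte is the packet type (SR = 200 ... APP = 204).
        if (second >= 200 && second <= 204) return PacketKind.Rtcp;

        // Rtp: the top bit is the marker, the low 7 bits are the payload type.
        int payloadType = second & 0x7F;
        if (knownRtpPayloadTypes != null && knownRtpPayloadTypes.Contains(payloadType)) return PacketKind.Rtp;

        // Neither: probably Rtsp text or another layer's data.
        return PacketKind.Unknown;
    }
}
```

Rtsp messages begin with upper case ASCII letters, whose top two bits are 01, so they fail the version test and fall through to `Unknown`.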

Developers should take care when receiving large packets. They should also ensure that they are not being injected with values from an attacker attempting to cause a DoS attack by having the system decode large amounts of nothing, which could then be used to further compromise a system in various ways.

This library compensates for this by only receiving up to half of the buffer at a time before attempting to parse packets in the buffer.

The RtpClient and RtspClient handle these issues for you easily and the RtspClient can send and receive many RtspMessages even when the underlying RtpClient is using the socket for multiple channels which in short means that interleaving is completely supported in both the RtpClient and RtspClient.

All IPacket implementations now share a Prepare method which projects the packet into an IEnumerable<byte> which can then be converted to an Array or manipulated further.
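To show why a lazily evaluated byte sequence is convenient, here is a minimal sketch of the idea. `ISketchPacket` and `SketchPacket` are hypothetical stand-ins invented for this example; the real IPacket interface has more members than shown here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the idea behind IPacket.Prepare.
public interface ISketchPacket
{
    IEnumerable<byte> Prepare();
}

public sealed class SketchPacket : ISketchPacket
{
    readonly byte[] m_Header;
    readonly byte[] m_Payload;

    public SketchPacket(byte[] header, byte[] payload)
    {
        m_Header = header ?? new byte[0];
        m_Payload = payload ?? new byte[0];
    }

    // Nothing is copied until the caller enumerates the sequence.
    public IEnumerable<byte> Prepare()
    {
        return m_Header.Concat(m_Payload);
    }
}
```

The caller decides what to do with the projection: `Prepare().ToArray()` materializes the whole packet, while something like `Prepare().Skip(2)` works on part of it without an intermediate array.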

New and Improved

The first incarnation of the code was rapidly developed. It followed a KISS architecture and it was very functional. Some of the biggest changes were made on the fly after determining most users would be working with sessions with 2 tracks, Audio and Video.

In the next incarnation the library was completed and polished by improving performance and adding new features.

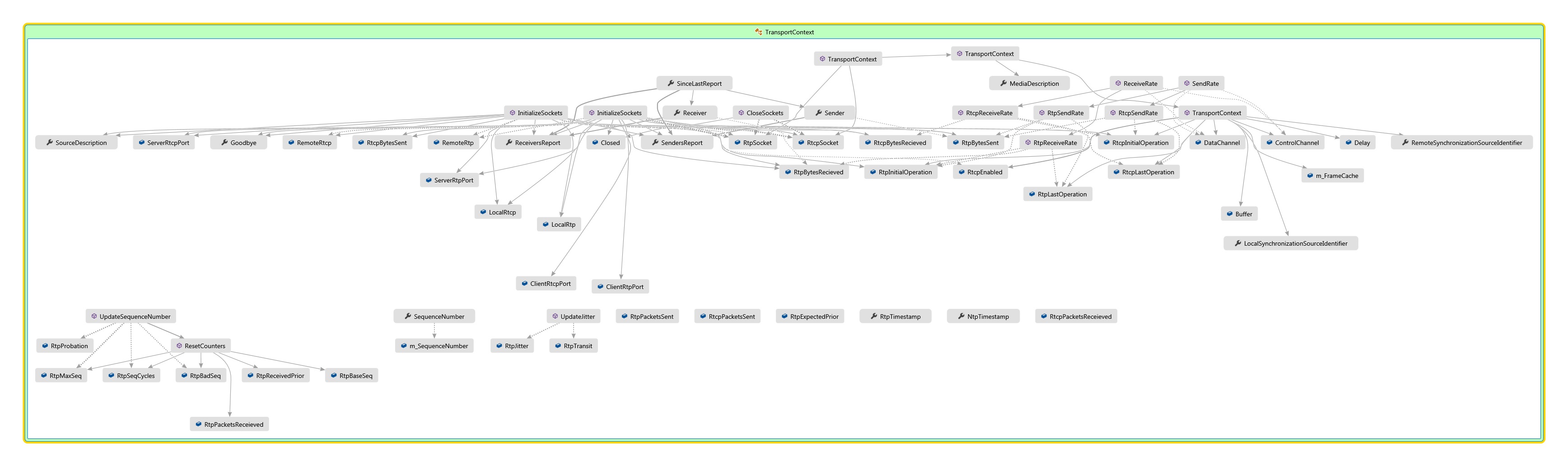

The TransportContext class... It's not your everyday System.Net.TransportContext.

It actually is unique; I am the first person to engineer such a concept as far as I know.

It works well for two reasons that most other libraries cannot accommodate because they regard the abstraction in a way which is different than mine.

I consider it better and more flexible but others may not, I will explain this concept below.

I originally had a class called Interleave which I used for the purpose of keeping track of state. However, in the scenario where Senders can be Receivers too and vice versa (think multicast or a conference system), I think TransportContext works much better for the type of work to be done and becomes more familiar to users / developers when used repeatedly.

The proper name is probably 'SubSession', however TransportContext aims to be a SubSession and more. For instance, if you wanted to use Tcp and Udp concurrently with Rtp, this library is the only one which allows you to do so as far as I know. If there is another (commercial or otherwise), please do comment and I will revise my statement.

It maintains the sockets, memory for transport, request counters and state information which makes working with Rtp a breeze.

Here is the class diagram for the TransportContext

Note the abstraction design stance taken where the property names are in the 3rd person, Sender, Receiver, SinceLastReport. These properties are what make the TransportContext useful and functional.

It is a living beast and switches from being a Sender to a Receiver when necessary (and if required or forced) through the use of the RtpClient.

Blocking Sockets....

Typically high performance code runs on Non-Blocking sockets. This allows you to send and receive at a rate which is greater than the underlying network rate, putting the bottleneck on the hardware and network equipment.

The Windows loop-back adapter is mostly adequate at handling normal traffic loads, however when you intentionally send at a high rate, especially higher than the rate the network interface is rated for, sometimes there are negative effects on the underlying system.

The problem with the loop-back adapter is that there is no 'network cable' and no network interface processor except a virtual one, which resides in the ever loving New Technologies Kernel (or what's left of it anyway).

This causes some problems in the Layered Service Provider, which is responsible for verifying this traffic before it gets to and from the objects you interact with (such as Sockets).

On Unix this is less of an issue. I have not tested Windows 8, however according to this MSDN page there might be some changes there. What I wanted to get at is that if you experience weird issues with a Udp test on your local system, while also testing from your local system, please verify that you are not experiencing some sort of software or hardware issue or read ahead optimization before commenting or declaring an issue with the library.

Tcp Interleaving support is finally completed meaning that QuickTime and other media players will also work right out of the box.

There are still some things to do, however the ground work (such as the packet and report classes) is already there. The remaining items include:

Rtcp XR Framework - RFC2032, RFC3611, RFC5450, RFC5760

Rtcp Feedback Framework - RFC4585

Rtsp / Http Tunneling Support.

Updates

All packet classes are now IDisposable. This ensures that the memory usage of the RtpClient / RtspClient and RtspServer always stays within some assumed size. You can choose whether packets are disposed with the ShouldDispose property on each instance, which is provided by the BaseDisposable class.

IPacket now consolidates Rtp and Rtcp packet instances so you can work with them much more easily. (RtspMessage is also IPacket)

RtpClient class has been re-designed to be easier to use and understand.

RtspServer and RtspClient classes now support Rtsp 2.0 draft messages.

New RtcpReport classes which are easier to use and understand.

Recording / Archiving is now supported via the 'rtpdump' format; archived streams can be played back on the RtspServer or downloaded for use elsewhere.

Facilities for completely managed Transcoding have been started, allowing the user to output H.264 and MPEG1/2 and soon MPEG4 and others.

Common.TaggedException<T> and ITaggedException allow dealing with exceptions and providing meta data on the exceptions being thrown. The class is also a great helper for integrations because you can catch Common.TaggedException or Common.TaggedException<MyType> as well as plain old Exception types. There are also Utility methods related to exceptions called Raise and TryRaise.

public static class ExceptionExtensions

{

//https://net7mma.codeplex.com/SourceControl/latest#Common/ExceptionExtensions.cs

}

How the code works

Here I will describe in traditional (or not so) means how my model works, I write at a pretty high level so you should be able to follow along without the use of diagrams. If there is a particular subject I reference I will link to it. If good questions come up I might consider adding content to this portion of the article.

As you may or may not know, .Net is a Garbage Collected language, herein referred to as a GC Language. .Net also uses a Time Slice or Time Sharing paradigm. See this article on Wikipedia for more information about .Net

I have included some diagrams, however as you will probably agree they are jumbled up and resemble a galaxy in my humble opinion, that aside with the proper description you should be able to understand thoroughly the entire process by the time you are reading this article. You can also check out this MSDN video on how to understand complex code diagrams from Code Map.

Now we will get a little into the basics and move very quickly in toto to understanding the environment and process as I dutifully oblige to give the end reader (YOU) a complete and concise understanding of everything you need to know about Rtsp and Rtp and how to write a program which utilizes their concepts.

In general,

All applications have a static entry point known as 'Main', from this point your code is responsible for the effects on what possibly could be an entire Domain if used improperly, especially in a GC or Time Scaled environment.

I know myself first hand that time is limited, I try to make good use of my time and squeeze every possible thing I can into every moment, sometimes leaving me with less time to enjoy the things I want to or should.

The same is true for code executing on a processor. When you think about it on a high level, electricity moves at the speed of light. The size of the components in the devices we use (more importantly their mass) dictates the capacity at which they can respond relative to the speed of light. (Or so says the Theory of General Relativity).

The smaller and smaller things get electricity becomes a part in and unto itself all the way down to Strings. (or so I hope anyway)

What this means is that when you write code like:

int x = 0; x += -x * x ^ x + x;

You are moving electricity through components in the processor (usually transistors). These individual evaluations (-x * x), XOR, (x + x) are called instructions, and in the same way you have a certain number of steps to take to achieve something, a computer has to break code down into instructions.

These instructions are then utilized typically by reading values in binary (1 and 0) where a positive charge in the component usually indicates 1 and the lack thereof indicates 0.
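To make the grouping of that one-liner concrete, the sketch below spells out each step with a named local and compares it to the compact form. The class and method names are made up for this example. In C#, unary minus and multiplication bind tighter than addition, and XOR binds loosest of the three, which is why the statement splits into (-x * x), (x + x) and then the XOR.

```csharp
using System;

// Hypothetical example class; it only illustrates operator precedence.
public static class PrecedenceExample
{
    // The statement broken into the separate evaluations named in the text.
    public static int StepByStep(int x)
    {
        int negatedProduct = -x * x;      // (-x) * x
        int sum = x + x;                  // x + x
        int xor = negatedProduct ^ sum;   // XOR of the two results
        return x + xor;                   // the += adds the result to x
    }

    // The same statement written the compact way.
    public static int Compact(int x)
    {
        x += -x * x ^ x + x;
        return x;
    }
}
```

With x = 3 the steps are -9, 6, then -9 XOR 6 = -15, so both methods return -12. With x = 0, as in the original statement, everything stays 0.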

When sharing time (and in other cases such as asynchronous kernel procedure calls or system events) you may be in the middle of giving / executing instructions when all of a sudden you are interrupted. You then find that more time has passed than you expected, with respect to the flow of electricity in the system and the ability to register changes without interference from sources such as magnetism, other electricity and the heat they can produce.

This is called time sharing; we all time share whether we realize it or not. Say I try to play with my Dog 'Bandit', and I get half way into making a solid attempt at playing with him when all of a sudden I get a great idea. I then try to share my time between thinking about my idea and what I originally had set out to do, which was play with my dog.

This is the concept of time sharing: while playing with my dog I had thoughts unrelated to his play time which occurred in between moments of play (and they sometimes take over).

The same thing occurs when you use your mouse: the device moves physically and registers an interrupt electrically, which is then observed and conveyed by the hardware to the underlying software, where the display adapter among other elements is updated.

Anyway, enough about time sharing and all of that interesting stuff. Let's put that aside and concentrate on what you really came here for: documentation and understanding of the Rtp protocol, as well as understanding of the code at its most fundamental level.

The RtpClient class allows you to create and receive RtpPackets. It contains one or more TransportContexts, but in certain advanced circumstances it could also have 0 TransportContexts and still be sending and receiving by some mechanism not yet displayed in the code released. This is primarily being tested and developed for multicast, however unicast can also be used if desired with no changes. Unicast is the default since that is most common and still applicable in most multicast cases as well.

The important thing to take away from that is you will notice the SendData and ReceiveData methods on the RtpClient take a Socket as a parameter as well as a TransportContext. These methods are marked internal for a reason, and later I will implement a RtpClient.Multicast constructor which will allow this functionality.

In short, Multicast Rtp is slightly different than Unicast Rtp simply due to the number of participants which can be involved in a single session / conference. The entirety of the difference revolves around the use of Rtcp, which can be used to indicate loss for receivers in the session. When using Rtcp over Multicast the reports from a single sender can potentially go to the entire group and need to contain ReportBlocks for the relevant parties; this is where a Rtp.Conference can be used to reduce the amount of work a single RtpClient has to do when sending and also the bandwidth via Multicast inter alia.

When you are sending or receiving Rtp you are participating in a session. This session is usually described by other means and then provided to the RtpClient. The description comes in the form of the Session Description Protocol.

For the most part you will always call new RtpClient(....) to join an existing session, and most sessions contain one or more 'Tracks' or Media Descriptions. These descriptions define if the underlying media is audio, video, text, or some other type of binary data. More information on the SessionDescription can be found below or at this link.

Rtcp is a mechanism that goes hand in hand with Rtp. It is designed to take only a portion of the bandwidth in use by the application, and it reports additional send and receive metrics which would otherwise have to be conveyed in other manners, in the same data channels or out of band, and would increase complexity. These reports travel on a separate socket most of the time unless you are Duplexing, which means Rtp and Rtcp are coming on the same port.

Most people would argue that you can do this with a single socket, and that a single master Rtcp and Rtp socket along with the use of SendTo and ReceiveFrom is all that is required. That is basically correct, however once you start to utilize very high data rates you will find that there is typically better performance to be had with a single thread per socket scenario.

This implementation uses two Non-Blocking Sockets per TransportContext unless you are using Tcp, in which case you will be using a single Blocking socket. It has a single thread which is created and started in the Connect method; the thread can be aborted by calling the Disconnect method. The thread is time divided into portions for sending and receiving using a very basic method of reading DateTime.UtcNow, which does not have the overhead of Time Zone calculation. You are essentially using a struct rather than a long to describe the TickCount which is embedded in the TimeSpan and DateTime anyway; the classes are just abstractions around those values.

Each TransportContext has a local buffer which is sized at 2 * RtpPacket.MaxPacketSize + 4, where RtpPacket.MaxPacketSize is 1500 by default.

4 bytes for the RFC2326 + RFC4571 framing ($,id,{len0,len1}), 1500 for Rtp and the remaining 1500 for Rtcp (1472) and overhead.

This results in a total size of 3004 (plus some for information about the array, such as its version).
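The sizing arithmetic above is small enough to write down directly. The names `BufferSizing`, `DefaultMaxPacketSize` and `FramingBytes` below are hypothetical; only the formula and the default of 1500 come from the text.

```csharp
using System;

// Hypothetical helper that mirrors the sizing rule quoted above.
public static class BufferSizing
{
    public const int DefaultMaxPacketSize = 1500; // default quoted in the text
    public const int FramingBytes = 4;            // '$', channel id, 2 length bytes

    // One slot for Rtp, one slot for Rtcp and overhead, plus the framing bytes.
    public static int ContextBufferSize(int maxPacketSize)
    {
        if (maxPacketSize <= 0) throw new ArgumentOutOfRangeException("maxPacketSize");
        return 2 * maxPacketSize + FramingBytes;
    }
}
```

`BufferSizing.ContextBufferSize(BufferSizing.DefaultMaxPacketSize)` gives 2 * 1500 + 4 = 3004, matching the figure above.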

When the Connect method has been called, Disconnect is not called until the InactivityTimeout has elapsed and a local core is able to process the instruction which determines this via local comparison.

When I or other computer scientists write 'local' we typically mean locally in the cache of the processor executing the code, because this is where all local operations occur and then are copied out of the cache. This is what 'Threads' essentially are and they share their time while execution occurs.

This is particularly interesting when you see that I make very little use of locks, and I also have stopped using the Interlocked methods such as Add, Increment or Read (although I have left them commented for comparison), because the overhead of making a function call while local in a time slice scenario is critical. This is especially important to consider when locking is performed: if another thread tries to 'lock' a resource 'held' via a 'lock' statement elsewhere, then that thread blocks and its time is wasted doing nothing, which results in contention until the other thread releases the lock.

In short you will find no use of lock at all unless I am intentionally locking something to create contention where operations are critical. There I utilize two mechanisms,

[System.Runtime.CompilerServices.MethodImplAttribute(System.Runtime.CompilerServices.MethodImplOptions.Synchronized)]

combined with a lock, and that is to ensure that operations in the critical section are contended without too many errors occurring at the nano scale of things.
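For readers who have not seen the two mechanisms used together, here is a small sketch. `GuardedCounter` is a hypothetical class written for this article section and is not taken from the library. On an instance method the Synchronized option makes the runtime take a lock on the instance for the duration of the call, and the inner lock statement then guards the field with a private object.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

// Hypothetical example, not library code.
public sealed class GuardedCounter
{
    readonly object m_Gate = new object();
    int m_Value;

    // Synchronized: only one thread at a time may run this method on this instance.
    [MethodImpl(MethodImplOptions.Synchronized)]
    public void Increment()
    {
        // The private gate also protects m_Value against readers in Value below.
        lock (m_Gate)
        {
            m_Value++;
        }
    }

    public int Value
    {
        get { lock (m_Gate) return m_Value; }
    }
}
```

Calling `Increment` from many threads, for example with `Parallel.For(0, 10000, i => counter.Increment())`, leaves `Value` at exactly the number of calls. The price is that every caller queues up behind the same two locks, which is the contention discussed above.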

In short, electricity combined with uncertainty is already a miracle unto itself; where we are with computers today would possibly have been considered Alien 20 years ago. Additionally, without going into a lot of detail on computer science overall, I believe really good code doesn't need locks; proper synchronization, however, is especially important in a GC language where there is time sharing.

To provide some insight on my personal bias, I prefer to use locks when writing to a collection but not when reading. I rarely will use a Mutex or WaitHandle unless the situation calls for it. I will not go around looking for places to say 'Oh this needs contention', however if I find one and can confirm that contention is a critical issue I will synchronize. I will end by describing Destructors as a typical place you can lock fields without worrying about contention most of the time, however in a GC language Finalizers are overhead and cause the GC to run slower because it has to execute the Finalizer. See WaitForPendingFinalizers

Normally when you have code which is very similar you take that code and provide it via a function call / function pointer so that your code is easier to maintain. When reading these functions you may say it seems like a few lines here and there could have been re-factored and called via a static Utility method. I would agree, and I challenge people to create patches and submit them at the project page's Issue Tracker.

There are now a lot more utility and extension classes but there is still work to be done in that area.

One main reason I have not abstracted this yet is because the performance is fine the way it is for general use. Another reason is that people who need to squeeze more performance out of the current implementation are challenged to do so.

This code is suitable for use in a production environment and can handle as many streams as you can throw at it so long as the underlying network link can support the traffic as well, performance wise in its current state, with a custom built server I have observed the following results:

Performance Testing Indicated that `5000` users achieved a max CPU utilization of `42%` and 210 MB Memory.

- These numbers are obtained in process and include rapidly connecting clients which may disconnect and resume their session before it times out -

(Average 20% CPU utilization and 70 MB memory with average 750 active connections)

-These numbers are obtained from a separate dedicated process which consumed media repeatedly from the server while I was able to visually watch the stream from QuickTime, VLC etc. - (But at the same time as the disconnect test)

The new server hardware is as follows: 64GB Corei7 Extreme with Dual GTX670 (4GB) and SSD.

Advanced features such as bandwidth throttling have not yet been implemented however there are plans to add those features inter alia.

One obvious or not so obvious place for this is in the parallel execution of sending and receiving. I will not give out all of the details, however I will say that there should be a model function used rather than being declared inline. The model function should iterate the outgoing packets and store them in binary form in another contiguous collection while removing them from where they are. Then another function would iterate the contiguous allocations and operate on them for sending, and perform any necessary garbage collection and waiting for finalizers on worker threads when possible.
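One possible shape for such a pair of functions is sketched below. This is my own guess at the idea, not code from the library: `OutgoingBatcher`, `Drain` and `SendAll` are hypothetical names, the packets are assumed to already be in binary form as byte arrays, and the actual socket call is left to a delegate supplied by the caller.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of the two step model described above.
public static class OutgoingBatcher
{
    // Step 1: remove every queued packet and copy it into one contiguous array.
    // Each packet's position is recorded as a segment of that array.
    public static byte[] Drain(Queue<byte[]> outgoing, List<ArraySegment<byte>> segments)
    {
        int total = 0;
        foreach (byte[] packet in outgoing) total += packet.Length;

        byte[] contiguous = new byte[total];
        int offset = 0;
        while (outgoing.Count > 0)
        {
            byte[] packet = outgoing.Dequeue();
            Buffer.BlockCopy(packet, 0, contiguous, offset, packet.Length);
            segments.Add(new ArraySegment<byte>(contiguous, offset, packet.Length));
            offset += packet.Length;
        }
        return contiguous;
    }

    // Step 2: walk the contiguous allocation and hand each segment to the sender.
    // Returns the number of bytes handed over.
    public static int SendAll(IEnumerable<ArraySegment<byte>> segments, Action<ArraySegment<byte>> send)
    {
        int sent = 0;
        foreach (ArraySegment<byte> segment in segments)
        {
            send(segment);
            sent += segment.Count;
        }
        return sent;
    }
}
```

The `send` delegate could wrap a `Socket.Send(buffer, offset, count, flags)` call. Keeping the two steps separate means the queue is released quickly and the sending step only ever touches one array.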

One benefit of walking through those methods during the RtpClientTest example or another test is that all the meat and bones of the RtpClient implementation is there, if suddenly you mess something up and no longer send or receive or have high usage then your culprit is likely there.

The worker thread on the RtpClient will execute the SendReceive loop until Disconnect on the RtpClient is called.

The RtspServer runs in a similar fashion, it maintains a local collection of ClientSession objects which contain the state information for the Rtsp / Rtp sessions in progress and their underlying sub sessions.

The RtspServer can handle Udp and Http experimentally and should be stable before the final version of the library is released to the public.

Using the code

Delivering media to clients can be a complex and expensive process. This project's goal is to allow developers to deliver media to clients in less than 10 lines of code utilizing standards compliant protocol implementations.

//Create the server optionally specifying the port to listen on

Rtsp.RtspServer server = new Rtsp.RtspServer(/*554*/);

//Create a stream which will be exposed under the name Uri rtsp://localhost/live/

//From the RtspSource rtsp://1.2.3.4/mpeg4/media.amp

Media.Rtsp.Server.MediaTypes.RtspSource source = new Media.Rtsp.Server.MediaTypes.RtspSource("YouTubeRtspSource",

"rtsp://v4.cache5.c.youtube.com/CjYLENy73wIaLQlg0fcbksoOZBMYDSANFEIJbXYtZ29vZ2xlSARSBXdhdGNoYNWajp7Cv7WoUQw=/0/0/0/video.3gp");

//If the stream had a username and password

//source.Client.Credential = new System.Net.NetworkCredential("user", "password");

//If you wanted to password protect the stream when clients connect with a player

//source.RtspCredential = new System.Net.NetworkCredential("username", "password");

//Add the stream to the server

server.TryAddMedia(source);

//Start the server and underlying streams

server.Start();

//The server is now running, you can access the stream with VLC, QuickTime, etc

Developers can create new RtpPackets in managed code or parse them from a byte[]. They can get a binary representation of the RtpPacket by calling the Prepare method of the RtpPacket. They can also re-target the RtpPacket by calling the overloaded Prepare method as shown below.

//Create a RtpPacket in managed code

Rtp.RtpPacket packet = new Rtp.RtpPacket();

//packet.Created is set to DateTime.UtcNow automatically in constructor

packet.SequenceNumber = 1;

packet.SynchronizationSourceIdentifier = 0x0707070;

packet.TimeStamp = Utility.DateTimeToNtp32(DateTime.Now);

//packet.Channel = 0; //Old Code

byte[] someRtpData = packet.Prepare().ToArray(); // Could be a byte[] from a socket or anywhere else

//From a byte[]

packet = new Rtp.RtpPacket(someRtpData, 0);

//Packet as byte[]

byte[] output = packet.Prepare().ToArray();

//Same packet with a different Ssrc

output = packet.Prepare(packet.PayloadType, 0x123456).ToArray();

RtpPackets and RtcpPackets have a DateTime Created property which allows a developer to keep track of when a packet was created.

This library also provides the same facilities for Creating and (Re)writing binary data which conforms to the Session Description Protocol

//Create a SDP in managed code

Sdp.SessionDescription sdp = new Sdp.SessionDescription(0);

sdp.SessionName = "name";

//Add a new MediaDescription with the payload type 26

sdp.Add(new Sdp.SessionDescription.MediaDescription()

{

MediaFormat = 26

});

//Output it

string output = sdp.ToString();

//Or parse it from a string

sdp = new Sdp.SessionDescription(sdp.ToString());

And the same facilities for creating RtspRequests or RtspResponses

//Make a new RtspRequest in managed code

Rtsp.RtspMessage request = new Rtsp.RtspMessage(RtspMessageType.Request);

//Assign some properties

request.CSeq = 1;

request.Method = Rtsp.RtspMethod.PLAY;

//Get the output to send

byte[] output = request.ToBytes();

//Parse a RtspRequest from bytes

request = new Rtsp.RtspMessage(output);

//Create a new RtspResponse

Rtsp.RtspMessage response = new Rtsp.RtspResponse(RtspMessageType.Response);

//Parse one from bytes

response = new Rtsp.RtspResponse(output = response.Prepare());

There is an included RtspClient and RtpClient. The RtspClient sets up the RtpClient during the 'SETUP' request and automatically switches from Udp to Tcp or the other way when it needs to.

//Create a client

Rtsp.RtspClient client = new Rtsp.RtspClient("rtsp://someUri/live/name");

///The client has a Client Property which is used to access the RtpClient

///Attach events at the packet level

client.Client.RtcpPacketReceieved +=

new Rtp.RtpClient.RtcpPacketHandler(Client_RtcpPacketReceieved);

client.Client.RtpPacketReceieved +=

new Rtp.RtpClient.RtpPacketHandler(Client_RtpPacketReceieved);

//Attach events at the frame level

client.Client.RtpFrameChanged +=

new Rtp.RtpClient.RtpFrameHandler(Client_RtpFrameChanged);

//Performs the Options, Describe, Setup and Play Request

client.StartListening();

//Do something else

///while (true) { }

//Send the Teardown and Goodbye

client.StopListening();

There is an event, InterleavedData, which provides developers with the data encountered in the Tcp socket. You do not have to use this event but it's there if you want to inspect the data in the interleaved slice for some reason. Usually the event provides RtspMessages that have been pushed by the server; when TCP sockets are used, the Rtsp response is sometimes found there before being passed to the RtspClient via the same event.

The RtpClient and RtspClient already handle this event for you and fire the appropriate event such as OnRtpPacket or OnRtcpPacket for the slice data after determining the packet is valid and the channel is capable of receiving the message.

A RtspClient can send and receive at will during the interleaved session and the RtspRequests will be handled in between the interleaved data as they are supposed to be.

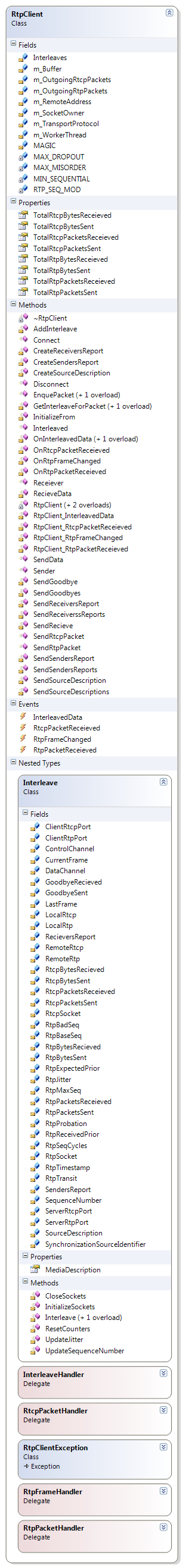

Here are the class diagrams for the RtpClient and RtspClient.

Implementation Details

Media Servers require a construct around their sources and receivers to be able to deliver them across sessions properly. These are typically called a 'Sink' and a `Source` in most implementations per the terminology in the RFC.

In the included RtspServer only Rtp / Rtsp Sources are currently supported, meaning that you need a source which is already sending RTSP / RTP Data in the first place. Work is in progress to support Rtmp and other protocols which will allow you to make those streams available via Rtsp and Rtp also.

If you wanted to stream from a file you would make a new type of SourceStream e.g. MediaFileStream and inherit from SourceStream.

IMediaSink, IMediaSource and IMediaStream interfaces have been defined. They allow an implementation where a derivation can be both a Source and a Sink. The fundamental interface is IMediaStream, which provides the Guid -> Id and SessionDescription properties which identify the stream.

If you wanted to make a class which cached live streams and allowed play from any point therein currently encountered, then you would derive from RtspSourceStream and add logic to store each frame. Then, rather than attaching to the events as live streams do, you would Skip and Take frames from where you cached them and send them to the client, allowing the client to play from any point in the source media for any duration they desire. (This type of functionality may be provided in the library eventually, however I just didn't have the time to complete it as of the time of this writing).
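The Skip and Take part of that idea can be shown without any of the streaming machinery. The `FrameCache<TFrame>` class below is a hypothetical illustration only; a real derivation would store the library's frame type, bound the cache size and deal with threading, none of which is attempted here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch: an in-memory cache of frames in arrival order.
// Not thread safe; a real source would need to guard the list.
public sealed class FrameCache<TFrame>
{
    readonly List<TFrame> m_Frames = new List<TFrame>();

    public int Count { get { return m_Frames.Count; } }

    // Called for every frame received from the live source.
    public void Store(TFrame frame)
    {
        m_Frames.Add(frame);
    }

    // Returns 'count' frames starting at index 'start' (fewer if the cache is shorter).
    // ToList takes a snapshot so later Store calls do not disturb the caller.
    public IList<TFrame> Play(int start, int count)
    {
        return m_Frames.Skip(start).Take(count).ToList();
    }
}
```

A client asking to play from frame 3 for 4 frames would simply receive the result of `Play(3, 4)` instead of being attached to the live events.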

SourceStream assigns the ID of the stream and allows you to set a stream name. It is the base of all sources of the RtspServer. It also provides you with a way to name streams by something other than a single name by providing an Aliases property which contains all names ever associated with a stream. E.g. when you change a name the old name will become an alias. You can also add aliases at any time.
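The alias behaviour described above can be sketched as follows. `NamedStream` and `IsKnownAs` are hypothetical names for this example; the sketch only mirrors the described behaviour (old names become aliases, aliases can be added at any time) and is not the library's SourceStream.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of a stream with a name and a list of aliases.
public class NamedStream
{
    readonly List<string> m_Aliases = new List<string>();
    string m_Name;

    public NamedStream(string name)
    {
        Id = Guid.NewGuid();
        m_Name = name;
    }

    public Guid Id { get; private set; }

    public string Name
    {
        get { return m_Name; }
        set
        {
            if (value == m_Name) return;
            // The previous name is kept as an alias so old Uris still resolve
            AddAlias(m_Name);
            m_Name = value;
        }
    }

    public IEnumerable<string> Aliases { get { return m_Aliases; } }

    public void AddAlias(string alias)
    {
        if (alias != null && !m_Aliases.Contains(alias)) m_Aliases.Add(alias);
    }

    // True when the given name is the current name or any alias.
    public bool IsKnownAs(string name)
    {
        return name == m_Name || m_Aliases.Contains(name);
    }
}
```

A server looking up a stream by the name in a request Uri could then match on `IsKnownAs` rather than on the current name alone.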

ChildStream inherits from SourceStream to allow a child to be created with the same properties as the source. If you wanted to reduce frame-rate or otherwise you would add the logic in the child.

I have engineered the class SourceStream and ClientSession for these purposes where SourceStream encapsulates a `Source` and a `Sink` at the same time by using events and each ClientSession just gets a copy of the `Source` stream through the events from the underlying RtpClient of said SourceStream

The ClientSession is only a `IMediaSink` in the sense that it only sends out data from elsewhere.

The RtspServer currently exposes IMediaStream as the type returned from the Streams property. Overall it just means that you can check if a particular instance is IMediaSink to determine a specific type of stream; additionally there is also a SessionDescription property.

The goal is to eventually also allow other transport encapsulations and possibly implement them such as RTMP however since that is a closed technology which is primarily used with Flash (which I hate) it makes me less eager to put time and effort into that area, the basis for adding support has been provided and more will come when time permits so the library can also support a Flash / Rtmp To Rtsp/Rtp gateway feature!

The methods OnSourceRtpPacketRecieved and OnSourceRtcpPacketRecieved of the ClientSession handle adding the packet to a List from which it will be sent during the SendReceive phase of the underlying RtpClient used by the ClientSession.

So in short, when a RtpPacket arrives on the SourceStream an event is fired and subsequently handled by the ClientSession of a client through the OnSourceRtpPacketRecieved and OnSourceRtcpPacketRecieved methods of the ClientSession, which you can find below:

/// <summary>

/// Called for each RtpPacket received in the source RtpClient

/// </summary>

/// <param name="client">The RtpClient from which the packet arrived</param>

/// <param name="packet">The packet which arrived</param>

internal void OnSourceRtpPacketRecieved(RtpClient client, RtpPacket packet)

{

RtpClient.TransportContext trasnportContext = m_RtpClient.GetContextForPacket(packet);

if (trasnportContext != null)

{

if (packet.Timestamp >= trasnportContext.RtpTimestamp)

{

//Send on its own thread

try { m_RtpClient.EnqueRtpPacket(packet); }

catch { }

}

}

}

/// <summary>

/// Called for each RtcpPacket received in the source RtpClient

/// </summary>

/// <param name="stream">The listener from which the packet arrived</param>

/// <param name="packet">The packet which arrived</param>

internal void OnSourceRtcpPacketRecieved(RtpClient stream, RtcpPacket packet)

{

try

{

//E.g. when Stream Location changes on the fly etc.

if (packet.PacketType == RtcpPacket.RtcpPacketType.Goodbye)

{

RtpClient.TransportContext trasnportContext = m_RtpClient.GetContextForPacket(packet);

//Prep the client for a data loss

if (trasnportContext != null)

{

m_RtpClient.SendGoodbye(trasnportContext);

}

}

else if (packet.PacketType == RtcpPacket.RtcpPacketType.SendersReport)

{

//The source stream received a senders report

//Update the RtpTimestamp and NtpTimestamp for our clients also

SendersReport sr = new SendersReport(packet);

RtpClient.TransportContext trasnportContext = m_RtpClient.GetContextForPacket(packet);

if (trasnportContext == null) return;

else if (sr.NtpTimestamp > trasnportContext.NtpTimestamp)

{

trasnportContext.NtpTimestamp = sr.NtpTimestamp;

trasnportContext.RtpTimestamp = sr.RtpTimestamp;

}

}

}

catch { }

}

Each client or player who connects to the RtspServer is represented by a ClientSession.

ClientSessions are automatically created by the RtspServer when a compliant RtspClient connects to the RtspServer.

/// <summary>

/// Handles the accept of rtsp client sockets into the server

/// </summary>

/// <param name="ar">IAsyncResult with a Socket object in the AsyncState property</param>

internal void ProcessAccept(IAsyncResult ar)

{

if (ar == null) goto End;

//The ClientSession created

ClientSession created = null;

try

{

//The Socket needed to create a ClientSession

Socket clientSocket = null;

//See if there is a socket in the state object

Socket server = (Socket)ar.AsyncState;

//If there is no socket then an accept cannot be performed

if (server == null) goto End;

//If this is the initial receive for Udp or the server given is Udp

if (server.ProtocolType == ProtocolType.Udp)

{

//Should always be 0 for our server or any server passed in

int acceptBytes = server.EndReceive(ar);

//Start receiving again if this was our server

if (m_UdpServerSocket.Handle == server.Handle)

m_UdpServerSocket.BeginReceive(Utility.Empty, 0, 0, SocketFlags.Partial, ProcessAccept, m_UdpServerSocket);

//The client socket is the server socket under Udp

clientSocket = server;

}

else if(server.ProtocolType == ProtocolType.Tcp) //Tcp

{

//The clientSocket is obtained from the EndAccept call

clientSocket = server.EndAccept(ar);

}

else

{

throw new Exception("This server can only accept connections from Tcp or Udp sockets");

}

//Make a temporary client (Could move semantics about begin receive to ClientSession)

created = CreateOrObtainSession(clientSocket);

}

catch(Exception ex)//Using begin methods you want to hide this exception to ensure that the worker thread does not exit because of an exception at this level

{

//If there is a logger log the exception

if (Logger != null)

Logger.LogException(ex);

////If a session was created dispose of it

//if (created != null)

// created.Disconnect();

}

End:

allDone.Set();

created = null;

//Thread exit 0

return;

}

internal ClientSession CreateOrObtainSession(Socket rtspSocket)

{

//Handle the transient sockets which may come from clients which closed theirs previously; this should only occur for the first request or a request after play.

//In UDP all connections are such

//Iterate clients looking for the socket handle

foreach (ClientSession cs in Clients)

{

//If there is already a socket then use that one

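//Assuming RemoteEndPoint is a System.Net.EndPoint: that type does not overload ==, so compare by value with Equals rather than by reference

if (object.Equals(cs.RemoteEndPoint, rtspSocket.RemoteEndPoint))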

{

return cs;

}

}

//Create a new session with the new socket, there may be an existing session from another port or address at this point....

//So long as that session does not attempt to access resources in another session given by 'sessionId' of that session then everything should be okay.

ClientSession session = new ClientSession(this, rtspSocket);

//Add the session

AddSession(session);

//Return a new client session

return session;

}

RtpSources expose methods which allow RtpPackets and RtcpPackets to be handled or forwarded to another RtpClient or an RtspSession. You will notice the methods on the RtspSession have the same signature as the event handlers fired by the RtpClient. This is so the handlers can be added and removed at any time very easily.

(You can see an example of this below in the example where I handle the 'PLAY' request and response.)
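The add-and-remove pattern described above can be sketched in isolation. This is only an illustration, not the library's code: `DemoSource`, `DemoSession`, `DemoPacket` and `PacketHandler` are hypothetical stand-ins for the RtpClient, the RtspSession and their packet types. It shows that a method whose signature matches the event's delegate can be attached with `+=` and detached with `-=` at any time.

```csharp
using System;

// Hypothetical stand-ins for the article's types; none of these names come from the library.
public sealed class DemoPacket
{
    public int SequenceNumber;
}

public sealed class DemoSource
{
    // The (sender, packet) shape mirrors the handler signatures shown earlier.
    public delegate void PacketHandler(DemoSource sender, DemoPacket packet);

    public event PacketHandler PacketReceived;

    // Raises the event for every handler which is currently attached.
    public void Receive(DemoPacket packet)
    {
        PacketHandler handler = PacketReceived;
        if (handler != null) handler(this, packet);
    }
}

public sealed class DemoSession
{
    public int Forwarded;

    // Same signature as DemoSource.PacketHandler, so it can be attached directly.
    public void OnSourcePacketReceived(DemoSource sender, DemoPacket packet)
    {
        ++Forwarded;
    }
}

public static class EventWiringDemo
{
    public static int Run()
    {
        DemoSource source = new DemoSource();
        DemoSession session = new DemoSession();

        // Attach, e.g. when a PLAY request arrives
        source.PacketReceived += session.OnSourcePacketReceived;
        source.Receive(new DemoPacket { SequenceNumber = 1 });
        source.Receive(new DemoPacket { SequenceNumber = 2 });

        // Detach, e.g. when a PAUSE or TEARDOWN request arrives
        source.PacketReceived -= session.OnSourcePacketReceived;
        source.Receive(new DemoPacket { SequenceNumber = 3 }); // no longer forwarded

        return session.Forwarded;
    }
}
```

Calling `EventWiringDemo.Run()` counts only the packets received while the handler was attached.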

They also expose an event for decoding images called OnFrameDecoded which transforms the RtpPackets or RtpFrames into System.Drawing.Image.

The logic was exposed through an event because some decoding is typically very intensive on the processor and the event model allows users to handle the events appropriately after we perform the task required.

This event is typically called by the RtspSession's RtpClient when OnRtpFrameCompleted is called. This method could block for as long as it likes because events will continue to be fired by the underlying RtpClient.

internal void OnFrameDecoded(System.Drawing.Image decoded) { if (FrameDecoded != null) FrameDecoded(this, decoded); }

internal virtual void DecodeFrame(Rtp.RtpClient sender, Rtp.RtpFrame frame)

{

if (RtspClient.Client == null || RtspClient.Client != sender) return;

try

{

if (!frame.Complete) return;

//Get the MediaDescription (by ssrc so dynamic payload types don't conflict)

Rtp.RtpClient.TransportContext tc =

this.RtspClient.Client.GetContextBySourceId(frame.SynchronizationSourceIdentifier);

if (tc == null) return;

Media.Sdp.MediaDescription mediaDescription = tc.MediaDescription;

if (mediaDescription.MediaType == Sdp.MediaType.audio)

{

//Could have generic byte[] handlers OnAudioData OnVideoData OnEtc

//throw new NotImplementedException();

}

else if (mediaDescription.MediaType == Sdp.MediaType.video)

{

//26 is the static RTP payload type for JPEG video (RFC 3551)

if (mediaDescription.MediaFormat == 26)

{

OnFrameDecoded(m_lastFrame = (new Rtp.JpegFrame(frame)).ToImage());

}

else if (mediaDescription.MediaFormat >= 96 && mediaDescription.MediaFormat < 128)

{

//Dynamic..

//throw new NotImplementedException();

}

else

{

//0 - 95 || >= 128

//throw new NotImplementedException();

}

}

}

catch

{

return;

}

}

Currently only RFC 2435 JPEG frames can be decoded or encoded by the server. The process is not very intensive on the CPU; in fact the server can send data at a rate of over 100 FPS.

Other types of encoding and decoding support are underway. Using another library such as FFMPEG or LibAvCodec you can support any type of encoding or decoding you require; only the packetization needs to be performed per the profile you are working with.

Updates in this area include support for 16 bit precision and DataRestartInterval markers.
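To make the RFC 2435 packetization a little more concrete, here is a minimal sketch of reading the 8 byte main JPEG header which starts every RTP/JPEG payload. It follows the field layout given in RFC 2435 and is not taken from the library's JpegFrame class; `JpegMainHeader` and its members are names invented for this example.

```csharp
using System;

// Hypothetical helper for illustration; not part of the library described in this article.
public struct JpegMainHeader
{
    public byte TypeSpecific;   // interpretation depends on Type
    public int FragmentOffset;  // 24 bit offset of this fragment within the frame data
    public byte Type;           // 64 - 127 means a restart marker header follows
    public byte Q;              // 128 - 255 means quantization tables are sent in-band
    public int Width;           // in pixels (carried on the wire in units of 8 pixels)
    public int Height;          // in pixels (carried on the wire in units of 8 pixels)

    public bool HasRestartMarkerHeader
    {
        get { return Type >= 64 && Type <= 127; }
    }

    // The quantization table header is only present in the first fragment of a frame.
    public bool HasQuantizationTables
    {
        get { return Q >= 128 && FragmentOffset == 0; }
    }

    public static JpegMainHeader Parse(byte[] payload, int offset)
    {
        if (payload == null) throw new ArgumentNullException("payload");
        if (offset < 0 || payload.Length - offset < 8)
            throw new ArgumentException("At least 8 bytes are required for the main header");

        JpegMainHeader header = new JpegMainHeader();
        header.TypeSpecific = payload[offset];
        header.FragmentOffset = (payload[offset + 1] << 16)
                              | (payload[offset + 2] << 8)
                              | payload[offset + 3];
        header.Type = payload[offset + 4];
        header.Q = payload[offset + 5];
        header.Width = payload[offset + 6] * 8;
        header.Height = payload[offset + 7] * 8;
        return header;
    }
}
```

For example, a payload beginning with the bytes `0, 0, 0, 0, 1, 255, 80, 60` describes the first fragment of a 640 x 480 frame of type 1 with in-band quantization tables.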

Each RtspServer instance itself is threaded using asynchronous sockets. This means each client request will be handled on its own thread from the thread pool.

When a 'PLAY' request comes in I simply wire up the events from the source RtspStream to the client's RtspSession and the result is that the client gets a copy of the source audio/video stream packet for packet.

The RtpClient of the ClientSession handles sending them out with the correct 'Ssrc' when it dequeues RtpPackets from its queue during the send / receive phase (SendReceieve), which is performed in a worker thread.

/// <summary>

/// Entry point of the m_WorkerThread. Handles sending out RtpPackets and RtcpPackets in buffer and handling any incoming RtcpPackets.

/// Sends a Goodbye and exits if no packets are sent or received in a certain amount of time

/// </summary>

void SendReceieve()

{

Begin:

try

{

DateTime lastOperation = DateTime.UtcNow;

//Until aborted

while (!m_StopRequested)

{

#region Recieve Incoming Data

//Enumerate each context and receive data, if received update the lastActivity

//ParallelEnumerable.ForAll(TransportContexts.ToArray().AsParallel(), (tc) =>

//{

// ProcessReceive(tc, ref lastOperation);

//});

foreach (TransportContext context in TransportContexts.ToArray())

{

ProcessReceive(context, ref lastOperation);

}

if (m_OutgoingRtcpPackets.Count + m_OutgoingRtpPackets.Count == 0)

{

//Should also check for bit rate before sleeping

System.Threading.Thread.Sleep(TransportContexts.Count);

continue;

}

#endregion

#region Handle Outgoing RtcpPackets

if (m_OutgoingRtcpPackets.Count > 0)

{

int remove = m_OutgoingRtcpPackets.Count;

var rtcpPackets = m_OutgoingRtcpPackets.GetRange(0, remove);

if (SendRtcpPackets(rtcpPackets) > 0) lastOperation = DateTime.UtcNow;

m_OutgoingRtcpPackets.RemoveRange(0, remove);

rtcpPackets = null;

}

#endregion

#region Handle Outgoing RtpPackets

if (m_OutgoingRtpPackets.Count > 0)

{

//Could check for a timestamp more recent than the packet at 0 on the transportContext and discard...

//Send only A few at a time to share with rtcp

int sent = 0;

foreach (RtpPacket packet in m_OutgoingRtpPackets.ToArray())

{

if (packet == null || packet.Disposed) ++sent;

//If the entire packet was sent

else if (SendRtpPacket(packet) >= packet.Length)

{

++sent;

lastOperation = DateTime.UtcNow;

}

}

m_OutgoingRtpPackets.RemoveRange(0, sent);

}

#endregion

}

}

catch { if (!m_StopRequested) goto Begin; }

}

Here is an example of using the events on the RtpClient of the RtspSourceStream through the RtspServer with the RtspSession to aggregate packets when the server receives a 'PLAY' command from a client.

/// <summary>

/// Handles a 'PLAY' request from a client session

/// </summary>

/// <param name="request"></param>

/// <param name="session"></param>

internal void ProcessRtspPlay(RtspMessage request, ClientSession session)

{

RtpSource found = FindStreamByLocation(request.Location) as RtpSource;

if (found == null)

{

ProcessLocationNotFoundRtspRequest(session);

return;

}

if (!AuthenticateRequest(request, found))

{

ProcessAuthorizationRequired(found, session);

return;

}

else if (!found.Ready)

{

//Stream is not yet ready

ProcessInvalidRtspRequest(session, RtspStatusCode.PreconditionFailed);

return;

}

RtspMessage resp = session.ProcessPlay(request, found);

//Send the response to the client

ProcessSendRtspResponse(resp, session);

session.m_RtpClient.m_WorkerThread.Priority = ThreadPriority.AboveNormal;

session.ProcessPacketBuffer(found);

}

For the 'PAUSE' or 'TEARDOWN' request I can just remove those events from the RtpClient of the source RtspStream and subsequently the RtspSession.

/// <summary>

/// Handles a 'PAUSE' request from a client session

/// </summary>

/// <param name="request"></param>

/// <param name="session"></param>

internal void ProcessRtspPause(RtspMessage request, ClientSession session)

{

RtpSource found = FindStreamByLocation(request.Location) as RtpSource;

if (found == null)

{

ProcessLocationNotFoundRtspRequest(session);

return;

}

if (!AuthenticateRequest(request, found))

{

ProcessAuthorizationRequired(found, session);

return;

}

//Might need to add some headers

ProcessSendRtspResponse(session.ProcessPause(request, found), session);

}

Screenshots of the server in action re-sourcing two Rtsp TCP streams at once over UDP

Screenshots of the server in action re-sourcing over 5 streams (TCP and UDP) at once while simultaneously viewing them with VLC

Points of Interest / Notes

I initially built the entire code base in less than 30 days! This does not mean the result is unprofessional or has problems; it just goes to show what you can do if you try!

It took another 30 days or so in between moving to my first home and dealing with all of those issues to get it to the point where it was.

I have now worked on the library for about 2 years and it has been greatly improved.

As things are added, completed, fixed or contributed I will update this article accordingly!

You won't find any CompoundPacket class, which most other implementations use to convey that there are multiple RtcpPackets in a single buffer; I am not sure why other implementations went with such a concept. I handle multiple RtcpPackets in a single buffer with the RtcpPacket.GetPackets method, which returns an array of RtcpPackets found in said buffer.
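Splitting a buffer which holds several RtcpPackets only needs the length field that every RTCP header carries (RFC 3550): bytes 2 and 3 give the packet length in 32 bit words minus one. The sketch below shows that idea under stated assumptions. It is not the implementation of RtcpPacket.GetPackets; `RtcpSplitter` and `Split` are names made up for this example, and it returns raw segments rather than RtcpPacket instances.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not the library's RtcpPacket.GetPackets.
public static class RtcpSplitter
{
    // Returns one segment per RTCP packet found in buffer[offset .. offset + count).
    public static List<ArraySegment<byte>> Split(byte[] buffer, int offset, int count)
    {
        if (buffer == null) throw new ArgumentNullException("buffer");
        if (offset < 0 || count < 0 || buffer.Length - offset < count)
            throw new ArgumentOutOfRangeException("count");

        List<ArraySegment<byte>> packets = new List<ArraySegment<byte>>();
        int end = offset + count;

        // Every RTCP packet starts with a 4 byte header
        while (end - offset >= 4)
        {
            // The top two bits of the first byte are the version, which must be 2
            int version = buffer[offset] >> 6;
            if (version != 2) break;

            // Length is stored as the number of 32 bit words minus one, header included
            int words = (buffer[offset + 2] << 8) | buffer[offset + 3];
            int size = (words + 1) * 4;

            // Stop on a truncated packet rather than reading past the data
            if (size > end - offset) break;

            packets.Add(new ArraySegment<byte>(buffer, offset, size));
            offset += size;
        }

        return packets;
    }
}
```

Given a buffer containing a senders report followed by a goodbye, `Split` yields two segments, and byte 1 of each segment holds the packet type (200 and 203 respectively).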

Memory usage is low; you only use what is required and nothing is kept around unnecessarily, including completed packets / frames etc. When sourcing two streams to myself from the included RtspServer I found the memory usage stayed in the range of 20 to 40 MB for the duration of the testing with 10 source streams being aggregated.

There are NO external dependencies AND you can use the part you need without the others, e.g. the RtpClient without the RtspClient or the RtspClient without the RtspServer. They are all functional in their own right and perform as required by the RFC, making them a complete implementation for use on any type of system on any operating system which supports .NET. This is the reason things are exposed the way they are in terms of internal or protected access. I have tried to only allow public properties when possible.

Things like the SessionDescription.MediaDescription have internal methods for adding and removing lines; however, care should be taken when using them on live connections, as the Version property of a SessionDescription must change with all changes that occur inside it during a session. When you change a MediaDescription by adding or removing lines there is no way to notify the parent SessionDescription without having all the plumbing to support change notification. This is solved by allowing someone to call Add or Remove on a Line of the MediaDescription and then manually change the Version if required during that operation. When you Add or Remove a SessionDescriptionLine from a SessionDescription this is done for you automatically, because the version is accessible in the same scope, unless you indicate not to via the optional parameter.

The code also includes a new cross-platform implementation which can delay time on the microsecond (μs) scale, called μTimer.

#region Cross Platform μTimer

/// <summary>

/// A Cross platform implementation which can delay time on the microsecond(μs) scale.