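Logstash is an open-source data collection engine with real-time pipelining capabilities. Put simply, Logstash acts as a bridge between data sources and data storage and analysis tools; combined with Elasticsearch and Kibana, it greatly simplifies processing and analyzing data. With more than 200 plugins, Logstash can ingest almost any kind of data, including logs, network requests, relational databases, and sensor or IoT data.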

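On Linux, Logstash can be installed through the package manager, for example apt on Ubuntu or yum on CentOS. Binary releases for each platform are also available for download.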

Trying out a pipeline

The most basic Logstash pipeline has two required elements, input and output, and one optional element, filter.
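The three stages can be laid out in a configuration file. The sketch below is only an illustration and is not taken from the walkthrough that follows: the file name pipeline-skeleton.conf and the added field are hypothetical, and the mutate filter is used purely to make the optional stage visible.

```shell
# Write a minimal three-stage pipeline to a file.
# pipeline-skeleton.conf is a hypothetical file name chosen for this sketch.
cat > pipeline-skeleton.conf <<'EOF'
input {
  stdin { }                     # required: where events come from
}
filter {
  # optional: transform events in flight; a field is added here for illustration
  mutate { add_field => { "pipeline_stage" => "filtered" } }
}
output {
  stdout { codec => rubydebug } # required: where events go
}
EOF

# Count the top-level stages that were written (prints 3).
grep -c -E '^(input|filter|output) \{' pipeline-skeleton.conf
```

Such a file could then be loaded with the -f flag, for example bin/logstash -f pipeline-skeleton.conf.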

Installing Logstash

Components of the Elastic Stack are best not installed or run as the root user.

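Step 1: Create a regular user (dyh)

    useradd dyh -d /home/dyh -m

-d: sets the user's home directory.

-m: creates the home directory if it does not exist.

Set the user's password:

    passwd dyh
    # Changing password for user dyh.
    # New password:
    # BAD PASSWORD: The password is a palindrome
    # Retype new password:
    # passwd: all authentication tokens updated successfully.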

Step 2: Download the Logstash package (logstash-6.2.3.tar.gz) and extract it. Download link: https://pan.baidu.com/s/10MUE4tDqsKbHWNzL1Pbl7w (password: hwyh)

    tar zxvf logstash-6.2.3.tar.gz

Step 3: Run a quick test. Change into the bin directory of the extracted package and run the following command:

    ./logstash -e 'input { stdin{} } output { stdout{} }'
    Exception in thread "main" java.lang.UnsupportedClassVersionError: org/logstash/Logstash : Unsupported major.minor version 52.0
        at java.lang.ClassLoader.defineClass1(Native Method)
        at java.lang.ClassLoader.defineClass(ClassLoader.java:800)
        at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
        at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)
        at java.net.URLClassLoader.access$100(URLClassLoader.java:71)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:361)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
        at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:482)
    [dyh@centos74 bin]$ java -version
    java version "1.7.0_79"
    Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
    Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)

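If the error "Unsupported major.minor version 52.0" appears, the installed JDK is too old and must be upgraded. Class-file version 52.0 corresponds to Java 8, whereas the java -version output above reports Java 7 (1.7.0_79).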
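The relationship between the number in the error message and the JDK release can be checked with a little arithmetic. The following is a minimal sketch, assuming a POSIX shell; the function names class_major_to_java and java_major are made up for this example and are not part of any JDK or Logstash tooling.

```shell
# Map a class-file major version to the Java release that produces it.
# The offset of 44 follows from the class-file numbering: 51 is Java 7, 52 is Java 8.
class_major_to_java() {
  echo $(( $1 - 44 ))
}

# Extract the major Java release from a version string as printed by `java -version`.
# Handles the legacy "1.x.y_zz" scheme (Java 8 and earlier) and the newer "x.y.z" scheme.
java_major() {
  case "$1" in
    1.*) v="${1#1.}"; echo "${v%%.*}" ;;
    *)   echo "${1%%[.+-]*}" ;;
  esac
}

required=$(class_major_to_java 52)    # version named in the error message
installed=$(java_major "1.7.0_79")    # version reported on the failing host

if [ "$installed" -lt "$required" ]; then
  echo "Java $installed is too old; Java $required or newer is required"
fi
```

With the values from the transcript, the script prints "Java 7 is too old; Java 8 or newer is required".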

After upgrading the JDK, run the command again (startup may take a moment):

    java -version
    java version "1.8.0_131"
    Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)
    [dyh@centos74 bin]$ ./logstash -e 'input { stdin{} } output { stdout{} }'

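The -e flag lets us supply the configuration directly on the command line instead of pointing to a configuration file with -f. Once the log reports that the main pipeline has started, Logstash is running; type some text at the prompt and Logstash echoes it to the terminal prefixed with a timestamp and the host name (or IP). This is Logstash's most basic mode of operation: accept input, process it, and write the result to the output.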
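To make the difference between -e and -f concrete, the inline pipeline can be saved to a file and loaded from there. This is a small sketch, not a required step; the file name stdin-stdout.conf is hypothetical, and the two logstash invocations are left as comments because they depend on the local installation.

```shell
# The same pipeline that was passed inline with -e, kept in a variable.
PIPELINE='input { stdin{} } output { stdout{} }'

# Write it to a file so it can be loaded with -f instead.
# stdin-stdout.conf is a hypothetical file name.
printf '%s\n' "$PIPELINE" > stdin-stdout.conf

# Either of these starts the same pipeline (run from the bin directory):
#   ./logstash -e "$PIPELINE"
#   ./logstash -f stdin-stdout.conf
```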

    [dyh@centos74 bin]$ ./logstash -e 'input { stdin{} } output { stdout{} }'
    Sending Logstash's logs to /home/dyh/ELK/logstash-6.2.3/logs which is now configured via log4j2.properties
    [2018-09-13T09:43:08,545][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/fb_apache/configuration"}
    [2018-09-13T09:43:08,575][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/netflow/configuration"}
    [2018-09-13T09:43:08,745][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/home/dyh/ELK/logstash-6.2.3/data/queue"}
    [2018-09-13T09:43:08,754][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/home/dyh/ELK/logstash-6.2.3/data/dead_letter_queue"}
    [2018-09-13T09:43:09,408][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    [2018-09-13T09:43:09,447][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"da1c26ee-45d0-419e-b305-3e0f3c0d852a", :path=>"/home/dyh/ELK/logstash-6.2.3/data/uuid"}
    [2018-09-13T09:43:10,250][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.2.3"}
    [2018-09-13T09:43:10,805][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    [2018-09-13T09:43:12,637][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
    [2018-09-13T09:43:12,841][INFO ][logstash.pipeline ] Pipeline started succesfully {:pipeline_id=>"main", :thread=>"#<Thread:0x236d5f92 run>"}
    The stdin plugin is now waiting for input:

The output above indicates a successful start; Logstash is now waiting for input from the terminal.

Type hello World and press Enter.

    hello World
    2018-09-13T01:45:28.703Z localhost hello World


Processing logs: receiving Filebeat output

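Filebeat was brought into the same ecosystem as Logstash to handle logs. It is a lightweight log shipper, and it can sense how busy the downstream Logstash instance is and adjust the rate at which it sends logs accordingly.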

First download Filebeat; it is ready to use once extracted. Locate filebeat.yml and edit it as follows:

    filebeat.prospectors:

    # Each - is a prospector. Most options can be set at the prospector level, so
    # you can use different prospectors for various configurations.
    # Below are the prospector specific configurations.

    - type: log

      # Change to true to enable this prospector configuration.
      enabled: true

      # Paths that should be crawled and fetched. Glob based paths.
      paths:
        - /var/log/*.log
    . . .
    #----------------------------- Logstash output --------------------------------
    output.logstash:
      # The Logstash hosts
      hosts: ["192.168.106.20:5044"]

After configuring Filebeat, start it only once Logstash is up and running.
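The start order can be enforced in a script by waiting until Logstash accepts connections before launching Filebeat. The helper below is a minimal sketch, assuming a POSIX shell; wait_for is a made-up name, and the example call in the comment additionally assumes that nc with the -z option is available on the host.

```shell
# Retry a command once per second until it succeeds or the attempts run out.
# $1: maximum number of attempts; remaining arguments: the command to run.
wait_for() {
  attempts="$1"
  shift
  while [ "$attempts" -gt 0 ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    attempts=$((attempts - 1))
    [ "$attempts" -gt 0 ] && sleep 1
  done
  return 1
}

# Example (assumes nc is installed; host and port are those from filebeat.yml):
# wait_for 60 nc -z 192.168.106.20 5044 && ./filebeat -e -c filebeat.yml
```

The probe command is passed in as an argument so that any check available on the host can be substituted.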

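Configure Logstash to receive input from Filebeat and print it to the terminal (using a configuration file).

In the config directory of the Logstash installation, create a file named filebeat.conf with the following content: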

    input {
      # receive input from Beats
      beats {
        # port to listen on
        port => "5044"
      }
    }
    # print to the terminal
    output {
      stdout { codec => rubydebug }
    }

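Check that the configuration file is valid with (../bin/logstash -f filebeat.conf --config.test_and_exit).

Logstash can also be started with automatic configuration reloading (substitute your own configuration file for first-pipeline.conf):

    bin/logstash -f first-pipeline.conf --config.reload.automatic

The --config.reload.automatic option enables automatic config reloading so that you don't have to stop and restart Logstash every time you modify the configuration file.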

    [dyh@centos74 bin]$ cd ../config/
    [dyh@centos74 config]$ ls
    jvm.options log4j2.properties logstash.yml pipelines.yml startup.options
    [dyh@centos74 config]$ vim filebeat.conf
    [dyh@centos74 config]$ ../bin/logstash -f filebeat.conf --config.test_and_exit
    Sending Logstash's logs to /home/dyh/ELK/logstash-6.2.3/logs which is now configured via log4j2.properties
    [2018-09-13T10:01:48,537][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/fb_apache/configuration"}
    [2018-09-13T10:01:48,559][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/netflow/configuration"}
    [2018-09-13T10:01:49,243][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    Configuration OK    <- the configuration file is valid
    [2018-09-13T10:01:52,697][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash
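The check-then-start sequence can be wrapped in a small helper so that Logstash is only launched when the configuration passes validation. This is a sketch under one assumption: that the --config.test_and_exit run exits with a non-zero status when the configuration is invalid. The function name validate_then_run is made up for this example.

```shell
# Validate a configuration, and start Logstash only if the check passes.
# $1: path to the logstash executable, $2: path to the configuration file.
# Assumption: the test run exits non-zero when the configuration is invalid.
validate_then_run() {
  logstash_bin="$1"
  conf="$2"
  if "$logstash_bin" -f "$conf" --config.test_and_exit; then
    "$logstash_bin" -f "$conf"
  else
    echo "configuration check failed: $conf" >&2
    return 1
  fi
}

# Example call from the config directory (commented out so the sketch has no side effects):
# validate_then_run ../bin/logstash filebeat.conf
```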

Start Logstash with (../bin/logstash -f filebeat.conf). After a short wait, output like the following indicates that Logstash has started successfully:

    [dyh@centos74 config]$ ../bin/logstash -f filebeat.conf
    Sending Logstash's logs to /home/dyh/ELK/logstash-6.2.3/logs which is now configured via log4j2.properties
    [2018-09-13T10:07:47,604][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/fb_apache/configuration"}
    [2018-09-13T10:07:47,625][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/netflow/configuration"}
    [2018-09-13T10:07:48,263][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    [2018-09-13T10:07:49,130][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.2.3"}
    [2018-09-13T10:07:49,692][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    [2018-09-13T10:07:52,806][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
    [2018-09-13T10:07:53,600][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}
    [2018-09-13T10:07:53,687][INFO ][logstash.pipeline ] Pipeline started succesfully {:pipeline_id=>"main", :thread=>"#<Thread:0x592e1bdc run>"}
    [2018-09-13T10:07:53,784][INFO ][org.logstash.beats.Server] Starting server on port: 5044
    [2018-09-13T10:07:53,821][INFO ][logstash.agent ] Pipelines running {:count=>1, :pipelines=>["main"]}

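Then start Filebeat with (./filebeat -e -c filebeat.yml -d "publish"); the -d flag enables debug output for the named selector. The run captured below passed "public" instead, and its output contains no debug lines.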

    [dyh@ump-pc1 filebeat-6.2.3-linux-x86_64]$ ./filebeat -e -c filebeat.yml -d "public"
    2018-09-13T10:13:30.793+0800 INFO instance/beat.go:468 Home path: [/home/dyh/ELK/filebeat-6.2.3-linux-x86_64] Config path: [/home/dyh/ELK/filebeat-6.2.3-linux-x86_64] Data path: [/home/dyh/ELK/filebeat-6.2.3-linux-x86_64/data] Logs path: [/home/dyh/ELK/filebeat-6.2.3-linux-x86_64/logs]
    2018-09-13T10:13:30.810+0800 INFO instance/beat.go:475 Beat UUID: 641ac54b-9a52-4861-99fb-8235e186a816
    2018-09-13T10:13:30.811+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.3
    2018-09-13T10:13:30.825+0800 INFO pipeline/module.go:76 Beat name: ump-pc1
    2018-09-13T10:13:30.826+0800 INFO instance/beat.go:301 filebeat start running.
    2018-09-13T10:13:30.826+0800 INFO registrar/registrar.go:71 No registry file found under: /home/dyh/ELK/filebeat-6.2.3-linux-x86_64/data/registry. Creating a new registry file.
    2018-09-13T10:13:30.843+0800 INFO registrar/registrar.go:108 Loading registrar data from /home/dyh/ELK/filebeat-6.2.3-linux-x86_64/data/registry
    2018-09-13T10:13:30.843+0800 INFO registrar/registrar.go:119 States Loaded from registrar: 0
    2018-09-13T10:13:30.843+0800 WARN beater/filebeat.go:261 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.
    2018-09-13T10:13:30.843+0800 INFO crawler/crawler.go:48 Loading Prospectors: 1
    2018-09-13T10:13:30.843+0800 INFO log/prospector.go:111 Configured paths: [/var/log/*.log]
    2018-09-13T10:13:30.844+0800 INFO [monitoring] log/log.go:97 Starting metrics logging every 30s
    2018-09-13T10:13:30.846+0800 ERROR log/prospector.go:437 Harvester could not be started on new file: /var/log/hawkey.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: Failed opening /var/log/hawkey.log: open /var/log/hawkey.log: permission denied
    2018-09-13T10:13:30.847+0800 ERROR log/prospector.go:437 Harvester could not be started on new file: /var/log/yum.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: Failed opening /var/log/yum.log: open /var/log/yum.log: permission denied
    2018-09-13T10:13:30.847+0800 ERROR log/prospector.go:437 Harvester could not be started on new file: /var/log/dnf.librepo.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: Failed opening /var/log/dnf.librepo.log: open /var/log/dnf.librepo.log: permission denied
    2018-09-13T10:13:30.847+0800 ERROR log/prospector.go:437 Harvester could not be started on new file: /var/log/dnf.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: Failed opening /var/log/dnf.log: open /var/log/dnf.log: permission denied
    2018-09-13T10:13:30.847+0800 ERROR log/prospector.go:437 Harvester could not be started on new file: /var/log/dnf.rpm.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: Failed opening /var/log/dnf.rpm.log: open /var/log/dnf.rpm.log: permission denied
    2018-09-13T10:13:30.869+0800 INFO crawler/crawler.go:82 Loading and starting Prospectors completed. Enabled prospectors: 1
    2018-09-13T10:13:30.869+0800 INFO log/harvester.go:216 Harvester started for file: /var/log/boot.log
    2018-09-13T10:13:30.870+0800 INFO cfgfile/reload.go:127 Config reloader started
    2018-09-13T10:13:30.870+0800 INFO log/harvester.go:216 Harvester started for file: /var/log/vmware-vmsvc.log
    2018-09-13T10:13:30.871+0800 INFO cfgfile/reload.go:219 Loading of config files completed.
    ^C2018-09-13T10:13:40.693+0800 INFO beater/filebeat.go:323 Stopping filebeat
    2018-09-13T10:13:40.694+0800 INFO crawler/crawler.go:109 Stopping Crawler
    2018-09-13T10:13:40.694+0800 INFO crawler/crawler.go:119 Stopping 1 prospectors
    2018-09-13T10:13:40.694+0800 INFO prospector/prospector.go:121 Prospector ticker stopped
    2018-09-13T10:13:40.695+0800 INFO prospector/prospector.go:138 Stopping Prospector: 11204088409762598069
    2018-09-13T10:13:40.695+0800 INFO cfgfile/reload.go:222 Dynamic config reloader stopped
    2018-09-13T10:13:40.695+0800 INFO log/harvester.go:237 Reader was closed: /var/log/boot.log. Closing.
    2018-09-13T10:13:40.695+0800 INFO log/harvester.go:237 Reader was closed: /var/log/vmware-vmsvc.log. Closing.
    2018-09-13T10:13:40.695+0800 INFO crawler/crawler.go:135 Crawler stopped
    2018-09-13T10:13:40.695+0800 INFO registrar/registrar.go:210 Stopping Registrar
    2018-09-13T10:13:40.695+0800 INFO registrar/registrar.go:165 Ending Registrar
    2018-09-13T10:13:40.698+0800 INFO instance/beat.go:308 filebeat stopped.
    2018-09-13T10:13:40.706+0800 INFO [monitoring] log/log.go:132 Total non-zero metrics {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":90,"time":97},"total":{"ticks":120,"time":133,"value":0},"user":{"ticks":30,"time":36}},"info":{"ephemeral_id":"56baf0c6-9785-42cc-8cf1-f8cd9a5abdb4","uptime":{"ms":10054}},"memstats":{"gc_next":7432992,"memory_alloc":4374544,"memory_total":8705840,"rss":23642112}},"filebeat":{"events":{"added":1325,"done":1325},"harvester":{"closed":2,"open_files":0,"running":0,"started":2}},"libbeat":{"config":{"module":{"running":0},"reloads":1},"output":{"events":{"acked":1323,"batches":1,"total":1323},"read":{"bytes":12},"type":"logstash","write":{"bytes":38033}},"pipeline":{"clients":0,"events":{"active":0,"filtered":2,"published":1323,"retry":1323,"total":1325},"queue":{"acked":1323}}},"registrar":{"states":{"current":2,"update":1325},"writes":5},"system":{"cpu":{"cores":2},"load":{"1":0.29,"15":0.02,"5":0.06,"norm":{"1":0.145,"15":0.01,"5":0.03}}}}}}
    2018-09-13T10:13:40.706+0800 INFO [monitoring] log/log.go:133 Uptime: 10.058951193s
    2018-09-13T10:13:40.706+0800 INFO [monitoring] log/log.go:110 Stopping metrics logging.

Now look at the Logstash terminal output; an excerpt follows:

    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 119887,
        "message" => "[9月 13 09:32:32.917] [ message] [vmsvc] Cannot load message catalog for domain 'timeSync', language 'zh', catalog dir '/usr/share/open-vm-tools'.",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 119963,
        "message" => "[9月 13 09:32:32.917] [ message] [vmtoolsd] Plugin 'timeSync' initialized.",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120111,
        "message" => "[9月 13 09:32:32.917] [ message] [vmsvc] Cannot load message catalog for domain 'vmbackup', language 'zh', catalog dir '/usr/share/open-vm-tools'.",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120187,
        "message" => "[9月 13 09:32:32.917] [ message] [vmtoolsd] Plugin 'vmbackup' initialized.",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120266,
        "message" => "[9月 13 09:32:32.920] [ message] [vix] VixTools_ProcessVixCommand: command 62",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120345,
        "message" => "[9月 13 09:32:33.154] [ message] [vix] VixTools_ProcessVixCommand: command 62",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120452,
        "message" => "[9月 13 09:32:33.154] [ message] [vix] ToolsDaemonTcloReceiveVixCommand: command 62, additionalError = 17",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120554,
        "message" => "[9月 13 09:32:33.155] [ message] [powerops] Executing script: '/etc/vmware-tools/poweron-vm-default'",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }
    {
        "tags" => [ [0] "beats_input_codec_plain_applied" ],
        "prospector" => { "type" => "log" },
        "@version" => "1",
        "host" => "ump-pc1",
        "source" => "/var/log/vmware-vmsvc.log",
        "@timestamp" => 2018-09-13T02:13:30.957Z,
        "offset" => 120632,
        "message" => "[9月 13 09:32:35.164] [ message] [powerops] Script exit code: 0, success = 1",
        "beat" => { "hostname" => "ump-pc1", "name" => "ump-pc1", "version" => "6.2.3" }
    }

This shows that the log entries collected by Filebeat were delivered to Logstash successfully.

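Processing logs: collecting log files with Logstash itself

In the config directory under the Logstash directory, create a file named logfile.conf with the following content: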

    input {
      file {
        path => "/home/dyh/logs/log20180823/*.log"
        type => "ws-log"
        start_position => "beginning"
      }
    }
    output {
      stdout {
        codec => rubydebug
      }
    }

Check that the configuration file is valid:

    [dyh@centos74 config]$ ../bin/logstash -f logfile.conf --config.test_and_exit
    Sending Logstash's logs to /home/dyh/ELK/logstash-6.2.3/logs which is now configured via log4j2.properties
    [2018-09-13T10:27:31,637][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/fb_apache/configuration"}
    [2018-09-13T10:27:31,658][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/dyh/ELK/logstash-6.2.3/modules/netflow/configuration"}
    [2018-09-13T10:27:32,280][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    Configuration OK
    [2018-09-13T10:27:35,387][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

Start Logstash with (../bin/logstash -f logfile.conf).

An excerpt of the resulting output:

    {
        "@version" => "1",
        "type" => "ws-log",
        "@timestamp" => 2018-09-13T02:29:04.368Z,
        "host" => "localhost",
        "message" => "08-23 07:55:53.207313 [-322978960] [\xD0½\xA8] [SUCESS] [/data/appdata/cbankapp4/iboc_corporbank.ear/corporbank.war/WEB-INF/conf/interface/ebus_SelectFund_ebus_WithdrawalFundTrading.xml] [0]",
        "path" => "/home/dyh/logs/log20180823/sa-evtlist_1.log"
    }
    {
        "@version" => "1",
        "type" => "ws-log",
        "@timestamp" => 2018-09-13T02:29:04.368Z,
        "host" => "localhost",
        "message" => "08-23 07:55:53.212594 [-322978960] [ɾ\xB3\xFD] [SUCESS] [/data/appdata/cbankapp4/iboc_corporbank.ear/corporbank.war/WEB-INF/conf/interface/ebus_PaymentManage_ebus_authOrderRefundDetailQuery_batch.xml] [0]",
        "path" => "/home/dyh/logs/log20180823/sa-evtlist_1.log"
    }
    {
        "@version" => "1",
        "type" => "ws-log",
        "@timestamp" => 2018-09-13T02:29:04.369Z,
        "host" => "localhost",
        "message" => "08-23 07:55:53.212758 [-322978960] [\xD0½\xA8] [SUCESS] [/data/appdata/cbankapp4/iboc_corporbank.ear/corporbank.war/WEB-INF/conf/interface/ebus_PaymentManage_ebus_authOrderRefundDetailQuery_batch.xml] [0]",
        "path" => "/home/dyh/logs/log20180823/sa-evtlist_1.log"
    }
    {
        "@version" => "1",
        "type" => "ws-log",
        "@timestamp" => 2018-09-13T02:29:04.369Z,
        "host" => "localhost",
        "message" => "08-23 07:55:53.220285 [-322978960] [ɾ\xB3\xFD] [SUCESS] [/data/appdata/cbankapp4/iboc_corporbank.ear/corporbank.war/WEB-INF/conf/interface/ebus_QLFeePayment_ebus_QLFeePaymentSignQueryOrderList.xml] [0]",
        "path" => "/home/dyh/logs/log20180823/sa-evtlist_1.log"
    }
    {
        "@version" => "1",
        "type" => "ws-log",
        "@timestamp" => 2018-09-13T02:29:04.369Z,
        "host" => "localhost",
        "message" => "08-23 07:55:53.220445 [-322978960] [\xD0½\xA8] [SUCESS] [/data/appdata/cbankapp4/iboc_corporbank.ear/corporbank.war/WEB-INF/conf/interface/ebus_QLFeePayment_ebus_QLFeePaymentSignQueryOrderList.xml] [0]",
        "path" => "/home/dyh/logs/log20180823/sa-evtlist_1.log"
    }

Configuring Logstash to output to Elasticsearch

Modify logfile.conf as follows (the elasticsearch output takes its list of addresses in the hosts setting):

    input {
      file {
        path => "/home/dyh/logs/log20180823/*.log"
        type => "ws-log"
        start_position => "beginning"
      }
    }
    #output{
    #  stdout{
    #    codec => rubydebug
    #  }
    #}
    output {
      elasticsearch {
        hosts => ["192.168.51.18:9200"]
        index => "system-ws-%{+YYYY.MM.dd}"
      }
    }

This configures Logstash to scan every log file ending in .log under /home/dyh/logs/log20180823/ and send the results to Elasticsearch (192.168.51.18:9200), where they are stored under the index system-ws-%{+YYYY.MM.dd}. Start Elasticsearch first.
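The date part of the index name is filled in per event from its @timestamp field, which Logstash keeps in UTC, so each day's events land in their own index. The sketch below mimics that substitution for a single timestamp using plain string handling; index_for_timestamp is a made-up helper for illustration, not a Logstash command.

```shell
# Derive the index name that "system-ws-%{+YYYY.MM.dd}" yields for one event.
# $1: the event's @timestamp in ISO 8601 UTC form, e.g. 2018-09-13T02:29:04.368Z
index_for_timestamp() {
  day="${1%%T*}"                                   # keep the date part: 2018-09-13
  printf 'system-ws-%s\n' "$(printf '%s' "$day" | sed 's/-/./g')"
}

# Timestamp taken from the event excerpt shown earlier.
index_for_timestamp "2018-09-13T02:29:04.368Z"
```

For that timestamp the helper prints system-ws-2018.09.13.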

Then start Logstash:

    ../bin/logstash -f logfile.conf

After Logstash starts, the Elasticsearch console prints the following, which shows that the logs from Logstash have reached Elasticsearch:

    [2018-09-13T10:46:59,364][INFO ][o.e.c.m.MetaDataCreateIndexService] [lBKeFc1] [system-ws-2018.09.13] creating index, cause [auto(bulk api)], templates [], shards [5]/[1], mappings []
    [2018-09-13T10:46:59,665][INFO ][o.e.c.m.MetaDataMappingService] [lBKeFc1] [system-ws-2018.09.13/g1fY5lrxQPOsb8UCvEPnLg] create_mapping [doc]

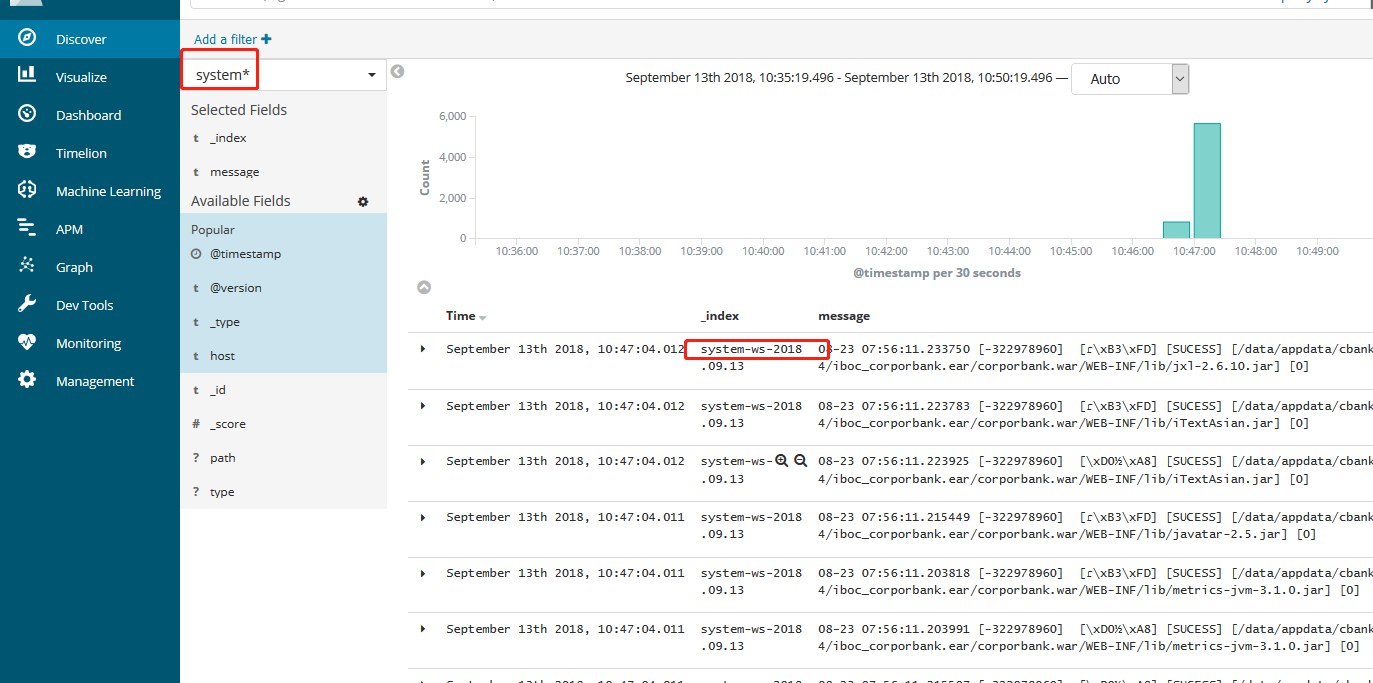

Viewing the logs shipped to Elasticsearch in Kibana

Reference: http://www.cnblogs.com/cocowool/p/7326527.html