A language model estimates the probability that a sequence of words is a sentence a human would say. By training it, we also get embeddings for the whole vocabulary.

An unconditional language model simply assigns probabilities to sequences of words. In practice, given the first n-1 words, it predicts the probability of the next word (that is, it learns the probability distribution of the next word).

Because of the chain rule of probability, we only need to train the next-word distribution p(wn | w1, …, wn-1).
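Written out in standard notation (a reconstruction, with n as the sequence length), the chain rule factorizes the probability of a whole sequence into next-word terms:

$$
p(w_1, \dots, w_n) = \prod_{t=1}^{n} p(w_t \mid w_1, \dots, w_{t-1})
$$

Each factor has the same form, so one next-word predictor is enough to score any sequence.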

Conditional LMs

A conditional language model assigns probabilities to sequences of words, W = (w1, w2, …, wt), given some conditioning context x.
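Applying the same chain rule, and assuming the context x is available at every step, the conditional model can be written as:

$$
p(W \mid x) = \prod_{i=1}^{t} p(w_i \mid x, w_1, \dots, w_{i-1})
$$

The only change from the unconditional case is that every factor also conditions on x.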

For example, in the translation task we are given the original sentence and its translation. The original sentence is the conditioning context, and we use it to predict the target sentence.

Data for training conditional LMs:

To train conditional language models, we need paired samples (x, W) of a conditioning context and its target sequence.

Typical tasks: translation, summarisation, caption generation, speech recognition.

How to evaluate the conditional LMs?

- Traditional methods: use cross-entropy or perplexity (hard to interpret, easy to implement).

- Task-specific evaluation: compare the model’s most likely output to a human-generated reference output, using metrics such as 【BLEU】, METEOR, ROUGE… (okay to interpret, easy to implement).

- Human evaluation: Hard to implement.
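As a rough sketch of the first option, cross-entropy and perplexity can be computed from the probabilities the model assigns to each reference token. The function names and the probabilities below are made up for illustration; only the standard library is used.

```python
import math

def cross_entropy(token_probs):
    """Average negative log-probability (in nats) per reference token."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

def perplexity(token_probs):
    """Perplexity is the exponential of the per-token cross-entropy."""
    return math.exp(cross_entropy(token_probs))

# Hypothetical values of p(w_i | x, w_1..w_{i-1}) for a 4-token reference.
probs = [0.25, 0.5, 0.125, 0.5]
print(cross_entropy(probs), perplexity(probs))
```

A perplexity of k can be read as "the model is as uncertain as if it chose uniformly among k words at each step", which is why a model that assigns 0.25 to every token has perplexity 4.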

Algorithmic challenges:

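Given the conditioning context x, we want to find the most probable output sequence of words. We cannot rely on greedy search, which takes the single most probable word at each step: it is not guaranteed to find the best sequence and might not even generate a real sentence.

We use 【Beam Search】 instead.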

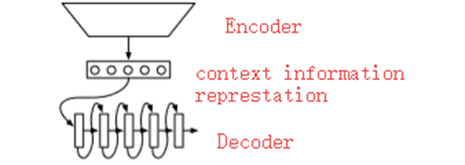

We focus on “encoder-decoder” models, which learn a function that maps x into a fixed-size vector and then use a language model to “decode” that vector into a sequence of words.

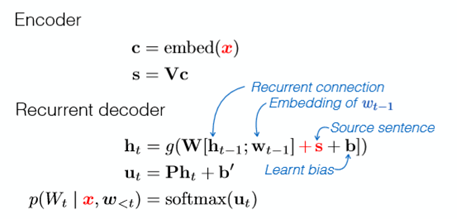

Model: Kalchbrenner and Blunsom (2013)

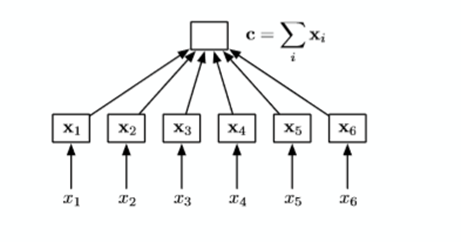

An example encoder – just a cumulative sum of the word vectors (very easy)

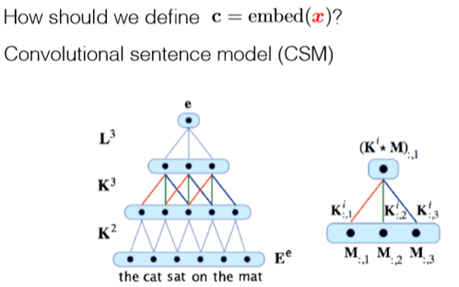
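A minimal sketch of such an additive encoder, assuming "cumsum" here means adding up the word vectors of the source sentence into one fixed-size vector. The embedding table is a made-up toy, not trained values.

```python
def additive_encoder(tokens, embeddings):
    """Encode a sentence as the element-wise sum of its word vectors."""
    dim = len(next(iter(embeddings.values())))
    c = [0.0] * dim
    for tok in tokens:
        for i, value in enumerate(embeddings[tok]):
            c[i] += value
    return c

# Hypothetical 3-dimensional embeddings for a tiny vocabulary.
toy_embeddings = {
    "the": [0.5, 0.0, 1.0],
    "cat": [1.0, 2.0, 0.0],
    "sat": [0.0, 1.0, 1.0],
}

c = additive_encoder(["the", "cat", "sat"], toy_embeddings)
print(c)
```

The output size does not depend on the sentence length, but the sum ignores word order: "the cat sat" and "sat cat the" get the same vector.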

An example encoder – CSM encoder: uses a CNN to encode

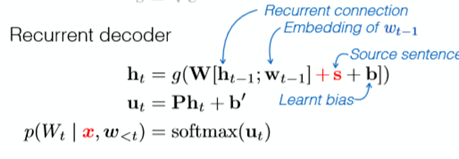

The Decoder – RNN Decoder

The computation graph is:

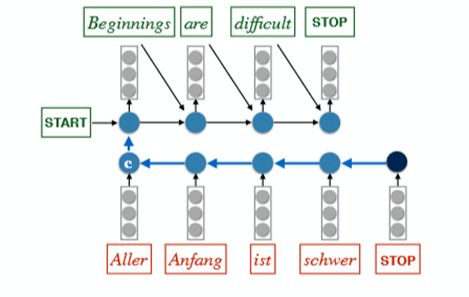
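One way to picture an RNN decoder that is conditioned on the source vector is sketched below. This is a generic conditional RNN step, not necessarily the exact parameterisation of the model above: it assumes the encoded source c is projected and added to the recurrent pre-activation at every step. All weights are random placeholders and the names (`E`, `R`, `S`, `P`) are hypothetical.

```python
import math
import random

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(u):
    top = max(u)
    exps = [math.exp(x - top) for x in u]
    z = sum(exps)
    return [e / z for e in exps]

def decoder_step(prev_word, h_prev, c, params):
    """One step: returns the new hidden state and p(w_t | x, w_<t)."""
    pre = [
        e + r + s
        for e, r, s in zip(
            params["E"][prev_word],      # embedding of the previous word
            matvec(params["R"], h_prev), # recurrent term
            matvec(params["S"], c),      # source-conditioning term
        )
    ]
    h = [math.tanh(a) for a in pre]
    return h, softmax(matvec(params["P"], h))

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
vocab = ["<s>", "</s>", "a", "b"]
H, C = 3, 2  # hidden size and source-vector size (arbitrary)
params = {
    "E": random_matrix(len(vocab), H, rng),
    "R": random_matrix(H, H, rng),
    "S": random_matrix(H, C, rng),
    "P": random_matrix(len(vocab), H, rng),
}

c = [0.3, -0.1]  # stand-in for the encoder output
h = [0.0] * H
word = 0         # start from "<s>"
for _ in range(3):
    h, probs = decoder_step(word, h, c, params)
    word = max(range(len(vocab)), key=probs.__getitem__)
    print(vocab[word], [round(p, 3) for p in probs])
```

With untrained weights the printed words are meaningless; the point is only that each step consumes the previous word, the previous hidden state and the source vector, and produces a distribution over the vocabulary.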

Sutskever et al. Model (2014):

- Important: a classic model.

Computation graph:

Some tricks for the Sutskever et al. model:

- Read the input sequence ‘backwards’: +4 BLEU

- Use an ensemble of m 【independently trained】 models (at decoding time):

- Ensemble of 2 models: +3 BLEU

- Ensemble of 5 models: +4.5 BLEU

For example:

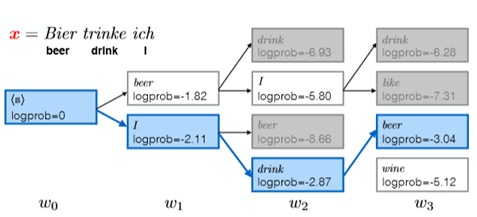
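The ensembling trick can be sketched as follows, assuming the m models are combined at each decoding step by averaging their next-word distributions (one common choice; the notes do not say which combination rule was used). The two distributions are invented for illustration.

```python
def ensemble_next_word(distributions):
    """Average the next-word distributions predicted by m models."""
    m = len(distributions)
    vocab = distributions[0].keys()
    return {w: sum(d[w] for d in distributions) / m for w in vocab}

# Hypothetical predictions of two independently trained models
# for the same prefix and the same input.
model_a = {"cat": 0.6, "dog": 0.3, "car": 0.1}
model_b = {"cat": 0.2, "dog": 0.7, "car": 0.1}

avg = ensemble_next_word([model_a, model_b])
best = max(avg, key=avg.get)
print(avg, best)
```

Here model A alone would pick "cat", but the averaged distribution prefers "dog", because model B is much more confident about it.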

- We want to find the most probable (MAP) output given the input, i.e. w* = arg max_w p(w | x).

We use beam search: +1 BLEU

For example, with a beam size of 2:
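A small sketch of beam search on a hand-made toy model shows the difference between greedy decoding (beam size 1) and a beam size of 2. `TOY_MODEL` and `next_word_probs` are hypothetical stand-ins for a trained conditional language model with a fixed input x.

```python
import math

EOS = "</s>"

# Hypothetical p(next word | x, prefix) for one fixed input x.
TOY_MODEL = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.55, "y": 0.45},
    ("b",): {"z": 0.9, "w": 0.1},
}

def next_word_probs(prefix):
    # Any prefix not in the table ends the sentence with probability 1.
    return TOY_MODEL.get(tuple(prefix), {EOS: 1.0})

def beam_search(beam_size, max_len=10):
    """Return (log-probability, words) of the best hypothesis found."""
    beam = [(0.0, [])]
    finished = []
    for _ in range(max_len):
        # Extend every hypothesis in the beam by every possible next word.
        candidates = [
            (logp + math.log(p), words + [w])
            for logp, words in beam
            for w, p in next_word_probs(words).items()
        ]
        candidates.sort(key=lambda item: item[0], reverse=True)
        # Keep the top `beam_size`; completed hypotheses leave the beam.
        beam = []
        for item in candidates[:beam_size]:
            (finished if item[1][-1] == EOS else beam).append(item)
        if not beam:
            break
    return max(finished or beam, key=lambda item: item[0])

for k in (1, 2):
    logp, words = beam_search(k)
    print(k, words, round(math.exp(logp), 2))
```

With beam size 1 the search commits to "a" (0.6) and ends with "a x" at probability 0.33. With beam size 2 it also keeps "b" alive and finds "b z" at probability 0.36, which is the most probable sequence in this toy model.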

Example application: image caption generation

Encoder: CNN

Decoder: RNN or conditional n-gram LM

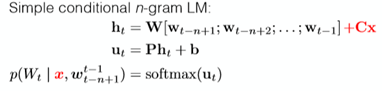

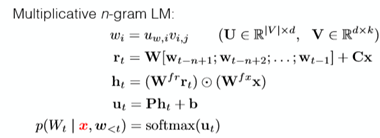

A conditional n-gram LM is different from the RNN decoder, but it is useful.

We must already have a dataset to train on.

The Kiros et al. model does this.
