1. Basic concepts

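Deploying complex application middleware means specifying the image's runtime requirements and environment variables, customizing storage, networking, and other settings, and then designing and writing the Deployment, ConfigMap, Service, Ingress, and other related YAML files before submitting them to Kubernetes. Helm, an application package manager, increasingly takes over this complex process.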

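Helm is a project incubated and managed by the CNCF for defining, installing, and upgrading complex applications deployed on Kubernetes. Helm describes applications as Charts, which makes it easy to create, version, share, and publish complex applications.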

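Chart: a Helm package containing the tools and resource definitions needed to run an application, and possibly service definitions inside the Kubernetes cluster. It is analogous to a Homebrew formula, an apt dpkg, or a yum RPM file.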

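Release: an instance of a Chart running in a Kubernetes cluster. A Chart can be installed multiple times in the same cluster. For example, given a MySQL Chart, if you want two databases running, you install that Chart twice. Each installation creates a new Release with its own Release name.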

Repository: a repository for storing and sharing Charts.

In short, Helm's job is to find the required Chart in a repository and install it into the Kubernetes cluster as a Release.
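To make the Chart/Release distinction concrete, here is a minimal sketch in Helm v2 syntax (the version used in this article, where `--name` sets the Release name): installing the same Chart twice yields two independent Releases. The chart `stable/mysql` and the release names `mysql-a` and `mysql-b` are hypothetical, and the small `run` helper only prints the commands when `DRY_RUN` is set.

```shell
#!/usr/bin/env bash
# Sketch: one Chart, several Releases (Helm v2 CLI, where --name sets the release name).
# Hypothetical: chart "stable/mysql", release names "mysql-a" and "mysql-b".
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# install_releases CHART NAME...: one `helm install` (one Release) per NAME.
install_releases() {
  local chart="$1"
  shift
  local name
  for name in "$@"; do
    run helm install --name "$name" "$chart"
  done
}

# Example: install_releases stable/mysql mysql-a mysql-b
```

After a real run, `helm list` would be expected to show both Releases, each with its own name but built from the same Chart.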

For Harbor's basic concepts, this article is a good read.

2. Installing Helm

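Helm (v2, the version used here; Helm v3 later removed Tiller) consists of two components:

- HelmClient: the client, which manages Repositories, Charts, Releases, and other objects.

- TillerServer: the server side, which relays client commands to the Kubernetes cluster and, based on Chart definitions, creates and manages the various Kubernetes resource objects.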

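Install the Helm client, either from a binary release or with the install script.

Download the latest binary release (v2.11.0 at the time of writing) from https://github.com/helm/helm/releases: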

    [root@k8s-master01 ~]# tar xf helm-v2.11.0-linux-amd64.tar.gz
    [root@k8s-master01 ~]# cp linux-amd64/helm linux-amd64/tiller /usr/local/bin/

    [root@k8s-master01 ~]# helm version
    Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}
    Error: could not find tiller

The error is expected at this point: the Tiller server has not been installed yet.

Installing the Tiller server

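On every node, pull the tiller:v[helm-version] image, where helm-version is the Helm version installed above (2.11.0):

    docker pull dotbalo/tiller:v2.11.0

Also install socat on every node. The Helm v2 client reaches Tiller through a port-forward tunnel, and port forwarding on the node relies on socat:

    yum install socat -y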
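If the cluster has several nodes, the two per-node steps above can be pushed out in one loop. This is a sketch that assumes passwordless root SSH to every node; the host names in `NODES` are hypothetical placeholders for your own nodes.

```shell
#!/usr/bin/env bash
# Sketch: run the per-node Tiller preparation on every node over SSH.
# Assumptions: passwordless root SSH; the node names below are hypothetical.
set -euo pipefail

TILLER_IMAGE="dotbalo/tiller:v2.11.0"
NODES=(k8s-master01 k8s-node01 k8s-node02)   # hypothetical host names

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# prepare_node NODE: pull the Tiller image and install socat on one node.
prepare_node() {
  run ssh "root@$1" "docker pull ${TILLER_IMAGE} && yum install -y socat"
}

prepare_all_nodes() {
  local node
  for node in "$@"; do
    prepare_node "$node"
  done
}

# Example: prepare_all_nodes "${NODES[@]}"
```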

Install Tiller with helm init, pointing --tiller-image at the image pulled above:

    [root@k8s-master01 ~]# helm init --tiller-image dotbalo/tiller:v2.11.0
    Creating /root/.helm
    Creating /root/.helm/repository
    Creating /root/.helm/repository/cache
    Creating /root/.helm/repository/local
    Creating /root/.helm/plugins
    Creating /root/.helm/starters
    Creating /root/.helm/cache/archive
    Creating /root/.helm/repository/repositories.yaml
    Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com
    Adding local repo with URL: http://127.0.0.1:8879/charts
    $HELM_HOME has been configured at /root/.helm.
    Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
    Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
    To prevent this, run `helm init` with the --tiller-tls-verify flag.
    For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation
    Happy Helming!

Check helm version and the Tiller pod again:

    [root@k8s-master01 ~]# helm version
    Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}
    Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

    [root@k8s-master01 ~]# kubectl get pod -n kube-system | grep tiller
    tiller-deploy-5d7c8fcd59-d4djx   1/1   Running   0     49s
    [root@k8s-master01 ~]# kubectl get pod,svc -n kube-system | grep tiller
    pod/tiller-deploy-5d7c8fcd59-d4djx   1/1         Running         0        3m
    service/tiller-deploy                ClusterIP   10.106.28.190   <none>   44134/TCP   5m
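Rather than re-running `kubectl get pod` until Tiller shows Running, you can let kubectl wait for the Deployment. A sketch, assuming the default object names that `helm init` created above (Deployment `tiller-deploy` in `kube-system`):

```shell
#!/usr/bin/env bash
# Sketch: wait for the Tiller Deployment created by `helm init`, then check the server side.
# Assumes the default names: deployment "tiller-deploy" in namespace "kube-system".
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

wait_for_tiller() {
  # Blocks until the rollout completes (or fails).
  run kubectl -n kube-system rollout status deployment/tiller-deploy
  # Should now print both the Client and the Server version.
  run helm version
}

# Example: wait_for_tiller
```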

3. Using Helm

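3.1 helm search: finding available Charts

After initialization, Helm is configured by default to use the official Kubernetes chart repository.

Use helm search to find available Charts: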

    [root@k8s-master01 ~]# helm search gitlab
    NAME              CHART VERSION   APP VERSION   DESCRIPTION
    stable/gitlab-ce  0.2.2           9.4.1         GitLab Community Edition
    stable/gitlab-ee  0.2.2           9.4.1         GitLab Enterprise Edition
    [root@k8s-master01 ~]# helm search | more
    NAME                          CHART VERSION   APP VERSION   DESCRIPTION
    stable/acs-engine-autoscaler  2.2.0           2.1.1         Scales worker nodes within agent pools
    stable/aerospike              0.1.7           v3.14.1.2     A Helm chart for Aerospike in Kubernetes
    stable/anchore-engine         0.9.0           0.3.0         Anchore container analysis and policy evaluation engine s...
    stable/apm-server             0.1.0           6.2.4         The server receives data from the Elastic APM agents and ...
    stable/ark                    1.2.2           0.9.1         A Helm chart for ark
    stable/artifactory            7.3.1           6.1.0         DEPRECATED Universal Repository Manager supporting all ma...
    stable/artifactory-ha         0.4.1           6.2.0         DEPRECATED Universal Repository Manager supporting all ma...
    stable/auditbeat              0.3.1           6.4.3         A lightweight shipper to audit the activities of users an...
    --More--

Use helm inspect to view a Chart's details:

    [root@k8s-master01 ~]# helm inspect stable/gitlab-ce
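`helm inspect` shows a Chart's metadata, default values, and README. A common next step, sketched here, is to dump only the default values with `helm inspect values`, edit a copy, and pass it back with `-f` at install time; this is the same idea as editing harbor-helm's values.yaml later in this article. The release name, namespace, and file name are hypothetical, and the commands use Helm v2 syntax.

```shell
#!/usr/bin/env bash
# Sketch (Helm v2 syntax): save a chart's default values, then install with an edited copy.
# Hypothetical names: release "gitlab", namespace "gitlab", file "gitlab-values.yaml".
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# dump_values CHART FILE: write the chart's default values to FILE.
dump_values() {
  local chart="$1" file="$2"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "helm inspect values $chart > $file"
  else
    helm inspect values "$chart" > "$file"
  fi
}

# install_with_values CHART RELEASE FILE NAMESPACE: install using the edited values.
install_with_values() {
  run helm install --name "$2" -f "$3" --namespace "$4" "$1"
}

# Example:
#   dump_values stable/gitlab-ce gitlab-values.yaml
#   vi gitlab-values.yaml
#   install_with_values stable/gitlab-ce gitlab gitlab-values.yaml gitlab
```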

3.2 Installing Harbor with Helm

Use helm repo remove and helm repo add to remove the default repositories and add the Aliyun repository:
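The exact remove/add commands are not shown in the original; they might look like the following sketch. `helm init` registered the `stable` and `local` repositories, and the listing below shows only `aliyun`, so both are assumed to have been removed. The repository URL is the one shown in that listing.

```shell
#!/usr/bin/env bash
# Sketch: swap Helm v2's default repositories for the Aliyun mirror.
# Assumption: both "stable" and "local" (added by `helm init`) are removed,
# matching the `helm repo list` output that follows.
set -euo pipefail

ALIYUN_REPO="https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts"

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

switch_to_aliyun() {
  run helm repo remove stable
  run helm repo remove local
  run helm repo add aliyun "$ALIYUN_REPO"
  # Refresh the local index so `helm search` sees the new repository.
  run helm repo update
}

# Example: switch_to_aliyun && helm repo list
```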

    [root@k8s-master01 harbor-helm]# helm repo list
    NAME     URL
    aliyun   https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Clone the harbor-helm repository and check out the 0.3.0 branch:

    git clone https://github.com/goharbor/harbor-helm.git
    cd harbor-helm
    git checkout 0.3.0

Edit requirements.yaml so the redis dependency comes from the Aliyun repository:

    [root@k8s-master01 harbor-helm]# cat requirements.yaml
    dependencies:
    - name: redis
      version: 1.1.15
      repository: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
      #repository: https://kubernetes-charts.storage.googleapis.com

Download the dependencies:

    [root@k8s-master01 harbor-helm]# helm dependency update
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "aliyun" chart repository
    Update Complete. ⎈Happy Helming!⎈
    Saving 1 charts
    Downloading redis from repo https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    Deleting outdated charts

Pull the required images on every node:

    docker pull goharbor/chartmuseum-photon:v0.7.1-v1.6.0
    docker pull goharbor/harbor-adminserver:v1.6.0
    docker pull goharbor/harbor-jobservice:v1.6.0
    docker pull goharbor/harbor-ui:v1.6.0
    docker pull goharbor/harbor-db:v1.6.0
    docker pull goharbor/registry-photon:v2.6.2-v1.6.0
    docker pull goharbor/clair-photon:v2.0.5-v1.6.0
    docker pull goharbor/notary-server-photon:v0.5.1-v1.6.0
    docker pull goharbor/notary-signer-photon:v0.5.1-v1.6.0
    docker pull bitnami/redis:4.0.8-r2
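Since every node needs the same set of images, a small loop saves typing. A sketch, meant to be run on each node (for example over ssh); the image tags are the ones listed above, and the `sort -u` step only guards against listing an image twice.

```shell
#!/usr/bin/env bash
# Sketch: pull the Harbor v1.6.0 and Redis images on the current node.
# Run it on every node; the tags are the ones used in this article.
set -euo pipefail

IMAGES=(
  goharbor/chartmuseum-photon:v0.7.1-v1.6.0
  goharbor/harbor-adminserver:v1.6.0
  goharbor/harbor-jobservice:v1.6.0
  goharbor/harbor-ui:v1.6.0
  goharbor/harbor-db:v1.6.0
  goharbor/registry-photon:v2.6.2-v1.6.0
  goharbor/clair-photon:v2.0.5-v1.6.0
  goharbor/notary-server-photon:v0.5.1-v1.6.0
  goharbor/notary-signer-photon:v0.5.1-v1.6.0
  bitnami/redis:4.0.8-r2
)

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# pull_images IMAGE...: pull each distinct image once.
pull_images() {
  local -a uniq=()
  local img
  mapfile -t uniq < <(printf '%s\n' "$@" | sort -u)
  for img in "${uniq[@]}"; do
    run docker pull "$img"
  done
}

# Example: pull_images "${IMAGES[@]}"
```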

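In values.yaml, set every storageClass to storageClass: "gluster-heketi".

In the redis section of values.yaml, also add a port under master (without it, Harbor connects to Redis on port 0; see item 6 in the summary):

    master:
      port: 6379

In the redis chart packaged as charts/redis-1.1.15.tgz, edit the chart's own values.yaml as well: set storageClass to "gluster-heketi" and usePassword to false.

In the same archive, change the Service name in the redis chart's templates/svc.yaml to:

    name: {{ template "redis.fullname" . }}-master

Also adjust the storage sizes as needed, for example for the registry.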
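The manual edits above can be scripted so they are easy to repeat after each `helm dependency update`, which re-downloads charts/redis-1.1.15.tgz and would likely discard edits made inside it. A sketch with GNU sed and tar; it assumes the archive unpacks to a top-level `redis/` directory (the usual layout of a packaged chart) and that the Service template is `redis/templates/svc.yaml`. The regular expressions are assumptions about how these lines look in the files, so check the result before installing.

```shell
#!/usr/bin/env bash
# Sketch: script the values.yaml / redis subchart edits with GNU sed and tar.
# Assumptions: run from the harbor-helm checkout; the subchart archive unpacks
# to a top-level "redis/" directory (the usual layout of a packaged chart).
set -euo pipefail

# Point every storageClass line, commented out or not, at CLASS, keeping the
# original indentation. CLASS must not contain "/" or "&" (sed replacement).
set_storage_class() {
  local file="$1" class="$2"
  sed -E -i "s/^([[:space:]]*)#?[[:space:]]*storageClass:.*/\1storageClass: \"${class}\"/" "$file"
}

# Disable Redis password authentication in the subchart values.
disable_redis_password() {
  sed -E -i 's/^([[:space:]]*)usePassword:.*/\1usePassword: false/' "$1"
}

# Append "-master" to the Service name so it matches what the harbor chart looks up.
rename_redis_master_svc() {
  sed -E -i 's/^([[:space:]]*name: [{][{] template "redis[.]fullname" [.] [}][}])[[:space:]]*$/\1-master/' "$1"
}

# patch_redis_subchart TGZ CLASS: unpack, apply the three edits, repack in place.
patch_redis_subchart() {
  local tgz="$1" class="$2"
  local dir
  dir="$(dirname "$tgz")"
  tar -xzf "$tgz" -C "$dir"
  set_storage_class "$dir/redis/values.yaml" "$class"
  disable_redis_password "$dir/redis/values.yaml"
  rename_redis_master_svc "$dir/redis/templates/svc.yaml"
  tar -czf "$tgz" -C "$dir" redis
  # Leave only the archive in charts/, as helm dependency update does.
  rm -rf "${dir:?}/redis"
}

# Example:
#   set_storage_class values.yaml gluster-heketi
#   patch_redis_subchart charts/redis-1.1.15.tgz gluster-heketi
```

The port addition under `master` is left as a manual edit, since its exact position in the harbor values.yaml is not known here.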

Install Harbor:

    helm install --name harbor-v1 . --wait --timeout 1500 --debug --namespace harbor

If the install fails with a forbidden error, create a service account for Tiller:

    [root@k8s-master01 harbor-helm]# helm install --name harbor-v1 . --set externalDomain=harbor.xxx.net --wait --timeout 1500 --debug --namespace harbor
    [debug] Created tunnel using local port: '35557'
    [debug] SERVER: "127.0.0.1:35557"
    [debug] Original chart version: ""
    [debug] CHART PATH: /root/harbor-helm
    Error: release harbor-v1 failed: namespaces "harbor" is forbidden: User "system:serviceaccount:kube-system:default" cannot get namespaces in the namespace "harbor"

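Fix: the error shows Tiller running as the default service account of kube-system, which lacks the RBAC permissions it needs (here, to get the harbor namespace). Give Tiller its own service account bound to cluster-admin, and switch the Tiller Deployment to it:

    kubectl create serviceaccount --namespace kube-system tiller
    kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
    kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'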
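The three commands above can also be expressed as a manifest. In this sketch, the ServiceAccount and ClusterRoleBinding mirror what `kubectl create serviceaccount` and `kubectl create clusterrolebinding` produce. On a fresh cluster you could apply them before installing Tiller and pass `--service-account tiller` to `helm init` (a Helm v2 flag), which sidesteps both the forbidden error and the `kubectl patch` step. Binding Tiller to cluster-admin gives it full control of the cluster, which is convenient for a lab but broad for production.

```shell
#!/usr/bin/env bash
# Sketch: declarative equivalent of the serviceaccount + clusterrolebinding commands.
# cluster-admin gives Tiller full control of the cluster; narrow it for production use.
set -euo pipefail

tiller_rbac_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller-cluster-rule
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system
EOF
}

# apply_tiller_rbac: create the objects, then install Tiller with that account (Helm v2).
apply_tiller_rbac() {
  tiller_rbac_manifest | kubectl apply -f -
  helm init --service-account tiller --tiller-image dotbalo/tiller:v2.11.0
}

# Example (fresh cluster, before Tiller is installed): apply_tiller_rbac
```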

Deploy again (excerpt of the output):

    ......
    ==> v1/Pod(related)
    NAME                                              READY   STATUS    RESTARTS   AGE
    harbor-v1-redis-84dffd8574-xzrsh                  0/1     Running   0          <invalid>
    harbor-v1-harbor-adminserver-5b59c684b4-g6cjc     1/1     Running   0          <invalid>
    harbor-v1-harbor-chartmuseum-699cf6599-q6vfw      1/1     Running   0          <invalid>
    harbor-v1-harbor-clair-6d9bb84485-2p52v           1/1     Running   0          <invalid>
    harbor-v1-harbor-jobservice-5c9496775d-sj6mb      1/1     Running   0          <invalid>
    harbor-v1-harbor-notary-server-5fb65b6866-dnnnk   1/1     Running   0          <invalid>
    harbor-v1-harbor-notary-signer-5bfcfcd5cf-j774t   1/1     Running   0          <invalid>
    harbor-v1-harbor-registry-75c9b6b457-pqxj6        1/1     Running   0          <invalid>
    harbor-v1-harbor-ui-5974bd5549-zl9nj              1/1     Running   0          <invalid>
    harbor-v1-harbor-database-0                       1/1     Running   0          <invalid>
    ==> v1/Secret
    NAME                           AGE
    harbor-v1-harbor-adminserver   <invalid>
    harbor-v1-harbor-chartmuseum   <invalid>
    harbor-v1-harbor-database      <invalid>
    harbor-v1-harbor-ingress       <invalid>
    harbor-v1-harbor-jobservice    <invalid>
    harbor-v1-harbor-registry      <invalid>
    harbor-v1-harbor-ui            <invalid>
    NOTES:
    Please wait for several minutes for Harbor deployment to complete.
    Then you should be able to visit the UI portal at https://core.harbor.domain.
    For more details, please visit https://github.com/goharbor/harbor.
    ......

Check the pods:

    [root@k8s-master01 harbor-helm]# kubectl get pod -n harbor
    NAME                                              READY   STATUS    RESTARTS   AGE
    harbor-v1-harbor-adminserver-5b59c684b4-g6cjc     1/1     Running   1          2m
    harbor-v1-harbor-chartmuseum-699cf6599-q6vfw      1/1     Running   0          2m
    harbor-v1-harbor-clair-6d9bb84485-2p52v           1/1     Running   1          2m
    harbor-v1-harbor-database-0                       1/1     Running   0          2m
    harbor-v1-harbor-jobservice-5c9496775d-sj6mb      1/1     Running   1          2m
    harbor-v1-harbor-notary-server-5fb65b6866-dnnnk   1/1     Running   0          2m
    harbor-v1-harbor-notary-signer-5bfcfcd5cf-j774t   1/1     Running   0          2m
    harbor-v1-harbor-registry-75c9b6b457-pqxj6        1/1     Running   0          2m
    harbor-v1-harbor-ui-5974bd5549-zl9nj              1/1     Running   2          2m
    harbor-v1-redis-84dffd8574-xzrsh                  1/1     Running   0          2m

Check the services:

    [root@k8s-master01 harbor-helm]# kubectl get svc -n harbor
    NAME                                                          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    glusterfs-dynamic-database-data-harbor-v1-harbor-database-0   ClusterIP   10.101.10.82     <none>        1/TCP      2h
    glusterfs-dynamic-harbor-v1-harbor-chartmuseum                ClusterIP   10.97.114.51     <none>        1/TCP      36s
    glusterfs-dynamic-harbor-v1-harbor-registry                   ClusterIP   10.98.207.16     <none>        1/TCP      36s
    glusterfs-dynamic-harbor-v1-redis                             ClusterIP   10.105.214.102   <none>        1/TCP      31s
    harbor-v1-harbor-adminserver                                  ClusterIP   10.99.152.38     <none>        80/TCP     3m
    harbor-v1-harbor-chartmuseum                                  ClusterIP   10.99.237.224    <none>        80/TCP     3m
    harbor-v1-harbor-clair                                        ClusterIP   10.98.217.176    <none>        6060/TCP   3m
    harbor-v1-harbor-database                                     ClusterIP   10.111.182.188   <none>        5432/TCP   3m
    harbor-v1-harbor-jobservice                                   ClusterIP   10.98.202.61     <none>        80/TCP     3m
    harbor-v1-harbor-notary-server                                ClusterIP   10.110.72.98     <none>        4443/TCP   3m
    harbor-v1-harbor-notary-signer                                ClusterIP   10.106.234.19    <none>        7899/TCP   3m
    harbor-v1-harbor-registry                                     ClusterIP   10.98.80.141     <none>        5000/TCP   3m
    harbor-v1-harbor-ui                                           ClusterIP   10.98.240.15     <none>        80/TCP     3m
    harbor-v1-redis                                               ClusterIP   10.107.234.107   <none>        6379/TCP   3m

Check the PVs and PVCs:

    [root@k8s-master01 harbor-helm]# kubectl get pv,pvc -n harbor | grep harbor
    persistentvolume/pvc-080d1242-e990-11e8-8a89-000c293ad492   1Gi    RWO   Delete   Bound   harbor/database-data-harbor-v1-harbor-database-0   gluster-heketi   2h
    persistentvolume/pvc-f573b165-e9a3-11e8-882f-000c293bfe27   8Gi    RWO   Delete   Bound   harbor/harbor-v1-redis                             gluster-heketi   1m
    persistentvolume/pvc-f575855d-e9a3-11e8-882f-000c293bfe27   5Gi    RWO   Delete   Bound   harbor/harbor-v1-harbor-chartmuseum                gluster-heketi   1m
    persistentvolume/pvc-f577371b-e9a3-11e8-882f-000c293bfe27   10Gi   RWO   Delete   Bound   harbor/harbor-v1-harbor-registry                   gluster-heketi   1m
    persistentvolumeclaim/database-data-harbor-v1-harbor-database-0   Bound   pvc-080d1242-e990-11e8-8a89-000c293ad492   1Gi    RWO   gluster-heketi   2h
    persistentvolumeclaim/harbor-v1-harbor-chartmuseum                Bound   pvc-f575855d-e9a3-11e8-882f-000c293bfe27   5Gi    RWO   gluster-heketi   4m
    persistentvolumeclaim/harbor-v1-harbor-registry                   Bound   pvc-f577371b-e9a3-11e8-882f-000c293bfe27   10Gi   RWO   gluster-heketi   4m
    persistentvolumeclaim/harbor-v1-redis                             Bound   pvc-f573b165-e9a3-11e8-882f-000c293bfe27   8Gi    RWO   gluster-heketi   4m

Check the ingress:

    [root@k8s-master01 harbor-helm]# vim values.yaml
    [root@k8s-master01 harbor-helm]# kubectl get ingress -n harbor
    NAME                       HOSTS                                     ADDRESS   PORTS     AGE
    harbor-v1-harbor-ingress   core.harbor.domain,notary.harbor.domain             80, 443   53m

The domain can also be set at install time with --set externalURL=xxx.com.

To uninstall:

    helm del --purge harbor-v1
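`helm del --purge` removes the Release and the resources it created, but PersistentVolumeClaims generated from a StatefulSet's volumeClaimTemplates are not deleted with it. That is likely why `database-data-harbor-v1-harbor-database-0` in the PVC listing above is much older (2h) than the other claims: it appears to have survived an earlier purge. A cleanup sketch, assuming you really want to discard the database data (with reclaim policy Delete, removing the claim also removes the GlusterFS volume behind it):

```shell
#!/usr/bin/env bash
# Sketch: uninstall the Harbor release and remove the StatefulSet PVC the purge leaves behind.
# WARNING: with reclaim policy Delete this destroys the database volume.
set -euo pipefail

RELEASE="harbor-v1"
NAMESPACE="harbor"

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

uninstall_harbor() {
  run helm del --purge "$RELEASE"
  # StatefulSet PVC name = <claim template>-<pod name>, as in the listing above.
  run kubectl -n "$NAMESPACE" delete pvc "database-data-${RELEASE}-harbor-database-0"
}

# Example: uninstall_harbor
```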

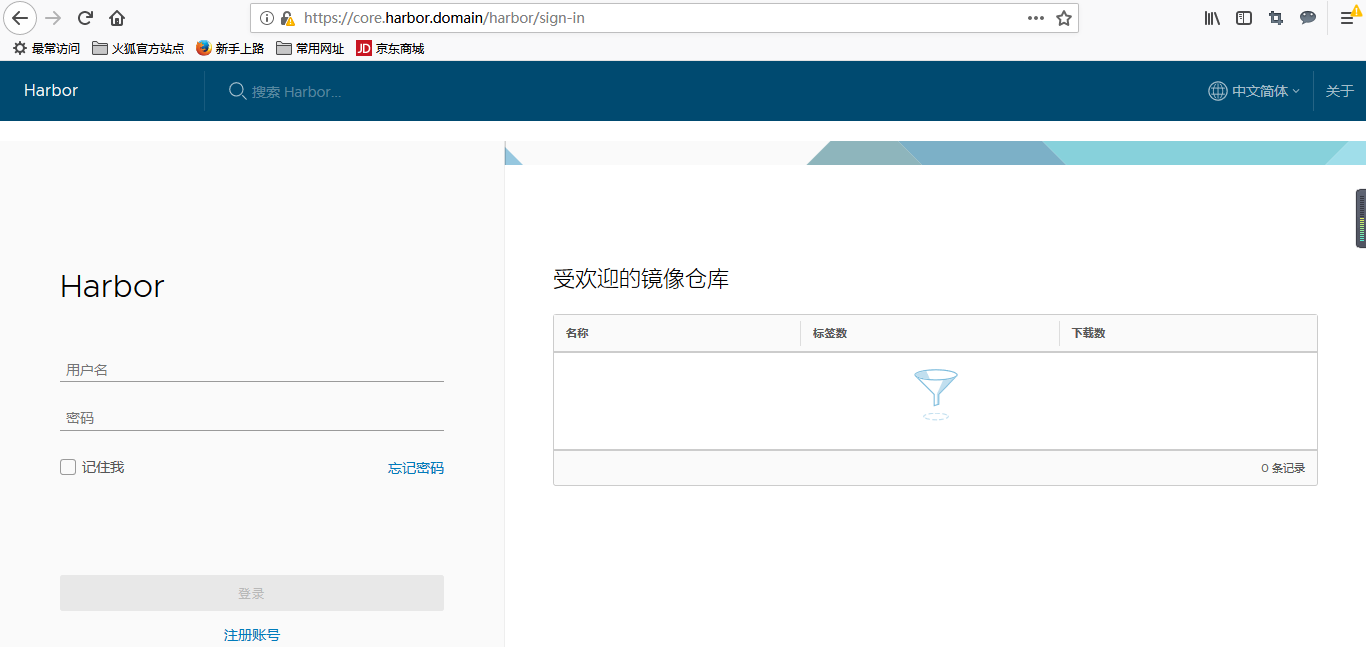

4. Using Harbor

To test access, make the domain core.harbor.domain above resolve to any Kubernetes node.
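On a test client, the simplest way to resolve the ingress hosts is an /etc/hosts entry. In this sketch, the helper appends a line only for host names that are not already mapped, so it is safe to re-run. The node IP `192.168.1.10` in the example is a hypothetical placeholder. `notary.harbor.domain` is included because the ingress above serves it too.

```shell
#!/usr/bin/env bash
# Sketch: map Harbor's ingress host names to a node IP in a hosts file (idempotent).
# The IP in the example is a hypothetical placeholder for one of your nodes.
set -euo pipefail

# host_mapped HOST FILE: succeed if HOST already appears as a name on a non-comment line.
host_mapped() {
  awk -v h="$1" '
    /^[ \t]*#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
    END { exit !found }
  ' "$2"
}

# add_hosts_entry IP FILE HOST...: append "IP HOST..." for the hosts not yet mapped.
add_hosts_entry() {
  local ip="$1" file="$2"
  shift 2
  local host
  local -a missing=()
  for host in "$@"; do
    if ! host_mapped "$host" "$file"; then
      missing+=("$host")
    fi
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "$ip ${missing[*]}" >> "$file"
  fi
}

# Example:
#   add_hosts_entry 192.168.1.10 /etc/hosts core.harbor.domain notary.harbor.domain
```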

Default credentials: admin/Harbor12345

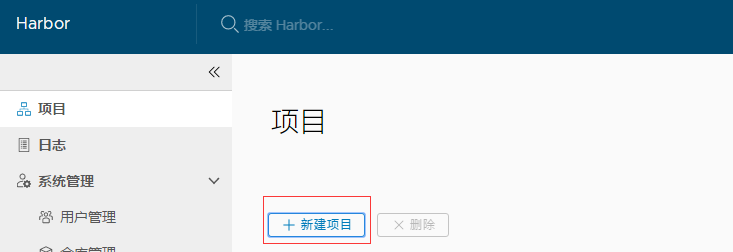

Create a project for the development environment (the push example below uses a project named develop):

5. Using Harbor from Kubernetes

View the CA certificate that the Harbor deployment generated, stored in the ingress TLS secret:

    [root@k8s-master01 ~]# kubectl get secrets/harbor-v1-harbor-ingress -n harbor -o jsonpath="{.data.ca\.crt}" | base64 --decode
    -----BEGIN CERTIFICATE-----
    MIIC9DCCAdygAwIBAgIQffFj8E2+DLnbT3a3XRXlBjANBgkqhkiG9w0BAQsFADAU
    MRIwEAYDVQQDEwloYXJib3ItY2EwHhcNMTgxMTE2MTYwODA5WhcNMjgxMTEzMTYw
    ODA5WjAUMRIwEAYDVQQDEwloYXJib3ItY2EwggEiMA0GCSqGSIb3DQEBAQUAA4IB
    DwAwggEKAoIBAQDw1WP6S3O+7zrhVAAZGcrAEdeQxr0c53eyDGcPL6my/h+FhZ1Y
    KBvY5CLDVES957u/GtEXFfZr9aQT/PZECcccPcyZvt8NscEAuQONfrQFH/VLCvwm
    XOcbFDR5BXDJR8nqGT6DVq8a1HUEOxiY39bp/Jz2HrDIfD9IMwEuyh/2IVXYHwD0
    deaBpOY1slSylpOYWPFfy9UMfCsd+Jc7UCzRaiP3XWP9HMFKc4JTU8CDRR80s9UM
    siU8QheVXn/Y9SxKaDfrYjaVUkEfJ6cAZkkDLmM1OzSU73N7I4nmm1SUS99vdSiZ
    yu/R4oDFMezOkvYGBeDhLmmkK3sqWRh+dNoNAgMBAAGjQjBAMA4GA1UdDwEB/wQE
    AwICpDAdBgNVHSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDwYDVR0TAQH/BAUw
    AwEB/zANBgkqhkiG9w0BAQsFAAOCAQEAJjANauFSPZ+Da6VJSV2lGirpQN+EnrTl
    u5VJxhQQGr1of4Je7aej6216KI9W5/Q4lDQfVOa/5JO1LFaiWp1AMBOlEm7FNiqx
    LcLZzEZ4i6sLZ965FdrPGvy5cOeLa6D8Vx4faDCWaVYOkXoi/7oH91IuH6eEh+1H
    u/Kelp8WEng4vfEcXRKkq4XTO51B1Mg1g7gflxMIoeSpXYSO5qwIL5ZqvoAD9H7J
    CnQFO2xO3wrLq6TXH5Z7+0GWNghGk0GIOvF/ULHLWpsyhU5asKLK//MvORwQNHzL
    b5LHG9uYeI+Jf12X4TI9qDaTCstiqM8vk1JPvgtSPJ9M62nRKY4ang==
    -----END CERTIFICATE-----

Save the certificate where Docker looks for registry CAs (/etc/docker/certs.d/<registry host>/ca.crt), on each node that will use Harbor:

    mkdir -p /etc/docker/certs.d/core.harbor.domain
    cat <<EOF > /etc/docker/certs.d/core.harbor.domain/ca.crt
    -----BEGIN CERTIFICATE-----
    MIIC9DCCAdygAwIBAgIQffFj8E2+DLnbT3a3XRXlBjANBgkqhkiG9w0BAQsFADAU
    MRIwEAYDVQQDEwloYXJib3ItY2EwHhcNMTgxMTE2MTYwODA5WhcNMjgxMTEzMTYw
    ODA5WjAUMRIwEAYDVQQDEwloYXJib3ItY2EwggEiMA0GCSqGSIb3DQEBAQUAA4IB
    DwAwggEKAoIBAQDw1WP6S3O+7zrhVAAZGcrAEdeQxr0c53eyDGcPL6my/h+FhZ1Y
    KBvY5CLDVES957u/GtEXFfZr9aQT/PZECcccPcyZvt8NscEAuQONfrQFH/VLCvwm
    XOcbFDR5BXDJR8nqGT6DVq8a1HUEOxiY39bp/Jz2HrDIfD9IMwEuyh/2IVXYHwD0
    deaBpOY1slSylpOYWPFfy9UMfCsd+Jc7UCzRaiP3XWP9HMFKc4JTU8CDRR80s9UM
    siU8QheVXn/Y9SxKaDfrYjaVUkEfJ6cAZkkDLmM1OzSU73N7I4nmm1SUS99vdSiZ
    yu/R4oDFMezOkvYGBeDhLmmkK3sqWRh+dNoNAgMBAAGjQjBAMA4GA1UdDwEB/wQE
    AwICpDAdBgNVHSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDwYDVR0TAQH/BAUw
    AwEB/zANBgkqhkiG9w0BAQsFAAOCAQEAJjANauFSPZ+Da6VJSV2lGirpQN+EnrTl
    u5VJxhQQGr1of4Je7aej6216KI9W5/Q4lDQfVOa/5JO1LFaiWp1AMBOlEm7FNiqx
    LcLZzEZ4i6sLZ965FdrPGvy5cOeLa6D8Vx4faDCWaVYOkXoi/7oH91IuH6eEh+1H
    u/Kelp8WEng4vfEcXRKkq4XTO51B1Mg1g7gflxMIoeSpXYSO5qwIL5ZqvoAD9H7J
    CnQFO2xO3wrLq6TXH5Z7+0GWNghGk0GIOvF/ULHLWpsyhU5asKLK//MvORwQNHzL
    b5LHG9uYeI+Jf12X4TI9qDaTCstiqM8vk1JPvgtSPJ9M62nRKY4ang==
    -----END CERTIFICATE-----
    EOF
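Copying the PEM by hand is error-prone, so the certificate can also be written straight from the secret. This sketch assumes kubectl access on the machine where it runs; for other nodes, copy the resulting file over. Note the escaped dot in the JSONPath (`ca\.crt`): the data key is literally `ca.crt`.

```shell
#!/usr/bin/env bash
# Sketch: write Harbor's CA certificate from the ingress secret into Docker's certs.d.
# Assumes kubectl access and the release/namespace names used in this article.
set -euo pipefail

HARBOR_HOST="core.harbor.domain"

# fetch_harbor_ca: print the base64-encoded ca.crt from the secret.
# The dot inside the key name "ca.crt" is escaped for JSONPath.
fetch_harbor_ca() {
  kubectl -n harbor get secret harbor-v1-harbor-ingress -o jsonpath='{.data.ca\.crt}'
}

# install_ca_cert DEST: decode base64 from stdin into DEST, creating its directory.
install_ca_cert() {
  local dest="$1"
  mkdir -p "$(dirname "$dest")"
  base64 --decode > "$dest"
  chmod 644 "$dest"
}

# Example:
#   fetch_harbor_ca | install_ca_cert "/etc/docker/certs.d/${HARBOR_HOST}/ca.crt"
```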

Restart Docker, then log in with docker login:

    [root@k8s-master01 ~]# docker login core.harbor.domain
    Username: admin
    Password:
    Login Succeeded

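If login fails with the trust error x509: certificate signed by unknown authority, add the CA to the system trust store. On CentOS/RHEL, /etc/pki/tls/certs/ca-bundle.crt is a symlink to the read-only /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem, so make that file writable first:

    chmod 644 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem

Append the ca.crt above to /etc/pki/tls/certs/ca-bundle.crt, then restore the original permissions:

    cat /etc/docker/certs.d/core.harbor.domain/ca.crt >> /etc/pki/tls/certs/ca-bundle.crt
    chmod 444 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem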
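Editing the extracted bundle directly works, but on CentOS/RHEL that file is regenerated by `update-ca-trust`, so the change may be lost on the next ca-certificates update. The supported route is to drop the CA into the anchors directory and regenerate. In this sketch, the anchor file name is a hypothetical choice, and Docker is restarted so the daemon picks up the new trust store:

```shell
#!/usr/bin/env bash
# Sketch (CentOS/RHEL): trust a CA system-wide via the anchors directory instead of
# editing the generated bundle. The anchor file name is a hypothetical choice.
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# trust_ca_system_wide CERT NAME
trust_ca_system_wide() {
  local cert="$1" name="$2"
  run cp "$cert" "/etc/pki/ca-trust/source/anchors/${name}.crt"
  # Regenerates /etc/pki/ca-trust/extracted/, which ca-bundle.crt links to.
  run update-ca-trust extract
  run systemctl restart docker
}

# Example:
#   trust_ca_system_wide /etc/docker/certs.d/core.harbor.domain/ca.crt harbor-ca
```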

Push an image: tag any local image as core.harbor.domain/develop/<name>, then push it:

    [root@k8s-master01 ~]# docker push core.harbor.domain/develop/busybox
    The push refers to a repository [core.harbor.domain/develop/busybox]
    8ac8bfaff55a: Pushed
    latest: digest: sha256:540f2e917216c5cfdf047b246d6b5883932f13d7b77227f09e03d42021e98941 size: 527
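The tag step that precedes the push is not shown above. This sketch covers both. It also adds the piece needed to pull from Kubernetes if the develop project is private: an image pull secret that Pods reference via `imagePullSecrets`. The secret name `harbor-pull-secret` is hypothetical, and depending on your kubectl version `--docker-email` may also be required. Every node that runs such Pods also needs the CA certificate installed as described above.

```shell
#!/usr/bin/env bash
# Sketch: push an image into the "develop" project and create a pull secret for Pods.
# Hypothetical: the secret name "harbor-pull-secret"; supply real credentials yourself.
set -euo pipefail

REGISTRY="core.harbor.domain"
PROJECT="develop"

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# push_to_harbor LOCAL_IMAGE NAME[:TAG]
push_to_harbor() {
  local dst="${REGISTRY}/${PROJECT}/$2"
  run docker tag "$1" "$dst"
  run docker push "$dst"
}

# create_pull_secret NAMESPACE USER PASSWORD: registry credentials for private projects.
create_pull_secret() {
  run kubectl -n "$1" create secret docker-registry harbor-pull-secret \
    --docker-server="$REGISTRY" --docker-username="$2" --docker-password="$3"
}

# Example:
#   push_to_harbor busybox busybox
#   create_pull_secret default admin 'your-password'
```

A Pod would then use core.harbor.domain/develop/busybox as its image and list harbor-pull-secret under spec.imagePullSecrets.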

6. Summary

Problems encountered during deployment:

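1) For some reason https://kubernetes-charts.storage.googleapis.com was unreachable, and rather than set up a proxy to get past the firewall, I switched to Aliyun's Helm repository. (With access to the official repository, none of the problems below would arise.)

2) After switching to the Aliyun repository, the redis version in the original requirements.yaml could not be found, so I changed it to a redis version that the Aliyun repository does have.

3) Harbor's data is persisted on GlusterFS (GFS) dynamic storage, which required changing storageClass both in values.yaml and in the redis chart's values.yaml.

4) The redis chart from the Aliyun repository enables password authentication, but the harbor chart is not configured with a password, so I simply disabled the redis password.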

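5) After Harbor was deployed with Helm, the jobservice and harbor-ui pods kept restarting. Their logs showed they could not resolve the Redis service: the harbor chart expects the Redis Service to be named harbor-v1-redis-master, whereas the Redis chart fetched by helm dependency update names it harbor-v1-redis. For convenience, I edited the redis chart's svc.yaml directly.

6) After those changes the pods still kept restarting, with the error: Failed to load and run worker pool: connect to redis server timeout: dial tcp 10.96.137.238:0: i/o timeout. The Redis address was missing its port (note the :0). The cause turned out to be the port parameter in the redis section of the harbor chart's values.yaml; after adding it and redeploying, the installation succeeded.

7) Because Harbor installed via Helm enables HTTPS by default, I configured the certificate directly, which also improves security.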

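8) For installing Harbor on Kubernetes, the Harbor maintainers recommend Helm; see https://github.com/goharbor/harbor/blob/master/docs/kubernetes_deployment.md, and the chart documentation at https://github.com/goharbor/harbor-helm

9) Personally, I think Harbor is better deployed on its own, outside the Kubernetes cluster, with docker-compose. That is the most common approach and the one I currently use (this write-up records my first deployment of Harbor on Kubernetes and doubles as an introduction to Helm). It is easier to maintain and scale, and setting up LDAP and similar features is simple.

10) Helm is a very powerful package manager for Kubernetes.

11) Integrating Harbor with OpenLDAP: see the linked article.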
