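Note: during deployment, make sure every command is run on the appropriate node and completes successfully. This document has helped several dozen people (counting only those who contacted me) deploy a highly available k8s cluster quickly, and its known shortcomings have been corrected. If you run into a problem, first check whether you skipped a step; every step in this document is required.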

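Testing shows that this document also works for a highly available deployment of k8s v1.12; just change the version numbers accordingly.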

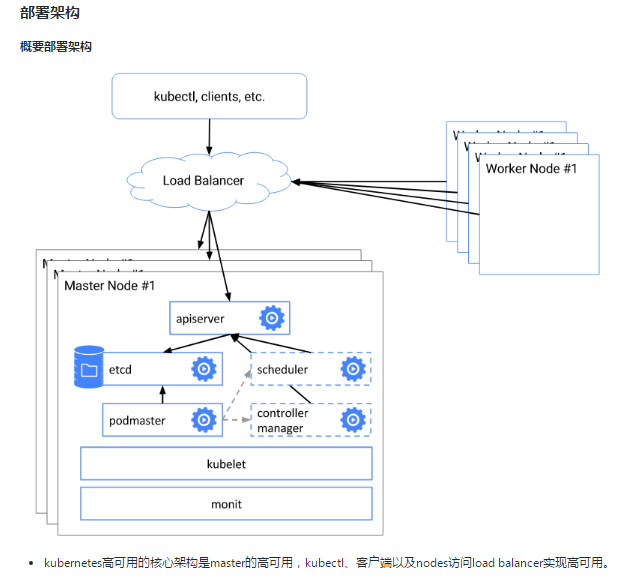

1. Deployment architecture

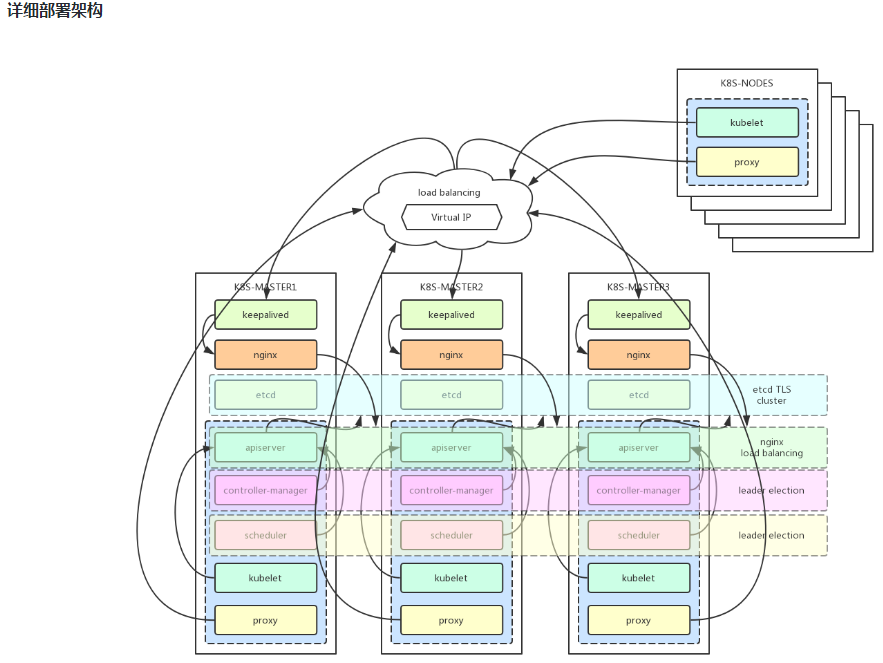

Detailed architecture:

2. Basic configuration

| Hostname | IP address | Description | Components |
|---|---|---|---|
| k8s-master01 ~ 03 | 192.168.20.20 ~ 22 | 3 master nodes | keepalived, nginx, etcd, kubelet, kube-apiserver |
| k8s-master-lb | 192.168.20.10 | keepalived virtual IP | none |
| k8s-node01 ~ 08 | 192.168.20.30 ~ 37 | 8 worker nodes | kubelet |

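Component overview:

kube-apiserver: the core of the cluster. It exposes the cluster API, acts as the hub through which all components communicate, and enforces cluster security.

etcd: the cluster's data store, holding its configuration and state. It is critical: if its data is lost, the cluster cannot be recovered, so a highly available cluster starts with a highly available etcd.

kube-scheduler: schedules Pods. In a default kubeadm installation --leader-elect is already set to true, which ensures that only one kube-scheduler is active across the masters.

kube-controller-manager (kcm): manages cluster state. When the actual state differs from the desired state, kcm works to restore it; for example, when a pod dies, kcm creates a new pod to restore the state its ReplicaSet expects. In a default kubeadm installation --leader-elect is already set to true, which ensures that only one kube-controller-manager is active across the masters.

kubelet: the Kubernetes node agent; it talks to the Docker engine on the node.

kube-proxy: one per node. It forwards traffic from a Service VIP to the endpoint pods, currently mainly by setting iptables rules.

The keepalived cluster provides a virtual IP address that points to k8s-master01, k8s-master02, and k8s-master03.

nginx load-balances the apiservers on k8s-master01, k8s-master02, and k8s-master03.

External kubectl clients and the nodes can then reach the masters' apiservers through the keepalived virtual IP (192.168.20.10) and the nginx port (16443).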

System information:

    [root@k8s-master01 ~]# cat /etc/redhat-release
    CentOS Linux release 7.5.1804 (Core)
    [root@k8s-master01 ~]# uname
    Linux
    [root@k8s-master01 ~]# uname -r
    3.10.0-862.14.4.el7.x86_64
    [root@k8s-master01 ~]# docker version
    Client:
     Version: 17.03.2-ce
     API version: 1.27
     Go version: go1.7.5
     Git commit: f5ec1e2
     Built: Tue Jun 27 02:21:36 2017
     OS/Arch: linux/amd64
    Server:
     Version: 17.03.2-ce
     API version: 1.27 (minimum version 1.12)
     Go version: go1.7.5
     Git commit: f5ec1e2
     Built: Tue Jun 27 02:21:36 2017
     OS/Arch: linux/amd64
     Experimental: false

The following steps are performed on all k8s nodes.

Synchronize the time zone and clock

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time.windows.com

Configure limits

ulimit -SHn 65535
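`ulimit -SHn 65535` only affects the current shell session. The following is a minimal sketch, assuming you also want the limit to apply to later login sessions through `/etc/security/limits.conf`; that file and the `render_nofile_limits` helper are assumptions for illustration and are not part of the original procedure.

```shell
# Print limits.conf entries that set the open-file limit for all users.
# Hypothetical helper; the original document only runs ulimit.
render_nofile_limits() {
    limit="$1"
    printf '* soft nofile %s\n' "$limit"
    printf '* hard nofile %s\n' "$limit"
}

# Usage (as root): render_nofile_limits 65535 >> /etc/security/limits.conf
render_nofile_limits 65535
```

The function only prints the entries, so you can review them before appending them to the file.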

Configure the hosts file

    [root@k8s-master01 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    # k8s
    192.168.20.20 k8s-master01
    192.168.20.21 k8s-master02
    192.168.20.22 k8s-master03
    192.168.20.10 k8s-master-lb
    192.168.20.30 k8s-node01
    192.168.20.31 k8s-node02
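The hosts file shown lists only two worker nodes, while the host table plans eight. As a rough sketch, the entries for a run of numbered hosts with consecutive IPs could be generated as below; `render_hosts` is a hypothetical helper, and the name and IP layout are taken from the host table.

```shell
# Print /etc/hosts entries for numbered hosts with consecutive IPs.
# Arguments: name prefix, network prefix, first host octet, host count.
render_hosts() {
    prefix="$1"; net="$2"; first="$3"; count="$4"
    i=1
    while [ "$i" -le "$count" ]; do
        printf '%s.%d %s%02d\n' "$net" $((first + i - 1)) "$prefix" "$i"
        i=$((i + 1))
    done
}

# Usage (as root): render_hosts k8s-node 192.168.20 30 8 >> /etc/hosts
render_hosts k8s-master 192.168.20 20 3
render_hosts k8s-node 192.168.20 30 8
```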

Configure the package mirrors

cd /etc/yum.repos.d

mkdir bak

mv *.repo bak/

    # vim CentOS-Base.repo
    # CentOS-Base.repo
    #
    # The mirror system uses the connecting IP address of the client and the
    # update status of each mirror to pick mirrors that are updated to and
    # geographically close to the client. You should use this for CentOS updates
    # unless you are manually picking other mirrors.
    #
    # If the mirrorlist= does not work for you, as a fall back you can try the
    # remarked out baseurl= line instead.
    #
    #
    [base]
    name=CentOS-$releasever - Base - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #released updates
    [updates]
    name=CentOS-$releasever - Updates - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #additional packages that may be useful
    [extras]
    name=CentOS-$releasever - Extras - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #additional packages that extend functionality of existing packages
    [centosplus]
    name=CentOS-$releasever - Plus - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #contrib - packages by Centos Users
    [contrib]
    name=CentOS-$releasever - Contrib - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # vim epel-7.repo
    [epel]
    name=Extra Packages for Enterprise Linux 7 - $basearch
    baseurl=http://mirrors.aliyun.com/epel/7/$basearch
    failovermethod=priority
    enabled=1
    gpgcheck=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    [epel-debuginfo]
    name=Extra Packages for Enterprise Linux 7 - $basearch - Debug
    baseurl=http://mirrors.aliyun.com/epel/7/$basearch/debug
    failovermethod=priority
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    gpgcheck=0
    [epel-source]
    name=Extra Packages for Enterprise Linux 7 - $basearch - Source
    baseurl=http://mirrors.aliyun.com/epel/7/SRPMS
    failovermethod=priority
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    gpgcheck=0

    # vim docker-ce.repo
    [docker-ce-stable]
    name=Docker CE Stable - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-stable-debuginfo]
    name=Docker CE Stable - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-stable-source]
    name=Docker CE Stable - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-edge]
    name=Docker CE Edge - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/edge
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-edge-debuginfo]
    name=Docker CE Edge - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/edge
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-edge-source]
    name=Docker CE Edge - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/edge
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-test]
    name=Docker CE Test - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-test-debuginfo]
    name=Docker CE Test - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-test-source]
    name=Docker CE Test - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-nightly]
    name=Docker CE Nightly - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-nightly-debuginfo]
    name=Docker CE Nightly - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    [docker-ce-nightly-source]
    name=Docker CE Nightly - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    # vim kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
           https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Load the ipvs modules on all nodes

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4
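`modprobe` loads a module into the running kernel only, and this procedure reboots the nodes later. Below is a minimal sketch, assuming the modules should be reloaded at boot through a file under `/etc/modules-load.d/` (read by systemd on CentOS 7). The file name `ipvs.conf` and both helper functions are assumptions for illustration.

```shell
# Kernel modules loaded with modprobe in the step above.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Print one module name per line, the format used by /etc/modules-load.d/*.conf.
render_modules_load() {
    for mod in $IPVS_MODULES; do
        printf '%s\n' "$mod"
    done
}

# Read `lsmod` output on stdin and print every expected module that is missing.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in $IPVS_MODULES; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || printf '%s\n' "$mod"
    done
}

# Usage (as root):
#   render_modules_load > /etc/modules-load.d/ipvs.conf
#   lsmod | missing_modules
render_modules_load
```

After the reboot, an empty result from `lsmod | missing_modules` would indicate that all five modules are loaded.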

Update the system

yum update -y

Install docker-ce and Kubernetes

yum install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm -y

yum install -y kubelet-1.11.1-0.x86_64 kubeadm-1.11.1-0.x86_64 kubectl-1.11.1-0.x86_64 docker-ce-17.03.2.ce-1.el7.centos

Set SELinux to permissive and disable firewalld on all k8s nodes

    $ vi /etc/selinux/config
    SELINUX=permissive
    $ setenforce 0

$ systemctl disable firewalld

$ systemctl stop firewalld

Set the iptables-related kernel parameters on all k8s nodes

    $ cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
    $ sysctl --system
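Because every step in this procedure is required, it can help to verify the file before moving on. The sketch below checks that a sysctl configuration file sets the three keys from the step above to 1; `check_k8s_sysctl` is a hypothetical helper, not something the original procedure uses.

```shell
# Check that a sysctl config file sets each required key to 1.
# Prints any key that is missing or set differently; returns non-zero if any is.
check_k8s_sysctl() {
    file="$1"
    status=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        value=$(awk -F= -v k="$key" '
            { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
            $1 == k { v = $2 }
            END { print v }' "$file")
        if [ "$value" != "1" ]; then
            printf 'not set to 1: %s\n' "$key"
            status=1
        fi
    done
    return "$status"
}

# Usage: check_k8s_sysctl /etc/sysctl.d/k8s.conf
```

This inspects the file only; the values in the running kernel are the ones applied by `sysctl --system`.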

Disable swap on all k8s nodes

    $ swapoff -a
    # Disable the swap entry in fstab
    $ vi /etc/fstab
    #/dev/mapper/centos-swap swap swap defaults 0 0
    # Confirm that swap is disabled
    $ cat /proc/swaps
    Filename Type Size Used Priority
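Editing `/etc/fstab` by hand on every node is easy to get wrong. As a hedged alternative, the sketch below prints a copy of an fstab file with each active swap entry commented out; `comment_out_swap` is a hypothetical helper, and it assumes the standard fstab layout in which the third field is the filesystem type.

```shell
# Print a copy of an fstab file with every active swap entry commented out.
# The input file is not modified; review the output before replacing /etc/fstab.
comment_out_swap() {
    awk '
        /^[ \t]*#/ { print; next }          # already a comment: keep as is
        $3 == "swap" { print "#" $0; next } # third field is the filesystem type
        { print }
    ' "$1"
}

# Usage (as root):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab.bak > /etc/fstab
```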

Reboot all k8s nodes

$ reboot

If you need to keep firewalld enabled, configure it as follows:

Enable the firewall on all nodes

$ systemctl enable firewalld

$ systemctl restart firewalld

$ systemctl status firewalld

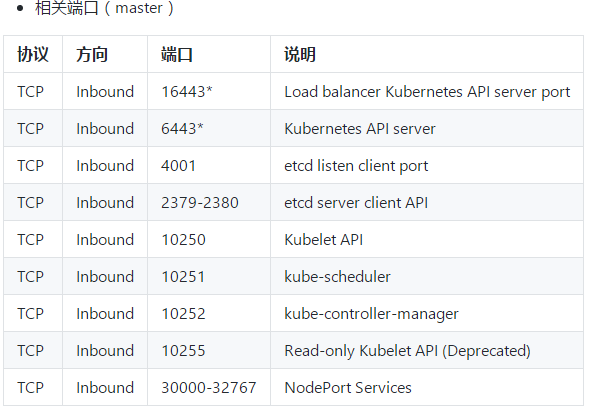

Set the firewall rules on the master nodes

    $ firewall-cmd --zone=public --add-port=16443/tcp --permanent
    $ firewall-cmd --zone=public --add-port=6443/tcp --permanent
    $ firewall-cmd --zone=public --add-port=4001/tcp --permanent
    $ firewall-cmd --zone=public --add-port=2379-2380/tcp --permanent
    $ firewall-cmd --zone=public --add-port=10250/tcp --permanent
    $ firewall-cmd --zone=public --add-port=10251/tcp --permanent
    $ firewall-cmd --zone=public --add-port=10252/tcp --permanent
    $ firewall-cmd --zone=public --add-port=30000-32767/tcp --permanent
    $ firewall-cmd --reload
    $ firewall-cmd --list-all --zone=public
    public (active)
      target: default
      icmp-block-inversion: no
      interfaces: ens2f1 ens1f0 nm-bond
      sources:
      services: ssh dhcpv6-client
      ports: 4001/tcp 6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 30000-32767/tcp
      protocols:
      masquerade: no
      forward-ports:
      source-ports:
      icmp-blocks:
      rich rules:

Set the firewall rules on the worker nodes

    $ firewall-cmd --zone=public --add-port=10250/tcp --permanent
    $ firewall-cmd --zone=public --add-port=30000-32767/tcp --permanent
    $ firewall-cmd --reload
    $ firewall-cmd --list-all --zone=public
    public (active)
      target: default
      icmp-block-inversion: no
      interfaces: ens2f1 ens1f0 nm-bond
      sources:
      services: ssh dhcpv6-client
      ports: 10250/tcp 30000-32767/tcp
      protocols:
      masquerade: no
      forward-ports:
      source-ports:
      icmp-blocks:
      rich rules:
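The per-port commands for the two roles can be generated from one port list each, which makes it harder to miss a port when repeating the step on many nodes. This is only a sketch: `render_firewall_rules` is a hypothetical helper, and the port lists are copied from the master and worker commands in this section.

```shell
# TCP ports opened in this section, by node role.
MASTER_PORTS="16443 6443 4001 2379-2380 10250 10251 10252 30000-32767"
NODE_PORTS="10250 30000-32767"

# Print the firewall-cmd invocations for a role ("master" or "node").
# Nothing is executed; pipe the output to sh as root to apply it.
render_firewall_rules() {
    case "$1" in
        master) ports="$MASTER_PORTS" ;;
        node)   ports="$NODE_PORTS" ;;
        *)      echo "usage: render_firewall_rules master|node" >&2; return 1 ;;
    esac
    for port in $ports; do
        printf 'firewall-cmd --zone=public --add-port=%s/tcp --permanent\n' "$port"
    done
    printf 'firewall-cmd --reload\n'
}

# Usage (as root): render_firewall_rules master | sh
render_firewall_rules node
```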

Allow kube-proxy forwarding on all k8s nodes

    $ firewall-cmd --permanent --direct --add-rule ipv4 filter INPUT 1 -i docker0 -j ACCEPT -m comment --comment "kube-proxy redirects"
    $ firewall-cmd --permanent --direct --add-rule ipv4 filter FORWARD 1 -o docker0 -j ACCEPT -m comment --comment "docker subnet"
    $ firewall-cmd --reload
    $ firewall-cmd --direct --get-all-rules
    ipv4 filter INPUT 1 -i docker0 -j ACCEPT -m comment --comment 'kube-proxy redirects'
    ipv4 filter FORWARD 1 -o docker0 -j ACCEPT -m comment --comment 'docker subnet'
    # Restart the firewall
    $ systemctl restart firewalld

To work around kube-proxy being unable to serve NodePort, the following command must be run every time firewalld restarts; set it up as a scheduled task on all nodes.

    $ crontab -e
    */5 * * * * /usr/sbin/iptables -D INPUT -j REJECT --reject-with icmp-host-prohibited

4. Starting services

Start docker-ce and kubelet on all Kubernetes nodes

Start docker-ce

systemctl enable docker && systemctl start docker

Check the status and version

    [root@k8s-master02 ~]# docker version
    Client:
     Version: 17.03.2-ce
     API version: 1.27
     Go version: go1.7.5
     Git commit: f5ec1e2
     Built: Tue Jun 27 02:21:36 2017
     OS/Arch: linux/amd64
    Server:
     Version: 17.03.2-ce
     API version: 1.27 (minimum version 1.12)
     Go version: go1.7.5
     Git commit: f5ec1e2
     Built: Tue Jun 27 02:21:36 2017
     OS/Arch: linux/amd64
     Experimental: false

Install and start k8s on all Kubernetes nodes

Edit the kubelet configuration

    # Configure kubelet to use a pause image from a mirror inside China
    # Configure the kubelet cgroup driver
    # Get Docker's cgroup driver
    DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)
    echo $DOCKER_CGROUPS
    cat >/etc/sysconfig/kubelet<<EOF
    KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
    EOF
    # Start
    systemctl daemon-reload

systemctl enable kubelet && systemctl start kubelet

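Note: if kubelet fails to start at this point, ignore it for now; it has no cluster configuration until the kubeadm steps later in this document generate one.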
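The `grep 'Cgroup' | cut -d' ' -f3` pipeline in the kubelet step depends on the exact layout of `docker info`, which works for the Docker 17.03 output used here. As a more tolerant sketch, the driver can be extracted by its label instead; `parse_cgroup_driver` is a hypothetical helper, and the remark about indented output in other Docker releases is an assumption rather than something this document states.

```shell
# Read `docker info` output on stdin and print the cgroup driver name.
# Matching on the "Cgroup Driver" label tolerates leading indentation and
# ignores other lines that merely contain the word "Cgroup".
parse_cgroup_driver() {
    awk -F': *' '/^[ \t]*Cgroup Driver:/ { print $2; exit }'
}

# Usage: DOCKER_CGROUPS=$(docker info 2>/dev/null | parse_cgroup_driver)
printf 'Storage Driver: overlay\nCgroup Driver: cgroupfs\n' | parse_cgroup_driver
```

If the variable ends up empty, the generated `/etc/sysconfig/kubelet` would contain `--cgroup-driver=` with no value, so it is worth checking the `echo $DOCKER_CGROUPS` output before writing the file.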

Install and start keepalived and docker-compose on all master nodes

$ yum install -y keepalived

$ systemctl enable keepalived && systemctl restart keepalived

#Install docker-compose

$ yum install -y docker-compose

Set up SSH trust between all master nodes

    [root@k8s-master01 ~]# ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Created directory '/root/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:XZ1oFmFToS19l0jURW2xUyLSkysK/SjaZVgAG1/EDCs root@k8s-master01
    The key's randomart image is:
    +---[RSA 2048]----+
    | o..=o ..B*=+B|
    | +.oo o=X.+*|
    | E oo B+==o|
    | .. o..+.. .o|
    | +S+.. |
    | o = . |
    | o + |
    | . . |
    | |
    +----[SHA256]-----+
    [root@k8s-master01 ~]# for i in k8s-master01 k8s-master02 k8s-master03;do ssh-copy-id -i .ssh/id_rsa.pub $i;done
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    The authenticity of host 'k8s-master01 (192.168.20.20)' can't be established.
    ECDSA key fingerprint is SHA256:bUoUE9+VGU3wBWGsjP/qvIGxXG9KQMmKK7fVeihVp2s.
    ECDSA key fingerprint is MD5:39:2c:69:f9:24:49:b2:b8:27:8a:14:93:16:bb:3b:14.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@k8s-master01's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh 'k8s-master01'"
    and check to make sure that only the key(s) you wanted were added.
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    The authenticity of host 'k8s-master02 (192.168.20.21)' can't be established.
    ECDSA key fingerprint is SHA256:+4rgkToh5TyEM2NtWeyqpyKZ+l8fLhW5jmWhrfSDUDQ.
    ECDSA key fingerprint is MD5:e9:35:b8:22:b1:c1:35:0b:93:9b:a6:c2:28:e0:28:e1.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@k8s-master02's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh 'k8s-master02'"
    and check to make sure that only the key(s) you wanted were added.
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    The authenticity of host 'k8s-master03 (192.168.20.22)' can't be established.
    ECDSA key fingerprint is SHA256:R8FRR8fvBTmFZSlKThEZ6+1br+aAkFgFPuNS0qq96aQ.
    ECDSA key fingerprint is MD5:08:45:48:0c:7e:7c:00:2c:82:42:19:75:89:44:c6:1f.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@k8s-master03's password:
    Number of key(s) added: 1
    Now try logging into the machine, with: "ssh 'k8s-master03'"
    and check to make sure that only the key(s) you wanted were added.

Basic configuration for the highly available installation; K8SHA_CALICO_REACHABLE_IP is the server's gateway address

    # The following steps are performed on master01
    # Download the source
    [root@k8s-master01 ~]# yum install git -y
    [root@k8s-master01 ~]# git clone https://github.com/dotbalo/k8s-ha-install.git
    Cloning into 'k8s-ha-install'...
    remote: Enumerating objects: 10, done.
    remote: Counting objects: 100% (10/10), done.
    remote: Compressing objects: 100% (8/8), done.
    remote: Total 731 (delta 3), reused 6 (delta 2), pack-reused 721
    Receiving objects: 100% (731/731), 2.32 MiB | 655.00 KiB/s, done.
    Resolving deltas: 100% (417/417), done.
    # Edit the settings to match your environment; change nm-bond to the name of the server's network interface
    [root@k8s-master01 k8s-ha-install]# pwd
    /root/k8s-ha-install
    [root@k8s-master01 k8s-ha-install]# cat create-config.sh
    #!/bin/bash
    #######################################
    # set variables below to create the config files, all files will create at ./config directory
    #######################################
    # master keepalived virtual ip address
    export K8SHA_VIP=192.168.20.10
    # master01 ip address
    export K8SHA_IP1=192.168.20.20
    # master02 ip address
    export K8SHA_IP2=192.168.20.21
    # master03 ip address
    export K8SHA_IP3=192.168.20.22
    # master keepalived virtual ip hostname
    export K8SHA_VHOST=k8s-master-lb
    # master01 hostname
    export K8SHA_HOST1=k8s-master01
    # master02 hostname
    export K8SHA_HOST2=k8s-master02
    # master03 hostname
    export K8SHA_HOST3=k8s-master03
    # master01 network interface name
    export K8SHA_NETINF1=nm-bond
    # master02 network interface name
    export K8SHA_NETINF2=nm-bond
    # master03 network interface name
    export K8SHA_NETINF3=nm-bond
    # keepalived auth_pass config
    export K8SHA_KEEPALIVED_AUTH=412f7dc3bfed32194d1600c483e10ad1d
    # calico reachable ip address
    export K8SHA_CALICO_REACHABLE_IP=192.168.20.1
    # kubernetes CIDR pod subnet, if CIDR pod subnet is "172.168.0.0/16" please set to "172.168.0.0"
    export K8SHA_CIDR=172.168.0.0
    # Create the kubeadm, keepalived, and nginx load-balancer config files for the three masters, plus the calico config file
    [root@k8s-master01 k8s-ha-install]# ./create-config.sh
    create kubeadm-config.yaml files success. config/k8s-master01/kubeadm-config.yaml
    create kubeadm-config.yaml files success. config/k8s-master02/kubeadm-config.yaml
    create kubeadm-config.yaml files success. config/k8s-master03/kubeadm-config.yaml
    create keepalived files success. config/k8s-master01/keepalived/
    create keepalived files success. config/k8s-master02/keepalived/
    create keepalived files success. config/k8s-master03/keepalived/
    create nginx-lb files success. config/k8s-master01/nginx-lb/
    create nginx-lb files success. config/k8s-master02/nginx-lb/
    create nginx-lb files success. config/k8s-master03/nginx-lb/
    create calico.yaml file success. calico/calico.yaml
    # Set the hostname variables
    export HOST1=k8s-master01
    export HOST2=k8s-master02
    export HOST3=k8s-master03
    # Copy the kubeadm config file to /root/ on each master
    scp -r config/$HOST1/kubeadm-config.yaml $HOST1:/root/
    scp -r config/$HOST2/kubeadm-config.yaml $HOST2:/root/
    scp -r config/$HOST3/kubeadm-config.yaml $HOST3:/root/
    # Copy the keepalived config files to /etc/keepalived/ on each master
    scp -r config/$HOST1/keepalived/* $HOST1:/etc/keepalived/
    scp -r config/$HOST2/keepalived/* $HOST2:/etc/keepalived/
    scp -r config/$HOST3/keepalived/* $HOST3:/etc/keepalived/
    # Copy the nginx load-balancer config files to /root/ on each master
    scp -r config/$HOST1/nginx-lb $HOST1:/root/
    scp -r config/$HOST2/nginx-lb $HOST2:/root/
    scp -r config/$HOST3/nginx-lb $HOST3:/root/

The following steps are performed on all masters.

Configure keepalived and nginx-lb

Before starting nginx-lb, edit the nginx configuration file under nginx-lb and set proxy_connect_timeout 60s; and proxy_timeout 10m;

cd

docker-compose --file=/root/nginx-lb/docker-compose.yaml up -d

docker-compose --file=/root/nginx-lb/docker-compose.yaml ps
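For orientation, here is a rough sketch of what a TCP load-balancer section for the three apiservers might look like, using the two timeout values named above. This is not the file generated by k8s-ha-install: the `render_nginx_lb` helper, the upstream name `apiserver`, and the `max_fails`/`fail_timeout` values are assumptions, and a complete nginx.conf would need more than this `stream` block.

```shell
# Print an nginx stream (TCP) load-balancer section for the apiservers.
# Arguments: listen port, then one or more backend IPs (apiserver port 6443 assumed).
render_nginx_lb() {
    listen_port="$1"; shift
    printf 'stream {\n'
    printf '    upstream apiserver {\n'
    for ip in "$@"; do
        printf '        server %s:6443 max_fails=3 fail_timeout=30s;\n' "$ip"
    done
    printf '    }\n'
    printf '    server {\n'
    printf '        listen %s;\n' "$listen_port"
    printf '        proxy_connect_timeout 60s;\n'
    printf '        proxy_timeout 10m;\n'
    printf '        proxy_pass apiserver;\n'
    printf '    }\n'
    printf '}\n'
}

render_nginx_lb 16443 192.168.20.20 192.168.20.21 192.168.20.22
```

Comparing this shape with the generated file under /root/nginx-lb should make it easier to find where the two timeout directives belong.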

Edit the keepalived configuration file as follows:

    # Comment out the health check
    [root@k8s-master01 ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    #vrrp_script chk_apiserver {
    #    script "/etc/keepalived/check_apiserver.sh"
    #    interval 2
    #    weight -5
    #    fall 3
    #    rise 2
    #}
    vrrp_instance VI_1 {
        state MASTER
        interface ens160
        mcast_src_ip 192.168.20.20
        virtual_router_id 51
        priority 102
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass 412f7dc3bfed32194d1600c483e10ad1d
        }
        virtual_ipaddress {
            192.168.20.10
        }
        track_script {
            chk_apiserver
        }
    }

Restart keepalived and confirm that the virtual IP responds to ping

    [root@k8s-master01 ~]# ping 192.168.20.10 -c 4
    PING 192.168.20.10 (192.168.20.10) 56(84) bytes of data.
    64 bytes from 192.168.20.10: icmp_seq=1 ttl=64 time=0.045 ms
    64 bytes from 192.168.20.10: icmp_seq=2 ttl=64 time=0.036 ms
    64 bytes from 192.168.20.10: icmp_seq=3 ttl=64 time=0.032 ms
    64 bytes from 192.168.20.10: icmp_seq=4 ttl=64 time=0.032 ms
    --- 192.168.20.10 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 2999ms
    rtt min/avg/max/mdev = 0.032/0.036/0.045/0.006 ms

Edit kubeadm-config.yaml. Note: compared with the generated file, this adds imageRepository, controllerManagerExtraArgs, and api; advertiseAddress is each master's own IP.

    [root@k8s-master01 ~]# cat kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1alpha2
    kind: MasterConfiguration
    kubernetesVersion: v1.11.1
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    apiServerCertSANs:
    - k8s-master01
    - k8s-master02
    - k8s-master03
    - k8s-master-lb
    - 192.168.20.20
    - 192.168.20.21
    - 192.168.20.22
    - 192.168.20.10
    api:
      advertiseAddress: 192.168.20.20
      controlPlaneEndpoint: 192.168.20.10:16443
    etcd:
      local:
        extraArgs:
          listen-client-urls: "https://127.0.0.1:2379,https://192.168.20.20:2379"
          advertise-client-urls: "https://192.168.20.20:2379"
          listen-peer-urls: "https://192.168.20.20:2380"
          initial-advertise-peer-urls: "https://192.168.20.20:2380"
          initial-cluster: "k8s-master01=https://192.168.20.20:2380"
        serverCertSANs:
          - k8s-master01
          - 192.168.20.20
        peerCertSANs:
          - k8s-master01
          - 192.168.20.20
    controllerManagerExtraArgs:
      node-monitor-grace-period: 10s
      pod-eviction-timeout: 10s
    networking:
      # This CIDR is a Calico default. Substitute or remove for your CNI provider.
      podSubnet: "172.168.0.0/16"

The following steps are performed on master01.

Pull the images in advance

kubeadm config images pull --config /root/kubeadm-config.yaml

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.11.1 k8s.gcr.io/kube-apiserver-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.11.1 k8s.gcr.io/kube-proxy-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.18 k8s.gcr.io/etcd-amd64:3.2.18

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.11.1 k8s.gcr.io/kube-scheduler-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.11.1 k8s.gcr.io/kube-controller-manager-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.1.3 k8s.gcr.io/coredns:1.1.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
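The eight `docker tag` commands above differ only in the image name, so they can be produced from a single list. A minimal sketch follows; `render_retag` is a hypothetical helper, the image list is copied from the commands above, and the function only prints the commands rather than running them.

```shell
SRC=registry.cn-hangzhou.aliyuncs.com/google_containers
DST=k8s.gcr.io
IMAGES="kube-apiserver-amd64:v1.11.1 kube-proxy-amd64:v1.11.1 etcd-amd64:3.2.18
kube-scheduler-amd64:v1.11.1 kube-controller-manager-amd64:v1.11.1
coredns:1.1.3 pause-amd64:3.1 pause:3.1"

# Print one `docker tag` command per image; nothing is executed.
render_retag() {
    for image in $IMAGES; do
        printf 'docker tag %s/%s %s/%s\n' "$SRC" "$image" "$DST" "$image"
    done
}

# Usage: render_retag | sh
render_retag
```

When adapting the procedure to another Kubernetes version, only the tags in `IMAGES` would need to change.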

# Initialize the cluster

kubeadm init --config /root/kubeadm-config.yaml

Record the token printed when initialization finishes (the last line of the output)

    [root@k8s-master01 ~]# kubeadm init --config /root/kubeadm-config.yaml
    [endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address
    [init] using Kubernetes version: v1.11.1
    [preflight] running pre-flight checks
        [WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
    I1031 13:44:25.751524 8345 kernel_validator.go:81] Validating kernel version
    I1031 13:44:25.751634 8345 kernel_validator.go:96] Validating kernel config
        [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
    [preflight/images] Pulling images required for setting up a Kubernetes cluster
    [preflight/images] This might take a minute or two, depending on the speed of your internet connection
    [preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
    [kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [preflight] Activating the kubelet service
    [certificates] Generated ca certificate and key.
    [certificates] Generated apiserver certificate and key.
    [certificates] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8s-master01 k8s-master02 k8s-master03 k8s-master-lb] and IPs [10.96.0.1 192.168.20.20 192.168.20.10 192.168.20.20 192.168.20.21 192.168.20.22 192.168.20.10]
    [certificates] Generated apiserver-kubelet-client certificate and key.
    [certificates] Generated sa key and public key.
    [certificates] Generated front-proxy-ca certificate and key.
    [certificates] Generated front-proxy-client certificate and key.
    [certificates] Generated etcd/ca certificate and key.
    [certificates] Generated etcd/server certificate and key.
    [certificates] etcd/server serving cert is signed for DNS names [k8s-master01 localhost k8s-master01] and IPs [127.0.0.1 ::1 192.168.20.20]
    [certificates] Generated etcd/peer certificate and key.
    [certificates] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost k8s-master01] and IPs [192.168.20.20 127.0.0.1 ::1 192.168.20.20]
    [certificates] Generated etcd/healthcheck-client certificate and key.
    [certificates] Generated apiserver-etcd-client certificate and key.
    [certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"
    [endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
    [controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
    [controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
    [controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
    [etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
    [init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
    [init] this might take a minute or longer if the control plane images have to be pulled
    [apiclient] All control plane components are healthy after 40.507213 seconds
    [uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.11" in namespace kube-system with the configuration for the kubelets in the cluster
    [markmaster] Marking the node k8s-master01 as master by adding the label "node-role.kubernetes.io/master=''"
    [markmaster] Marking the node k8s-master01 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master01" as an annotation
    [bootstraptoken] using token: vl9ca2.g2cytziz8ctjsjzd
    [bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [addons] Applied essential addon: CoreDNS
    [endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of machines by running the following on each node
    as root:

      kubeadm join 192.168.20.10:16443 --token vl9ca2.g2cytziz8ctjsjzd --discovery-token-ca-cert-hash sha256:3459199acc7f719d3197bd14dfe2781f25b99a8686b215989e5d748ae92fcb28
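Since the join command is needed later on every worker node, it is convenient to capture it from the saved output instead of copying it by hand. The sketch below assumes the `kubeadm init` output was saved to a file, for example with `tee`; `extract_join_command` and the log file name are hypothetical.

```shell
# Read saved `kubeadm init` output on stdin and print the join command.
extract_join_command() {
    grep -o 'kubeadm join .*--discovery-token-ca-cert-hash sha256:[0-9a-f]*' | tail -n 1
}

# Usage:
#   kubeadm init --config /root/kubeadm-config.yaml | tee kubeadm-init.log
#   extract_join_command < kubeadm-init.log
```

If the output was not saved, `kubeadm token create --print-join-command` on a master should print a fresh join command.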

5. Configure environment variables

Set the variable on all master nodes

    [root@k8s-master01 ~]# cat <<EOF >> ~/.bashrc
    export KUBECONFIG=/etc/kubernetes/admin.conf
    EOF
    [root@k8s-master01 ~]# source ~/.bashrc

Check the node status

    [root@k8s-master01 ~]# kubectl get nodes
    NAME           STATUS     ROLES     AGE       VERSION
    k8s-master01   NotReady   master    14m       v1.11.1

Check the pod status

    [root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide
    NAME                                   READY     STATUS    RESTARTS   AGE       IP              NODE
    coredns-777d78ff6f-kstsz               0/1       Pending   0          14m       <none>          <none>
    coredns-777d78ff6f-rlfr5               0/1       Pending   0          14m       <none>          <none>
    etcd-k8s-master01                      1/1       Running   0          14m       192.168.20.20   k8s-master01
    kube-apiserver-k8s-master01            1/1       Running   0          13m       192.168.20.20   k8s-master01
    kube-controller-manager-k8s-master01   1/1       Running   0          13m       192.168.20.20   k8s-master01
    kube-proxy-8d4qc                       1/1       Running   0          14m       192.168.20.20   k8s-master01
    kube-scheduler-k8s-master01            1/1       Running   0          13m       192.168.20.20   k8s-master01
    # The network plugin is not installed yet, so coredns is Pending
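With eleven nodes planned, scanning the status column by eye gets tedious. As a small sketch, nodes that are not `Ready` can be filtered out of the `kubectl get nodes` output; `not_ready_nodes` is a hypothetical helper and relies on the default column layout shown above.

```shell
# Read `kubectl get nodes` output on stdin and print nodes whose STATUS
# is not exactly "Ready" (a combined status such as
# "Ready,SchedulingDisabled" is also reported).
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage: kubectl get nodes | not_ready_nodes
```

At this stage k8s-master01 is expected in the result, because the network plugin has not been installed yet.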

6. High availability configuration

The following steps are performed on master01.

Copy the certificates

    USER=root
    CONTROL_PLANE_IPS="k8s-master02 k8s-master03"
    for host in $CONTROL_PLANE_IPS; do
        ssh "${USER}"@$host "mkdir -p /etc/kubernetes/pki/etcd"
        scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:/etc/kubernetes/pki/ca.crt
        scp /etc/kubernetes/pki/ca.key "${USER}"@$host:/etc/kubernetes/pki/ca.key
        scp /etc/kubernetes/pki/sa.key "${USER}"@$host:/etc/kubernetes/pki/sa.key
        scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:/etc/kubernetes/pki/sa.pub
        scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:/etc/kubernetes/pki/front-proxy-ca.crt
        scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:/etc/kubernetes/pki/front-proxy-ca.key
        scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.crt
        scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.key
        scp /etc/kubernetes/admin.conf "${USER}"@$host:/etc/kubernetes/admin.conf
    done
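The copy step transfers the same nine files to each additional master. As a hedged sketch of the same idea driven by a file list, the helper below prints the commands for one target host so they can be reviewed before running; `render_cert_copy` is hypothetical, and the file list is copied from the script above.

```shell
# Files that the copy step transfers from master01, relative to /etc/kubernetes.
HA_FILES="pki/ca.crt pki/ca.key pki/sa.key pki/sa.pub
pki/front-proxy-ca.crt pki/front-proxy-ca.key
pki/etcd/ca.crt pki/etcd/ca.key admin.conf"

# Print the ssh/scp commands for one target host; nothing is executed.
render_cert_copy() {
    user="$1"; target="$2"
    printf 'ssh %s@%s "mkdir -p /etc/kubernetes/pki/etcd"\n' "$user" "$target"
    for f in $HA_FILES; do
        printf 'scp /etc/kubernetes/%s %s@%s:/etc/kubernetes/%s\n' "$f" "$user" "$target" "$f"
    done
}

# Usage: render_cert_copy root k8s-master02 | sh
for host in k8s-master02 k8s-master03; do
    render_cert_copy root "$host"
done
```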

The following steps are performed on master02.

Add master02 to the cluster

Edit kubeadm-config.yaml as follows

    [root@k8s-master02 ~]# cat kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1alpha2
    kind: MasterConfiguration
    kubernetesVersion: v1.11.1
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    apiServerCertSANs:
    - k8s-master01
    - k8s-master02
    - k8s-master03
    - k8s-master-lb
    - 192.168.20.20
    - 192.168.20.21
    - 192.168.20.22
    - 192.168.20.10
    api:
      advertiseAddress: 192.168.20.21
      controlPlaneEndpoint: 192.168.20.10:16443
    etcd:
      local:
        extraArgs:
          listen-client-urls: "https://127.0.0.1:2379,https://192.168.20.21:2379"
          advertise-client-urls: "https://192.168.20.21:2379"
          listen-peer-urls: "https://192.168.20.21:2380"
          initial-advertise-peer-urls: "https://192.168.20.21:2380"
          initial-cluster: "k8s-master01=https://192.168.20.20:2380,k8s-master02=https://192.168.20.21:2380"
          initial-cluster-state: existing
        serverCertSANs:
          - k8s-master02
          - 192.168.20.21
        peerCertSANs:
          - k8s-master02
          - 192.168.20.21
    controllerManagerExtraArgs:
      node-monitor-grace-period: 10s
      pod-eviction-timeout: 10s
    networking:
      # This CIDR is a calico default. Substitute or remove for your CNI provider.
      podSubnet: "172.168.0.0/16"
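The `initial-cluster` value grows by one member with each master that joins: master01 lists only itself, while master02 lists master01 and itself. A minimal sketch of building that string follows; `initial_cluster` is a hypothetical helper that assumes the hostname and IP layout from the host table (k8s-master01 at 192.168.20.20, and so on).

```shell
# Print the etcd initial-cluster value for the first N masters.
# Assumes k8s-master01.. map to 192.168.20.20.. as in the host table.
initial_cluster() {
    n="$1"; i=1; out=""
    while [ "$i" -le "$n" ]; do
        entry=$(printf 'k8s-master%02d=https://192.168.20.%d:2380' "$i" $((19 + i)))
        out="${out:+$out,}$entry"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}

# Value for the master02 config file:
initial_cluster 2
```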

Pull the images in advance

kubeadm config images pull --config /root/kubeadm-config.yaml

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.11.1 k8s.gcr.io/kube-apiserver-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.11.1 k8s.gcr.io/kube-proxy-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.18 k8s.gcr.io/etcd-amd64:3.2.18

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.11.1 k8s.gcr.io/kube-scheduler-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.11.1 k8s.gcr.io/kube-controller-manager-amd64:v1.11.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.1.3 k8s.gcr.io/coredns:1.1.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
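The tag commands above all follow one pattern, so they can be generated in a loop. The following is a minimal sketch that only prints the `docker tag` commands (a dry run); the variable names `SRC`, `DST`, `IMAGES` and the helper `retag_commands` are illustrative and not part of the original procedure.

```shell
#!/bin/sh
# Mirror prefix and target prefix, as used in the commands above.
SRC=registry.cn-hangzhou.aliyuncs.com/google_containers
DST=k8s.gcr.io

# Image list copied from the tag commands above.
IMAGES="kube-apiserver-amd64:v1.11.1
kube-proxy-amd64:v1.11.1
etcd-amd64:3.2.18
kube-scheduler-amd64:v1.11.1
kube-controller-manager-amd64:v1.11.1
coredns:1.1.3
pause-amd64:3.1
pause:3.1"

# Print one "docker tag" command per image (hypothetical helper).
retag_commands() {
    for image in $IMAGES; do
        echo "docker tag ${SRC}/${image} ${DST}/${image}"
    done
}

retag_commands
```

To apply the tags instead of printing them, the output can be piped to a shell, for example `retag_commands | sh`.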

Create the certificates and the kubelet configuration files

kubeadm alpha phase certs all --config /root/kubeadm-config.yaml
kubeadm alpha phase kubeconfig controller-manager --config /root/kubeadm-config.yaml
kubeadm alpha phase kubeconfig scheduler --config /root/kubeadm-config.yaml
kubeadm alpha phase kubelet config write-to-disk --config /root/kubeadm-config.yaml
kubeadm alpha phase kubelet write-env-file --config /root/kubeadm-config.yaml
kubeadm alpha phase kubeconfig kubelet --config /root/kubeadm-config.yaml
systemctl restart kubelet

Set the hostnames and addresses of k8s-master01 and k8s-master02

export CP0_IP=192.168.20.20
export CP0_HOSTNAME=k8s-master01
export CP1_IP=192.168.20.21
export CP1_HOSTNAME=k8s-master02

Add master02 as an etcd member

[root@k8s-master02 kubernetes]# kubectl exec -n kube-system etcd-${CP0_HOSTNAME} -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://${CP0_IP}:2379 member add ${CP1_HOSTNAME} https://${CP1_IP}:2380

Added member named k8s-master02 with ID 34c8bee3b342508 to cluster

ETCD_NAME="k8s-master02"
ETCD_INITIAL_CLUSTER="k8s-master02=https://192.168.20.21:2380,k8s-master01=https://192.168.20.20:2380"
ETCD_INITIAL_CLUSTER_STATE="existing"

[root@k8s-master02 kubernetes]# kubeadm alpha phase etcd local --config /root/kubeadm-config.yaml
[endpoint] WARNING: port specified in api.controlPlaneEndpoint overrides api.bindPort in the controlplane address
[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
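The `ETCD_INITIAL_CLUSTER` value printed by `member add` lists the same members as `initial-cluster` in kubeadm-config.yaml, only in a different order. The sketch below compares the two lists regardless of order; `normalize_members` and `same_members` are hypothetical helper names introduced for illustration.

```shell
#!/bin/sh
# Normalise a comma-separated "name=url" list: one entry per line, sorted.
normalize_members() {
    printf '%s\n' "$1" | tr ',' '\n' | sort
}

# Succeed if both lists contain the same members, regardless of order.
same_members() {
    [ "$(normalize_members "$1")" = "$(normalize_members "$2")" ]
}

# Value printed by "etcdctl member add" above.
FROM_ETCD="k8s-master02=https://192.168.20.21:2380,k8s-master01=https://192.168.20.20:2380"
# Value of initial-cluster in master02's kubeadm-config.yaml.
FROM_CONFIG="k8s-master01=https://192.168.20.20:2380,k8s-master02=https://192.168.20.21:2380"

if same_members "$FROM_ETCD" "$FROM_CONFIG"; then
    echo "initial-cluster matches"
else
    echo "initial-cluster differs"
fi
```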

Start master02

kubeadm alpha phase kubeconfig all --config /root/kubeadm-config.yaml
kubeadm alpha phase controlplane all --config /root/kubeadm-config.yaml
kubeadm alpha phase mark-master --config /root/kubeadm-config.yaml

Join master03 to the cluster

Edit kubeadm-config.yaml as follows

[root@k8s-master03 ~]# cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- k8s-master01
- k8s-master02
- k8s-master03
- k8s-master-lb
- 192.168.20.20
- 192.168.20.21
- 192.168.20.22
- 192.168.20.10
api:
  advertiseAddress: 192.168.20.22
  controlPlaneEndpoint: 192.168.20.10:16443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://192.168.20.22:2379"
      advertise-client-urls: "https://192.168.20.22:2379"
      listen-peer-urls: "https://192.168.20.22:2380"
      initial-advertise-peer-urls: "https://192.168.20.22:2380"
      initial-cluster: "k8s-master01=https://192.168.20.20:2380,k8s-master02=https://192.168.20.21:2380,k8s-master03=https://192.168.20.22:2380"
      initial-cluster-state: existing
    serverCertSANs:
      - k8s-master03
      - 192.168.20.22
    peerCertSANs:
      - k8s-master03
      - 192.168.20.22
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  # This CIDR is a calico default. Substitute or remove for your CNI provider.
  podSubnet: "172.168.0.0/16"

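The remaining steps are the same as for master02; when adding the etcd member, use master03's hostname (k8s-master03) and IP (192.168.20.22) in place of master02's.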
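The main difference between the two configuration files, apart from the node's own address, is that `initial-cluster` grows by one member for each master that joins. As a rough sketch, the value can be built from `name=ip` pairs; the function `initial_cluster` is a hypothetical helper, and the peer port 2380 is taken from the `listen-peer-urls` settings in this guide.

```shell
#!/bin/sh
# Build an etcd initial-cluster value from "name=ip" arguments.
# The peer port 2380 matches the listen-peer-urls used in this guide.
initial_cluster() {
    result=""
    for member in "$@"; do
        name=${member%%=*}
        ip=${member#*=}
        # Append with a comma separator, except before the first entry.
        result="${result:+${result},}${name}=https://${ip}:2380"
    done
    echo "$result"
}

# master02 joins a one-member cluster; master03 joins a two-member cluster.
initial_cluster k8s-master01=192.168.20.20 k8s-master02=192.168.20.21
initial_cluster k8s-master01=192.168.20.20 k8s-master02=192.168.20.21 k8s-master03=192.168.20.22
```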

On all master nodes, enable the keepalived health check and restart keepalived

7. Checks

Check the nodes

[root@k8s-master01 ~]# kubectl get nodes
NAME           STATUS     ROLES     AGE       VERSION
k8s-master01   NotReady   master    56m       v1.11.1
k8s-master02   NotReady   master    49m       v1.11.1
k8s-master03   NotReady   master    39m       v1.11.1

Check the pods

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE
coredns-777d78ff6f-4rcsb                0/1     Pending   0          56m   <none>          <none>
coredns-777d78ff6f-7xqzx                0/1     Pending   0          56m   <none>          <none>
etcd-k8s-master01                       1/1     Running   0          56m   192.168.20.20   k8s-master01
etcd-k8s-master02                       1/1     Running   0          44m   192.168.20.21   k8s-master02
etcd-k8s-master03                       1/1     Running   9          29m   192.168.20.22   k8s-master03
kube-apiserver-k8s-master01             1/1     Running   0          55m   192.168.20.20   k8s-master01
kube-apiserver-k8s-master02             1/1     Running   0          44m   192.168.20.21   k8s-master02
kube-apiserver-k8s-master03             1/1     Running   9          29m   192.168.20.22   k8s-master03
kube-controller-manager-k8s-master01    1/1     Running   1          56m   192.168.20.20   k8s-master01
kube-controller-manager-k8s-master02    1/1     Running   0          44m   192.168.20.21   k8s-master02
kube-controller-manager-k8s-master03    1/1     Running   2          37m   192.168.20.22   k8s-master03
kube-proxy-4cjhm                        1/1     Running   0          49m   192.168.20.21   k8s-master02
kube-proxy-lkvjk                        1/1     Running   2          40m   192.168.20.22   k8s-master03
kube-proxy-m7htq                        1/1     Running   0          56m   192.168.20.20   k8s-master01
kube-scheduler-k8s-master01             1/1     Running   1          55m   192.168.20.20   k8s-master01
kube-scheduler-k8s-master02             1/1     Running   0          44m   192.168.20.21   k8s-master02
kube-scheduler-k8s-master03             1/1     Running   2          37m   192.168.20.22   k8s-master03

Note: the network plugin has not been installed yet, which is why the nodes are NotReady and the coredns pods are Pending.
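When repeating this check, it can help to reduce the `kubectl get nodes` table to a single number. The sketch below counts the nodes whose STATUS column is not exactly `Ready`; `count_not_ready` is a hypothetical helper that reads the table on stdin, so it can be tried on the output shown above without a cluster.

```shell
#!/bin/sh
# Count nodes whose STATUS column is not exactly "Ready".
# Reads "kubectl get nodes" output (header line included) on stdin.
count_not_ready() {
    awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Example with the output shown above; on a live cluster use:
#   kubectl get nodes | count_not_ready
count_not_ready <<'EOF'
NAME           STATUS     ROLES     AGE       VERSION
k8s-master01   NotReady   master    56m       v1.11.1
k8s-master02   NotReady   master    49m       v1.11.1
k8s-master03   NotReady   master    39m       v1.11.1
EOF
```

Once the network plugin is installed, the count should drop to 0.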

8. Deploy the network plugin

On any master node

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

On all master nodes, allow the HPA to collect data through the API by adding the following flag to the kube-controller-manager manifest

vi /etc/kubernetes/manifests/kube-controller-manager.yaml
- --horizontal-pod-autoscaler-use-rest-clients=false

On all master nodes, enable Istio automatic injection by editing /etc/kubernetes/manifests/kube-apiserver.yaml

vi /etc/kubernetes/manifests/kube-apiserver.yaml
- --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota
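Because the manifest is edited by hand on every master, a quick check that the webhook plugins actually ended up in the flag can catch typos. The following is a minimal sketch; `has_admission_plugin` is a hypothetical helper, and it assumes the flag sits on a single line of the manifest, as in the example above.

```shell
#!/bin/sh
# Succeed if the given manifest enables the named admission plugin.
# $1: path to kube-apiserver.yaml, $2: plugin name.
has_admission_plugin() {
    grep -e '--enable-admission-plugins=' "$1" \
        | sed 's/.*--enable-admission-plugins=//' \
        | tr ',' '\n' \
        | grep -qx "$2"
}

MANIFEST=/etc/kubernetes/manifests/kube-apiserver.yaml
# Only run the check where the manifest exists (that is, on a master node).
if [ -f "$MANIFEST" ]; then
    for plugin in MutatingAdmissionWebhook ValidatingAdmissionWebhook; do
        if has_admission_plugin "$MANIFEST" "$plugin"; then
            echo "$plugin: enabled"
        else
            echo "$plugin: missing"
        fi
    done
fi
```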

Restart the service on all master nodes

# Restart the service

systemctl restart kubelet

Pull the calico images on all nodes

# kubernetes networks add ons
docker pull quay.io/calico/typha:v0.7.4
docker pull quay.io/calico/node:v3.1.3
docker pull quay.io/calico/cni:v3.1.3

Pull the dashboard image on all nodes

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.8.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.8.3 gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3

Pull the prometheus and traefik images on all nodes

# prometheus
docker pull prom/prometheus:v2.3.1
# traefik
docker pull traefik:v1.6.3

Pull the Istio images on all nodes. Istio is a service mesh that supports features such as canary releases; skip this step if you do not need it.

# istio
docker pull docker.io/jaegertracing/all-in-one:1.5
docker pull docker.io/prom/prometheus:v2.3.1
docker pull docker.io/prom/statsd-exporter:v0.6.0
docker pull quay.io/coreos/hyperkube:v1.7.6_coreos.0
docker pull istio/citadel:1.0.0
docker tag istio/citadel:1.0.0 gcr.io/istio-release/citadel:1.0.0
docker pull istio/galley:1.0.0
docker tag istio/galley:1.0.0 gcr.io/istio-release/galley:1.0.0
docker pull istio/grafana:1.0.0
docker tag istio/grafana:1.0.0 gcr.io/istio-release/grafana:1.0.0
docker pull istio/mixer:1.0.0
docker tag istio/mixer:1.0.0 gcr.io/istio-release/mixer:1.0.0
docker pull istio/pilot:1.0.0
docker tag istio/pilot:1.0.0 gcr.io/istio-release/pilot:1.0.0
docker pull istio/proxy_init:1.0.0
docker tag istio/proxy_init:1.0.0 gcr.io/istio-release/proxy_init:1.0.0
docker pull istio/proxyv2:1.0.0
docker tag istio/proxyv2:1.0.0 gcr.io/istio-release/proxyv2:1.0.0
docker pull istio/servicegraph:1.0.0
docker tag istio/servicegraph:1.0.0 gcr.io/istio-release/servicegraph:1.0.0
docker pull istio/sidecar_injector:1.0.0
docker tag istio/sidecar_injector:1.0.0 gcr.io/istio-release/sidecar_injector:1.0.0

Pull the metrics-server and heapster images on all nodes

docker pull dotbalo/heapster-amd64:v1.5.4
docker pull dotbalo/heapster-grafana-amd64:v5.0.4
docker pull dotbalo/heapster-influxdb-amd64:v1.5.2
docker pull dotbalo/metrics-server-amd64:v0.2.1
docker tag dotbalo/heapster-amd64:v1.5.4 k8s.gcr.io/heapster-amd64:v1.5.4
docker tag dotbalo/heapster-grafana-amd64:v5.0.4 k8s.gcr.io/heapster-grafana-amd64:v5.0.4

docker tag dotbalo/heapster-influxdb-amd64:v1.5.2 k8s.gcr.io/heapster-influxdb-amd64:v1.5.2

docker tag dotbalo/metrics-server-amd64:v0.2.1 gcr.io/google_containers/metrics-server-amd64:v0.2.1

Install calico on master01. The nodes only become Ready once the calico network plugin is installed.

kubectl apply -f calico/

Check the status of the nodes and pods

[root@k8s-master01 ~]# kubectl get nodes
NAME           STATUS    ROLES     AGE       VERSION
k8s-master01   Ready     master    1h        v1.11.1
k8s-master02   Ready     master    1h        v1.11.1
k8s-master03   Ready     master    1h        v1.11.1

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE
calico-node-kwz9t                       2/2     Running   0          20m   192.168.20.20   k8s-master01
calico-node-rj8p8                       2/2     Running   0          20m   192.168.20.21   k8s-master02
calico-node-xfsg5                       2/2     Running   0          20m   192.168.20.22   k8s-master03
coredns-777d78ff6f-4rcsb                1/1     Running   0          1h    172.168.1.3     k8s-master02
coredns-777d78ff6f-7xqzx                1/1     Running   0          1h    172.168.1.2     k8s-master02
etcd-k8s-master01                       1/1     Running   0          1h    192.168.20.20   k8s-master01
etcd-k8s-master02                       1/1     Running   0          1h    192.168.20.21   k8s-master02
etcd-k8s-master03                       1/1     Running   9          17m   192.168.20.22   k8s-master03
kube-apiserver-k8s-master01             1/1     Running   0          21m   192.168.20.20   k8s-master01
kube-apiserver-k8s-master02             1/1     Running   0          21m   192.168.20.21   k8s-master02
kube-apiserver-k8s-master03             1/1     Running   1          17m   192.168.20.22   k8s-master03
kube-controller-manager-k8s-master01    1/1     Running   0          23m   192.168.20.20   k8s-master01
kube-controller-manager-k8s-master02    1/1     Running   1          23m   192.168.20.21   k8s-master02
kube-controller-manager-k8s-master03    1/1     Running   0          17m   192.168.20.22   k8s-master03
kube-proxy-4cjhm                        1/1     Running   0          1h    192.168.20.21   k8s-master02
kube-proxy-lkvjk                        1/1     Running   2          1h    192.168.20.22   k8s-master03
kube-proxy-m7htq                        1/1     Running   0          1h    192.168.20.20   k8s-master01
kube-scheduler-k8s-master01             1/1     Running   2          1h    192.168.20.20   k8s-master01
kube-scheduler-k8s-master02             1/1     Running   0          1h    192.168.20.21   k8s-master02
kube-scheduler-k8s-master03             1/1     Running   2          17m   192.168.20.22   k8s-master03
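In a long pod listing, a pod that is Running but has restarted many times (such as etcd-k8s-master03 above) is easy to miss. As a rough aid, the sketch below prints the pods whose RESTARTS column exceeds a threshold; `pods_restarting` is a hypothetical helper, and the default threshold of 5 is an arbitrary value chosen for this example.

```shell
#!/bin/sh
# Print the names of pods whose RESTARTS column exceeds a threshold.
# Reads "kubectl get pods" output (header line included) on stdin.
# $1: threshold (defaults to 5, an arbitrary value chosen for this sketch).
pods_restarting() {
    awk -v limit="${1:-5}" 'NR > 1 && $4 + 0 > limit + 0 { print $1 }'
}

# Demonstration with three rows taken from the listing above; on a live cluster:
#   kubectl get pods -n kube-system -o wide | pods_restarting 5
pods_restarting 5 <<'EOF'
NAME                READY   STATUS    RESTARTS   AGE   IP              NODE
etcd-k8s-master02   1/1     Running   0          1h    192.168.20.21   k8s-master02
etcd-k8s-master03   1/1     Running   9          17m   192.168.20.22   k8s-master03
kube-proxy-lkvjk    1/1     Running   2          1h    192.168.20.22   k8s-master03
EOF
```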

9. Make sure all services start on boot

All nodes

systemctl enable kubelet

systemctl enable docker

systemctl disable firewalld

Master nodes

systemctl enable keepalived

10. Install monitoring and the dashboard

On any master node, allow pods to be scheduled on the masters

kubectl taint nodes --all node-role.kubernetes.io/master-

Install metrics-server on any master node. Starting with v1.11.0, pod performance data is collected by metrics-server rather than heapster.

[root@k8s-master01 k8s-ha-install]# kubectl apply -f metrics-server/
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.extensions/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

Check the pod

[root@k8s-master01 k8s-ha-install]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE
.....
metrics-server-55fcc5b88-ncmld          1/1     Running   0          3m    172.168.3.2     k8s-node01
.....

Install heapster on any master node. Although metrics-server replaces heapster for collecting pod performance data from v1.11.0, the dashboard still uses heapster to display performance data.

[root@k8s-master01 k8s-ha-install]# kubectl apply -f heapster/
deployment.extensions/monitoring-grafana created
service/monitoring-grafana created
clusterrolebinding.rbac.authorization.k8s.io/heapster created
clusterrole.rbac.authorization.k8s.io/heapster created
serviceaccount/heapster created
deployment.extensions/heapster created
service/heapster created
deployment.extensions/monitoring-influxdb created
service/monitoring-influxdb created

Check the pods

[root@k8s-master01 k8s-ha-install]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE
.....
heapster-5874d498f5-75r58               1/1     Running   0          25s   172.168.3.3     k8s-node01
.....
monitoring-grafana-9b6b75b49-4zm6d      1/1     Running   0          26s   172.168.2.2     k8s-master03
monitoring-influxdb-655cd78874-lmz5l    1/1     Running   0          24s   172.168.0.2     k8s-master01
.....

Deploy the dashboard

[root@k8s-master01 k8s-ha-install]# kubectl apply -f dashboard/
secret/kubernetes-dashboard-certs created
serviceaccount/kubernetes-dashboard created
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
deployment.apps/kubernetes-dashboard created
service/kubernetes-dashboard created
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created

Check the final list of pods

[root@k8s-master01 k8s-ha-install]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP              NODE
calico-node-47bmt                       2/2     Running   0          49m   192.168.20.30   k8s-node01
calico-node-5r9ln                       2/2     Running   1          43m   192.168.20.31   k8s-node02
calico-node-kwz9t                       2/2     Running   0          2h    192.168.20.20   k8s-master01
calico-node-rj8p8                       2/2     Running   0          2h    192.168.20.21   k8s-master02
calico-node-xfsg5                       2/2     Running   0          2h    192.168.20.22   k8s-master03
coredns-777d78ff6f-4rcsb                1/1     Running   0          3h    172.168.1.3     k8s-master02
coredns-777d78ff6f-7xqzx                1/1     Running   0          3h    172.168.1.2     k8s-master02
etcd-k8s-master01                       1/1     Running   0          3h    192.168.20.20   k8s-master01
etcd-k8s-master02                       1/1     Running   0          3h    192.168.20.21   k8s-master02
etcd-k8s-master03                       1/1     Running   9          2h    192.168.20.22   k8s-master03
heapster-5874d498f5-75r58               1/1     Running   0          2m    172.168.3.3     k8s-node01
kube-apiserver-k8s-master01             1/1     Running   0          2h    192.168.20.20   k8s-master01
kube-apiserver-k8s-master02             1/1     Running   0          2h    192.168.20.21   k8s-master02
kube-apiserver-k8s-master03             1/1     Running   1          2h    192.168.20.22   k8s-master03
kube-controller-manager-k8s-master01    1/1     Running   0          2h    192.168.20.20   k8s-master01
kube-controller-manager-k8s-master02    1/1     Running   1          2h    192.168.20.21   k8s-master02
kube-controller-manager-k8s-master03    1/1     Running   0          2h    192.168.20.22   k8s-master03
kube-proxy-4cjhm                        1/1     Running   0          3h    192.168.20.21   k8s-master02
kube-proxy-ctjlh                        1/1     Running   0          43m   192.168.20.31   k8s-node02
kube-proxy-lkvjk                        1/1     Running   2          3h    192.168.20.22   k8s-master03
kube-proxy-m7htq                        1/1     Running   0          3h    192.168.20.20   k8s-master01
kube-proxy-n9nzb                        1/1     Running   0          49m   192.168.20.30   k8s-node01
kube-scheduler-k8s-master01             1/1     Running   2          3h    192.168.20.20   k8s-master01
kube-scheduler-k8s-master02             1/1     Running   0          3h    192.168.20.21   k8s-master02
kube-scheduler-k8s-master03             1/1     Running   2          2h    192.168.20.22   k8s-master03
kubernetes-dashboard-7954d796d8-mhpzq   1/1     Running   0          16s   172.168.0.3     k8s-master01
metrics-server-55fcc5b88-ncmld          1/1     Running   0          9m    172.168.3.2     k8s-node01
monitoring-grafana-9b6b75b49-4zm6d      1/1     Running   0          2m    172.168.2.2     k8s-master03
monitoring-influxdb-655cd78874-lmz5l    1/1     Running   0          2m    172.168.0.2     k8s-master01

11. Dashboard configuration

Open https://k8s-master-lb:30000

Get the token on any master node

[root@k8s-master01 dashboard]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
Name:         admin-user-token-xkzll
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name=admin-user
              kubernetes.io/service-account.uid=630e83b8-dcf1-11e8-bac5-000c293bfe27

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXhremxsIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2MzBlODNiOC1kY2YxLTExZTgtYmFjNS0wMDBjMjkzYmZlMjciLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.k9wh_tSMEPBJfExfkfmGwQRaYntkAKNEcbKaT0VH4y13pgXKNEsSt26mjH-C9kxSAkVuaWaXIMLqhKC3wuWF57I2SsgG6uVyRTjX3klaJQXjuuDWqS3gAIRIDKLvK57MzU72WKX1lCrW54t5ZvxPAVB3zd6b3qOQzju_1ieRq7PERGTG_nagmrA7302CDK3e5BD7hscNyQs7Tqw-xsf8Ws6GccszJVY6lBbbXYfKrgv3-Auyum9N0Y19Jf8VM6T4cFgVEfoR3Nm4t8D1Zp8fdCVO23qkbxbGM2HzBlzxt15c4_cqqYHbeF_8Nu4jdqnEQWLyrETiIm68yLDsEJ_4Ow
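Only the `token:` value is pasted into the dashboard login page, so it can be convenient to print that field alone. This is a minimal sketch; `extract_token` is a hypothetical helper that filters the `describe secret` output on stdin, and the demonstration input below is made up and shortened.

```shell
#!/bin/sh
# Print only the token value from "kubectl describe secret" output on stdin.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# On a live cluster (same secret lookup as above):
#   kubectl -n kube-system describe secret \
#     $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') \
#     | extract_token
# Demonstration with shortened, made-up input:
extract_token <<'EOF'
Name:         admin-user-token-xkzll
Type:  kubernetes.io/service-account-token
ca.crt:     1025 bytes
namespace:  11 bytes
token:      EXAMPLE.TOKEN.VALUE
EOF
```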

View the performance data

12. Join the worker nodes to the cluster

Reset the kubelet configuration on the node

[root@k8s-node01 ~]# kubeadm reset

[reset] WARNING: changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] are you sure you want to proceed? [y/N]: y

[preflight] running pre-flight checks

[reset] stopping the kubelet service

[reset] unmounting mounted directories in "/var/lib/kubelet"

[reset] removing kubernetes-managed containers

[reset] cleaning up running containers using crictl with socket /var/run/dockershim.sock

[reset] failed to list running pods using crictl: exit status 1. Trying to use docker instead

[reset] no etcd manifest found in "/etc/kubernetes/manifests/etcd.yaml". Assuming external etcd

[reset] deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

Join the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.20.10:16443 --token vl9ca2.g2cytziz8ctjsjzd --discovery-token-ca-cert-hash sha256:3459199acc7f719d3197bd14dfe2781f25b99a8686b215989e5d748ae92fcb28
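The token and CA certificate hash in the join command are specific to each cluster, so the values above must be replaced with your own. A copy-paste slip in either value makes the join fail, and the sketch below checks their format first. `valid_token` and `valid_ca_hash` are hypothetical helpers; the patterns reflect the usual kubeadm formats (a 6-character ID, a dot, and a 16-character secret; `sha256:` followed by 64 hex digits). If the token has expired, running `kubeadm token create --print-join-command` on a master should print a fresh join command.

```shell
#!/bin/sh
# Succeed if $1 looks like a kubeadm bootstrap token: 6 chars, a dot, 16 chars.
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Succeed if $1 looks like a CA cert hash: "sha256:" plus 64 hex digits.
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values from the join command above; replace with your own.
TOKEN=vl9ca2.g2cytziz8ctjsjzd
HASH=sha256:3459199acc7f719d3197bd14dfe2781f25b99a8686b215989e5d748ae92fcb28

if valid_token "$TOKEN" && valid_ca_hash "$HASH"; then
    echo "kubeadm join 192.168.20.10:16443 --token $TOKEN --discovery-token-ca-cert-hash $HASH"
else
    echo "token or hash is malformed" >&2
fi
```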

Check the nodes

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 3h v1.11.1

k8s-master02 Ready master 3h v1.11.1

k8s-master03 Ready master 2h v1.11.1

k8s-node01 Ready <none> 3m v1.11.1

Repeat the same steps on the other worker nodes

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 3h v1.11.1

k8s-master02 Ready master 3h v1.11.1

k8s-master03 Ready master 2h v1.11.1

k8s-node01 Ready <none> 6m v1.11.1

k8s-node02 Ready <none> 26s v1.11.1

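This completes the installation of the highly available cluster. Note: it is best to store the system images used here in a private registry and then change the image addresses in the configuration files to point to it.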
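One way to follow this advice is to retag each image for the private registry and push it. The sketch below only prints the commands (a dry run). The registry address `registry.example.com/k8s` and the helpers `to_private` and `mirror_commands` are placeholders introduced for illustration; note that `to_private` keeps only the final `name:tag` part, so a namespace such as `calico/` is dropped.

```shell
#!/bin/sh
# Hypothetical private registry; replace with your own address.
PRIVATE_REGISTRY=registry.example.com/k8s

# Map an image reference to the private registry, keeping only name:tag.
to_private() {
    echo "${PRIVATE_REGISTRY}/${1##*/}"
}

# Print the tag and push commands for one image (dry run).
mirror_commands() {
    target=$(to_private "$1")
    echo "docker tag $1 $target"
    echo "docker push $target"
}

for image in k8s.gcr.io/kube-apiserver-amd64:v1.11.1 quay.io/calico/node:v3.1.3; do
    mirror_commands "$image"
done
```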

References:

https://kubernetes.io/docs/setup/independent/high-availability/

https://github.com/cookeem/kubeadm-ha/blob/master/README_CN.md

https://blog.csdn.net/ygqygq2/article/details/81333400
