1. System environment

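Installing a highly available Kubernetes v1.13.x cluster with kubeadm is fairly simple. Compared with earlier versions, many steps are no longer necessary.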

For HA installation of Kubernetes v1.11 and v1.12 with kubeadm, see the author's earlier article.

Host information

| Hostname | IP address | Role | Components |
|---|---|---|---|
| k8s-master01 ~ 03 | 192.168.20.20 ~ 22 | 3 master nodes | keepalived, nginx, etcd, kubelet, kube-apiserver |
| k8s-master-lb | 192.168.20.10 | keepalived virtual IP (VIP) | none |
| k8s-node01 ~ 08 | 192.168.20.30 ~ 37 | 8 worker nodes | kubelet |

Host configuration

[root@k8s-master01 ~]# hostname

k8s-master01

[root@k8s-master01 ~]# free -g

total used free shared buff/cache available

Mem: 3 1 0 0 2 2

Swap: 0 0 0

[root@k8s-master01 ~]# cat /proc/cpuinfo | grep process

processor : 0

processor : 1

processor : 2

processor : 3

[root@k8s-master01 ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

Docker and Kubernetes versions

[root@k8s-master01 ~]# docker version

Client:

Version: 17.09.1-ce

API version: 1.32

Go version: go1.8.3

Git commit: 19e2cf6

Built: Thu Dec 7 22:23:40 2017

OS/Arch: linux/amd64

Server:

Version: 17.09.1-ce

API version: 1.32 (minimum version 1.12)

Go version: go1.8.3

Git commit: 19e2cf6

Built: Thu Dec 7 22:25:03 2017

OS/Arch: linux/amd64

Experimental: false

[root@k8s-master01 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:35:51Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:28:14Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}

2. Configure SSH key trust and prepare the nodes

Configure /etc/hosts on all nodes:

[root@k8s-master01 ~]# cat /etc/hosts

192.168.20.20 k8s-master01

192.168.20.21 k8s-master02

192.168.20.22 k8s-master03

192.168.20.10 k8s-master-lb

192.168.20.30 k8s-node01

192.168.20.31 k8s-node02
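The host table lists eight workers (k8s-node01 ~ 08, 192.168.20.30 ~ 37), but the sample /etc/hosts above shows only two. The following minimal sketch prints entries for the whole layout. It assumes names and addresses are numbered consecutively, as in the table. The function name gen_hosts is hypothetical.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the layout in the host table:
# the VIP, three masters (.20-.22) and eight workers (.30-.37).
gen_hosts() {
  echo "192.168.20.10 k8s-master-lb"
  local i
  for i in 1 2 3; do
    printf '192.168.20.%d k8s-master%02d\n' $((19 + i)) "$i"
  done
  for i in 1 2 3 4 5 6 7 8; do
    printf '192.168.20.%d k8s-node%02d\n' $((29 + i)) "$i"
  done
}

gen_hosts
```

Review the output first. Then append it on every node with `gen_hosts >> /etc/hosts`, dropping any entries that already exist.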

On k8s-master01, run:

[root@k8s-master01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:TE0eRfhGNRXL3btmmMRq+awUTkR4RnWrMf6Q5oJaTn0 root@k8s-master01

The key's randomart image is:

+---[RSA 2048]----+

| =*+oo+o|

| =o+. o.=|

| . =+ o +o|

| o . = = .|

| S + O . |

| = B = .|

| + O E = |

| = o = o |

| . . ..o |

+----[SHA256]-----+

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

Disable firewalld, NetworkManager and SELinux on all nodes

[root@k8s-master01 ~]# systemctl disable --now firewalld NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-master01 ~]# setenforce 0

[root@k8s-master01 ~]# sed -ri '/^[^#]*SELINUX=/s#=.+$#=disabled#' /etc/selinux/config

Disable dnsmasq on all nodes (if it is enabled)

systemctl disable --now dnsmasq

Disable swap on all nodes

[root@k8s-master01 ~]# swapoff -a && sysctl -w vm.swappiness=0

vm.swappiness = 0

[root@k8s-master01 ~]# sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
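By default kubelet refuses to start while swap is enabled, so it is worth confirming that both commands above took effect. The sketch below is a quick check. The helper names are hypothetical. The fstab pattern is the same one the sed command uses, and /proc/swaps lists active swap areas after its header line.

```shell
#!/usr/bin/env bash
# Print fstab lines that would still enable swap at boot; this is the same
# pattern the sed command above comments out.
active_swap_lines() {
  grep -E '^[^#]*swap' "${1:-/etc/fstab}" || true
}

# Print swap areas that are active right now (skip the /proc/swaps header).
active_swap_devices() {
  tail -n +2 /proc/swaps 2>/dev/null || true
}
```

Usage: `[ -z "$(active_swap_lines)$(active_swap_devices)" ] && echo "swap is off"`.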

Set the time zone and synchronize the clock on all nodes

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# add this to crontab
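`ntpdate` corrects the clock only once, so it needs to run periodically. One way is a root crontab entry like the one below. The 5-minute interval is an assumption. The absolute path matters because user crontabs run with a minimal PATH (/usr/bin:/bin), and on CentOS 7 ntpdate is installed as /usr/sbin/ntpdate.

```text
# crontab -e (as root): resync every 5 minutes
*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com >/dev/null 2>&1
```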

Raise the open-file limit on all nodes

ulimit -SHn 65535
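`ulimit -SHn 65535` only affects the current shell and the processes it starts. A common way to make the limit persistent for login sessions is /etc/security/limits.conf. Services started by systemd (docker, kubelet) ignore this file; they take their limits from `LimitNOFILE` in their unit files.

```text
# /etc/security/limits.conf (append); values mirror the ulimit command above
* soft nofile 65535
* hard nofile 65535
```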

Download the installation files on master01

[root@k8s-master01 ~]# git clone https://github.com/dotbalo/k8s-ha-install.git -b v1.13.x

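Set up the yum repositories on all nodes. Nodes other than master01 need a copy of /root/k8s-ha-install/repo first, for example via scp from master01.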

cd /etc/yum.repos.d

mkdir bak

mv *.repo bak/

cp /root/k8s-ha-install/repo/* .

Install common tools on all nodes, then update the system and reboot

yum install wget git jq psmisc vim net-tools -y

yum update -y && reboot

Configure kernel parameters for Kubernetes on all nodes

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

EOF

sysctl --system
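On CentOS 7, the `net.bridge.*` keys typically exist only after the br_netfilter module is loaded, and `net.netfilter.nf_conntrack_max` needs nf_conntrack. If these keys are missing, `sysctl --system` reports "cannot stat" errors for them. As a sketch, create the file below so systemd-modules-load loads both modules at boot (the file name is arbitrary). Then run `modprobe br_netfilter` and `modprobe nf_conntrack` once for the current boot and rerun `sysctl --system`.

```text
# /etc/modules-load.d/k8s.conf
br_netfilter
nf_conntrack
```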

3. Install the Kubernetes services

Install docker-ce on all nodes

yum -y install docker-ce-17.09.1.ce-1.el7.centos

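Install the cluster components on all nodes (kubectl is pinned too, so yum does not pull in a newer version as a kubeadm dependency)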

yum install -y kubelet-1.13.2-0.x86_64 kubeadm-1.13.2-0.x86_64 kubectl-1.13.2-0.x86_64

Start docker and kubelet on all nodes

systemctl enable docker && systemctl start docker

[root@k8s-master01 ~]# DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)

[root@k8s-master01 ~]# echo $DOCKER_CGROUPS

cgroupfs
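The `cut -d' ' -f3` pipeline depends on the exact layout of `docker info`. It works with the Docker 17.09 output shown here. Newer releases may indent these lines and also print a `Cgroup Version` line, which would break it. Below is a slightly more tolerant sketch (the function name is hypothetical) that reads only the `Cgroup Driver:` line.

```shell
#!/usr/bin/env bash
# Read `docker info` output on stdin and print the cgroup driver name.
# Splitting on ": " makes leading indentation irrelevant; only the
# "Cgroup Driver:" line matches, and awk stops at the first match.
parse_cgroup_driver() {
  awk -F': *' '/Cgroup Driver:/ { print $2; exit }'
}
```

Usage: `DOCKER_CGROUPS=$(docker info 2>/dev/null | parse_cgroup_driver)`.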

[root@k8s-master01 ~]# cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable kubelet && systemctl start kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

[root@k8s-master01 ~]#

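Note: kubelet failing to start at this point is expected and can be ignored. It keeps restarting until kubeadm init or kubeadm join writes its configuration.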

On all master nodes, install and start keepalived, and install docker-compose

yum install -y keepalived

systemctl enable keepalived && systemctl restart keepalived

# install docker-compose

yum install -y docker-compose

4. Install master01

The following steps are run on master01.

Create the configuration files

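First adjust the configuration values used by the script to match your environment. In particular, change nm-bond to the name of the server's network interface.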

[root@k8s-master01 k8s-ha-install]# ./create-config.sh

create kubeadm-config.yaml files success. config/k8s-master01/kubeadm-config.yaml

create kubeadm-config.yaml files success. config/k8s-master02/kubeadm-config.yaml

create kubeadm-config.yaml files success. config/k8s-master03/kubeadm-config.yaml

create keepalived files success. config/k8s-master01/keepalived/

create keepalived files success. config/k8s-master02/keepalived/

create keepalived files success. config/k8s-master03/keepalived/

create nginx-lb files success. config/k8s-master01/nginx-lb/

create nginx-lb files success. config/k8s-master02/nginx-lb/

create nginx-lb files success. config/k8s-master03/nginx-lb/

create calico.yaml file success. calico/calico.yaml

[root@k8s-master01 k8s-ha-install]# pwd

/root/k8s-ha-install
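The article does not reproduce the generated kubeadm-config.yaml. For orientation only, a kubeadm v1.13 ClusterConfiguration (config API `kubeadm.k8s.io/v1beta1`) for this layout would look roughly like the sketch below. The pod subnet is an assumption and must match the IP pool in calico/calico.yaml. Calico's stock default of 192.168.0.0/16 would overlap the 192.168.20.x host network used here. Compare this sketch with the files under config/ rather than using it as-is.

```yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.2
# Everything reaches the API through the VIP and the nginx port
controlPlaneEndpoint: "k8s-master-lb:16443"
# Same mirror the article uses for the pause image
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServer:
  # Names and IPs the API server certificate must cover
  certSANs:
  - k8s-master-lb
  - 192.168.20.10
  - 192.168.20.20
  - 192.168.20.21
  - 192.168.20.22
networking:
  # Assumption: must match Calico's IP pool
  podSubnet: "10.244.0.0/16"
```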

Distribute the files

[root@k8s-master01 k8s-ha-install]# export HOST1=k8s-master01

[root@k8s-master01 k8s-ha-install]# export HOST2=k8s-master02

[root@k8s-master01 k8s-ha-install]# export HOST3=k8s-master03

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST1/kubeadm-config.yaml $HOST1:/root/

kubeadm-config.yaml 100% 993 1.9MB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST2/kubeadm-config.yaml $HOST2:/root/

kubeadm-config.yaml 100% 1071 63.8KB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST3/kubeadm-config.yaml $HOST3:/root/

kubeadm-config.yaml 100% 1112 27.6KB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST1/keepalived/* $HOST1:/etc/keepalived/

check_apiserver.sh 100% 471 36.4KB/s 00:00

keepalived.conf 100% 558 69.9KB/s 00:00


[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST2/keepalived/* $HOST2:/etc/keepalived/

check_apiserver.sh 100% 471 10.8KB/s 00:00

keepalived.conf 100% 558 275.5KB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST3/keepalived/* $HOST3:/etc/keepalived/

check_apiserver.sh 100% 471 12.7KB/s 00:00

keepalived.conf 100% 558 1.1MB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST1/nginx-lb $HOST1:/root/

docker-compose.yaml 100% 213 478.6KB/s 00:00

nginx-lb.conf 100% 1036 2.6MB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST2/nginx-lb $HOST2:/root/

docker-compose.yaml 100% 213 12.5KB/s 00:00

nginx-lb.conf 100% 1036 35.5KB/s 00:00

[root@k8s-master01 k8s-ha-install]# scp -r config/$HOST3/nginx-lb $HOST3:/root/

docker-compose.yaml 100% 213 20.5KB/s 00:00

nginx-lb.conf 100% 1036 94.3KB/s 00:00

Start nginx on all master nodes

cd

docker-compose --file=/root/nginx-lb/docker-compose.yaml up -d

docker-compose --file=/root/nginx-lb/docker-compose.yaml ps
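The generated nginx-lb.conf is not shown in the article. The usual pattern is a layer-4 (stream) proxy listening on 16443, the port used in the join commands. It forwards to kube-apiserver on 6443, kubeadm's default secure port, on all three masters. The sketch below shows that pattern. The upstream name and timeouts are assumptions, and it requires an nginx build that includes the stream module.

```nginx
# Sketch of an nginx.conf for TCP load balancing of kube-apiserver
worker_processes 1;

events {
    worker_connections 1024;
}

stream {
    upstream kube_apiserver {
        server 192.168.20.20:6443 max_fails=3 fail_timeout=30s;
        server 192.168.20.21:6443 max_fails=3 fail_timeout=30s;
        server 192.168.20.22:6443 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 16443;
        proxy_connect_timeout 2s;
        proxy_pass kube_apiserver;
    }
}
```

Every master runs the same proxy, so whichever master holds the VIP can forward API traffic to any healthy API server.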

Restart keepalived on all master nodes

systemctl restart keepalived
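For reference, the sketch below shows the general shape of a keepalived.conf for master01. The router ID, priorities, password and check timings are assumptions, and the generated file may differ. nm-bond is the interface name the article says to replace. The tracked check script lowers a node's priority when its API server check fails. The weight must be large enough to push that priority below the next backup's (100 - 20 = 80 < 90 here) so the VIP actually moves.

```text
# Sketch of /etc/keepalived/keepalived.conf on k8s-master01
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 2
    weight -20
    fall 3
    rise 2
}

vrrp_instance VI_1 {
    # BACKUP on k8s-master02/03
    state MASTER
    # replace with the real NIC name
    interface nm-bond
    virtual_router_id 51
    # lower on the backups, e.g. 90 and 80
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.20.10
    }
    track_script {
        chk_apiserver
    }
}
```

To see which master currently holds the VIP, run `ip addr show | grep 192.168.20.10` on each master.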

Pull the images in advance

kubeadm config images pull --config /root/kubeadm-config.yaml

Initialize the cluster

kubeadm init --config /root/kubeadm-config.yaml

....

kubeadm join k8s-master-lb:16443 --token cxwr3f.2knnb1gj83ztdg9l --discovery-token-ca-cert-hash sha256:41718412b5d2ccdc8b7326fd440360bf186a21dac4a0769f460ca4bdaf5d2825

....

[root@k8s-master01 ~]# cat <<EOF >> ~/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

[root@k8s-master01 ~]# source ~/.bashrc

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 2m11s v1.13.2

Check the pod status

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-89cc84847-2h7r6 0/1 ContainerCreating 0 3m12s <none> k8s-master01 <none> <none>

coredns-89cc84847-fhwbr 0/1 ContainerCreating 0 3m12s <none> k8s-master01 <none> <none>

etcd-k8s-master01 1/1 Running 0 2m31s 192.168.20.20 k8s-master01 <none> <none>

kube-apiserver-k8s-master01 1/1 Running 0 2m36s 192.168.20.20 k8s-master01 <none> <none>

kube-controller-manager-k8s-master01 1/1 Running 0 2m39s 192.168.20.20 k8s-master01 <none> <none>

kube-proxy-kb95s 1/1 Running 0 3m12s 192.168.20.20 k8s-master01 <none> <none>

kube-scheduler-k8s-master01 1/1 Running 0 2m46s 192.168.20.20 k8s-master01 <none> <none>

At this point CoreDNS is stuck in ContainerCreating, and its pod events show the following error:

Normal Scheduled 2m51s default-scheduler Successfully assigned kube-system/coredns-89cc84847-2h7r6 to k8s-master01

Warning NetworkNotReady 2m3s (x25 over 2m51s) kubelet, k8s-master01 network is not ready: [runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

This happens because no network plugin has been installed yet. Ignore it for now.

Install Calico

[root@k8s-master01 k8s-ha-install]# kubectl create -f calico/

configmap/calico-config created

service/calico-typha created

deployment.apps/calico-typha created

poddisruptionbudget.policy/calico-typha created

daemonset.extensions/calico-node created

serviceaccount/calico-node created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

Check again

[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-tp2dz 2/2 Running 0 42s

coredns-89cc84847-2djpl 1/1 Running 0 66s

coredns-89cc84847-vt6zq 1/1 Running 0 66s

etcd-k8s-master01 1/1 Running 0 27s

kube-apiserver-k8s-master01 1/1 Running 0 16s

kube-controller-manager-k8s-master01 1/1 Running 0 34s

kube-proxy-x497d 1/1 Running 0 66s

kube-scheduler-k8s-master01 1/1 Running 0 17s
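Instead of reading the listing by eye, a small filter can print the kube-system pods that are not fully ready. The sketch below uses a hypothetical helper name. It assumes the default column layout of `kubectl get pods` (NAME READY STATUS RESTARTS AGE). Pods of completed Jobs would also be reported.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --no-headers` output on stdin and print the name of
# every pod whose READY count is incomplete (e.g. 0/1) or whose STATUS is
# not Running. Exits 1 if any such pod is found, 0 otherwise.
unready_pods() {
  awk '{
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") { print $1; bad = 1 }
  } END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get pods -n kube-system --no-headers | unready_pods && echo "all kube-system pods are ready"`.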

5. High availability configuration

Copy the certificates from master01 to the other master nodes (run on master01)

USER=root

CONTROL_PLANE_IPS="k8s-master02 k8s-master03"

for host in $CONTROL_PLANE_IPS; do

ssh "${USER}"@$host "mkdir -p /etc/kubernetes/pki/etcd"

scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:/etc/kubernetes/pki/ca.crt

scp /etc/kubernetes/pki/ca.key "${USER}"@$host:/etc/kubernetes/pki/ca.key

scp /etc/kubernetes/pki/sa.key "${USER}"@$host:/etc/kubernetes/pki/sa.key

scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:/etc/kubernetes/pki/sa.pub

scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:/etc/kubernetes/pki/front-proxy-ca.crt

scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:/etc/kubernetes/pki/front-proxy-ca.key

scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.crt

scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.key

scp /etc/kubernetes/admin.conf "${USER}"@$host:/etc/kubernetes/admin.conf

done

The following steps are run on master02.

Pull the images in advance

kubeadm config images pull --config /root/kubeadm-config.yaml

Join master02 to the cluster. The only difference from the worker-node join command is the --experimental-control-plane flag:

kubeadm join k8s-master-lb:16443 --token cxwr3f.2knnb1gj83ztdg9l --discovery-token-ca-cert-hash sha256:41718412b5d2ccdc8b7326fd440360bf186a21dac4a0769f460ca4bdaf5d2825 --experimental-control-plane

......

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Master label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Check the status on master01

[root@k8s-master01 k8s-ha-install]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 15m v1.13.2

k8s-master02 Ready master 9m55s v1.13.2

Join master03 the same way: pull the images, then run the join command with --experimental-control-plane.

Check the final state of the master nodes

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-49dwr 2/2 Running 0 26m

calico-node-kz2d4 2/2 Running 0 22m

calico-node-zwnmq 2/2 Running 0 4m6s

coredns-89cc84847-dgxlw 1/1 Running 0 27m

coredns-89cc84847-n77x6 1/1 Running 0 27m

etcd-k8s-master01 1/1 Running 0 27m

etcd-k8s-master02 1/1 Running 0 22m

etcd-k8s-master03 1/1 Running 0 4m5s

kube-apiserver-k8s-master01 1/1 Running 0 27m

kube-apiserver-k8s-master02 1/1 Running 0 22m

kube-apiserver-k8s-master03 1/1 Running 3 4m6s

kube-controller-manager-k8s-master01 1/1 Running 1 27m

kube-controller-manager-k8s-master02 1/1 Running 0 22m

kube-controller-manager-k8s-master03 1/1 Running 0 4m6s

kube-proxy-f9qc5 1/1 Running 0 27m

kube-proxy-k55bg 1/1 Running 0 22m

kube-proxy-kbg9c 1/1 Running 0 4m6s

kube-scheduler-k8s-master01 1/1 Running 1 27m

kube-scheduler-k8s-master02 1/1 Running 0 22m

kube-scheduler-k8s-master03 1/1 Running 0 4m6s

[root@k8s-master01 ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 28m v1.13.2

k8s-master02 Ready master 22m v1.13.2

k8s-master03 Ready master 4m16s v1.13.2

[root@k8s-master01 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-6mqbv 28m system:node:k8s-master01 Approved,Issued

node-csr-GPLcR1G4Nchf-zuB5DaTWncoluMuENUfKvWKs0j2GdQ 23m system:bootstrap:9zp70m Approved,Issued

node-csr-cxAxrkllyidkBuZ8fck6fwq-ht1_u6s0snbDErM8bIs 4m51s system:bootstrap:9zp70m Approved,Issued

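On all master nodes, set how the HPA collects metrics. Edit /etc/kubernetes/manifests/kube-controller-manager.yaml and add `- --horizontal-pod-autoscaler-use-rest-clients=false` to the container's command list.

Note: per the kube-controller-manager reference, `true` (the default in 1.13) makes the HPA use the metrics APIs served through the aggregation layer, such as the metrics-server installed below. `false` falls back to the legacy Heapster-based client. Pick the value that matches your metrics pipeline.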
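The flag goes into the command list of the kube-controller-manager static Pod manifest. kubelet watches /etc/kubernetes/manifests and recreates the Pod when the file changes, so no manual restart is needed. The sketch below shows the relevant part of the manifest, with the existing flags elided.

```yaml
# /etc/kubernetes/manifests/kube-controller-manager.yaml (excerpt)
spec:
  containers:
  - command:
    - kube-controller-manager
    # ...existing flags unchanged...
    - --horizontal-pod-autoscaler-use-rest-clients=false
```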

6. Join the worker nodes to the cluster (run on each worker node)

kubeadm join 192.168.20.10:16443 --token ll4usb.qmplnofiv7z1j0an --discovery-token-ca-cert-hash sha256:e88a29f62ab77a59bf88578abadbcd37e89455515f6ecf3ca371656dc65b1d6e

......

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node02" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
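Bootstrap tokens created by `kubeadm init` expire after 24 hours by default, so a later join needs a fresh one. Running `kubeadm token create --print-join-command` on a master prints a complete worker join command. The `--discovery-token-ca-cert-hash` value is the SHA-256 of the CA certificate's DER-encoded public key. The sketch below wraps the openssl pipeline from the kubeadm documentation in a helper with a hypothetical name. It assumes an RSA CA key, which is what kubeadm creates.

```shell
#!/usr/bin/env bash
# Print the hex SHA-256 of a CA certificate's public key (DER-encoded
# SubjectPublicKeyInfo), i.e. the part after "sha256:" in
# --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On master01, `echo "sha256:$(ca_cert_hash)"` should print the same hash that appears in the join commands.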

Check from a master node

[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-49dwr 2/2 Running 0 13h

calico-node-9nmhb 2/2 Running 0 11m

calico-node-k5nmt 2/2 Running 0 11m

calico-node-kz2d4 2/2 Running 0 13h

calico-node-zwnmq 2/2 Running 0 13h

coredns-89cc84847-dgxlw 1/1 Running 0 13h

coredns-89cc84847-n77x6 1/1 Running 0 13h

etcd-k8s-master01 1/1 Running 0 13h

etcd-k8s-master02 1/1 Running 0 13h

etcd-k8s-master03 1/1 Running 0 13h

kube-apiserver-k8s-master01 1/1 Running 0 18m

kube-apiserver-k8s-master02 1/1 Running 0 17m

kube-apiserver-k8s-master03 1/1 Running 0 16m

kube-controller-manager-k8s-master01 1/1 Running 0 19m

kube-controller-manager-k8s-master02 1/1 Running 1 19m

kube-controller-manager-k8s-master03 1/1 Running 0 19m

kube-proxy-cl2zv 1/1 Running 0 11m

kube-proxy-f9qc5 1/1 Running 0 13h

kube-proxy-hkcq5 1/1 Running 0 11m

kube-proxy-k55bg 1/1 Running 0 13h

kube-proxy-kbg9c 1/1 Running 0 13h

kube-scheduler-k8s-master01 1/1 Running 1 13h

kube-scheduler-k8s-master02 1/1 Running 0 13h

kube-scheduler-k8s-master03 1/1 Running 0 13h


[root@k8s-master01 k8s-ha-install]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 13h v1.13.2

k8s-master02 Ready master 13h v1.13.2

k8s-master03 Ready master 13h v1.13.2

k8s-node01 Ready <none> 11m v1.13.2

k8s-node02 Ready <none> 11m v1.13.2
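After adding workers, a companion filter can list nodes that have not become Ready. The sketch below uses a hypothetical helper name and assumes the default `kubectl get nodes` columns (NAME STATUS ROLES AGE VERSION).

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and print every node
# whose STATUS column is not exactly "Ready" (NotReady, Unknown, or a
# cordoned "Ready,SchedulingDisabled"). Exits 1 if any is found.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"`.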

7. Install other components

Deploy metrics-server 0.3.1 (1.8+ manifests)

[root@k8s-master01 k8s-ha-install]# kubectl create -f metrics-server/

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.extensions/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-49dwr 2/2 Running 0 14h

calico-node-9nmhb 2/2 Running 0 69m

calico-node-k5nmt 2/2 Running 0 69m

calico-node-kz2d4 2/2 Running 0 14h

calico-node-zwnmq 2/2 Running 0 14h

coredns-89cc84847-dgxlw 1/1 Running 0 14h

coredns-89cc84847-n77x6 1/1 Running 0 14h

etcd-k8s-master01 1/1 Running 0 14h

etcd-k8s-master02 1/1 Running 0 14h

etcd-k8s-master03 1/1 Running 0 14h

kube-apiserver-k8s-master01 1/1 Running 0 6m23s

kube-apiserver-k8s-master02 1/1 Running 1 4m41s

kube-apiserver-k8s-master03 1/1 Running 0 4m34s

kube-controller-manager-k8s-master01 1/1 Running 0 78m

kube-controller-manager-k8s-master02 1/1 Running 1 78m

kube-controller-manager-k8s-master03 1/1 Running 0 77m

kube-proxy-cl2zv 1/1 Running 0 69m

kube-proxy-f9qc5 1/1 Running 0 14h

kube-proxy-hkcq5 1/1 Running 0 69m

kube-proxy-k55bg 1/1 Running 0 14h

kube-proxy-kbg9c 1/1 Running 0 14h

kube-scheduler-k8s-master01 1/1 Running 1 14h

kube-scheduler-k8s-master02 1/1 Running 0 14h

kube-scheduler-k8s-master03 1/1 Running 0 14h

metrics-server-7c5546c5c5-ms4nz 1/1 Running 0 25s

After about 5 minutes, check resource usage:

[root@k8s-master01 k8s-ha-install]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 155m 3% 1716Mi 44%

k8s-master02 337m 8% 1385Mi 36%

k8s-master03 450m 11% 1180Mi 30%

k8s-node01 153m 3% 582Mi 7%

k8s-node02 142m 3% 601Mi 7%

[root@k8s-master01 k8s-ha-install]# kubectl top pod -n kube-system

NAME CPU(cores) MEMORY(bytes)

calico-node-49dwr 15m 71Mi

calico-node-9nmhb 47m 60Mi

calico-node-k5nmt 46m 61Mi

calico-node-kz2d4 18m 47Mi

calico-node-zwnmq 16m 46Mi

coredns-89cc84847-dgxlw 2m 13Mi

coredns-89cc84847-n77x6 2m 13Mi

etcd-k8s-master01 27m 126Mi

etcd-k8s-master02 23m 117Mi

etcd-k8s-master03 19m 112Mi

kube-apiserver-k8s-master01 29m 410Mi

kube-apiserver-k8s-master02 19m 343Mi

kube-apiserver-k8s-master03 13m 343Mi

kube-controller-manager-k8s-master01 23m 97Mi

kube-controller-manager-k8s-master02 1m 16Mi

kube-controller-manager-k8s-master03 1m 16Mi

kube-proxy-cl2zv 18m 18Mi

kube-proxy-f9qc5 8m 20Mi

kube-proxy-hkcq5 30m 19Mi

kube-proxy-k55bg 8m 20Mi

kube-proxy-kbg9c 6m 20Mi

kube-scheduler-k8s-master01 7m 20Mi

kube-scheduler-k8s-master02 9m 19Mi

kube-scheduler-k8s-master03 7m 19Mi

metrics-server-7c5546c5c5-ms4nz 3m 14Mi

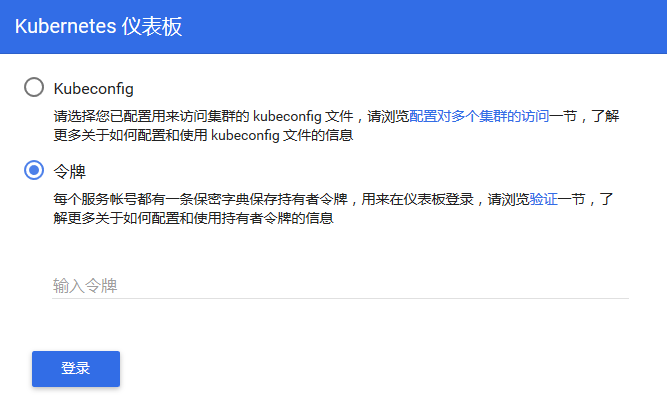

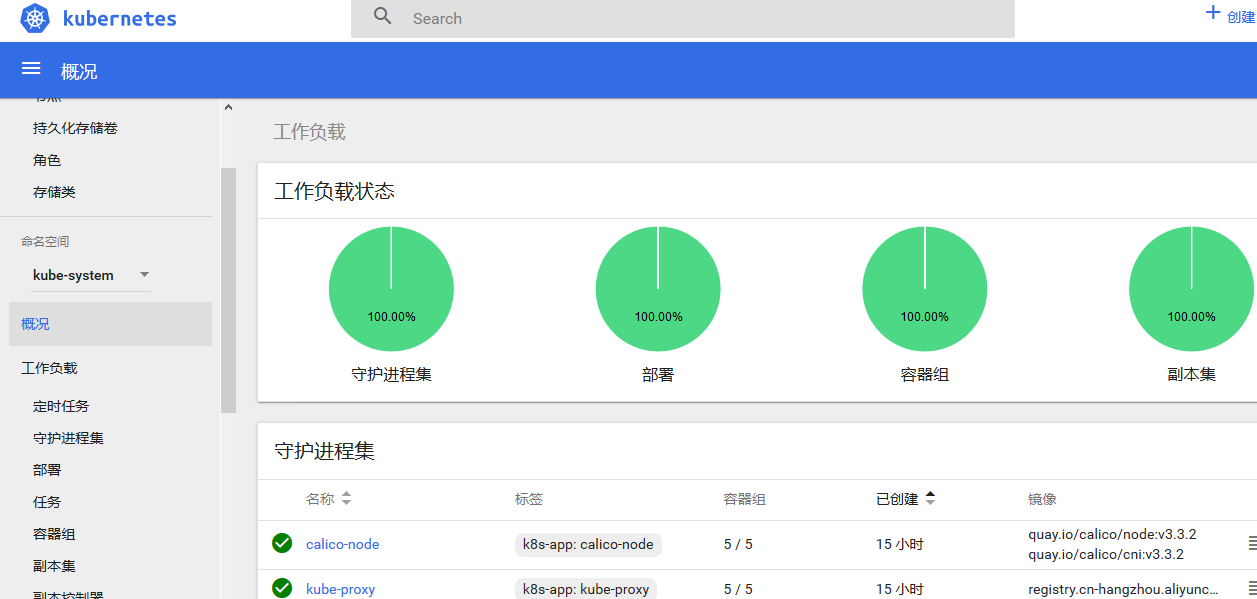

Deploy Dashboard v1.10.0

[root@k8s-master01 k8s-ha-install]# kubectl create -f dashboard/

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

Check the pods and services

[root@k8s-master01 k8s-ha-install]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-typha ClusterIP 10.102.221.48 <none> 5473/TCP 15h

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 15h

kubernetes-dashboard NodePort 10.105.18.61 <none> 443:30000/TCP 7s

metrics-server ClusterIP 10.101.178.115 <none> 443/TCP 23m

[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system -l k8s-app=kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-845b47dbfc-j4r48 1/1 Running 0 7m14s

Access the dashboard at: https://192.168.20.10:30000/#!/login
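The token lookup below greps for an `admin-user` ServiceAccount, but the dashboard/ output above does not show one being created. If your cluster lacks it, the manifest below is a common sketch following the pattern of the Dashboard project's "creating sample user" guide. It binds the account to `cluster-admin`, so treat its token as a full-access credential. Apply it with `kubectl create -f admin-user.yaml` (the file name is hypothetical).

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
```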

View the login token:

[root@k8s-master01 k8s-ha-install]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-455bd

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: e6effde6-1a0a-11e9-ae1a-000c298bf023

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTQ1NWJkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJlNmVmZmRlNi0xYTBhLTExZTktYWUxYS0wMDBjMjk4YmYwMjMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.Lw8hErqRoEC3e4VrEsAkFraytQI13NWj2osm-3lhaFDfgLtj4DIadq3ef8VgxpmyViPRzPh5fhq7EejuGH6V9cPsqEVlNBjWG0Wzfn0QuPP0xkxoW2V7Lne14Pu0-bTDE4P4UcW4MGPJAHSvckO9DTfYSzYghE2YeNKzDfhhA4DuWXaWGdNqzth_QjG_zbHsAB9kT3yVNM6bMVj945wZYSzXdJixSPBB46y92PAnfO0kAWsQc_zUtG8U1bTo7FdJ8BXgvNhytUvP7-nYanSIcpUoVXZRinQDGB-_aVRuoHHpiBOKmZlEqWOOaUrDf0DQJvDzt9TL-YHjimIstzv18A

ca.crt: 1025 bytes
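The `token` value is a JWT. Its middle segment is base64url-encoded JSON whose `sub` claim names the ServiceAccount, which should be `system:serviceaccount:kube-system:admin-user` here. The sketch below decodes that segment so you can check you copied the right token. The helper name is hypothetical, and it only decodes the payload without verifying the signature.

```shell
#!/usr/bin/env bash
# Decode the payload (second dot-separated segment) of a JWT given as $1.
# base64url uses '-' and '_' and drops '=' padding, so translate the
# alphabet and restore the padding before handing it to base64 -d.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

Usage: `jwt_payload "<token>"; echo`. To print only the token, use `kubectl -n kube-system get secret <secret-name> -o jsonpath='{.data.token}' | base64 -d`.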

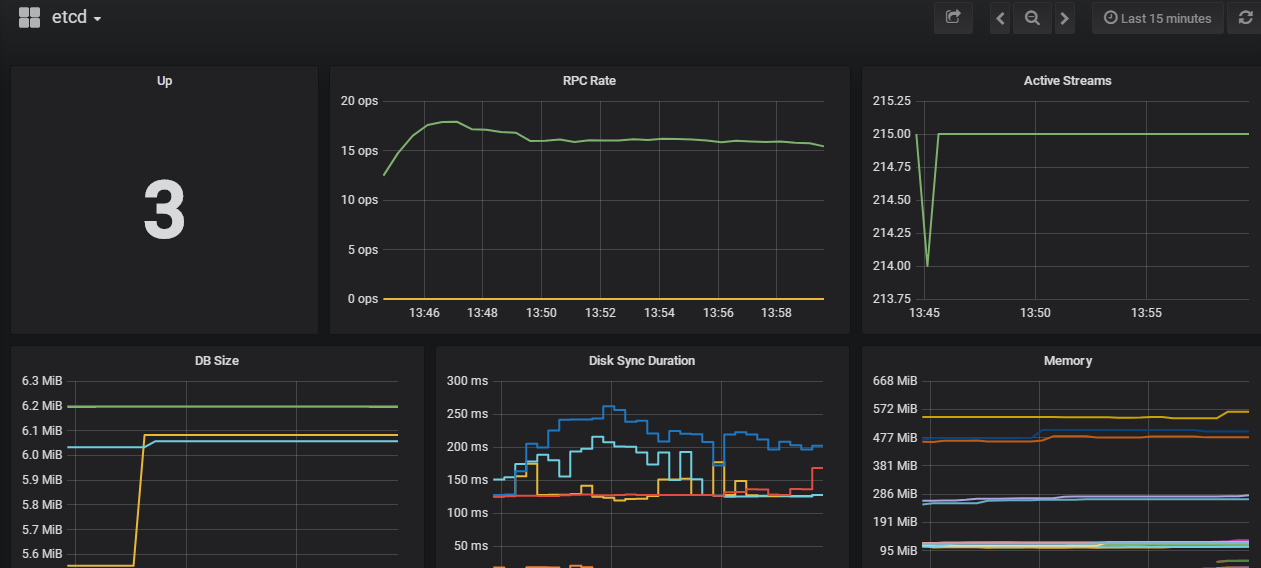

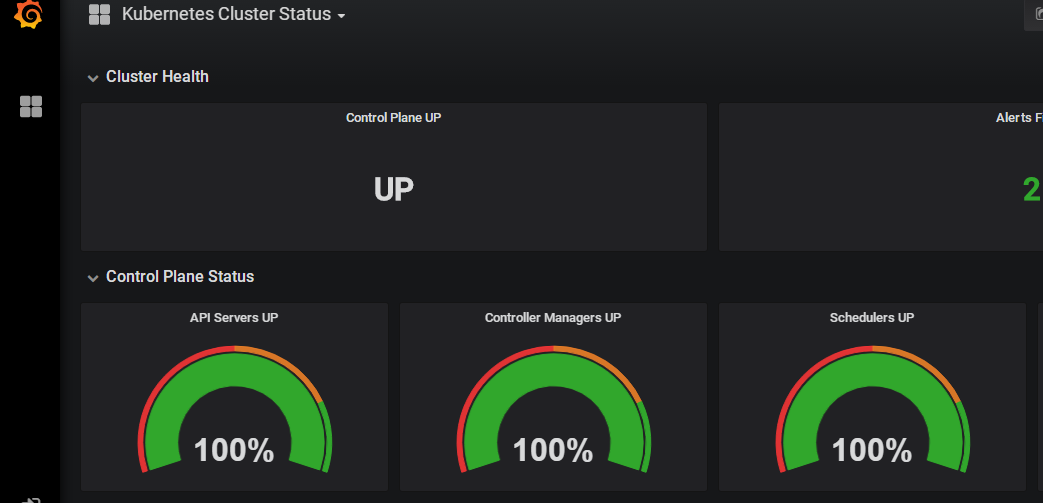

Prometheus deployment: https://www.cnblogs.com/dukuan/p/10177757.html
