KVM, short for "Kernel-based Virtual Machine", is a virtualization infrastructure for the Linux kernel that turns the kernel into a hypervisor.

It is usually paired with QEMU, which emulates the peripherals. This combination is called QEMU-KVM.

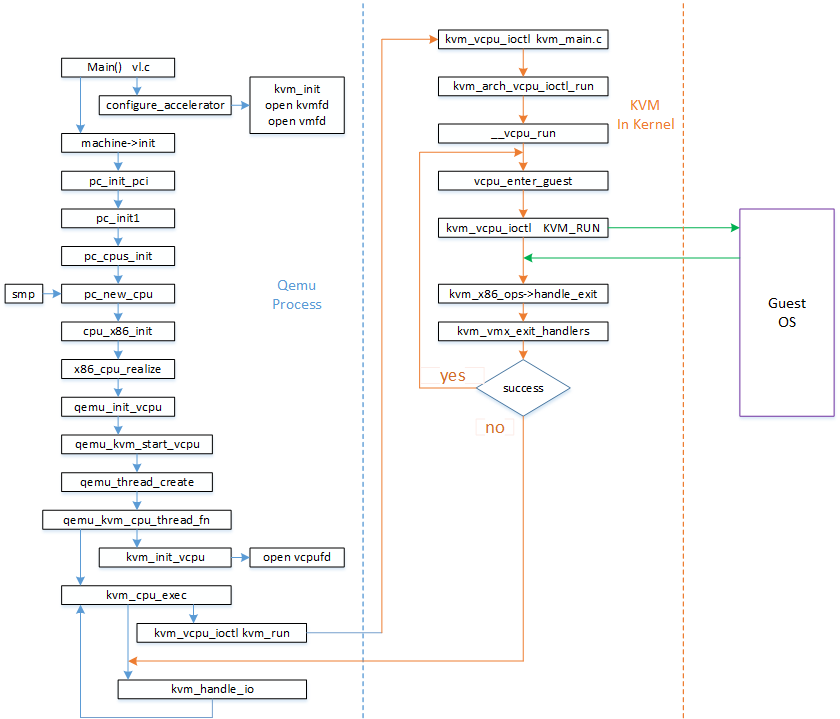

The basic architecture of KVM is as follows.

KVM Architecture.
{: .center}

The QEMU process runs in user space on top of a Linux kernel that has the KVM module loaded. The guest kernel runs on top of the hardware that QEMU emulates.

QEMU works together with KVM to use hardware-assisted virtualization (Intel VT-x or AMD-V). Because of this, QEMU does not have to emulate every CPU instruction. Most guest instructions run directly on the physical CPU, which makes it much faster than pure emulation.

To create a virtual machine on the host, we run a command like the following:

```
sudo qemu-system-x86_64 -enable-kvm -M q35 -m 8192 -cpu host,kvm=off ...
```

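This command sets up the following:

- An x86-64 machine with KVM acceleration (hardware-assisted virtualization) enabled.
- The emulated Q35 chipset.
- 8 GB (8192 MiB) of guest memory.
- The host CPU model passed through to the guest, with `kvm=off` hiding the KVM signature.

The trailing `...` stands for further options.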

When the command runs, QEMU opens /dev/kvm and sends the KVM_CREATE_VM ioctl() to the KVM module to create a VM.

```c
/* /linux/virt/kvm/kvm_main.c:2998 */
static int kvm_dev_ioctl_create_vm(unsigned long type)
{
    int r;
    struct kvm *kvm;

    kvm = kvm_create_vm(type);
    ...
}
```

```c
/* /linux/virt/kvm/kvm_main.c:545 */
static struct kvm *kvm_create_vm(unsigned long type)
{
    int r, i;
    struct kvm *kvm = kvm_arch_alloc_vm();
    ...
    r = kvm_arch_init_vm(kvm, type);
    r = hardware_enable_all();
    ...
}
```

```c
/* /linux/include/linux/kvm_host.h:738 */
static inline struct kvm *kvm_arch_alloc_vm(void)
{
    return kzalloc(sizeof(struct kvm), GFP_KERNEL);
}
```
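This whole call chain starts from one ioctl() in user space. The sketch below is a minimal example and assumes a Linux host with kernel headers installed. It opens /dev/kvm, checks the API version, and issues KVM_CREATE_VM, which is the request that kvm_dev_ioctl_create_vm() handles. The helper name open_vm() is hypothetical, and QEMU does considerably more work around this call.

```c
#include <assert.h>
#include <fcntl.h>
#include <linux/kvm.h>
#include <stddef.h>
#include <sys/ioctl.h>
#include <unistd.h>

/*
 * open_vm() is a hypothetical helper, not part of QEMU.
 * Returns a VM file descriptor, or -1 if KVM is unavailable.
 * On success *kvm_fd receives the /dev/kvm descriptor; the caller closes both.
 */
static int open_vm(int *kvm_fd)
{
    assert(kvm_fd != NULL);

    int sys_fd = open("/dev/kvm", O_RDWR);
    if (sys_fd < 0)
        return -1;                 /* module not loaded or no permission */

    /* The stable KVM API reports KVM_API_VERSION (12). */
    if (ioctl(sys_fd, KVM_GET_API_VERSION, 0) != KVM_API_VERSION) {
        close(sys_fd);
        return -1;
    }

    /* Machine type 0 is the default; this request lands in kvm_dev_ioctl_create_vm(). */
    int vm_fd = ioctl(sys_fd, KVM_CREATE_VM, 0UL);
    if (vm_fd < 0) {
        close(sys_fd);
        return -1;
    }

    *kvm_fd = sys_fd;
    return vm_fd;
}
```

A real VMM would then call KVM_SET_USER_MEMORY_REGION and KVM_CREATE_VCPU on the returned VM descriptor.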

`struct kvm` is the per-VM data structure that KVM uses. It is defined as follows:

```c
/* /linux/include/linux/kvm_host.h:361 */
struct kvm {
    spinlock_t mmu_lock;
    struct mutex slots_lock;
    struct mm_struct *mm;
    ...
};
```

It holds all the per-VM state that KVM needs. Examples include the MMU lock, the memory-slot lock, and the mm_struct of the process that owns the VM.

kzalloc() only zeroes the structure. The architecture-specific initialization happens in kvm_arch_init_vm(), shown here for x86:

```c
/* /linux/arch/x86/kvm/x86.c:7726 */
int kvm_arch_init_vm(struct kvm *kvm, unsigned long type)
{
    INIT_HLIST_HEAD(&kvm->arch.mask_notifier_list);
    INIT_LIST_HEAD(&kvm->arch.active_mmu_pages);
    ...
    kvm_page_track_init(kvm);
    kvm_mmu_init_vm(kvm);
    return 0;
}
```
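INIT_LIST_HEAD() and INIT_HLIST_HEAD() put each list head into its empty state before any pages or notifiers are added. The sketch below re-implements the list idea from include/linux/list.h in user space so you can try the behavior outside the kernel. It is a simplified illustrative copy, not the kernel header. The lowercase helper names and `struct kvm_arch_sketch` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified user-space versions of the kernel list types. */
struct list_head { struct list_head *next, *prev; };
struct hlist_node;                       /* only used through a pointer here */
struct hlist_head { struct hlist_node *first; };

/* A circular doubly linked list is empty when the head points to itself. */
static void init_list_head(struct list_head *list)
{
    list->next = list;
    list->prev = list;
}

/* An hlist (single-pointer head, used for hash buckets) is empty when first is NULL. */
static void init_hlist_head(struct hlist_head *h)
{
    h->first = NULL;
}

static int list_empty(const struct list_head *head)
{
    return head->next == head;
}

/* Insert new right after head, as the kernel's list_add() does. */
static void list_add(struct list_head *new, struct list_head *head)
{
    struct list_head *next = head->next;
    next->prev = new;
    new->next = next;
    new->prev = head;
    head->next = new;
}

/* Hypothetical slice of kvm->arch holding the two lists set up above. */
struct kvm_arch_sketch {
    struct hlist_head mask_notifier_list;
    struct list_head active_mmu_pages;
};

static void arch_init_vm_sketch(struct kvm_arch_sketch *arch)
{
    init_hlist_head(&arch->mask_notifier_list);
    init_list_head(&arch->active_mmu_pages);
}
```

The kernel embeds list_head directly inside the tracked objects, for example each shadow page. As a result, an empty list needs no allocation, only these two self-pointers.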

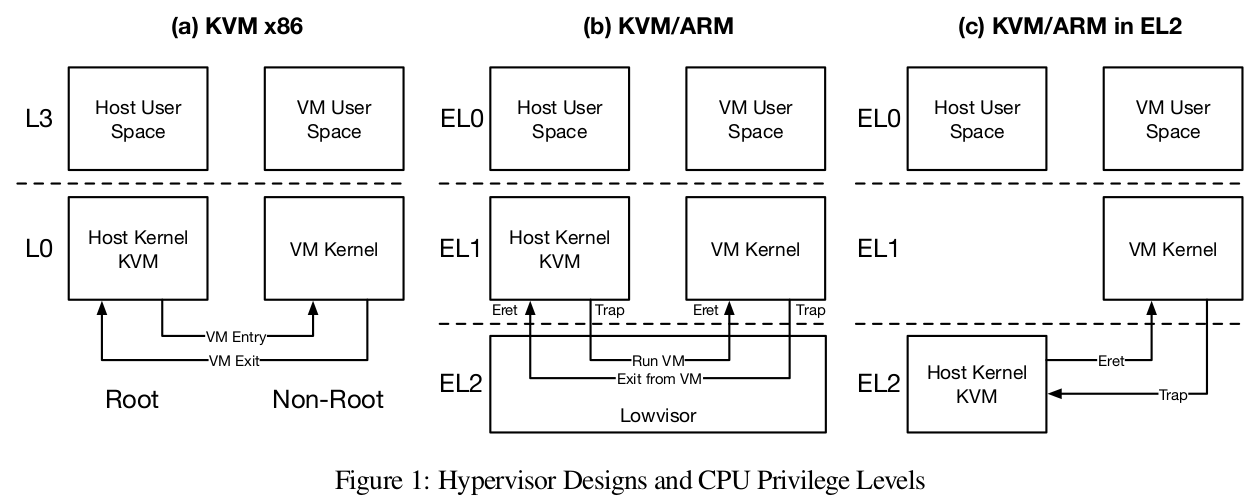

KVM on ARM

The walkthrough of the kernel KVM code is in KVM Internal: How a VM is Created?. Figure source: the paper Optimizing the Design and Implementation of the Linux ARM Hypervisor.

Virtualization Host Extensions (VHE) on ARMv8.1

Reference: Optimizing the Design and Implementation of KVM/Arm.

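- Setting HCR_EL2.E2H enables VHE, and clearing it disables VHE.

- The host kernel runs directly in EL2, without stage 2 translation table overhead.

- Hypercall overhead is lower, because the host no longer has to switch between the kernel in EL1 and the hypervisor code in EL2.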
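Whether VHE is active comes down to bits in HCR_EL2. The sketch below decodes a raw HCR_EL2 value. It assumes the bit positions from the Arm architecture: E2H is bit 34 and TGE is bit 27, matching HCR_E2H and HCR_TGE in arch/arm64/include/asm/kvm_arm.h. Only EL2 can read the real register, so the functions take the value as a parameter, and their names are hypothetical. A VHE host runs with both E2H and TGE set. KVM keeps E2H but clears TGE while a guest is running.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit positions in HCR_EL2 (Arm ARM; also HCR_E2H / HCR_TGE in the kernel). */
#define HCR_E2H (UINT64_C(1) << 34)  /* EL2 Host: enables VHE */
#define HCR_TGE (UINT64_C(1) << 27)  /* Trap General Exceptions: route EL0 exceptions to EL2 */

/* VHE is enabled whenever E2H is set. */
static bool vhe_enabled(uint64_t hcr_el2)
{
    return (hcr_el2 & HCR_E2H) != 0;
}

/* Host context under VHE: host kernel in EL2, host user space in EL0 trapping to EL2. */
static bool vhe_host_context(uint64_t hcr_el2)
{
    return vhe_enabled(hcr_el2) && (hcr_el2 & HCR_TGE) != 0;
}
```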

References

- Wikipedia. Kernel-based Virtual Machine. [Online]. https://en.wikipedia.org/wiki/Kernel-based_Virtual_Machine

- Novell. KVM Architecture. [Online]. https://www.slideshare.net/NOVL/virtualization-with-kvm-kernelbased-virtual-machine

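The article "KVM Run Process: QEMU Core Flow" explained how QEMU starts KVM by calling the KVM_RUN API that KVM provides, which moves execution into kernel space. This article describes the core call flow of VM execution inside the kernel's KVM code. It is based on Linux 3.15.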

KVM Core Flow

Preparing for KVM_RUN

QEMU issues the KVM_RUN command with kvm_vcpu_ioctl(env, KVM_RUN, 0). The ioctl enters the kernel, reaches the kernel's kvm_vcpu_ioctl(), and continues along this path:

```
kvm_vcpu_ioctl()                  file: virt/kvm/kvm_main.c, line: 1958
--->kvm_arch_vcpu_ioctl_run()     file: arch/x86/kvm, line: 6305
--->__vcpu_run()                  file: arch/x86/kvm/x86.c, line: 6156
```

__vcpu_run() also contains a main while loop:

```c
static int __vcpu_run(struct kvm_vcpu *vcpu)
```

Once KVM enters the main loop in __vcpu_run(), it calls vcpu_enter_guest(). As the name suggests, this function is the entry point into guest mode.

While r is greater than 0, the KVM kernel code keeps calling vcpu_enter_guest() and re-enters guest mode each time.

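When r is less than or equal to 0, the loop exits. Control then unwinds step by step to the original entry point, kvm_vcpu_ioctl(), and finally back to the QEMU process in user space. The previous article covers that part in detail. The relevant code is: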

```c
int kvm_cpu_exec(CPUArchState *env)
```

QEMU handles the exit according to its reason, mostly I/O-related operations. After handling it, QEMU calls kvm_vcpu_ioctl(env, KVM_RUN, 0) again to re-enter KVM.
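The user-space half of this cycle can be sketched with the public KVM API. This is a minimal example, not QEMU's kvm_cpu_exec(). It assumes vcpu_fd came from KVM_CREATE_VCPU and run points at the vCPU's mmap'ed struct kvm_run. The helper names are hypothetical, and the sketch handles only two exit reasons.

```c
#include <assert.h>
#include <linux/kvm.h>
#include <stdio.h>
#include <sys/ioctl.h>

/* Hypothetical helper: returns 1 to re-enter the guest, 0 to stop. */
static int handle_kvm_exit(const struct kvm_run *run)
{
    switch (run->exit_reason) {
    case KVM_EXIT_IO:
        /* Port I/O the kernel could not finish; run->io describes the access.
         * A real VMM would emulate the device here. */
        return 1;
    case KVM_EXIT_HLT:
        return 0;
    default:
        fprintf(stderr, "unhandled exit reason %u\n", run->exit_reason);
        return 0;
    }
}

/* KVM_RUN, handle the exit, KVM_RUN again: the cycle described above. */
static int run_vcpu(int vcpu_fd, struct kvm_run *run)
{
    for (;;) {
        if (ioctl(vcpu_fd, KVM_RUN, 0) < 0)
            return -1;
        if (!handle_kvm_exit(run))
            return 0;
    }
}
```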

Back in kernel space, execution reaches static int vcpu_enter_guest(struct kvm_vcpu *vcpu), which performs several important preparation steps:

```c
/* file: arch/x86/kvm/x86.c, line: 5944 */
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
```
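One of these preparation steps in the 3.15 source is draining vcpu->requests. Other parts of KVM set request bits, for example KVM_REQ_TLB_FLUSH. vcpu_enter_guest() then tests and clears each bit with kvm_check_request() before it enters the guest. The sketch below models that pattern in user space with a plain bitmask. The request numbers, struct, and helper names are illustrative assumptions, and the real code uses atomic bit operations.

```c
#include <assert.h>
#include <stdbool.h>

/* Illustrative request numbers; not necessarily the kernel's values. */
enum { REQ_TLB_FLUSH = 0, REQ_MMU_RELOAD = 1 };

struct vcpu_sketch {
    unsigned long requests;   /* one bit per pending request */
    int tlb_flushes;          /* counters stand in for the real work */
    int mmu_reloads;
};

/* Called by other code paths that need this vCPU to act before its next entry. */
static void make_request(int req, struct vcpu_sketch *v)
{
    v->requests |= 1UL << req;
}

/* Test-and-clear, mirroring the idea of kvm_check_request() (non-atomic here). */
static bool check_request(int req, struct vcpu_sketch *v)
{
    if (v->requests & (1UL << req)) {
        v->requests &= ~(1UL << req);
        return true;
    }
    return false;
}

/* The request-draining part of a vcpu_enter_guest()-like function. */
static void process_requests(struct vcpu_sketch *v)
{
    if (!v->requests)
        return;               /* fast path: nothing pending */
    if (check_request(REQ_MMU_RELOAD, v))
        v->mmu_reloads++;
    if (check_request(REQ_TLB_FLUSH, v))
        v->tlb_flushes++;
}
```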

Entering the Guest

kvm_x86_ops is a set of x86-specific callbacks. Its Intel VMX implementation, vmx_x86_ops, is defined in file: arch/x86/kvm/vmx.c, line: 8693

```c
static struct kvm_x86_ops vmx_x86_ops = {
    ...
};
```
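kvm_x86_ops is a table of function pointers. Generic x86 code calls, for example, kvm_x86_ops->run(vcpu), and the pointer resolves to vmx_vcpu_run() on Intel or to the SVM equivalent on AMD. The sketch below shows the same dispatch pattern with a hypothetical two-entry table. The struct and function names are invented for illustration.

```c
#include <assert.h>

struct vcpu;  /* opaque in this sketch */

/* A cut-down ops table; the real struct kvm_x86_ops has many more hooks. */
struct x86_ops_sketch {
    void (*run)(struct vcpu *vcpu);
    int (*handle_exit)(struct vcpu *vcpu);
};

static int vmx_runs;

static void fake_vmx_run(struct vcpu *vcpu)
{
    (void)vcpu;
    vmx_runs++;               /* the real vmx_vcpu_run() executes VMLAUNCH/VMRESUME */
}

static int fake_vmx_handle_exit(struct vcpu *vcpu)
{
    (void)vcpu;
    return 1;                 /* > 0: handled in the kernel, keep looping */
}

static const struct x86_ops_sketch fake_vmx_ops = {
    .run = fake_vmx_run,
    .handle_exit = fake_vmx_handle_exit,
};

/* Selected once (VMX or SVM) when the module loads, then used everywhere. */
static const struct x86_ops_sketch *ops = &fake_vmx_ops;

/* Generic code never names VMX directly; it always goes through the table. */
static int enter_and_handle(struct vcpu *vcpu)
{
    ops->run(vcpu);
    return ops->handle_exit(vcpu);
}
```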

The core inline assembly in vmx_vcpu_run() switches the CPU from VMX root mode to VMX non-root mode. It performs these main steps:

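- Store host registers: saves the host state so it can be restored after the VM exit. Host state is recorded in the VM's VMCS.

- Load guest registers: loads the guest state.

- Enter guest mode: switches into the VM with VMLAUNCH (the ASM_VMX_VMLAUNCH macro), or with VMRESUME on later entries. From here the CPU runs in another world, the guest OS.

- Save guest registers, load host registers: when a VM exit occurs, the guest state must be saved and the host state reloaded.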

When step 4 completes, the CPU has returned from VMX non-root mode to VMX root mode, and the host resumes execution.
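The "Enter guest mode" step has two variants. VMX requires VMLAUNCH when the VMCS is in the "clear" launch state, for example right after VMCLEAR. Later entries must use VMRESUME. In the 3.15 source, vmx_vcpu_run() chooses between them using a "launched" flag. The sketch below models only that rule plus the four steps in user space. The struct and function names are hypothetical, and register save and restore is reduced to copying two small structs.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, heavily reduced register file. */
struct regs { unsigned long rax, rsp; };

struct vmcs_sketch {
    bool launched;            /* launch state of the VMCS */
    struct regs guest;        /* guest state loaded on entry, saved on exit */
};

enum entry_insn { INSN_VMLAUNCH, INSN_VMRESUME };

/* VMCLEAR returns the VMCS to the "clear" launch state. */
static void vmclear(struct vmcs_sketch *v)
{
    v->launched = false;
}

/* One entry/exit round trip; returns the entry instruction that would be used. */
static enum entry_insn enter_guest(struct vmcs_sketch *v, struct regs *cpu)
{
    struct regs host = *cpu;                 /* 1. store host registers */
    *cpu = v->guest;                         /* 2. load guest registers */

    enum entry_insn insn = v->launched ? INSN_VMRESUME : INSN_VMLAUNCH;
    v->launched = true;                      /* 3. enter guest mode */
    cpu->rax += 1;                           /*    pretend the guest did some work */

    v->guest = *cpu;                         /* 4. save guest registers ... */
    *cpu = host;                             /*    ... and load host registers */
    return insn;
}
```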

Handling Guest Exits

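A guest exit does not end there. Every exit has a reason, and KVM must handle it according to that reason so the guest can keep running correctly. The key function here is vmx_handle_exit():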

```c
/* file: arch/x86/kvm/vmx.c, line: 6877 */
static int vmx_handle_exit(struct kvm_vcpu *vcpu)
```

The handlers for the many exit reasons are listed in this table:

```c
static int (*const kvm_vmx_exit_handlers[])(struct kvm_vcpu *vcpu) = {
    ...
};
```
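kvm_vmx_exit_handlers is indexed by the basic exit reason that the CPU reports. vmx_handle_exit() calls the matching entry if one exists. Otherwise it reports KVM_EXIT_UNKNOWN to user space and returns 0. The sketch below reproduces that lookup with a few made-up handlers. The exit-reason numbers follow the Intel SDM: CPUID = 10, HLT = 12, I/O instruction = 30. The handlers and their return values are illustrative assumptions. For instance, the fake HLT handler behaves as if no in-kernel interrupt controller were present.

```c
#include <assert.h>

/* Basic exit reasons from the Intel SDM (Appendix C). */
#define EXIT_REASON_CPUID           10
#define EXIT_REASON_HLT             12
#define EXIT_REASON_IO_INSTRUCTION  30

struct vcpu_sketch { int user_exit; unsigned hw_reason; };

/* Illustrative handlers: > 0 means "handled, re-enter guest", 0 means "go to user space". */
static int fake_handle_cpuid(struct vcpu_sketch *v) { (void)v; return 1; }
static int fake_handle_halt(struct vcpu_sketch *v)  { v->user_exit = 1; return 0; }
static int fake_handle_io(struct vcpu_sketch *v)    { v->user_exit = 1; return 0; }

/* Designated initializers: unlisted slots stay NULL. */
static int (*const exit_handlers[])(struct vcpu_sketch *) = {
    [EXIT_REASON_CPUID]          = fake_handle_cpuid,
    [EXIT_REASON_HLT]            = fake_handle_halt,
    [EXIT_REASON_IO_INSTRUCTION] = fake_handle_io,
};

#define MAX_EXIT_HANDLERS (sizeof(exit_handlers) / sizeof(exit_handlers[0]))

static int handle_exit(struct vcpu_sketch *v, unsigned exit_reason)
{
    if (exit_reason < MAX_EXIT_HANDLERS && exit_handlers[exit_reason])
        return exit_handlers[exit_reason](v);

    /* No handler: record the raw reason for user space (like KVM_EXIT_UNKNOWN). */
    v->user_exit = 1;
    v->hw_reason = exit_reason;
    return 0;
}
```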

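A handler returns a value greater than 0 when KVM has fully handled the exit in the kernel. It returns 0 when user space must take over, or a negative error code on failure. Either way, control goes back to the main loop in __vcpu_run():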

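- vcpu_enter_guest() > 0: the loop continues, and KVM prepares to enter guest mode again.

- vcpu_enter_guest() <= 0: the loop exits, and control returns to user space, where QEMU handles the exit according to its reason.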
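Putting this return-value convention together, the loop in __vcpu_run() behaves roughly like the sketch below. It is a simplified user-space model, not the kernel function. The hypothetical enter_guest_stub() replays a scripted list of return values in place of vcpu_enter_guest(). The real loop also handles halted vCPUs, pending signals, and rescheduling.

```c
#include <assert.h>

/* Scripted results standing in for vcpu_enter_guest() (hypothetical). */
struct script { const int *results; int len; int pos; int entries; };

static int enter_guest_stub(struct script *s)
{
    s->entries++;
    return s->pos < s->len ? s->results[s->pos++] : 0;
}

/*
 * Simplified model of the __vcpu_run() loop:
 *   r > 0  -> exit handled in the kernel, enter the guest again
 *   r <= 0 -> leave the loop; the ioctl returns and QEMU takes over
 */
static int vcpu_run_model(struct script *s)
{
    int r = 1;
    while (r > 0)
        r = enter_guest_stub(s);
    return r;
}
```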

Conclusion

This concludes the analysis of the core call flow in the KVM kernel code. As the flow above shows, the KVM kernel code has three main jobs:

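- Preparing to enter the guest.

- Entering the guest.

- Handling guest exits according to their reason. KVM handles what it can by itself and returns to the QEMU process in user space for anything it cannot handle.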

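In short, KVM and QEMU work together to keep the guest running correctly. By handling the various exceptions and exits, they let the guest run without knowing that it is in a virtualized environment.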