ps -elf | grep containerd 0 S root 19894 19862 0 80 0 - 1418 pipe_w 14:36 pts/3 00:00:00 grep --color=auto containerd 4 S root 39827 1 0 80 0 - 3197 futex_ Oct15 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 1ccf9e9d7f19e6b4159443e4f9eb69520efae7178ad14d8ddef3fb27b2a42a42 -address /run/containerd/containerd.sock 4 S root 39851 1 0 80 0 - 2565 futex_ Oct15 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 07c09e1bfbed1ddd50dbd6fa6fa0c986e82f83bcfaa8dd30c27c895b78173030 -address /run/containerd/containerd.sock 4 S root 39879 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:03 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 98f42915d08acd929bed377113f7a9e72b1026ff87790b366bd56afa6c32e893 -address /run/containerd/containerd.sock 4 S root 39914 1 0 80 0 - 2829 futex_ Oct15 ? 00:00:03 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 04606a708483b150e8b6ad3ccd34fc8d7f1ee8ca54a830211dfe366346556e82 -address /run/containerd/containerd.sock 4 S root 40180 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:12 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id c2469551e412cc8b357b1990ccdfe48e4996a42f2b505f65cfb88928fa932d44 -address /run/containerd/containerd.sock 4 S root 40229 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:13 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 9cc604741074d6f92ca6ad819f2c334cc14e5febbe213d30c880c352a5c4a126 -address /run/containerd/containerd.sock 4 S root 40260 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:12 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 44a16229f3aa90bb3925152715bfb7acbc58ef0cca4e4cb923a34d3c57bdfc66 -address /run/containerd/containerd.sock 4 S root 40287 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:11 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f09a3b37ef24e98e5966422cc21398cb5b937b56d604a436441fdf4b5c4d8a53 -address /run/containerd/containerd.sock 4 S root 40895 1 5 80 0 - 1567151 futex_ Oct15 ? 
00:58:57 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=/run/containerd/containerd.sock --cgroup-driver=systemd 4 S root 41268 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:04 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 4226cdd5d357d36a8d4727ffe2a0fb17b305aa720f6c2e5ca3d6213702f71fb8 -address /run/containerd/containerd.sock 4 S root 41341 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:12 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 625a5a2542d87024d4d0b5c198d09d7dbfd690891154144511043f9de94f8fb8 -address /run/containerd/containerd.sock 4 S root 41567 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:03 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 67a906f68abb8b942e2e27ab4b144c0b42a4bd5cfa69ff5a34d312cd728726fe -address /run/containerd/containerd.sock 4 S root 41651 1 0 80 0 - 3181 futex_ Oct15 ? 00:00:03 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7f76ee366fdda2d50c266ef416582f07af53b41d757266c40df6ade0339c0474 -address /run/containerd/containerd.sock 4 S root 42604 1 0 80 0 - 2246 futex_ Oct13 ? 00:00:11 containerd-shim -namespace moby -workdir /var/lib/containerd/io.containerd.runtime.v1.linux/moby/5fc9ee9cd73f5f3b737c65d08c02d622e508846f076be133e3620815682d6863 -address /run/containerd/containerd.sock -containerd-binary /usr/bin/containerd -runtime-root /var/run/docker/runtime-runc 0 S root 44377 1 0 80 0 - 328983 futex_ Oct15 ? 00:02:15 /usr/local/bin/containerd-shim-kata-v2 -namespace k8s.io -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id 7d5e7ea32ba10ed89e2d87b9b025e371ba7a839c223d62c6d34ff7512b47a586 -debug 0 S root 53651 1 0 80 0 - 347416 futex_ 08:37 ? 
00:00:49 /usr/local/bin/containerd-shim-kata-v2 -namespace k8s.io -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id 41005e66e4c59ae11c6533c279a2868f7912634995212dd5d131e3371d8f93e7 -debug 4 S root 96887 1 1 80 0 - 2069709 futex_ Oct15 ? 00:14:08 /usr/bin/containerd 4 S root 96888 1 0 80 0 - 1172301 futex_ Oct15 ? 00:03:39 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --add-runtime kata-runtime=/usr/local/bin/kata-runtime

root@ubuntu:/usr/share/defaults/kata-containers# ls /usr/local/bin/
containerd-shim-kata-v2  kata-runtime      qemu-io
ivshmem-client           kata-runtime.bak  qemu-nbd
ivshmem-server           qemu-ga           qemu-system-aarch64
kata-collect-data.sh     qemu-img

root@ubuntu:/etc/containerd# ps -elf | grep containerd 0 S root 33334 1 0 80 0 - 27454 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 13faedcf4cf2d8501b8dd240a7c886e55bcdb911178b666c4ead6aa29f7ba4a7 -address /run/containerd/containerd.sock 0 S root 33451 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id b811984e3cda7c014a70fcb2f6e1a61292af2b7deb93f740a3c51c9e7a52b7fe -address /run/containerd/containerd.sock 0 S root 33486 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 16288c7c88cb53922e96af85c73d3bc178d1be522ec3cd9578dfa3de55c60030 -address /run/containerd/containerd.sock 0 S root 33748 1 0 80 0 - 27454 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 8de3978642c12908b0f0cdc6bfa8dbab3156979bb280c40b4c3ebc8e33080937 -address /run/containerd/containerd.sock 0 S root 33824 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f6cbd0a77bee3a313fd8f8417166315a5c0f19212caba3f4aa95061bb943dfe1 -address /run/containerd/containerd.sock 0 S root 33945 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 31246780d074d791990a0c68b562f84bdd821834fb53def9bdf0183f978aaa99 -address /run/containerd/containerd.sock 0 S root 34005 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id ad48842a9a8d481fbd20a936860ec9243a4892f2e6e9c064ab5093cf083ba0fd -address /run/containerd/containerd.sock 0 S root 34030 1 0 80 0 - 27806 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7dc3992c40a06c1f60b5d115e1d6bac8c16950e6b0aec6c891a20e85bf906e7f -address /run/containerd/containerd.sock 0 S root 34079 1 0 80 0 - 27806 futex_ 09:47 ? 
00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 6a57995cd991dd4e2c41c1421ab53880cd3d39a84f804194ed4fd1bc80a85cc4 -address /run/containerd/containerd.sock 4 S root 48603 1 5 80 0 - 993600 futex_ 13:14 ? 00:00:01 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=/run/containerd/containerd.sock --resolv-conf=/run/systemd/resolve/resolv.conf --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock

root@ubuntu:/home/ubuntu# ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/busybox:latest hello sh
/ # ls
bin dev etc home proc root run sys tmp usr var
/ # quit
sh: quit: not found
/ # exit
root@ubuntu:/home/ubuntu#

ubuntu@ubuntu:~$ ps -elf | grep containerd 0 S root 33334 1 0 80 0 - 27454 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 13faedcf4cf2d8501b8dd240a7c886e55bcdb911178b666c4ead6aa29f7ba4a7 -address /run/containerd/containerd.sock 0 S root 33451 1 0 80 0 - 27806 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id b811984e3cda7c014a70fcb2f6e1a61292af2b7deb93f740a3c51c9e7a52b7fe -address /run/containerd/containerd.sock 0 S root 33486 1 0 80 0 - 27806 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 16288c7c88cb53922e96af85c73d3bc178d1be522ec3cd9578dfa3de55c60030 -address /run/containerd/containerd.sock 0 S root 33748 1 0 80 0 - 27454 - 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 8de3978642c12908b0f0cdc6bfa8dbab3156979bb280c40b4c3ebc8e33080937 -address /run/containerd/containerd.sock 0 S root 33824 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f6cbd0a77bee3a313fd8f8417166315a5c0f19212caba3f4aa95061bb943dfe1 -address /run/containerd/containerd.sock 0 S root 33945 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 31246780d074d791990a0c68b562f84bdd821834fb53def9bdf0183f978aaa99 -address /run/containerd/containerd.sock 0 S root 34005 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id ad48842a9a8d481fbd20a936860ec9243a4892f2e6e9c064ab5093cf083ba0fd -address /run/containerd/containerd.sock 0 S root 34030 1 0 80 0 - 27806 - 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7dc3992c40a06c1f60b5d115e1d6bac8c16950e6b0aec6c891a20e85bf906e7f -address /run/containerd/containerd.sock 0 S root 34079 1 0 80 0 - 27806 - 09:47 ? 
00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 6a57995cd991dd4e2c41c1421ab53880cd3d39a84f804194ed4fd1bc80a85cc4 -address /run/containerd/containerd.sock 0 S root 50495 56922 0 80 0 - 271362 - 13:16 pts/3 00:00:00 ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/busybox:latest hello sh 0 S root 50518 1 1 80 0 - 328755 - 13:17 ? 00:00:00 /usr/local/bin/containerd-shim-kata-v2 -namespace default -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id hello -debug 0 S ubuntu 50634 41843 0 80 0 - 1097 pipe_w 13:17 pts/4 00:00:00 grep --color=auto containerd 4 S root 59577 1 1 80 0 - 1496304 - 10:23 ? 00:02:52 /usr/bin/containerd

root@ubuntu:/etc/containerd# ps -elf | grep containerd 0 S root 33334 1 0 80 0 - 27454 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 13faedcf4cf2d8501b8dd240a7c886e55bcdb911178b666c4ead6aa29f7ba4a7 -address /run/containerd/containerd.sock 0 S root 33451 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id b811984e3cda7c014a70fcb2f6e1a61292af2b7deb93f740a3c51c9e7a52b7fe -address /run/containerd/containerd.sock 0 S root 33486 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 16288c7c88cb53922e96af85c73d3bc178d1be522ec3cd9578dfa3de55c60030 -address /run/containerd/containerd.sock 0 S root 33748 1 0 80 0 - 27454 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 8de3978642c12908b0f0cdc6bfa8dbab3156979bb280c40b4c3ebc8e33080937 -address /run/containerd/containerd.sock 0 S root 33824 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f6cbd0a77bee3a313fd8f8417166315a5c0f19212caba3f4aa95061bb943dfe1 -address /run/containerd/containerd.sock 0 S root 33945 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 31246780d074d791990a0c68b562f84bdd821834fb53def9bdf0183f978aaa99 -address /run/containerd/containerd.sock 0 S root 34005 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id ad48842a9a8d481fbd20a936860ec9243a4892f2e6e9c064ab5093cf083ba0fd -address /run/containerd/containerd.sock 0 S root 34030 1 0 80 0 - 27806 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7dc3992c40a06c1f60b5d115e1d6bac8c16950e6b0aec6c891a20e85bf906e7f -address /run/containerd/containerd.sock 0 S root 34079 1 0 80 0 - 27806 futex_ 09:47 ? 
00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 6a57995cd991dd4e2c41c1421ab53880cd3d39a84f804194ed4fd1bc80a85cc4 -address /run/containerd/containerd.sock 4 S root 52666 1 1 80 0 - 590735 futex_ 13:20 ? 00:00:00 /usr/bin/containerd 0 S root 52951 37885 1 80 0 - 35131 futex_ 13:20 pts/1 00:00:00 kubeadm init --kubernetes-version=v1.18.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.10.16.82 --ignore-preflight-errors=all --cri-socket /run/containerd/containerd.sock 4 S root 53019 52951 0 80 0 - 30113 ep_pol 13:20 pts/1 00:00:00 crictl -r unix:///run/containerd/containerd.sock pull k8s.gcr.io/kube-apiserver:v1.18.1 0 S root 53036 56922 0 80 0 - 1097 pipe_w 13:20 pts/3 00:00:00 grep --color=auto containerd

root@ubuntu:/etc/containerd# ps -elf | grep containerd 0 S root 33334 1 0 80 0 - 27454 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 13faedcf4cf2d8501b8dd240a7c886e55bcdb911178b666c4ead6aa29f7ba4a7 -address /run/containerd/containerd.sock 0 S root 33451 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id b811984e3cda7c014a70fcb2f6e1a61292af2b7deb93f740a3c51c9e7a52b7fe -address /run/containerd/containerd.sock 0 S root 33486 1 0 80 0 - 27806 futex_ 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 16288c7c88cb53922e96af85c73d3bc178d1be522ec3cd9578dfa3de55c60030 -address /run/containerd/containerd.sock 0 S root 33748 1 0 80 0 - 27454 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 8de3978642c12908b0f0cdc6bfa8dbab3156979bb280c40b4c3ebc8e33080937 -address /run/containerd/containerd.sock 0 S root 33824 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f6cbd0a77bee3a313fd8f8417166315a5c0f19212caba3f4aa95061bb943dfe1 -address /run/containerd/containerd.sock 0 S root 33945 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 31246780d074d791990a0c68b562f84bdd821834fb53def9bdf0183f978aaa99 -address /run/containerd/containerd.sock 0 S root 34005 1 0 80 0 - 27806 futex_ 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id ad48842a9a8d481fbd20a936860ec9243a4892f2e6e9c064ab5093cf083ba0fd -address /run/containerd/containerd.sock 0 S root 34030 1 0 80 0 - 27806 futex_ 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7dc3992c40a06c1f60b5d115e1d6bac8c16950e6b0aec6c891a20e85bf906e7f -address /run/containerd/containerd.sock 0 S root 34079 1 0 80 0 - 27806 futex_ 09:47 ? 
00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 6a57995cd991dd4e2c41c1421ab53880cd3d39a84f804194ed4fd1bc80a85cc4 -address /run/containerd/containerd.sock 4 S root 52666 1 0 80 0 - 1015398 futex_ 13:20 ? 00:00:02 /usr/bin/containerd 0 S root 52951 37885 1 80 0 - 35323 ep_pol 13:20 pts/1 00:00:09 kubeadm init --kubernetes-version=v1.18.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.10.16.82 --ignore-preflight-errors=all --cri-socket /run/containerd/containerd.sock 4 S root 54588 1 11 80 0 - 938109 futex_ 13:35 ? 00:00:01 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=/run/containerd/containerd.sock --resolv-conf=/run/systemd/resolve/resolv.conf --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock 0 S root 54949 56922 0 80 0 - 1097 pipe_w 13:35 pts/3 00:00:00 grep --color=auto containerd

Binary Naming

Users specify the runtime they wish to use when creating a container. The runtime can also be changed via a container update.

> ctr run --runtime io.containerd.runc.v1

A runtime name such as io.containerd.runc.v1 carries both the name and the version of the runtime. containerd translates it into a binary name for the shim:

io.containerd.runc.v1 -> containerd-shim-runc-v1

containerd keeps the containerd-shim-* prefix so that users can run ps aux | grep containerd-shim to see the shims running on their system.
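The translation can be sketched as a small shell function. This is only an illustration: the function name shim_binary_name is hypothetical, and it assumes that containerd uses the last two dot-separated fields of the runtime name (name and version). That assumption fits both mappings visible in these notes: io.containerd.runc.v1 starts containerd-shim-runc-v1, and io.containerd.run.kata.v2 starts containerd-shim-kata-v2.

```shell
# Sketch only: derive the shim binary name from a runtime name.
# Assumption: the last two dot-separated fields are <name> and <version>.
shim_binary_name() {
    runtime="$1"
    version="${runtime##*.}"   # text after the last dot, e.g. v1
    rest="${runtime%.*}"       # everything before the last dot
    name="${rest##*.}"         # last field of the remainder, e.g. runc
    printf 'containerd-shim-%s-%s\n' "$name" "$version"
}
```

For example, shim_binary_name io.containerd.run.kata.v2 prints containerd-shim-kata-v2. Names with fewer than two fields are not handled by this sketch.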

root@ubuntu:/etc/containerd# ctr run --runtime io.containerd.runc.v1 -t --rm docker.io/library/busybox:latest hello sh
/ # ls
bin dev etc home proc root run sys tmp usr var
/ #

ubuntu@ubuntu:~$ ps -elf | grep containerd
0 S root 33334 1 0 80 0 - 27454 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 13faedcf4cf2d8501b8dd240a7c886e55bcdb911178b666c4ead6aa29f7ba4a7 -address /run/containerd/containerd.sock
0 S root 33451 1 0 80 0 - 27806 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id b811984e3cda7c014a70fcb2f6e1a61292af2b7deb93f740a3c51c9e7a52b7fe -address /run/containerd/containerd.sock
0 S root 33486 1 0 80 0 - 27806 - 12:55 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 16288c7c88cb53922e96af85c73d3bc178d1be522ec3cd9578dfa3de55c60030 -address /run/containerd/containerd.sock
0 S root 33748 1 0 80 0 - 27454 - 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 8de3978642c12908b0f0cdc6bfa8dbab3156979bb280c40b4c3ebc8e33080937 -address /run/containerd/containerd.sock
0 S root 33824 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id f6cbd0a77bee3a313fd8f8417166315a5c0f19212caba3f4aa95061bb943dfe1 -address /run/containerd/containerd.sock
0 S root 33945 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 31246780d074d791990a0c68b562f84bdd821834fb53def9bdf0183f978aaa99 -address /run/containerd/containerd.sock
0 S root 34005 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id ad48842a9a8d481fbd20a936860ec9243a4892f2e6e9c064ab5093cf083ba0fd -address /run/containerd/containerd.sock
0 S root 34030 1 0 80 0 - 27806 - 12:56 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 7dc3992c40a06c1f60b5d115e1d6bac8c16950e6b0aec6c891a20e85bf906e7f -address /run/containerd/containerd.sock
0 S root 34079 1 0 80 0 - 27806 - 09:47 ? 00:00:01 /usr/bin/containerd-shim-runc-v1 -namespace k8s.io -id 6a57995cd991dd4e2c41c1421ab53880cd3d39a84f804194ed4fd1bc80a85cc4 -address /run/containerd/containerd.sock
4 S root 52666 1 0 80 0 - 1162990 - 13:20 ? 00:00:04 /usr/bin/containerd
0 S root 57554 56922 0 80 0 - 289859 - 13:39 pts/3 00:00:00 ctr run --runtime io.containerd.runc.v1 -t --rm docker.io/library/busybox:latest hello sh
0 S root 57577 1 0 80 0 - 27774 - 13:39 ? 00:00:00 /usr/bin/containerd-shim-runc-v1 -namespace default -id hello -address /run/containerd/containerd.sock
4 S root 57675 1 4 80 0 - 568937 - 13:40 ? 00:00:00 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=/run/containerd/containerd.sock --resolv-conf=/run/systemd/resolve/resolv.conf --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock
0 S ubuntu 57803 41843 0 80 0 - 1097 - 13:40 pts/4 00:00:00 grep --color=auto containerd
ubuntu@ubuntu:~$

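The following executables can be seen under /usr/bin/:

kata-runtime: the kata-containers runtime

There are also three binaries whose names begin with containerd:

containerd: the containerd runtime, provided officially by Docker

containerd-shim: the shim, provided officially by Docker (if the kata runtime is combined with this shim, it seems that what runs is Kata v1?)

containerd-shim-kata-v2: the shim that connects kata-containers to containerd; it is installed by kata-containers.

Running

Running a container with kata-runtime

kata-containers provides a command called kata-runtime, which is essentially a low-level container runtime similar to runC. It only manages the lifecycle of a container (start, stop, destroy and other basic operations) and cannot download images; higher-level features such as image handling are normally provided by containerd.

So if we run a container directly with kata-runtime, we have to prepare the container image by hand.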

# Prepare a busybox image

# Build the rootfs

mkdir rootfs

docker export $(docker create busybox) | tar -xf - -C rootfs

# Generate config.json

runc spec

# Run the container with kata-runtime

kata-runtime run busybox

A container started this way runs Kata Containers v1 by default (that is, there is a kata-proxy process).

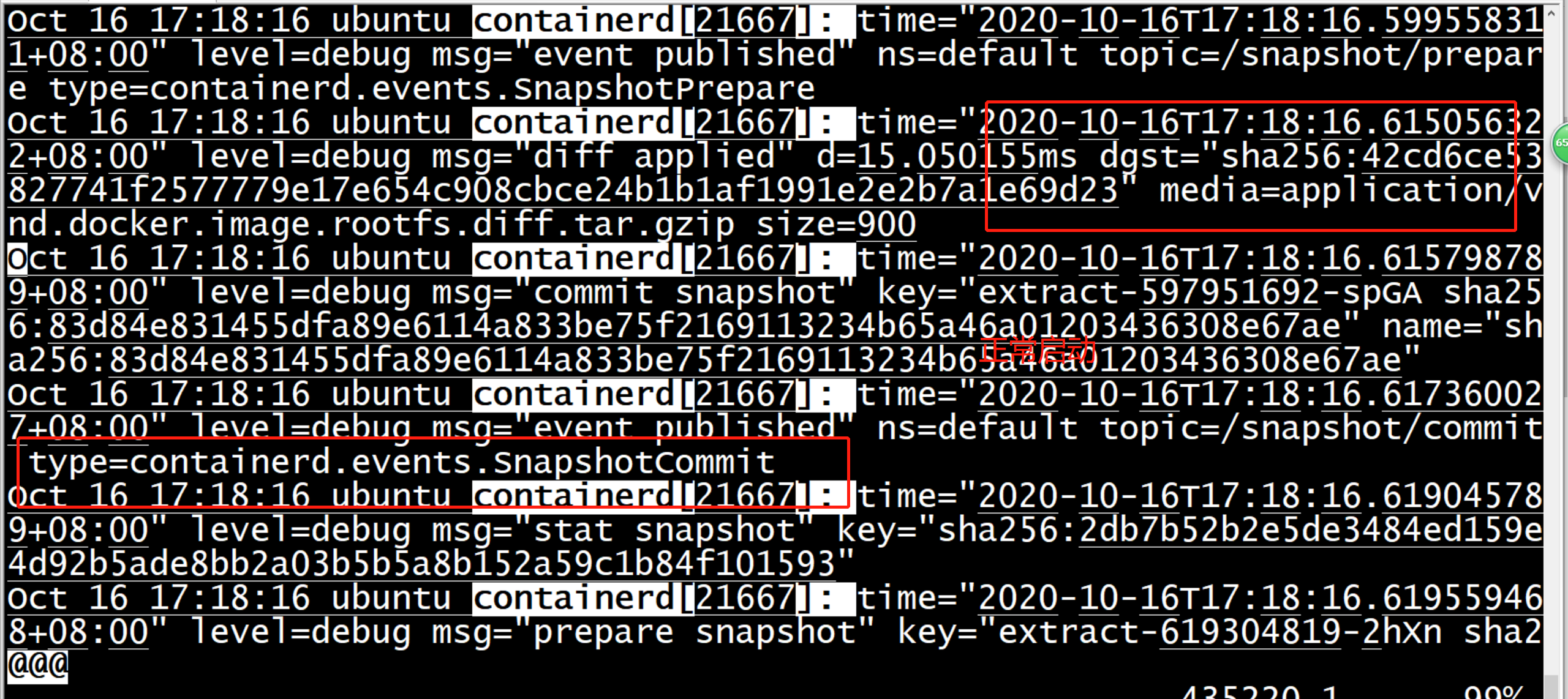

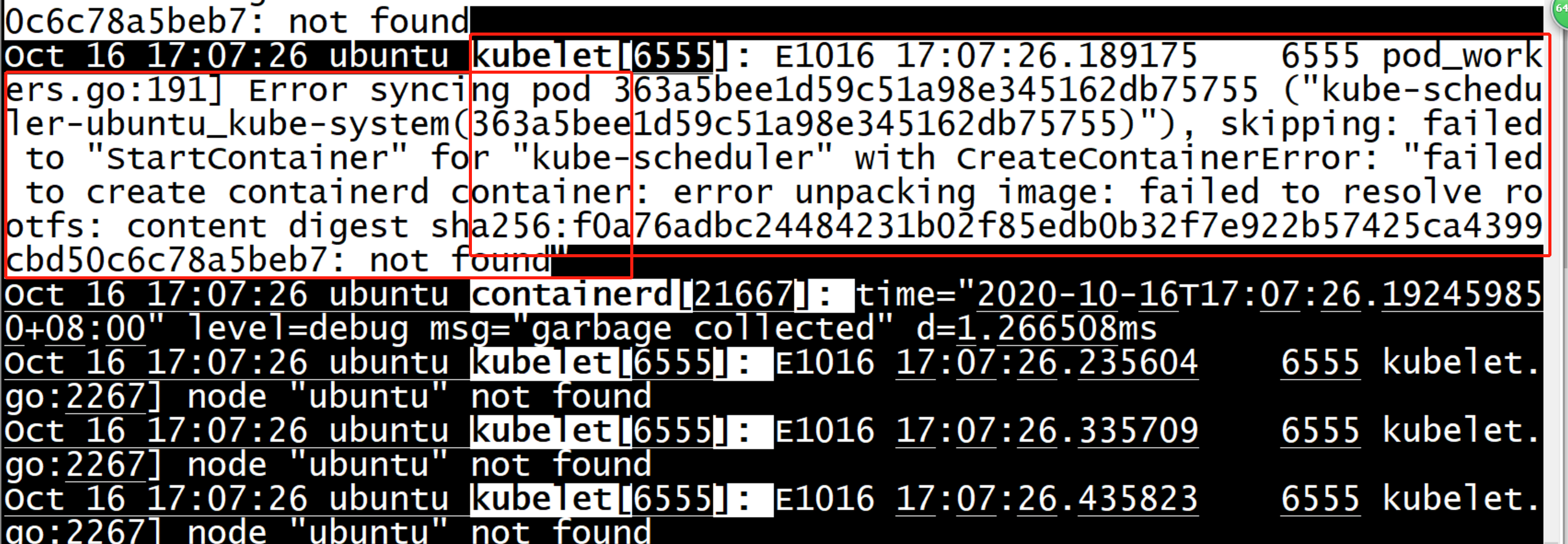

Running Kata containers with containerd

With containerd, the key is to configure the underlying runtime so that containerd knows to use the Kata runtime. There are two ways to do this:

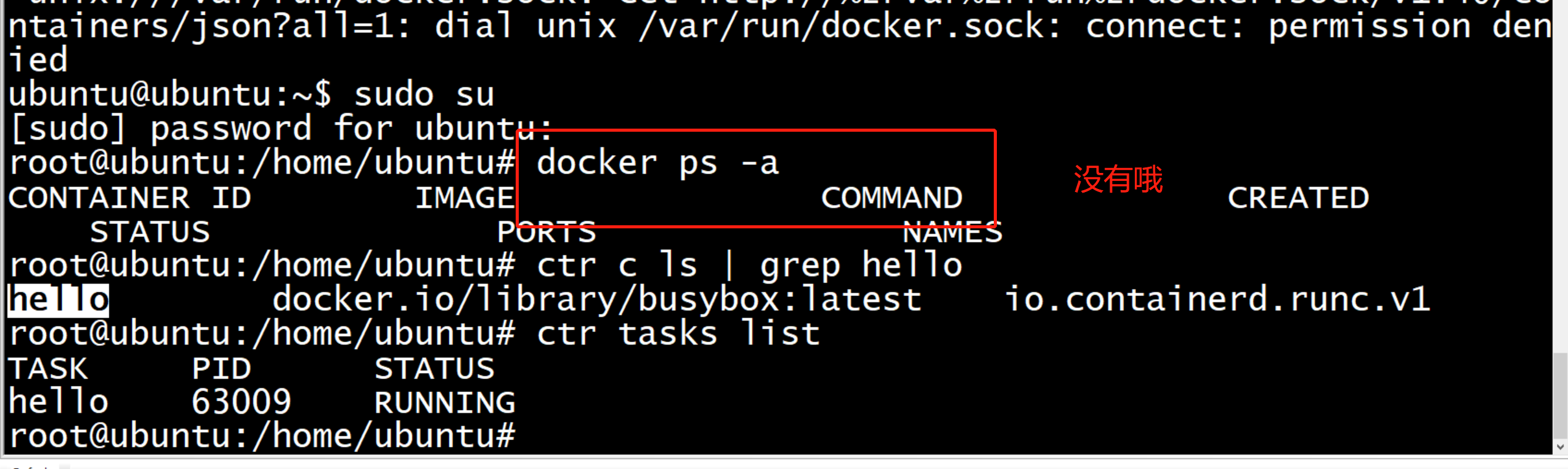

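Specifying the runtime on the ctr command line

sudo ctr image pull docker.io/library/busybox:latest

sudo ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/busybox:latest hello sh

Note that io.containerd.run.kata.v2 selects the v2 shim; I do not know how to select v1 from the command line.

Modifying the containerd config file

Default config file: /etc/containerd/config.toml.

Note: if you later want to use the CRI interface, that is, have kubelet connect to containerd over CRI, you must remove the disabled_plugins = ["cri"] line at the top of the file. The following two links can be used as a reference for modifying the containerd config.

Setting the default runtime to Kata v1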

[plugins.linux]

# shim binary name/path

shim = "containerd-shim"

# runtime binary name/path

runtime = "/usr/bin/kata-runtime"

# do not use a shim when starting containers, saves on memory but

# live restore is not supported

no_shim = false

# display shim logs in the containerd daemon's log output

shim_debug = true

Setting the default runtime to Kata v2

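Note: the configuration below fails with the error ctr: read unix @->@/containerd-shim/default/hello_5/shim.sock: read: connection reset by peer: unknown. The cause is unknown.

So to run Kata v2, it is better to specify the runtime on the ctr command line as described above.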

[plugins.linux]

# shim binary name/path

shim = "containerd-shim-kata-v2"

# runtime binary name/path

runtime = "/usr/bin/kata-runtime"

# do not use a shim when starting containers, saves on memory but

# live restore is not supported

no_shim = false

# display shim logs in the containerd daemon's log output

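shim_debug = true

Note: after any change to the config file, you must run sudo service containerd restart so that the config is reloaded.

Run the container with ctr. Note that this time the runtime does not need to be given on the command line.

sudo ctr image pull docker.io/library/busybox:latest

sudo ctr run -t --rm docker.io/library/busybox:latest hello sh

Checking how many processes appear after starting a Kata container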

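How many processes are actually added to the system once a Kata container has started?

# List all processes in the system

# PID is the process ID

# PPID is the parent process ID; it shows which process created a given process

ps -AF

# List all threads in the system

# LWP: thread ID

# NLWP: total number of threads in the process

ps -AFL H

Comparing the processes and threads before and after startup shows that the following processes are new: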

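1. The sudo process (the command starts with sudo, so sudo runs first and then spawns a new process to execute the rest of the command line)

2. The kata-runtime/ctr/containerd-shim command-line process.

3. The qemu process; with a single CPU it has four threads

4. kvm-nx-lpage-re

5. kvm-pit

6. kata-proxy

7. kata-shim

With v2 there are no kata-proxy and kata-shim processes.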
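The before/after comparison described above can be sketched with two small helpers. The function names snapshot_procs and new_procs are hypothetical, and the sketch assumes a Linux procps ps that accepts -eo pid=,args=.

```shell
# Sketch only: record the process list, then show what is new in a later snapshot.

# snapshot_procs FILE: write one "PID COMMAND" line per process, sorted for comm.
snapshot_procs() {
    ps -eo pid=,args= | sed 's/^ *//' | sort > "$1"
}

# new_procs BEFORE AFTER: print the lines that appear only in AFTER.
new_procs() {
    comm -13 "$1" "$2"
}
```

A possible usage is snapshot_procs /tmp/before, start the Kata container, snapshot_procs /tmp/after, then new_procs /tmp/before /tmp/after. The ps, sed and sort processes of the second snapshot may also show up as new entries and can be ignored.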

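The following questions are still open:

1. Is there one shim per container, or one shim per container process?

2. The documentation says that v2 has no kata-proxy, but it does not say that there is no shim. Or has kata-shim been folded into containerd-shim-kata-v2 in v2? This is not yet clear.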

977 37392 container_manager_linux.go:271] Creating Container Manager object based on Node Config: {RuntimeCgroup
445 37392 topology_manager.go:126] [topologymanager] Creating topology manager with none policy
472 37392 container_manager_linux.go:301] [topologymanager] Initializing Topology Manager with none policy
485 37392 container_manager_linux.go:306] Creating device plugin manager: true
867 37392 remote_runtime.go:59] parsed scheme: ""
889 37392 remote_runtime.go:59] scheme "" not registered, fallback to default scheme
977 37392 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{/run/containerd/containerd.sock <nil>
998 37392 clientconn.go:933] ClientConn switching balancer to "pick_first"
088 37392 remote_image.go:50] parsed scheme: ""
103 37392 remote_image.go:50] scheme "" not registered, fallback to default scheme
121 37392 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{/run/containerd/containerd.sock <nil>
133 37392 clientconn.go:933] ClientConn switching balancer to "pick_first"
214 37392 kubelet.go:292] Adding pod path: /etc/kubernetes/manifests
270 37392 kubelet.go:317] Watching apiserver

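Adding the configuration to k3s's containerd

The k8s cluster I currently use runs on k3s, so the configuration goes into the containerd that ships with k3s rather than into a regular containerd.

According to Rancher Docs: Advanced Options and Configuration, the config file is located at /var/lib/rancher/k3s/agent/etc/containerd/config.toml, and if /var/lib/rancher/k3s/agent/etc/containerd/config.toml.tmpl exists, that file is read in preference. I therefore copied the original config file and appended to the copy.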

(snip)

[plugins.cri.containerd.runtimes.kata]

runtime_type = "io.containerd.kata.v2"

[plugins.cri.containerd.runtimes.kata.options]

ConfigPath = "/usr/share/defaults/kata-containers/configuration.toml"

Creating a RuntimeClass on k8s

The blog post does not mention it, but a RuntimeClass corresponding to Kata Containers has to be created on k8s.

A RuntimeClass is a simple object with only metadata.name and handler, as shown below.

When containerd is the runtime, handler is the ${HANDLER_NAME} part of [plugins.cri.containerd.runtimes.${HANDLER_NAME}], so here the correct value is kata.

apiVersion: node.k8s.io/v1beta1
kind: RuntimeClass
metadata:
  name: kata
handler: kata
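To see which handler names a containerd config actually defines, a sketch like the following could be used. The function name list_cri_handlers is hypothetical, and it assumes the legacy section form shown in these notes, [plugins.cri.containerd.runtimes.NAME]; other layouts of the plugin key are not matched.

```shell
# Sketch only: print the runtime handler names defined in a containerd config file.
# Assumption: sections look like [plugins.cri.containerd.runtimes.NAME].
list_cri_handlers() {
    # Keep only section headers that end right after NAME, so that nested
    # tables such as ...runtimes.kata.options are skipped.
    sed -n 's/^[[:space:]]*\[plugins\.cri\.containerd\.runtimes\.\([^].]*\)][[:space:]]*$/\1/p' "$1"
}
```

Each printed name is a value that a RuntimeClass handler field could refer to, for example list_cri_handlers /var/lib/rancher/k3s/agent/etc/containerd/config.toml.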

Trying it out

I start a container directly with ctr, and also run a k8s Pod as a Kata Container.

# Start a container through k3s's containerd with kata as the runtime

tk3fftk@nuc1:~$ sudo k3s ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/busybox:latest hello sh

/ #

# Check the running container from another shell; the runtime is kata

tk3fftk@nuc1:~$ sudo k3s ctr c ls | grep hello

hello docker.io/library/busybox:latest io.containerd.run.kata.v2

# Dump a Pod YAML

$ kubectl run --image nginx nginx --dry-run=client -o yaml > nginx_pod.yaml

# Add spec.runtimeClassName; the YAML below is the result

$ cat nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
spec:
  runtimeClassName: kata
  containers:
  - image: nginx
    name: nginx
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

# Deploy

$ kubectl apply -f nginx_pod.yaml

# Check on the worker; the pause container and the nginx container both use the kata runtime

tk3fftk@nuc1:~$ sudo k3s ctr containers list | grep kata

910d6bf06948071677690a9cc482455617c7d17e386a0fe3a02c15762a0a7f88 docker.io/library/nginx@sha256:c870bf53de0357813af37b9500cb1c2ff9fb4c00120d5fe1d75c21591293c34d io.containerd.kata.v2

95dbaab3534ba6a87036d2f0057cad600734466293baec222b998817d3b84b40 docker.io/rancher/pause:3.1 io.containerd.kata.v2

OK, in this example we'll run Kata Containers on an existing, working on-premise Kubernetes cluster created with the kubeadm utility. Things that we need:

A configured and working Kubernetes cluster, v1.14 or v1.15.

One or more worker nodes in the cluster must be able to run Qemu as the virtualization platform, so these nodes must be bare-metal and have a real CPU with virtualization support. You can check this by running this command on your Kubernetes worker nodes:

kubernetes-node# cat /proc/cpuinfo | grep vmx

Kata Containers can only run with CRI-O or containerd, not with dockershim, which is the default CRI implementation in many Kubernetes clusters. If you didn't specify another CRI runtime when you created the cluster, you will get dockershim on all your Kubernetes nodes. Try to run:

kubernetes-node# docker ps

If you see any output, this host is using the dockershim CRI runtime.

First, let's change the CRI runtime on the nodes that will run Kata containers. I'll use containerd for this example. containerd is very often installed together with Docker CE as an additional package; on my Debian hosts it was already installed:

kubernetes-node# dpkg -l | grep container
ii containerd.io 1.2.5-1 amd64 An open and reliable container runtime
ii docker-ce 5:18.09.4~3-0~debian-stretch amd64 Docker: the open-source application container engine
ii docker-ce-cli 5:18.09.4~3-0~debian-stretch amd64 Docker CLI: the open-source application container engine

If not, you can install it manually as a package or from source code.

If the node that we'll migrate to containerd has any running production pods, first drain this node or migrate all pods to other nodes. Then log in to the node and remove the cri plugin from the disabled_plugins list in the containerd configuration file /etc/containerd/config.toml.

before:
disabled_plugins = ["cri"]
after:
disabled_plugins = [""]

Next, we need to prepare this node for containerd:

kubernetes-node# modprobe overlay
kubernetes-node# modprobe br_netfilter
kubernetes-node# cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
kubernetes-node# sysctl --system

Now we need to configure kubelet on this node to use containerd:

kubernetes-node# cat << EOF | sudo tee /etc/systemd/system/kubelet.service.d/0-containerd.conf
[Service]
Environment="KUBELET_EXTRA_ARGS=--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"
EOF

On some systems, you may also need to add a default config for kubelet:

kubernetes-node# vi /etc/default/kubelet
KUBELET_EXTRA_ARGS= --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock

After that, restart the containerd and kubelet services on this node:

kubernetes-node# systemctl daemon-reload
kubernetes-node# systemctl restart containerd
kubernetes-node# systemctl restart kubelet

Now wait a bit until kubelet has switched the runtime engine, and then run the docker ps command again:

kubernetes-node# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

The output must be empty, which means Kubernetes has started using containerd instead of Docker as the container runtime. You can also check this by running kubectl get nodes -o wide:

kubectl# kubectl get nodes -o wide
NAME STATUS ..... CONTAINER-RUNTIME
kubernetes-node Ready ..... containerd://1.2.5

To list containers on this node, you must now use the ctr command:

kubernetes-node# ctr --namespace k8s.io containers ls

Good, now kubelet and containerd are configured correctly and we can move on and install Kata Containers inside our cluster.
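Before restarting the services, it can help to confirm that the cri plugin is no longer disabled. A minimal sketch, assuming the disabled_plugins list sits on a single line as in the before/after example; the function name cri_is_disabled is hypothetical:

```shell
# Sketch only: succeed (exit status 0) if the config file still lists "cri"
# in a single-line disabled_plugins entry.
cri_is_disabled() {
    grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$1"
}
```

For example, cri_is_disabled /etc/containerd/config.toml && echo "cri is still disabled" prints a warning only while the plugin is disabled. A list spread over several lines would not be detected by this sketch.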
There are prepared deployments and some documentation about how to do this in the kata-deploy section of the repository. Clone it and use the readme file for additional information. Run the following commands to deploy Kata Containers support into your Kubernetes cluster:

kubectl# kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/kata-rbac.yaml
kubectl# kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/kata-deploy.yaml

# For Kubernetes 1.14 and 1.15 run:
kubectl# kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/k8s-1.14/kata-qemu-runtimeClass.yaml

After the Kata Containers installation, the nodes that are able to run it must carry a specific label (katacontainers.io/kata-runtime=true):

kubectl# kubectl get nodes --show-labels | grep kata
kubernetes-node Ready <none> 5d23h v1.15.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,katacontainers.io/kata-runtime=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=kubernetes-node,kubernetes.io/os=linux

If you see this label on the previously configured nodes, you can run Kata containers now. To test it, we can use the example deployment from the kata-deploy repo:

kubectl# kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/examples/test-deploy-kata-qemu.yaml

Congrats, you now have your first container running on top of a real VM with Qemu virtualization. Good luck.