1.

2. Error log

The command was: bin/flume-ng agent --name a2 --conf conf/ --conf-file job/file-hdfs.conf

Info: Sourcing environment configuration script /opt/modules/flume/conf/flume-env.sh

Info: Including Hive libraries found via () for Hive access

+ exec /opt/modules/jdk1.8.0_121/bin/java -Xmx20m -cp '/opt/modules/flume/conf:/opt/modules/flume/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application --name a2 --conf-file job/file-hdfs.conf

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:635)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:619)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2653)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:92)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2687)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2669)

3. Some improvement

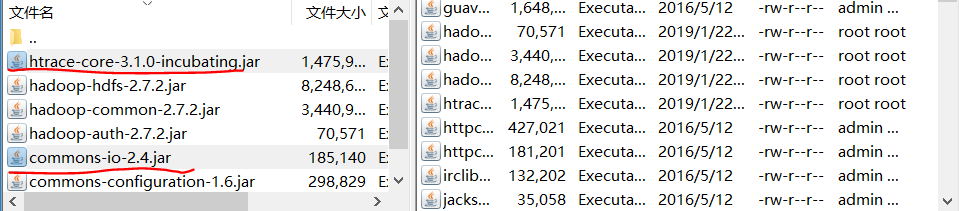

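Put the two jars shown in the figure into the lib directory under flume.

Re-ran Flume: no error this time, but nothing happened either, as shown in the figure.

Started Hive at the same time, but no /flume/%Y%m%d/%H directory was created on HDFS.

Problem still unresolved!!!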

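4. Further experiments

Removed those two jars and also shut down the NameNode on machine 02 (the one the sink in the conf points to), then started Flume on machine 01 again. No error occurred, but there was still no flume directory on HDFS.

Guess at the cause: it may run without errors because the JVM still remembers the old variables???

Problem still unresolved!!!

Case 3: the same thing happened, and there was no flume folder on HDFS.

Added console log output to the command: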

bin/flume-ng agent --conf conf/ --name a3 --conf-file job/dir-hdfs.conf -Dflume.root.logger=INFO,console

This revealed the following error log:

2019-01-23 06:44:20,627 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:182)] Starting Source r3

2019-01-23 06:44:20,628 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.source.SpoolDirectorySource.start(SpoolDirectorySource.java:83)] SpoolDirectorySource source starting with directory: /opt/module/flume/upload

2019-01-23 06:44:20,634 (lifecycleSupervisor-1-4) [ERROR - org.apache.flume.lifecycle.LifecycleSupervisor$MonitorRunnable.run(LifecycleSupervisor.java:251)] Unable to start EventDrivenSourceRunner: { source:Spool Directory source r3: { spoolDir: /opt/module/flume/upload } } - Exception follows.

java.lang.IllegalStateException: Directory does not exist: /opt/module/flume/upload

at com.google.common.base.Preconditions.checkState(Preconditions.java:145)

at org.apache.flume.client.avro.ReliableSpoolingFileEventReader.<init>(ReliableSpoolingFileEventReader.java:159)

at org.apache.flume.client.avro.ReliableSpoolingFileEventReader.<init>(ReliableSpoolingFileEventReader.java:85)

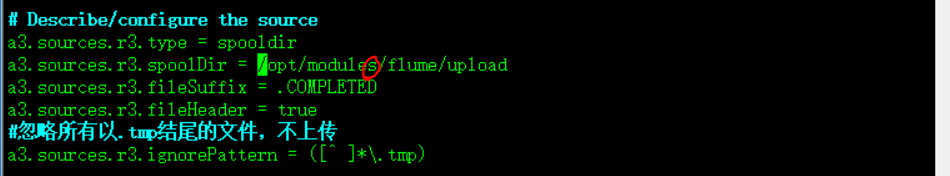

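The cause of the error in the log above: the spoolDir path in the conf is missing an s (/opt/module/flume/upload should be /opt/modules/flume/upload).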
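A path typo like this can be caught before starting the agent. The following is a minimal sketch, not part of Flume: the function name `check_spool_dirs` is made up for illustration, and it assumes the conf file uses the usual `<agent>.sources.<name>.spoolDir = <path>` property line.

```shell
# Hypothetical helper (not a Flume tool): verify that every spoolDir
# named in a Flume conf file exists as a directory.
check_spool_dirs() {
  conf_file="$1"
  status=0
  # Strip everything up to and including "spoolDir =" on non-comment lines.
  dirs=$(sed -n 's/^[^#]*\.spoolDir[[:space:]]*=[[:space:]]*//p' "$conf_file")
  while IFS= read -r dir; do
    [ -n "$dir" ] || continue
    if [ -d "$dir" ]; then
      echo "OK: $dir"
    else
      echo "MISSING: $dir"
      status=1
    fi
  done <<EOF
$dirs
EOF
  return "$status"
}
```

Running `check_spool_dirs job/dir-hdfs.conf` from the Flume directory would then print one OK or MISSING line per spoolDir and return a non-zero status if any directory is absent.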

After correcting it, re-ran Flume:

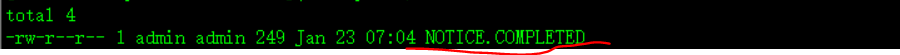

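At the same time, copied the NOTICE file into the upload directory (the log below shows it being renamed to NOTICE.COMPLETED).

However, the log printed by Flume reports: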

[ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

2019-01-23 07:05:33,717 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: SOURCE, name: r3 started

2019-01-23 07:05:34,064 (pool-5-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:324)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2019-01-23 07:05:34,064 (pool-5-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:433)] Preparing to move file /opt/modules/flume/upload/NOTICE to /opt/modules/flume/upload/NOTICE.COMPLETED

2019-01-23 07:05:34,085 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false

2019-01-23 07:05:34,388 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:231)] Creating hdfs://hadoop-senior02.itguigu.com:9000/flume/upload/20190123/07/upload-.1548198334086.tmp

2019-01-23 07:05:34,636 (hdfs-k3-call-runner-0) [WARN - org.apache.hadoop.util.NativeCodeLoader.<clinit>(NativeCodeLoader.java:62)] Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2019-01-23 07:05:34,837 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:635)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:619)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

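After some searching: flume/lib is missing htrace-core-3.1.0-incubating.jar. For a Maven project it can be installed via mvn install (see http://blog.51cto.com/enetq/1827028). I simply located the jar and copied it into lib/ by hand.

That solved the problem above. Continuing with cp NOTICE upload/, Flume reported another error. The log is as follows: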

java.lang.NoClassDefFoundError: org/apache/commons/io/Charsets

Info: Sourcing environment configuration script /opt/modules/flume/conf/flume-env.sh

Info: Including Hive libraries found via () for Hive access

+ exec /opt/modules/jdk1.8.0_121/bin/java -Xmx20m -cp '/opt/modules/flume/conf:/opt/modules/flume/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application -n a3 -f job/dir-hdfs.conf

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.NoClassDefFoundError: org/apache/commons/io/Charsets

at org.apache.hadoop.ipc.Server.<clinit>(Server.java:182)

at org.apache.hadoop.ipc.ProtobufRpcEngine.<clinit>(ProtobufRpcEngine.java:72)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

Fix: put commons-io-2.4.jar into the flume/lib/ directory.
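Both NoClassDefFoundError cases came down to a jar missing from flume/lib, so a quick listing of what is absent can save a restart cycle. This is a rough sketch: `missing_jars` is a hypothetical helper name, it matches on file name prefixes only (it does not inspect jar contents), and the Hadoop path in the comment is an assumption about where a Hadoop 2.x install keeps these jars.

```shell
# Hypothetical helper: print each jar name prefix that has no
# matching *.jar file in the given lib directory.
missing_jars() {
  lib_dir="$1"
  shift
  for prefix in "$@"; do
    # ls fails when the glob matches nothing, which is treated as "missing".
    if ! ls "$lib_dir/$prefix"*.jar >/dev/null 2>&1; then
      echo "$prefix"
    fi
  done
}

# Example (assumed paths, adjust to the actual install):
#   missing_jars /opt/modules/flume/lib htrace-core commons-io
#   cp "$HADOOP_HOME"/share/hadoop/common/lib/commons-io-2.4.jar /opt/modules/flume/lib/
```

An empty result means every listed prefix has at least one jar in place.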

Repeated the process once more and got an HDFS IO error. See the log:

2019-01-23 15:48:00,717 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:443)] HDFS IO error

java.net.ConnectException: Call From hadoop-senior01/192.168.10.20 to hadoop-senior02:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

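(Aside) file-hdfs.conf also had problems earlier, and its configuration is now mostly fixed. Running with this configuration produced the problem shown in the log: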

xecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2019-01-23 15:59:29,157 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:231)] Creating hdfs://hadoop-senior01/flume/20190123/15/logs-.1548230362663.tmp

2019-01-23 15:59:29,199 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:443)] HDFS IO error

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category WRITE is not supported in state standby

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:87)

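The sink cannot write to directories through the standby node of an HA pair, because the standby NameNode rejects WRITE operations. After pointing the path at the active NameNode, the Flume run succeeded!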
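To find out which NameNode is currently active before editing the sink path, `hdfs haadmin -getServiceState <serviceId>` can be queried for each NameNode. The sketch below wraps that in a loop. The function name `find_active_namenode`, the `HDFS_CMD` override, and the service IDs `nn1` and `nn2` are assumptions for illustration; the real IDs are whatever `dfs.ha.namenodes.<nameservice>` lists in hdfs-site.xml.

```shell
# Hypothetical helper: print the first NameNode service ID whose
# state is "active". HDFS_CMD may be overridden (e.g. for testing);
# by default the real hdfs CLI is called.
find_active_namenode() {
  for id in "$@"; do
    state=$(${HDFS_CMD:-hdfs} haadmin -getServiceState "$id" 2>/dev/null)
    if [ "$state" = "active" ]; then
      echo "$id"
      return 0
    fi
  done
  # No ID reported "active".
  return 1
}

# Example (nn1/nn2 are assumed service IDs):
#   find_active_namenode nn1 nn2
```

The host behind the printed service ID is the one the hdfs.path in the sink should point at, at least until the next failover.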

Continuing: dir-hdfs.conf still failed. Comparing it with file-hdfs.conf (which works) showed that dir-hdfs.conf specified port 9000. After removing the port, it worked!!!

a2.sinks.k2.hdfs.path = hdfs://hadoop-senior02/flume/%Y%m%d/%H
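The %Y%m%d/%H part of the path is filled in from the event timestamp, and these four escapes mean the same thing as in `date` (four-digit year, month, day, 24-hour hour). As a rough illustration only, the sketch below expands the pattern with GNU `date`. The function name `expand_hdfs_path` is made up, the millisecond value 1548198334086 is borrowed from the .tmp file name in the dir-hdfs log, and UTC+8 is an assumption based on the log timestamps.

```shell
# Hypothetical helper: expand a %Y%m%d/%H style pattern for a given
# epoch-millisecond timestamp, using GNU date (-d @SECONDS).
# The time zone comes from the TZ environment variable.
expand_hdfs_path() {
  pattern="$1"
  epoch_ms="$2"
  date -d "@$((epoch_ms / 1000))" "+$pattern"
}

# Example (POSIX TZ string "UTC-8" means 8 hours east of UTC):
#   export TZ=UTC-8
#   expand_hdfs_path '/flume/%Y%m%d/%H' 1548198334086
```

With that zone the example yields /flume/20190123/07, the same date and hour directories that appear in the sink's "Creating hdfs://..." log line. Note that the HDFS sink needs a timestamp header on the event, or hdfs.useLocalTimeStamp = true, to resolve these escapes.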

References: https://blog.csdn.net/dai451954706/article/details/50449436

https://blog.csdn.net/woloqun/article/details/81350323