Reposted from: https://cloud.tencent.com/developer/article/1626935

Rook version: 1.3.11; Ceph version: 14.2.10

I. What Rook is and what problem it solves

First things first: Rook is not a CSI driver.

The official definition of Rook reads:

Rook is an open source cloud-native storage orchestrator, providing the platform, framework, and support for a diverse set of storage solutions to natively integrate with cloud-native environments. Rook turns storage software into self-managing, self-scaling, and self-healing storage services. It does this by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management. Rook uses the facilities provided by the underlying cloud-native container management, scheduling and orchestration platform to perform its duties. Rook integrates deeply into cloud native environments leveraging extension points and providing a seamless experience for scheduling, lifecycle management, resource management, security, monitoring, and user experience.

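In short:

Rook is an open source cloud-native storage orchestrator. It relies on the facilities of the underlying container management, scheduling, and orchestration platform to turn storage software into self-managing, self-scaling, and self-healing storage services, and it automates deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.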

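Rook therefore addresses the following needs:

- Quickly deploying a cloud-native storage cluster;

- Managing that cluster as a platform across its full lifecycle, including scaling, upgrades, monitoring, and disaster recovery;

- Running on top of a cloud-native container platform such as Kubernetes, which keeps management simple.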

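II. Deploying a Ceph cluster with Rook

Rook currently supports deploying several storage systems:

- Ceph, a highly scalable distributed storage solution for block storage, object storage, and shared file systems, with years of production deployments behind it.

- EdgeFS, a high-performance, fault-tolerant decentralized data fabric that can be accessed as object, file, NoSQL, and block storage.

- Cassandra, a highly available NoSQL database with very fast performance, tunable consistency, and massive scalability.

- CockroachDB, a cloud-native SQL database for building global, scalable cloud services that survive disasters.

- NFS, which lets remote hosts mount file systems over the network and work with them as if they were mounted locally.

- YugabyteDB, a high-performance cloud-native distributed SQL database that automatically tolerates disk, node, zone, and region failures.

Of these, Ceph and EdgeFS are already marked stable and can be introduced into production step by step. Today we deploy the superstar of the storage world: Ceph.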

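1. Preparation

The official documentation lists the prerequisites, which mainly concern how the Kubernetes cluster and the node operating system must support Ceph. I use the stock CentOS 7.6 release and did the following:

- Make sure lvm2 is installed on every node that will run Ceph; install it with yum install lvm2.

- If, like me, you plan to use Ceph RBD storage, make sure the rbd kernel module is available on every such node; modprobe rbd loads it and fails if the module is missing.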
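The two checks above can be scripted. This is a minimal sketch assuming a POSIX shell; the helper names `have_cmd` and `module_listed` are made up for this illustration, and a driver compiled directly into the kernel will not show up in /proc/modules, so a "no" there is only a hint.

```shell
#!/bin/sh
# have_cmd NAME: print "yes" if NAME is found on PATH, otherwise "no".
have_cmd() {
    if command -v "$1" >/dev/null 2>&1; then echo yes; else echo no; fi
}

# module_listed MODULE [FILE]: print "yes" if MODULE is the first field of a
# line in FILE (default /proc/modules), otherwise "no".
module_listed() {
    if awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' \
        "${2:-/proc/modules}" 2>/dev/null; then
        echo yes
    else
        echo no
    fi
}

# The lvm2 package provides the "lvm" command.
echo "lvm command present: $(have_cmd lvm)"
echo "rbd module listed:   $(module_listed rbd)"
```

Run it on every node that will host Ceph components before creating the cluster.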

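2. Deploying the Ceph cluster

Everything needed for the deployment is in the official Rook Git repository. I recommend cloning the latest stable branch and then following the official documentation step by step. Here is how the deployment went on my side.

Clone the project (the release-1.3 branch matches the Rook 1.3.11 version stated at the top):

    git clone --single-branch --branch release-1.3 https://github.com/rook/rook.git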

Edit the image names in operator.yaml so that they point to the private registry:

    ROOK_CSI_CEPH_IMAGE: "10.2.55.8:5000/kubernetes/cephcsi:v2.1.2"
    ROOK_CSI_REGISTRAR_IMAGE: "10.2.55.8:5000/kubernetes/csi-node-driver-registrar:v1.2.0"
    ROOK_CSI_RESIZER_IMAGE: "10.2.55.8:5000/kubernetes/csi-resizer:v0.4.0"
    ROOK_CSI_PROVISIONER_IMAGE: "10.2.55.8:5000/kubernetes/csi-provisioner:v1.4.0"
    ROOK_CSI_SNAPSHOTTER_IMAGE: "10.2.55.8:5000/kubernetes/csi-snapshotter:v1.2.2"
    ROOK_CSI_ATTACHER_IMAGE: "10.2.55.8:5000/kubernetes/csi-attacher:v2.1.0"

    # Set this only if you moved the kubelet data directory away from its default
    ROOK_CSI_KUBELET_DIR_PATH: "/data/k8s/kubelet"
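If many images need the same treatment, the rewrite can be scripted. The sketch below is only an illustration: `private_image` is a hypothetical helper, and the upstream name `quay.io/cephcsi/cephcsi:v2.1.2` is an assumed example of a default image reference, so check the values in your own operator.yaml.

```shell
#!/bin/sh
# private_image REGISTRY_PREFIX IMAGE
# Keep only the final "name:tag" part of IMAGE and place it under
# REGISTRY_PREFIX (registry host plus project path).
private_image() {
    printf '%s/%s\n' "$1" "${2##*/}"
}

# Assumed upstream reference, rewritten for the registry used in this article.
private_image "10.2.55.8:5000/kubernetes" "quay.io/cephcsi/cephcsi:v2.1.2"
```

The images still have to be pulled, re-tagged, and pushed to the private registry separately; the helper only computes the new name.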

Apply common.yaml and operator.yaml

    cd rook/cluster/examples/kubernetes/ceph
    # create the namespace, CRDs, service accounts, roles, and role bindings
    kubectl create -f common.yaml
    # create the rook-ceph operator
    kubectl create -f operator.yaml

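Configure the cluster

The contents of cluster.yaml must be adapted to your own hardware. Read the comments in the file carefully so that you avoid the pitfalls I ran into.

Apart from adding or removing OSD devices, every change to this file takes effect only after the Ceph cluster is reinstalled, so plan the cluster in advance. If you modify the file and apply it without uninstalling Ceph first, a reinstall of the Ceph cluster is triggered and the running cluster breaks.

    apiVersion: ceph.rook.io/v1
    kind: CephCluster
    metadata:
      # Only one cluster is supported per namespace
      name: rook-ceph
      namespace: rook-ceph
    spec:
      # Ceph version: v13 is mimic, v14 is nautilus, and v15 is octopus.
      cephVersion:
        # Use a Ceph image from the private registry to speed up deployment
        image: 10.2.55.8:5000/kubernetes/ceph:v14.2.10
        # Whether to allow unsupported Ceph versions
        allowUnsupported: false
      # Host path where Rook keeps its data on each node
      dataDirHostPath: /data/k8s/rook
      # Whether an upgrade continues when the pre-upgrade checks fail
      skipUpgradeChecks: false
      # Starting with 1.5, the number of mons must be odd
      mon:
        count: 3
        # Whether several mon pods may run on a single node
        allowMultiplePerNode: false
      mgr:
        modules:
        - name: pg_autoscaler
          enabled: true
      # Enable the dashboard with SSL disabled on port 7000. You can keep the
      # default HTTPS settings; I disabled SSL to simplify the ingress setup.
      dashboard:
        enabled: true
        port: 7000
        ssl: false
      # Whether to deploy the PrometheusRule
      monitoring:
        enabled: false
        # Namespace for the PrometheusRule; defaults to the namespace of this CR
        rulesNamespace: rook-ceph
      crashCollector:
        disable: false
      placement:
        osd:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
              - matchExpressions:
                - key: role
                  operator: In
                  values:
                  - storage-node
      # Storage settings. Both flags default to true, which wipes and
      # initializes the devices of every node in the cluster.
      storage: # cluster level storage configuration and selection
        useAllNodes: false     # do not use every node
        useAllDevices: false   # do not use every device
        nodes:
        - name: "k8s-node1"    # host name of the storage node
          devices:
          - name: "sdb"        # use /dev/sdb
        - name: "k8s-node2"
          devices:
          - name: "sdb"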
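A note on the mon count comment in the manifest above: Ceph monitors need a strict majority to form a quorum, so an even count adds a daemon without tolerating any additional failure. The arithmetic can be sketched as follows (`mon_quorum` is a name invented for this illustration):

```shell
#!/bin/sh
# mon_quorum COUNT: print the majority size for COUNT monitors and how many
# monitor failures still leave that majority alive.
mon_quorum() {
    majority=$(( $1 / 2 + 1 ))
    tolerated=$(( $1 - majority ))
    echo "mons=$1 majority=$majority tolerated_failures=$tolerated"
}

for n in 1 2 3 4 5; do
    mon_quorum "$n"
done
```

Three and four monitors both tolerate exactly one failure, which is why the count in cluster.yaml is normally 1, 3, or 5.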

For more CephCluster CRD settings, see the official Rook documentation.

Label the OSD nodes

    [root@k8s-master ceph]# kubectl label nodes k8s-node1 role=storage-node
    node/k8s-node1 labeled
    [root@k8s-master ceph]# kubectl label nodes k8s-node2 role=storage-node
    node/k8s-node2 labeled
    [root@k8s-master ceph]# kubectl label nodes k8s-master role=storage-node
    node/k8s-master labeled

Run the installation

    # create the Ceph cluster
    kubectl create -f cluster.yaml
    # Once it completes (about 5 minutes here, depending on your network), it should look like this.
    # All the pods are deployed in the `rook-ceph` namespace.
    [root@k8s-master cephfs]# kubectl get pod -n rook-ceph
    NAME                                                   READY   STATUS      RESTARTS   AGE
    csi-cephfsplugin-gpxh5                                 3/3     Running     3          24h
    csi-cephfsplugin-j2ms4                                 3/3     Running     3          24h
    csi-cephfsplugin-mnrfj                                 3/3     Running     3          24h
    csi-cephfsplugin-provisioner-845c5c79b4-4xzhl          5/5     Running     5          24h
    csi-cephfsplugin-provisioner-845c5c79b4-8frl8          5/5     Running     4          24h
    csi-rbdplugin-lkl2f                                    3/3     Running     3          24h
    csi-rbdplugin-n6p2k                                    3/3     Running     3          24h
    csi-rbdplugin-n9hmx                                    3/3     Running     3          24h
    csi-rbdplugin-provisioner-5fd9759ff6-2pjwd             6/6     Running     6          24h
    csi-rbdplugin-provisioner-5fd9759ff6-87g9c             6/6     Running     5          24h
    rook-ceph-crashcollector-k8s-master-579874bc7d-lfqd7   1/1     Running     1          24h
    rook-ceph-crashcollector-k8s-node1-7845c5d877-nkgp4    1/1     Running     0          34m
    rook-ceph-crashcollector-k8s-node2-6f9d46bffb-mlzpk    1/1     Running     0          34m
    rook-ceph-mds-myfs-a-757d4b-vnbwk                      1/1     Running     0          34m
    rook-ceph-mds-myfs-b-69b5cc7f8-vjjfm                   1/1     Running     0          34m
    rook-ceph-mgr-a-7f54fc9664-8wgzn                       1/1     Running     1          24h
    rook-ceph-mon-a-7fc6b89ffb-w62bt                       1/1     Running     1          24h
    rook-ceph-mon-b-7b88756867-99wc7                       1/1     Running     1          24h
    rook-ceph-mon-c-846595bfcf-hv9cd                       1/1     Running     1          24h
    rook-ceph-operator-6b57cd66b7-xnxnt                    1/1     Running     1          24h
    rook-ceph-osd-0-7f6f9dcdf6-jlqhb                       1/1     Running     1          24h
    rook-ceph-osd-1-6bd5556d9f-nrl8t                       1/1     Running     1          24h
    rook-ceph-osd-2-5b6fc44884-nwfwb                       1/1     Running     1          24h
    rook-ceph-osd-prepare-k8s-master-rw4gf                 0/1     Completed   0          40m
    rook-ceph-osd-prepare-k8s-node1-l2jsw                  0/1     Completed   0          40m
    rook-ceph-osd-prepare-k8s-node2-qc9nm                  0/1     Completed   0          40m
    rook-ceph-tools-7fc67d8895-wbtr6                       1/1     Running     1          24h
    rook-discover-9ms6x                                    1/1     Running     1          24h
    rook-discover-jj5sl                                    1/1     Running     1          24h
    rook-discover-l2rnr                                    1/1     Running     1          24h

Once the deployment is finished, use the official toolbox (in the same Git directory as before) to check the health of the Ceph cluster:

    # create the ceph toolbox used for the checks
    kubectl create -f toolbox.yaml
    # enter the pod to run ceph commands
    kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

    [root@rook-ceph-tools-7fc67d8895-wbtr6 /]# ceph status
      cluster:
        id:     37eb0bc7-0863-482b-bdf5-90f0a3c3fb66
        health: HEALTH_WARN
                clock skew detected on mon.b, mon.c

      services:
        mon: 3 daemons, quorum a,b,c (age 52m)
        mgr: a(active, since 48m)
        mds: myfs:1 {0=myfs-a=up:active} 1 up:standby-replay
        osd: 3 osds: 3 up (since 52m), 3 in (since 24h)

      task status:
        scrub status:
            mds.myfs-a: idle
            mds.myfs-b: idle

      data:
        pools:   2 pools, 64 pgs
        objects: 26 objects, 92 KiB
        usage:   3.0 GiB used, 57 GiB / 60 GiB avail
        pgs:     64 active+clean

      io:
        client:   1.2 KiB/s rd, 2 op/s rd, 0 op/s wr

    [root@rook-ceph-tools-7fc67d8895-wbtr6 /]# ceph osd status
    +----+------------+-------+-------+--------+---------+--------+---------+-----------+
    | id |    host    |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
    +----+------------+-------+-------+--------+---------+--------+---------+-----------+
    | 0  | k8s-node1  | 1028M | 18.9G |    0   |     0   |    2   |   106   | exists,up |
    | 1  | k8s-node2  | 1028M | 18.9G |    0   |     0   |    0   |     0   | exists,up |
    | 2  | k8s-master | 1028M | 18.9G |    0   |     0   |    0   |     0   | exists,up |
    +----+------------+-------+-------+--------+---------+--------+---------+-----------+

    [root@rook-ceph-tools-7fc67d8895-wbtr6 /]# ceph df
    RAW STORAGE:
        CLASS     SIZE       AVAIL      USED       RAW USED     %RAW USED
        hdd       60 GiB     57 GiB     13 MiB     3.0 GiB      5.02
        TOTAL     60 GiB     57 GiB     13 MiB     3.0 GiB      5.02

    POOLS:
        POOL              ID     STORED     OBJECTS     USED        %USED     MAX AVAIL
        myfs-metadata      1     91 KiB          25     1.9 MiB         0        18 GiB
        myfs-data0         2      158 B           1     192 KiB         0        18 GiB

    [root@rook-ceph-tools-7fc67d8895-wbtr6 /]# rados df
    POOL_NAME        USED     OBJECTS  CLONES  COPIES  MISSING_ON_PRIMARY  UNFOUND  DEGRADED  RD_OPS  RD       WR_OPS  WR       USED COMPR  UNDER COMPR
    myfs-data0       192 KiB  1        0       3       0                   0        0         6       3 KiB    3       2 KiB    0 B         0 B
    myfs-metadata    1.9 MiB  25       0       75      0                   0        0         5165    2.6 MiB  149     160 KiB  0 B         0 B

    total_objects    26
    total_used       3.0 GiB
    total_avail      57 GiB
    total_space      60 GiB
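For scripted checks it can help to pull just the health keyword out of the status text. The following is a minimal sketch that parses the `health:` line with awk; `ceph_health` is a helper name made up here, and the sample input is copied from the status output shown in this article.

```shell
#!/bin/sh
# ceph_health: read `ceph status` text on stdin and print the keyword that
# follows "health:" (for example HEALTH_OK or HEALTH_WARN).
ceph_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Sample input taken from the status output in this article.
ceph_health <<'EOF'
  cluster:
    id:     37eb0bc7-0863-482b-bdf5-90f0a3c3fb66
    health: HEALTH_WARN
            clock skew detected on mon.b, mon.c
EOF
```

Inside the toolbox pod the same function can be fed with `ceph status | ceph_health`; the `ceph health` command reports the same keyword directly.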

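III. Deleting the Ceph cluster

Before deleting the Ceph cluster, remove the pods that use its storage.

Delete the block and file storage

    kubectl delete -n rook-ceph cephblockpool replicapool
    kubectl delete storageclass rook-ceph-block
    kubectl delete -f filesystem.yaml
    kubectl delete storageclass rook-cephfs

    # delete the CephCluster resource
    kubectl -n rook-ceph delete cephcluster rook-ceph

Delete the operator and the related CRDs

    kubectl delete -f cluster.yaml

    kubectl delete -f operator.yaml
    kubectl delete -f common.yaml
    # needed only if your checkout ships a separate crds.yaml; in the steps above the CRDs were created by common.yaml
    kubectl delete -f crds.yaml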

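Clean up the data on the hosts

After the Ceph cluster is deleted, its configuration is left behind in the /data/k8s/rook/ directory (the dataDirHostPath set in cluster.yaml) on every node that ran Ceph components. Remove this data before deploying a new Ceph cluster, otherwise the new monitors fail to start:

    rm -rf /data/k8s/rook/*

To clean several hosts in one go:

    # cat clean-rook-dir.sh
    hosts=(
      192.168.130.130
      192.168.130.131
      192.168.130.132
    )

    for host in "${hosts[@]}"; do
      ssh "$host" "rm -rf /data/k8s/rook/*"
    done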

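Wipe the devices

    yum install gdisk -y
    export DISK="/dev/sdb"
    # destroy the partition table
    sgdisk --zap-all $DISK
    # overwrite the beginning of the disk
    dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync
    # discard all blocks (useful on SSDs)
    blkdiscard $DISK
    # remove leftover ceph device-mapper entries that would keep the disk locked
    ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %
    rm -rf /dev/ceph-*

If deleting the Ceph cluster hangs for some reason, run the following command first; after that the deletion no longer hangs:

    kubectl -n rook-ceph patch cephclusters.ceph.rook.io rook-ceph -p '{"metadata":{"finalizers": []}}' --type=merge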

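IV. Using the Ceph cluster

Ceph provides object storage, block storage, and a shared file system. Block storage is used here because it offers better compatibility and more flexibility.

    # go to the ceph examples folder
    cd rook/cluster/examples/kubernetes/ceph/
    # create the ceph rbd storageclass for the test
    [root@k8s-master ceph]# kubectl apply -f csi/rbd/storageclass.yaml
    cephblockpool.ceph.rook.io/replicapool created
    storageclass.storage.k8s.io/rook-ceph-block created
    [root@k8s-master ceph]# kubectl get storageclass
    NAME              PROVISIONER                  AGE
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com   17s

With a Ceph StorageClass in place, declaring a PVC is enough to create a block device and the matching PV on demand. Compared with the traditional flow of creating a PV by hand and then declaring a PVC for it, this is simpler to operate and easier to manage.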

Create a PVC from the example manifest that uses the Ceph StorageClass:

    [root@k8s-master ceph]# kubectl apply -f csi/rbd/pvc.yaml
    persistentvolumeclaim/rbd-pvc created

With the PVC deployed, check whether the PV was created automatically:

    [root@k8s-master ceph]# kubectl get pvc
    NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    rbd-pvc   Bound    pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   1Gi        RWO            rook-ceph-block   23s

    [root@k8s-master ceph]# kubectl get pvc,pv
    NAME                            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    persistentvolumeclaim/rbd-pvc   Bound    pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   1Gi        RWO            rook-ceph-block   29s

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS      REASON   AGE
    persistentvolume/pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   1Gi        RWO            Delete           Bound    default/rbd-pvc   rook-ceph-block            26s

Create a pod that uses the PVC (the manifest is listed after the command):

    [root@k8s-master ceph]# kubectl apply -f csi/rbd/pod.yaml
    pod/csirbd-demo-pod created

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: csirbd-demo-pod
    spec:
      containers:
        - name: web-server
          image: nginx
          volumeMounts:
            - name: mypvc
              mountPath: /var/lib/www/html
      volumes:
        - name: mypvc
          persistentVolumeClaim:
            claimName: rbd-pvc
            readOnly: false

Wait until the pod is running, then look at the storage mounted inside it:

    [root@k8s-master ceph]# kubectl exec -it csirbd-demo-pod -- df
    Filesystem              1K-blocks    Used Available Use% Mounted on
    overlay                  16558080 8315872   8242208  51% /
    tmpfs                       65536       0     65536   0% /dev
    tmpfs                     1447648       0   1447648   0% /sys/fs/cgroup
    /dev/mapper/centos-root  16558080 8315872   8242208  51% /etc/hosts
    shm                         65536       0     65536   0% /dev/shm
    /dev/rbd0                  999320    2564    980372   1% /var/lib/www/html
    tmpfs                     1447648      12   1447636   1% /run/secrets/kubernetes.io/serviceaccount
    tmpfs                     1447648       0   1447648   0% /proc/acpi
    tmpfs                     1447648       0   1447648   0% /proc/scsi
    tmpfs                     1447648       0   1447648   0% /sys/firmware

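Understanding the Access Mode attribute

Access control for storage has come a long way in the Kubernetes era, far beyond the crude approach of the plain Docker days. These are the access modes Kubernetes offers when managing storage (PVs and PVCs):

- RWO: ReadWriteOnce, the volume can be mounted read-write by a single node only;

- ROX: ReadOnlyMany, the volume can be mounted by many nodes, read-only;

- RWX: ReadWriteMany, the volume can be mounted by many nodes, and both reads and writes are allowed.

So RWO, ROX, and RWX depend only on how many worker nodes use the volume at the same time, not on how many pods do!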
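The node-based rule can be captured in a toy model. The sketch below is only an illustration of the rule as stated above, not how the Kubernetes attach/detach controller is implemented; `can_attach` and its argument layout are invented for this example.

```shell
#!/bin/sh
# can_attach MODE ATTACHED_NODES NEW_NODE
# MODE is RWO, ROX, or RWX. ATTACHED_NODES is a space-separated list of the
# nodes that already have the volume attached. Prints "allow" or "deny".
can_attach() {
    mode=$1
    attached=$2
    node=$3
    case "$mode" in
        RWX|ROX)
            # Many nodes may attach (ROX is read-only, RWX is read-write).
            echo allow ;;
        RWO)
            # Only one node may hold the volume; pods on that node can share it.
            for n in $attached; do
                if [ "$n" != "$node" ]; then
                    echo deny
                    return
                fi
            done
            echo allow ;;
        *)
            echo deny ;;
    esac
}

can_attach RWO "node03" node03   # another pod on the same node
can_attach RWO "node03" node01   # a pod on a different node
```

The first call models a second pod landing on the node that already holds the volume; the second models a pod scheduled elsewhere.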

For lack of a cloud-native storage system I had never been able to try these features in practice. Now that Rook makes deployment this convenient, it is time to try them out. I planned to test two scenarios:

- Test ReadWriteOnce with the following steps:

  - First deploy a single pod named ceph-pv-pod that uses a PVC with the ReadWriteOnce access mode

  - Then deploy a deployment named n2 with 1 pod that uses the same PVC

  - Scale n2 up to 6 pod replicas

  - Observe the result

    > kubectl get pod -o wide --sort-by=.spec.nodeName | grep -E '^(n2|ceph)'
    NAME                  READY   STATUS              IP               NODE
    n2-7db787d7f4-ww2fp   0/1     ContainerCreating   <none>           node01
    n2-7db787d7f4-8r4n4   0/1     ContainerCreating   <none>           node02
    n2-7db787d7f4-q5msc   0/1     ContainerCreating   <none>           node02
    n2-7db787d7f4-2pfvd   1/1     Running             100.96.174.137   node03
    n2-7db787d7f4-c8r8k   1/1     Running             100.96.174.139   node03
    n2-7db787d7f4-hrwv4   1/1     Running             100.96.174.138   node03
    ceph-pv-pod           1/1     Running             100.96.174.135   node03

As the result shows, the pod ceph-pv-pod was the first to bind the PVC declared as ReadWriteOnce, so the n2 pods on its node, node03, start successfully, while the n2 pods scheduled to other nodes cannot start. Pick one of them and look at the error:

    > kubectl describe pod n2-7db787d7f4-ww2fp
    ...
    Events:
      Type     Reason              Age        From                     Message
      ----     ------              ----       ----                     -------
      Normal   Scheduled           <unknown>  default-scheduler        Successfully assigned default/n2-7db787d7f4-ww2fp to node01
      Warning  FailedAttachVolume  10m        attachdetach-controller  Multi-Attach error for volume "pvc-fb2d6d97-d7aa-43df-808c-81f15e7a2797" Volume is already used by pod(s) n2-7db787d7f4-c8r8k, ceph-pv-pod, n2-7db787d7f4-2pfvd, n2-7db787d7f4-hrwv4

The pod events show the error clearly: because of ReadWriteOnce, the volume cannot be attached to several nodes at once (Multi-Attach), which is the expected behavior.

- Test ReadWriteMany with the following steps:

  - First deploy a single pod named 2ceph-pv-pod that uses a PVC with the ReadWriteMany access mode

  - Then deploy a deployment named n3 with 1 pod that uses the same PVC

  - Scale n3 up to 6 pod replicas

  - Observe the result

My first idea was to edit the PVC manifest from the first scenario directly, which produced the error below. It means that apart from the requested storage size, the attributes of an existing PVC cannot be modified.

    kubectl apply -f pvc.yaml
    The PersistentVolumeClaim "ceph-pv-claim" is invalid: spec: Forbidden: is immutable after creation except resources.requests for bound claims

So a new PVC had to be created... but declaring the new PVC ran into another problem: it stayed in the Pending state...

    > kubectl get pvc
    NAME            STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    ceph-pv-claim   Bound     pvc-fb2d6d97-d7aa-43df-808c-81f15e7a2797   1Gi        RWO            rook-ceph-block   36h
    ceph-pvc-2      Pending                                                                        rook-ceph-block   10m
    > kubectl describe pvc ceph-pvc-2
    ...
    Events:
      Type     Reason                Age                    From   Message
      ----     ------                ----                   ----   -------
      Normal   Provisioning          4m41s (x11 over 13m)   rook-ceph.rbd.csi.ceph.com_csi-rbdplugin-provisioner-66f64ff49c-wvpkg_b78217fb-8739-4ced-9e18-7430fdde964b   External provisioner is provisioning volume for claim "default/ceph-pvc-2"
      Warning  ProvisioningFailed    4m41s (x11 over 13m)   rook-ceph.rbd.csi.ceph.com_csi-rbdplugin-provisioner-66f64ff49c-wvpkg_b78217fb-8739-4ced-9e18-7430fdde964b   failed to provision volume with StorageClass "rook-ceph-block": rpc error: code = InvalidArgument desc = multi node access modes are only supported on rbd `block` type volumes
      Normal   ExternalProvisioning  3m4s (x42 over 13m)    persistentvolume-controller   waiting for a volume to be created, either by external provisioner "rook-ceph.rbd.csi.ceph.com" or manually created by system administrator

A closer look at the events reveals this error:

    failed to provision volume with StorageClass "rook-ceph-block": rpc error: code = InvalidArgument desc = multi node access modes are only supported on rbd `block` type volumes

In other words, multi-node access modes are supported only on rbd volumes of the `block` type... so is Ceph's rbd StorageClass a fake "block storage"?

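When you hit a baffling error like this, a good first step is to search the issues and PRs of the official GitHub repository for similar reports. After some searching I found a maintainer's comment on the subject: using the RWX access mode with Ceph rbd is not recommended, because without a locking mechanism at the application layer it can corrupt data.

That in turn led me to the official statement: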

There are two CSI drivers integrated with Rook that will enable different scenarios:

- RBD: This driver is optimized for RWO pod access where only one pod may access the storage

- CephFS: This driver allows for RWX with one or more pods accessing the same storage

Fine. The official documentation already states that CephFS is the right choice for the RWX mode.
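As for the wording of the error message itself: the `block` it mentions most likely refers to raw block volumes, that is, PVCs declared with `volumeMode: Block` instead of the default `Filesystem`. The sketch below only prints such a manifest so the difference is visible; the PVC name and the `print_block_rwx_pvc` helper are hypothetical, it was not applied to the cluster in this article, whether the provisioner accepts it depends on the ceph-csi version, and the maintainer's warning about data corruption without application-level locking still applies.

```shell
#!/bin/sh
# print_block_rwx_pvc NAME: emit a PVC manifest that requests a raw block
# volume (volumeMode: Block) with the ReadWriteMany access mode.
print_block_rwx_pvc() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: rook-ceph-block
EOF
}

# Hypothetical PVC name, used for illustration only.
print_block_rwx_pvc rbd-block-rwx-pvc
```

A pod consumes a raw block PVC through `volumeDevices` (a device path) rather than `volumeMounts`, so the application has to handle the device itself. For shared files, CephFS remains the simpler route.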

Testing the ReadWriteMany (RWX) mode with CephFS

Rook already ships a StorageClass for CephFS; it only needs to be deployed:

    cd rook/cluster/examples/kubernetes/ceph/
    kubectl apply -f filesystem.yaml
    kubectl apply -f csi/cephfs/storageclass.yaml
    [root@k8s-master ceph]# kubectl get sc
    NAME              PROVISIONER                     AGE
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com      10m
    rook-cephfs       rook-ceph.cephfs.csi.ceph.com   57m

With the CephFS StorageClass and the filesystem created, the test can start. The scenario is a deployment with 3 replicas that share one volume in RWX mode:

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: cephfs-pvc
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
      storageClassName: rook-cephfs

Create an nginx deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: n4-cephfs
        pv: cephfs
      name: n4-cephfs
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: n4-cephfs
          pv: cephfs
      template:
        metadata:
          labels:
            app: n4-cephfs
            pv: cephfs
        spec:
          volumes:
          - name: fsceph-pv-storage
            persistentVolumeClaim:
              claimName: cephfs-pvc
          containers:
          - image: 10.2.55.8:5000/library/nginx:1.18.0
            name: nginx
            ports:
            - containerPort: 80
              name: "http-server"
            volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: fsceph-pv-storage

After deploying, check how each pod is running and whether the PV and PVC were created:

    [root@k8s-master ceph]# kubectl get pod -l pv=cephfs -o wide
    NAME                         READY   STATUS    RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
    n4-cephfs-66cbcfd84b-25k4n   1/1     Running   0          44m   10.244.2.196   k8s-node2    <none>           <none>
    n4-cephfs-66cbcfd84b-7rgw6   1/1     Running   0          44m   10.244.1.242   k8s-node1    <none>           <none>
    n4-cephfs-66cbcfd84b-gl9pj   1/1     Running   0          44m   10.244.0.178   k8s-master   <none>           <none>

    [root@k8s-master ceph]# kubectl get pvc,pv
    NAME                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    persistentvolumeclaim/cephfs-pvc   Bound    pvc-7265f11e-39ce-42df-9b7c-02e8916bc5c2   1Gi        RWX            rook-cephfs       44m
    persistentvolumeclaim/rbd-pvc      Bound    pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   1Gi        RWO            rook-ceph-block   13m

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS      REASON   AGE
    persistentvolume/pvc-7265f11e-39ce-42df-9b7c-02e8916bc5c2   1Gi        RWX            Delete           Bound    default/cephfs-pvc   rook-cephfs                44m
    persistentvolume/pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   1Gi        RWO            Delete           Bound    default/rbd-pvc      rook-ceph-block            13m

    [root@k8s-master ~]# kubectl exec pod/n4-cephfs-66cbcfd84b-7rgw6 -- df
    Filesystem              1K-blocks    Used Available Use% Mounted on
    overlay                  16558080 8313176   8244904  51% /
    tmpfs                       65536       0     65536   0% /dev
    tmpfs                     1447648       0   1447648   0% /sys/fs/cgroup
    /dev/mapper/centos-root  16558080 8313176   8244904  51% /etc/hosts
    shm                         65536       0     65536   0% /dev/shm
    10.99.56.167:6789,10.110.248.120:6789,10.110.195.253:6789:/volumes/csi/csi-vol-e14ef036-5002-11eb-b1e4-e2f740f51378/319af089-cd37-4b55-a776-d81216bca859 1048576 0 1048576 0% /usr/share/nginx/html
    tmpfs                     1447648      12   1447636   1% /run/secrets/kubernetes.io/serviceaccount
    tmpfs                     1447648       0   1447648   0% /proc/acpi
    tmpfs                     1447648       0   1447648   0% /proc/scsi
    tmpfs                     1447648       0   1447648   0% /sys/firmware

    [root@k8s-master ~]# kubectl exec pod/n4-cephfs-66cbcfd84b-25k4n -- df
    Filesystem              1K-blocks    Used Available Use% Mounted on
    overlay                  16558080 9623308   6934772  59% /
    tmpfs                       65536       0     65536   0% /dev
    tmpfs                     1447648       0   1447648   0% /sys/fs/cgroup
    /dev/mapper/centos-root  16558080 9623308   6934772  59% /etc/hosts
    shm                         65536       0     65536   0% /dev/shm
    10.99.56.167:6789,10.110.248.120:6789,10.110.195.253:6789:/volumes/csi/csi-vol-e14ef036-5002-11eb-b1e4-e2f740f51378/319af089-cd37-4b55-a776-d81216bca859 1048576 0 1048576 0% /usr/share/nginx/html
    tmpfs                     1447648      12   1447636   1% /run/secrets/kubernetes.io/serviceaccount
    tmpfs                     1447648       0   1447648   0% /proc/acpi
    tmpfs                     1447648       0   1447648   0% /proc/scsi
    tmpfs                     1447648       0   1447648   0% /sys/firmware

Pods spread across different nodes all start successfully, and the PV is created and bound. This matches the expected result.

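A closer look at the storage mount mechanism

With the two test scenarios done, let's look at how cloud-native storage works behind the scenes:

- Inspect the mounts inside the pods

Compare the storage mounts inside the pod instances of the two scenarios:

    # use ceph as rbd storage
    # it is mounted as a block device
    df -h
    /dev/rbd0 976M 3.3M 957M 1% /usr/share/nginx/html

    # use ceph as file system storage
    # it is mounted as a network file system (monitor address plus path)
    df -h
    10.109.80.220:6789:/volumes/csi/csi-vol-1dc92634-79cd-11ea-96a3-26ab72958ea2 1.0G 0 1.0G 0% /usr/share/nginx/html

Ceph rbd and CephFS are clearly mounted into the pod in different ways.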

- Inspect the mounts at the host level

    # use ceph as rbd storage
    > df -h | grep rbd   # on the worker node
    /dev/rbd0 976M 3.3M 957M 1% /var/lib/kubelet/pods/e432e18d-b18f-4b26-8128-0b0219a60662/volumes/kubernetes.io~csi/pvc-fb2d6d97-d7aa-43df-808c-81f15e7a2797/mount

    # use ceph as file system storage
    > df -h | grep csi
    10.109.80.220:6789:/volumes/csi/csi-vol-1dc92634-79cd-11ea-96a3-26ab72958ea2 1.0G 0 1.0G 0% /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-75b40dd7-b880-4d67-9da6-88aba8616466/globalmount

A short explanation of the naming rule for the host-level paths:

    /var/lib/kubelet/pods/<Pod ID>/volumes/kubernetes.io~<volume type>/<volume name>

In the end the directory is mapped into the container, much like a bind mount passed to docker run:

    docker run -v /var/lib/kubelet/pods/<Pod ID>/volumes/kubernetes.io~<volume type>/<volume name>:/<target directory in the container> <image> ...
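The naming rule can be turned into a small helper for locating a pod's volume directory on a node. This is a sketch under assumptions: `pod_volume_path` is a name invented here, the optional fourth argument covers a relocated kubelet directory such as /data/k8s/kubelet, and for CSI volumes the data sits in a `mount` subdirectory below the printed path, as the df output above shows.

```shell
#!/bin/sh
# pod_volume_path POD_UID VOLUME_TYPE VOLUME_NAME [KUBELET_DIR]
# Print the per-pod volume directory that follows the naming rule above.
pod_volume_path() {
    printf '%s/pods/%s/volumes/kubernetes.io~%s/%s\n' \
        "${4:-/var/lib/kubelet}" "$1" "$2" "$3"
}

# Values taken from the rbd example in the df output above.
pod_volume_path e432e18d-b18f-4b26-8128-0b0219a60662 csi \
    pvc-fb2d6d97-d7aa-43df-808c-81f15e7a2797
```

The pod UID for the first argument can be read with `kubectl get pod <name> -o jsonpath='{.metadata.uid}'`.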

- Inspect the mounts from Kubernetes

Kubernetes exposes the relationship between the attacher (the CSI driver), the PV, and the node through the volumeattachment resource type. Its official description is:

VolumeAttachment captures the intent to attach or detach the specified volume to/from the specified node. VolumeAttachment objects are non-namespaced.

Here is the current state:

    [root@k8s-master ~]# kubectl get volumeattachment
    NAME                                                                   ATTACHER                        PV                                         NODE         ATTACHED   AGE
    csi-0824b06d082cc7fca254899682d6665890473ad6023e13361031c57f60094361   rook-ceph.rbd.csi.ceph.com      pvc-ef49d8f8-b9fd-4aad-b604-9d4ec667e346   k8s-node1    true       15m
    csi-321887a821d7fd1ad443965cb5527feaaec7db107331312f2e33da02c8544938   rook-ceph.cephfs.csi.ceph.com   pvc-7265f11e-39ce-42df-9b7c-02e8916bc5c2   k8s-master   true       48m
    csi-3beca915ee1a56489667bb1f848e9dd23ce605a408e308f46ce28b1f301bf613   rook-ceph.cephfs.csi.ceph.com   pvc-7265f11e-39ce-42df-9b7c-02e8916bc5c2   k8s-node1    true       48m
    csi-c684923291c052d41ae6cc5afd7f9852f6e6d3e2d5183ea4148c29ed1430e5b8   rook-ceph.cephfs.csi.ceph.com   pvc-7265f11e-39ce-42df-9b7c-02e8916bc5c2   k8s-node2    true       48m

It shows the details of every host-level attachment, which is handy for troubleshooting.
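When troubleshooting, it is often enough to know how many nodes each PV is attached to. Below is a minimal sketch that summarizes the table with awk, assuming the six-column layout shown above (NAME, ATTACHER, PV, NODE, ATTACHED, AGE); `attachments_per_pv` is a helper name made up for this example.

```shell
#!/bin/sh
# attachments_per_pv: read `kubectl get volumeattachment` output on stdin and
# print "<pv> <count>" for every PV, counting rows whose ATTACHED column is
# "true". The header row is skipped and the result is sorted by PV name.
attachments_per_pv() {
    awk 'NR > 1 && $5 == "true" { count[$3]++ }
         END { for (pv in count) print pv, count[pv] }' | sort
}

# Usage on a machine with cluster access:
#   kubectl get volumeattachment | attachments_per_pv
```

For the table above this reports 3 attachments for the CephFS PV (pvc-7265f11e-...) and 1 for the rbd PV (pvc-ef49d8f8-...), which matches the RWX and RWO behavior seen in the tests.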

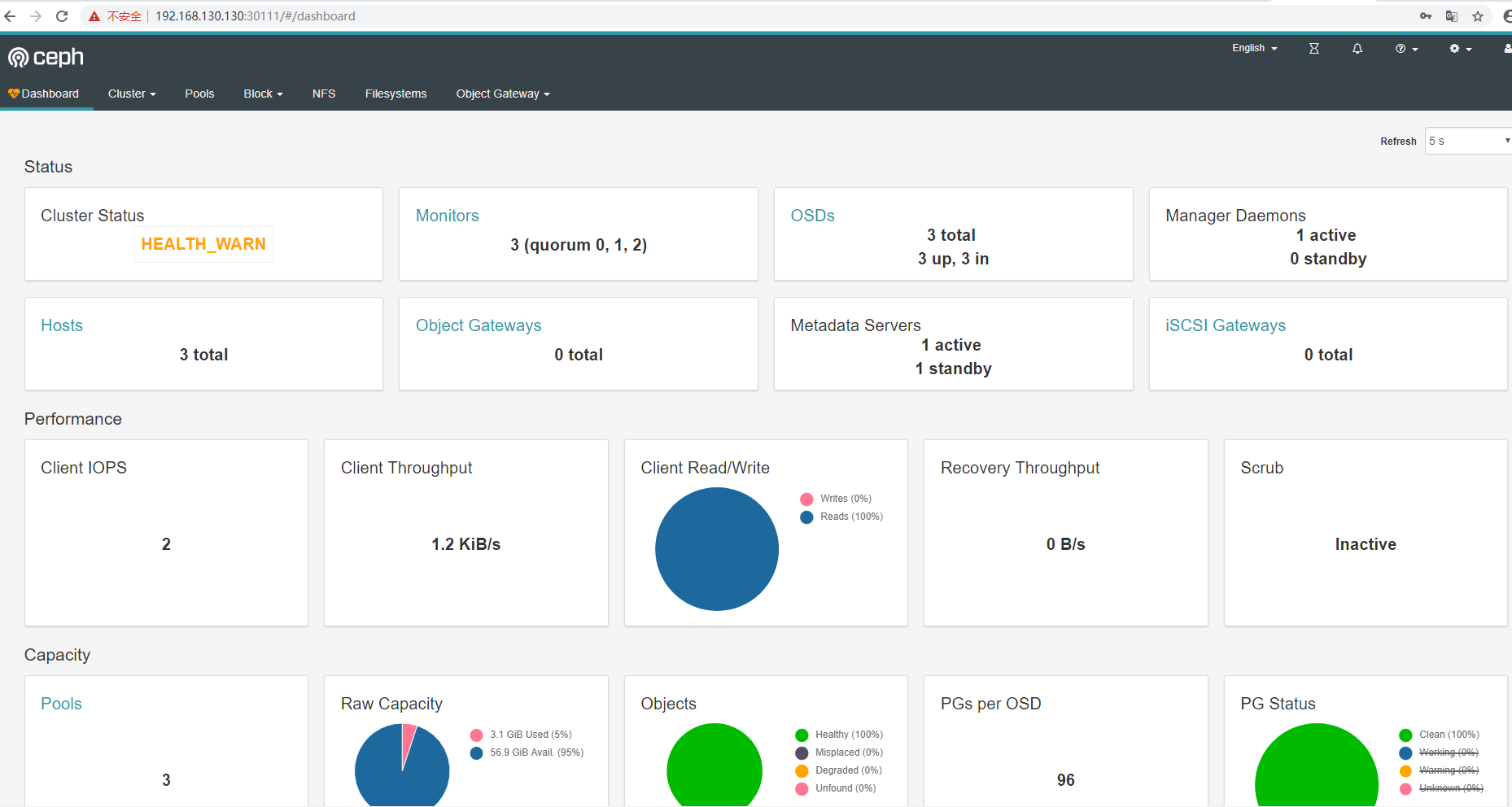

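Managing Ceph through a UI: Ceph Dashboard

Rook thoughtfully ships a UI for managing Ceph: the Ceph dashboard. A standard Rook deployment already includes it. By default it cannot be reached from outside the cluster; exposing the service as a NodePort is enough:

    [root@k8s-master ~]# kubectl -n rook-ceph get svc | grep dash
    rook-ceph-mgr-dashboard   NodePort   10.111.149.53   <none>   7000:30111/TCP   25h

Open http://<node-ip>:30111 in a browser (plain HTTP, because ssl is set to false in the cluster.yaml above; use https if you kept SSL enabled). The default login user is admin, and the password can be retrieved like this:

    [root@k8s-master ~]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
    ]Qz!5OK^|%#a$lzgQ(<n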
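The pipeline works because values under `data` in a Kubernetes Secret are base64-encoded. The decoding step on its own looks like the sketch below; `decode_secret_value` is a made-up helper name, `-d` is the short form of `--decode`, and the sample string is a stand-in rather than a real password.

```shell
#!/bin/sh
# decode_secret_value: read one base64-encoded Secret value on stdin and print
# the decoded text followed by a newline.
decode_secret_value() {
    base64 -d && echo
}

# "aGVsbG8=" is the base64 encoding of "hello", used here as a stand-in.
printf '%s' 'aGVsbG8=' | decode_secret_value
```

The trailing `echo` only adds a newline so that the shell prompt does not end up glued to the password.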

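After logging in you land on the dashboard. It offers a lot, including read/write throughput monitoring and health monitoring, and is a real help when managing Ceph.

It also provides interactive API documentation, which is a thoughtful touch.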


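Summary

Rook helps you quickly build a production-ready cloud-native storage platform and manages it across its full lifecycle, which makes it suitable for storage administrators at every level, from beginner to advanced.

The deployment manifests used in this article are available in the Rook Git repository mentioned above.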