I. Update the package sources

1. Update the Docker and Kubernetes repositories

(I use the Huawei Cloud mirror here: https://mirrors.huaweicloud.com/)

docker-ce

1. If Docker is already installed, remove it first, then install the dependencies:

    sudo yum remove docker docker-common docker-selinux docker-engine
    sudo yum install -y yum-utils device-mapper-persistent-data lvm2

2. Download the repo file that matches your distribution. For CentOS/RHEL:

    wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

Then replace the repository address with the mirror:

    sudo sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

3. Refresh the package index and install:

    sudo yum makecache fast
    sudo yum install docker-ce

Kubernetes repository

1. Back up /etc/yum.repos.d/kubernetes.repo (skip this step if the file does not exist yet):

    cp /etc/yum.repos.d/kubernetes.repo /etc/yum.repos.d/kubernetes.repo.bak

2. Write /etc/yum.repos.d/kubernetes.repo:

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

3. Switch SELinux to permissive mode:

    setenforce 0

4. Install kubelet, kubeadm, and kubectl, then enable kubelet:

    yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
    systemctl enable --now kubelet

2. Configure a Docker registry mirror (NetEase)

    cat >/etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["http://hub-mirror.c.163.com"]
    }
    EOF
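Docker reads daemon.json only when the daemon starts, so the mirror takes effect after a restart. The following is a minimal sketch of the same step as a reusable function; the function name write_mirror_config and its arguments are illustrative and not part of Docker.

```shell
# Write a registry-mirror configuration for Docker.
# write_mirror_config is a hypothetical helper name used only in this sketch.
write_mirror_config() {
    dir="$1"
    mirror="$2"
    # /etc/docker may not exist before Docker has been started once.
    mkdir -p "$dir"
    cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# On a real host (as root), one would then run:
#   write_mirror_config /etc/docker "http://hub-mirror.c.163.com"
#   systemctl daemon-reload && systemctl restart docker
#   docker info | grep -A1 'Registry Mirrors'
```

The function overwrites any existing daemon.json, just like the heredoc above, so merge by hand if the file already holds other settings.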

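II. Prepare the environment

1. Disable the firewall

    systemctl stop firewalld && systemctl disable firewalld

2. Disable SELinux

    setenforce 0

setenforce 0 only switches SELinux to permissive mode until the next reboot. To make the change permanent, set SELINUX=permissive in /etc/selinux/config.

3. Disable swap

Turn swap off temporarily:

    swapoff -a

To disable it permanently, comment out the swap entry in /etc/fstab:

    sed -i 's/.*swap.*/#&/' /etc/fstab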
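The sed expression above comments out every line that contains the word swap, including lines that are already comments. As a more conservative sketch (assuming the standard whitespace-separated fstab layout, where the third field is the filesystem type), the function below only touches uncommented entries of type swap and keeps a .bak copy. The name comment_out_swap is illustrative.

```shell
# Comment out active swap entries in an fstab-style file.
# comment_out_swap is a hypothetical helper name used only in this sketch.
comment_out_swap() {
    fstab="$1"
    # Skip lines that already start with '#'; match lines whose third
    # field (filesystem type) is exactly "swap" and prefix them with '#'.
    sed -i.bak -E \
        '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab"
}

# On a real host (as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
```

Running it twice is harmless, because a line that has been commented out no longer matches.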

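4. Adjust kernel parameters

    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF

Apply the settings. Plain sysctl -p only reads /etc/sysctl.conf, so pass the file explicitly (or run sysctl --system):

    sysctl -p /etc/sysctl.d/k8s.conf

If sysctl reports that the net.bridge keys do not exist, load the bridge netfilter module first with modprobe br_netfilter.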

三、安装docker和k8s

master节点操作

1、安装服务

yum install -y docker-ce

yum install -y kubelet kubeadm kubectl

2、拉取镜像

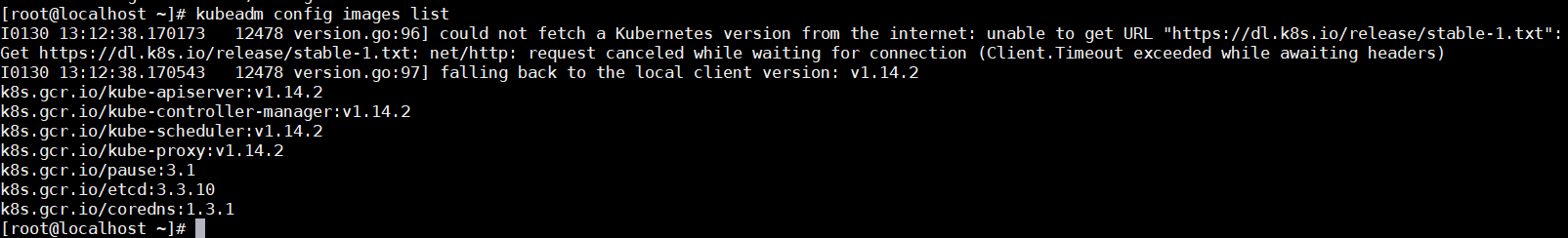

可先通过kubeadm config images list 查看所需镜像,由于镜像都是国外,所以先拉取国内镜像,在tag改名

    docker pull mirrorgooglecontainers/kube-apiserver-amd64:v1.14.2
    docker pull mirrorgooglecontainers/kube-controller-manager-amd64:v1.14.2
    docker pull mirrorgooglecontainers/kube-scheduler-amd64:v1.14.2
    docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.14.2
    docker pull mirrorgooglecontainers/pause-amd64:3.1
    docker pull mirrorgooglecontainers/etcd-amd64:3.3.10
    docker pull coredns/coredns:1.3.1

    [root@localhost ~]# docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2
    [root@localhost ~]# docker tag mirrorgooglecontainers/kube-apiserver-amd64:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2
    [root@localhost ~]# docker tag mirrorgooglecontainers/kube-controller-manager-amd64:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2
    [root@localhost ~]# docker tag mirrorgooglecontainers/kube-scheduler-amd64:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2
    [root@localhost ~]# docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
    [root@localhost ~]# docker tag mirrorgooglecontainers/etcd-amd64:3.3.10 k8s.gcr.io/etcd:3.3.10
    [root@localhost ~]# docker tag mirrorgooglecontainers/pause-amd64:3.1 k8s.gcr.io/pause:3.1

    docker rmi mirrorgooglecontainers/kube-proxy-amd64:v1.14.2
    docker rmi mirrorgooglecontainers/kube-apiserver-amd64:v1.14.2
    docker rmi mirrorgooglecontainers/kube-controller-manager-amd64:v1.14.2
    docker rmi mirrorgooglecontainers/kube-scheduler-amd64:v1.14.2
    docker rmi coredns/coredns:1.3.1
    docker rmi mirrorgooglecontainers/etcd-amd64:3.3.10
    docker rmi mirrorgooglecontainers/pause-amd64:3.1
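The pull, tag, and rmi commands above follow one pattern: drop the repository prefix, drop the -amd64 suffix, and prepend k8s.gcr.io. A minimal sketch of that pattern as a loop follows; target_name, retag_images, run, and the DRY_RUN variable are names invented for this example. With DRY_RUN=1 (the default here) the docker commands are only printed, so the mapping can be checked before anything is pulled.

```shell
# Print a command in dry-run mode, execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Map a mirror image name to the k8s.gcr.io name used in the tag commands above.
target_name() {
    # ${1##*/} drops the repository prefix, e.g. kube-proxy-amd64:v1.14.2
    # sed then drops the -amd64 suffix in front of the tag, if present.
    printf '%s\n' "k8s.gcr.io/${1##*/}" | sed 's/-amd64:/:/'
}

# Pull each mirror image, retag it, and remove the mirror tag.
retag_images() {
    for src in "$@"; do
        dst=$(target_name "$src")
        run docker pull "$src"
        run docker tag "$src" "$dst"
        run docker rmi "$src"
    done
}

# Example (prints the commands; set DRY_RUN=0 to execute them):
#   retag_images \
#       mirrorgooglecontainers/kube-apiserver-amd64:v1.14.2 \
#       mirrorgooglecontainers/pause-amd64:3.1 \
#       coredns/coredns:1.3.1
```

Because the tag is part of the argument, the same function works for the smaller image list on the worker nodes.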

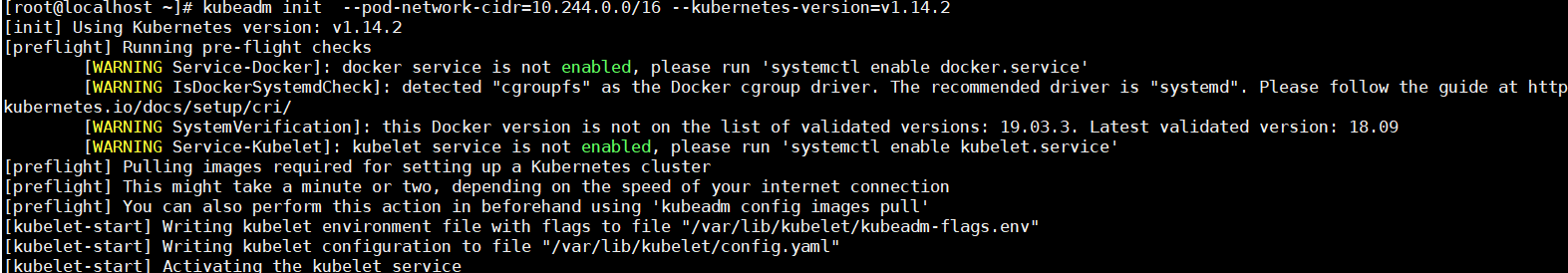

3、初始化集群

kubeadm init --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.14.2

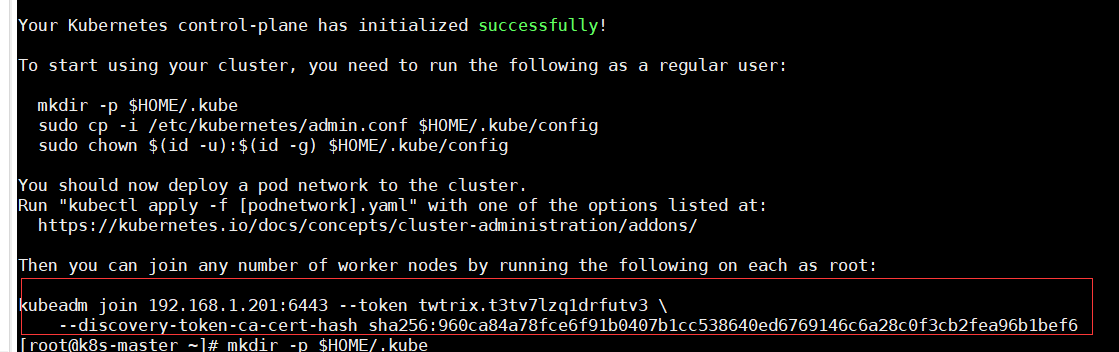

记下这里的token,节点加入需要使用
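If the token was not written down, or has expired (bootstrap tokens created by kubeadm init are valid for 24 hours by default), the join information can be regenerated on the master. The sketch below assumes the default kubeadm CA location and an RSA CA key; ca_cert_hash is an illustrative function name. It computes the value that goes after sha256: in --discovery-token-ca-cert-hash.

```shell
# Compute the SHA-256 hash of the CA certificate's public key.
# ca_cert_hash is a hypothetical helper name used only in this sketch.
ca_cert_hash() {
    ca="${1:-/etc/kubernetes/pki/ca.crt}"
    # Extract the public key, convert it to DER, hash it,
    # and keep only the hex digest from the openssl output.
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# On the master:
#   kubeadm token create --print-join-command   # prints a complete join command
#   echo "sha256:$(ca_cert_hash)"               # only the CA hash
```

In most cases kubeadm token create --print-join-command is all that is needed; the hash function is useful when the token already exists and only the hash is missing.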

To make kubectl work for a non-root user, run the following commands, which are also part of the kubeadm init output:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, as the root user you can run (untested):

    export KUBECONFIG=/etc/kubernetes/admin.conf

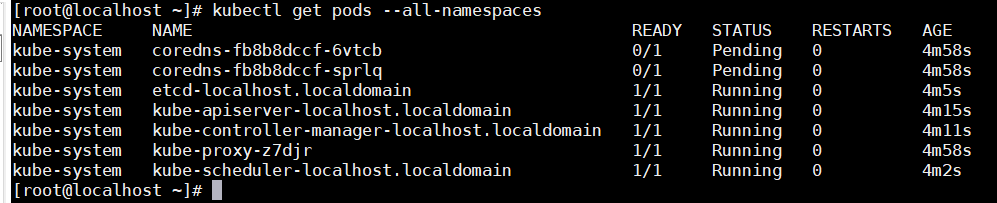

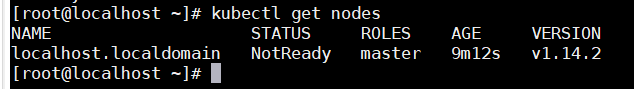

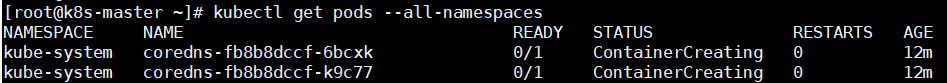

Check the pod status:

    kubectl get pods --all-namespaces

The node has been registered, but its status is NotReady because no pod network has been installed yet. Next we install flannel:

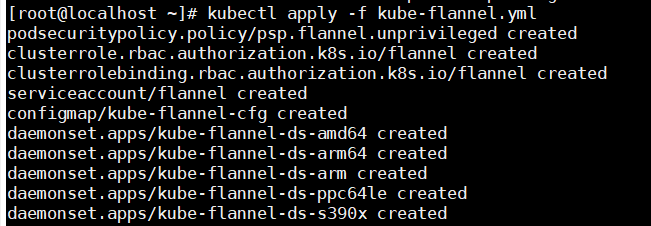
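The NotReady state described above can also be checked from a script. As a small sketch, the function below reads the table printed by kubectl get nodes on standard input and prints the names of nodes whose STATUS column is not exactly Ready; not_ready_nodes is an illustrative name, and the sample table in the comment is made up to show the format.

```shell
# Print the names of nodes whose STATUS is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME, $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes | not_ready_nodes
#
# Hypothetical input:
#   NAME         STATUS     ROLES    AGE   VERSION
#   k8s-master   NotReady   master   5m    v1.14.2
#
# To block until every node is Ready instead, kubectl offers:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```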

4. Configure the flannel network

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

    ---
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
        - configMap
        - secret
        - emptyDir
        - hostPath
      allowedHostPaths:
        - pathPrefix: "/etc/cni/net.d"
        - pathPrefix: "/etc/kube-flannel"
        - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      # Users and groups
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      # Privilege Escalation
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      # Capabilities
      allowedCapabilities: ['NET_ADMIN']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      # Host namespaces
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      # SELinux
      seLinux:
        # SELinux is unused in CaaSP
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    rules:
      - apiGroups: ['extensions']
        resources: ['podsecuritypolicies']
        verbs: ['use']
        resourceNames: ['psp.flannel.unprivileged']
      - apiGroups:
          - ""
        resources:
          - pods
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes/status
        verbs:
          - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-amd64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: beta.kubernetes.io/os
                        operator: In
                        values:
                          - linux
                      - key: beta.kubernetes.io/arch
                        operator: In
                        values:
                          - amd64
          hostNetwork: true
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: beta.kubernetes.io/os
                        operator: In
                        values:
                          - linux
                      - key: beta.kubernetes.io/arch
                        operator: In
                        values:
                          - arm64
          hostNetwork: true
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: beta.kubernetes.io/os
                        operator: In
                        values:
                          - linux
                      - key: beta.kubernetes.io/arch
                        operator: In
                        values:
                          - arm
          hostNetwork: true
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-ppc64le
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: beta.kubernetes.io/os
                        operator: In
                        values:
                          - linux
                      - key: beta.kubernetes.io/arch
                        operator: In
                        values:
                          - ppc64le
          hostNetwork: true
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-s390x
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: beta.kubernetes.io/os
                        operator: In
                        values:
                          - linux
                      - key: beta.kubernetes.io/arch
                        operator: In
                        values:
                          - s390x
          hostNetwork: true
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg

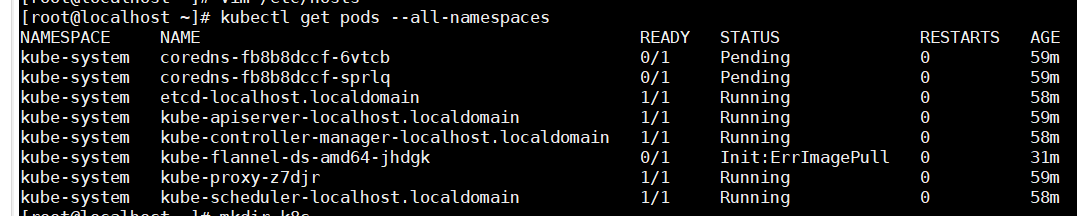

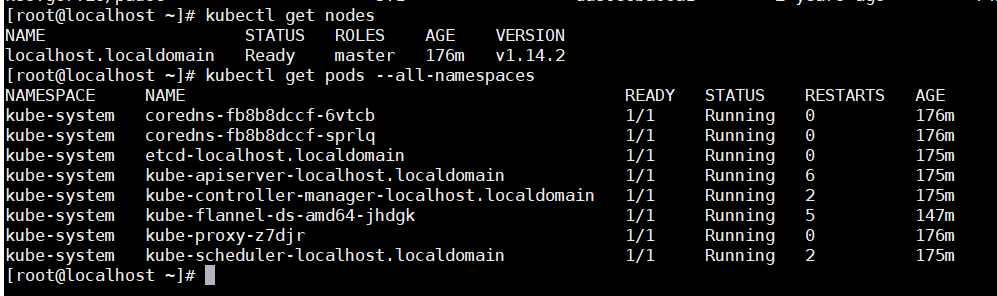

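Now check the pods again.

If the flannel pod reports that its image has not been pulled, pull quay.io/coreos/flannel:v0.11.0-amd64 manually first. Checking again afterwards, the pod shows Running.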

On the worker nodes

1. Install the packages

    yum install -y docker-ce

    yum install -y kubelet kubeadm kubectl

2. Pull the images

Worker nodes only need the following images, plus the flannel image:

    docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.14.2
    docker pull mirrorgooglecontainers/pause-amd64:3.1
    docker pull coredns/coredns:1.3.1

    docker pull quay.io/coreos/flannel:v0.11.0-amd64

    [root@localhost ~]# docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2
    [root@localhost ~]# docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
    [root@localhost ~]# docker tag mirrorgooglecontainers/pause-amd64:3.1 k8s.gcr.io/pause:3.1

    docker rmi mirrorgooglecontainers/kube-proxy-amd64:v1.14.2
    docker rmi coredns/coredns:1.3.1
    docker rmi mirrorgooglecontainers/pause-amd64:3.1

3. Join the cluster, using the token printed when the master was initialized

    kubeadm join 192.168.1.201:6443 --token twtrix.t3tv7lzq1drfutv3 --discovery-token-ca-cert-hash sha256:960ca84a78fce6f91b0407b1cc538640ed6769146c6a28c0f3cb2fea96b1bef6

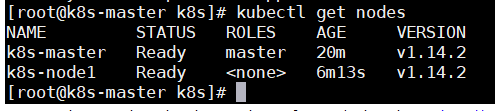

The newly added node is now visible on the master.

IV. Deploy the Dashboard

Perform the following steps on the master node:

1. Create the Dashboard YAML file

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Either run the commands below or edit kubernetes-dashboard.yaml by hand:

    sed -i 's/k8s.gcr.io/loveone/g' kubernetes-dashboard.yaml
    sed -i '/targetPort:/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' kubernetes-dashboard.yaml
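The second sed command has to insert two lines with exact YAML indentation, which is easy to get wrong in a one-line append. As an alternative sketch (assuming GNU sed, whose s command accepts \n in the replacement), the function below copies the indentation of the targetPort line for nodePort and adds type: NodePort at the two-space level of the Service spec. The name expose_nodeport is illustrative.

```shell
# Add "nodePort: <port>" and "type: NodePort" after the targetPort line.
# expose_nodeport is a hypothetical helper name; GNU sed is assumed.
expose_nodeport() {
    file="$1"
    port="$2"
    # \1 re-uses the leading spaces of the targetPort line, so nodePort
    # lands at the same depth; "  type: NodePort" sits directly under spec.
    sed -i -E "s/^( *)targetPort:.*\$/&\\n\\1nodePort: ${port}\\n  type: NodePort/" "$file"
}

# Usage:
#   expose_nodeport kubernetes-dashboard.yaml 30001
#   grep -n -A2 'targetPort:' kubernetes-dashboard.yaml   # inspect the result
```

Run it only once per file; a second run would insert the two lines again.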

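When editing kubernetes-dashboard.yaml by hand, two places need to change. First, in the Dashboard Deployment section, change the image, which by default is pulled from k8s.gcr.io, to image: loveone/kubernetes-dashboard-amd64:v1.10.1. Second, in the Dashboard Service section, add type: NodePort and nodePort: 30001. The file after modification: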

    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    # ------------------- Dashboard Secret ------------------- #
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kube-system
    type: Opaque
    ---
    # ------------------- Dashboard Service Account ------------------- #
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    ---
    # ------------------- Dashboard Role & Role Binding ------------------- #
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    rules:
      # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
    - apiGroups: [""]
      resources: ["secrets"]
      verbs: ["create"]
      # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
    - apiGroups: [""]
      resources: ["configmaps"]
      verbs: ["create"]
      # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
    - apiGroups: [""]
      resources: ["secrets"]
      resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
      verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
    - apiGroups: [""]
      resources: ["configmaps"]
      resourceNames: ["kubernetes-dashboard-settings"]
      verbs: ["get", "update"]
      # Allow Dashboard to get metrics from heapster.
    - apiGroups: [""]
      resources: ["services"]
      resourceNames: ["heapster"]
      verbs: ["proxy"]
    - apiGroups: [""]
      resources: ["services/proxy"]
      resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
      verbs: ["get"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kube-system
    ---
    # ------------------- Dashboard Deployment ------------------- #
    kind: Deployment
    apiVersion: apps/v1beta2
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
          - name: kubernetes-dashboard
            image: loveone/kubernetes-dashboard-amd64:v1.10.1
            ports:
            - containerPort: 8443
              protocol: TCP
            args:
              - --auto-generate-certificates
              # Uncomment the following line to manually specify Kubernetes API server Host
              # If not specified, Dashboard will attempt to auto discover the API server and connect
              # to it. Uncomment only if the default does not work.
              # - --apiserver-host=http://my-address:port
            volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
            livenessProbe:
              httpGet:
                scheme: HTTPS
                path: /
                port: 8443
              initialDelaySeconds: 30
              timeoutSeconds: 30
          volumes:
          - name: kubernetes-dashboard-certs
            secret:
              secretName: kubernetes-dashboard-certs
          - name: tmp-volume
            emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
    ---
    # ------------------- Dashboard Service ------------------- #
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      selector:
        k8s-app: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    rules:
    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - '*'
    - nonResourceURLs:
      - '*'
      verbs:
      - '*'
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kubernetes-dashboard
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kube-system

2. Deploy the Dashboard

    kubectl create -f kubernetes-dashboard.yaml

3. Once it has been created, check that the related services are running

    kubectl get deployment kubernetes-dashboard -n kube-system
    kubectl get pods -n kube-system -o wide
    kubectl get services -n kube-system
    netstat -ntlp|grep 30001

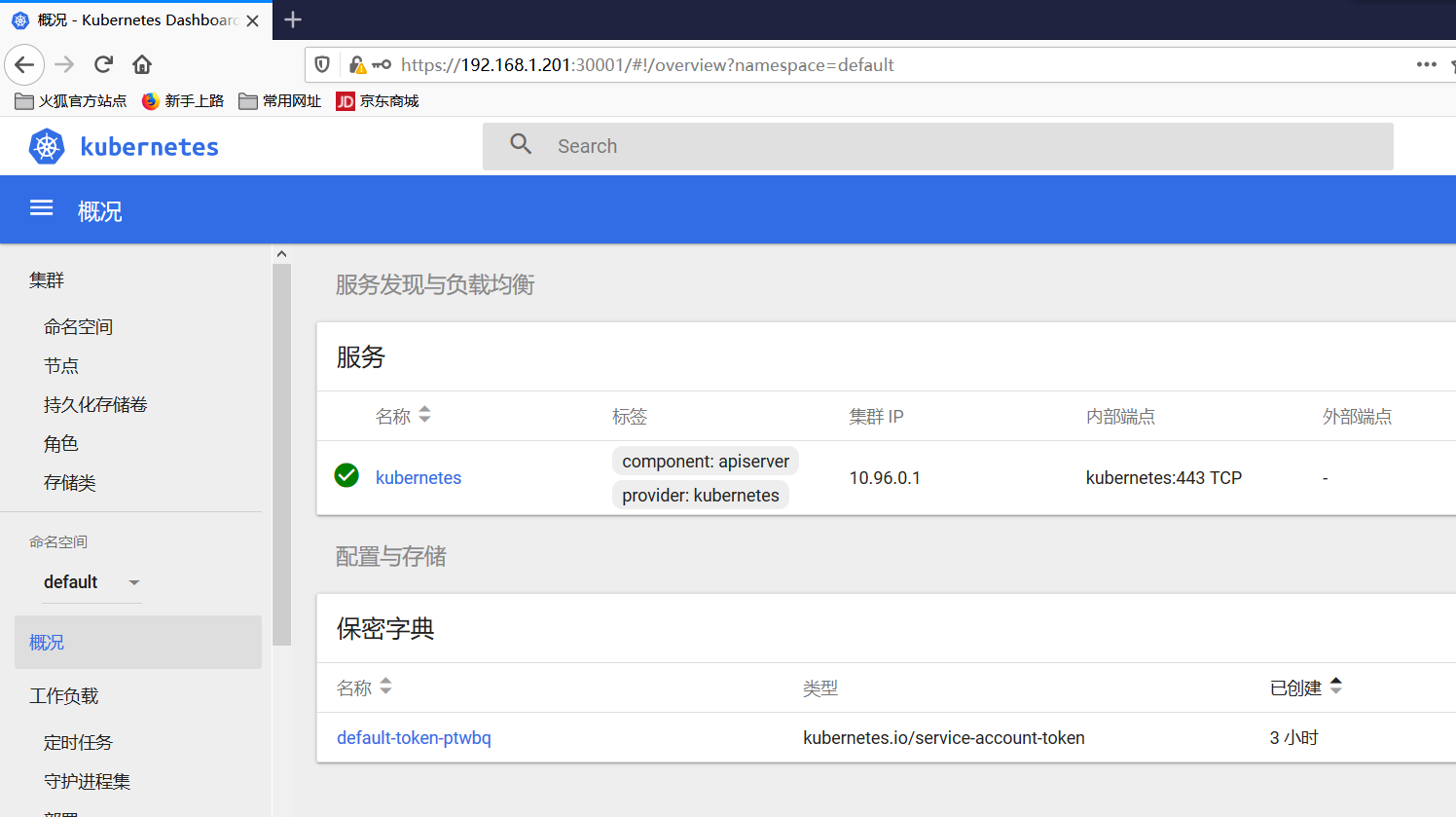

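4. Open the Dashboard in Firefox at https://192.168.1.201:30001 (use Firefox; the 360 browser and IE did not work in repeated tests).

PS: If Chrome blocks the page with its certificate warning, click anywhere on the page and type thisisunsafe on the keyboard. The page refreshes automatically and the Dashboard loads.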

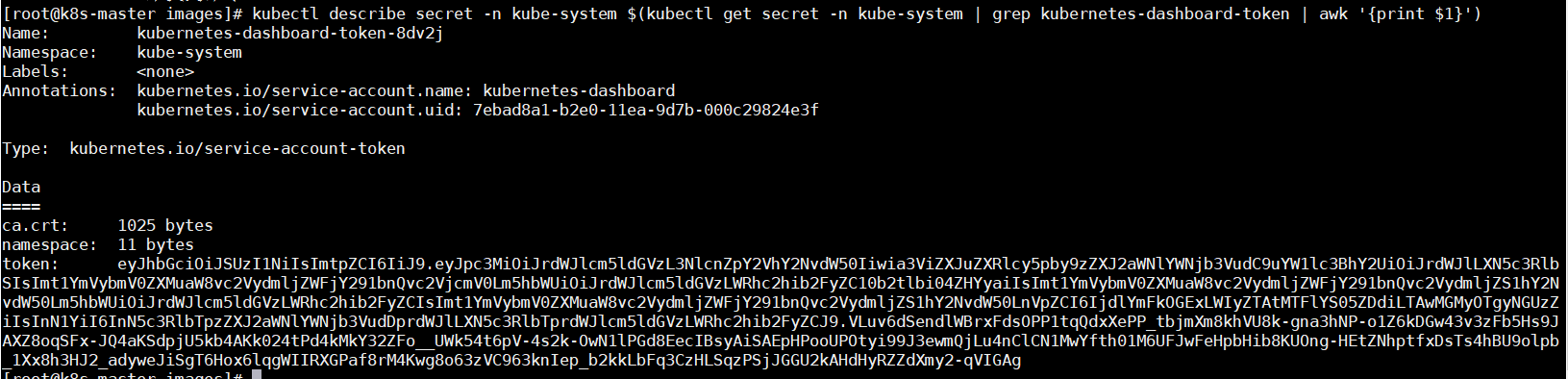

5. Retrieve the authentication token for the Dashboard

    kubectl describe secret -n kube-system $(kubectl get secret -n kube-system | grep kubernetes-dashboard-token | awk '{print $1}')

6. Log in to the Dashboard with the token printed above.

Alternatively, build a kubeconfig file and log in with that (replace the --server address with that of your own API server):

    TOKEN=$(kubectl describe secret -n kube-system $(kubectl get secret -n kube-system | grep kubernetes-dashboard-token | awk '{print $1}')|grep token|awk 'END {print $2}')
    kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true --server=192.168.0.211:6443 --kubeconfig=dashboard.kubeconfig
    kubectl config set-credentials dashboard_user --token=${TOKEN} --kubeconfig=dashboard.kubeconfig
    kubectl config set-context default --cluster=kubernetes --user=dashboard_user --kubeconfig=dashboard.kubeconfig
    kubectl config use-context default --kubeconfig=dashboard.kubeconfig
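The TOKEN pipeline above relies on the token line being the last line of the describe output that contains the word token. A slightly stricter sketch matches the field label exactly instead; extract_token is an illustrative name, and the sample text in the comment is a made-up, shortened example of the describe layout.

```shell
# Read "kubectl describe secret" output on stdin and print the token value.
# extract_token is a hypothetical helper name used only in this sketch.
extract_token() {
    # Only the line whose first field is exactly "token:" carries the value.
    awk '$1 == "token:" { print $2 }'
}

# Usage on the master:
#   name=$(kubectl get secret -n kube-system | awk '/kubernetes-dashboard-token/ {print $1}')
#   TOKEN=$(kubectl describe secret -n kube-system "$name" | extract_token)
#
# Hypothetical input:
#   Name:         kubernetes-dashboard-token-abcde
#   Type:  kubernetes.io/service-account-token
#   ca.crt:     1025 bytes
#   token:      eyJhbGciOi...
#
# The same value is stored base64-encoded in the secret itself:
#   kubectl get secret -n kube-system "$name" -o jsonpath='{.data.token}' | base64 -d
```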

After authentication succeeds, the Dashboard home page looks like this:

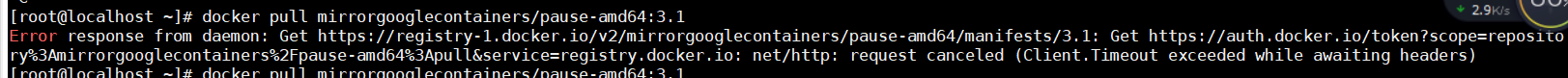

PS: Errors encountered

1.

Solution:

[root@docker-registry ~]# yum install bind-utils    # installs the dig tool

[root@docker-registry ~]# dig @114.114.114.114 registry-1.docker.io

The IP addresses are not fixed and may change, so you may need to try several of them:

[root@localhost ~]# dig @114.114.114.114 registry-1.docker.io

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-9.P2.el7 <<>> @114.114.114.114 registry-1.docker.io

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 50465

;; flags: qr rd ra; QUERY: 1, ANSWER: 8, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;registry-1.docker.io. IN A

;; ANSWER SECTION:

registry-1.docker.io. 1 IN A 54.174.39.59

registry-1.docker.io. 1 IN A 52.201.142.14

registry-1.docker.io. 1 IN A 34.228.211.243

registry-1.docker.io. 1 IN A 52.87.94.70

registry-1.docker.io. 1 IN A 52.202.253.107

registry-1.docker.io. 1 IN A 34.232.31.24

registry-1.docker.io. 1 IN A 52.55.198.220

registry-1.docker.io. 1 IN A 34.201.196.144

;; Query time: 8 msec

;; SERVER: 114.114.114.114#53(114.114.114.114)

;; WHEN: 四 1月 30 15:05:08 CST 2020

;; MSG SIZE rcvd: 166

Pick one of the A records from the output above and map it in the local /etc/hosts file:

[root@localhost ~]# vim /etc/hosts

52.201.142.14 registry-1.docker.io

[root@localhost ~]# docker pull mirrorgooglecontainers/pause-amd64:3.1

3.1: Pulling from mirrorgooglecontainers/pause-amd64

67ddbfb20a22: Pull complete

Digest: sha256:59eec8837a4d942cc19a52b8c09ea75121acc38114a2c68b98983ce9356b8610

Status: Downloaded newer image for mirrorgooglecontainers/pause-amd64:3.1

docker.io/mirrorgooglecontainers/pause-amd64:3.1
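The lookup and the hosts entry above can be combined in a small script. This is a sketch under the same assumptions as the manual steps (resolver 114.114.114.114, any returned A record is acceptable); first_ipv4 and add_hosts_entry are illustrative names.

```shell
# Read "dig +short" output on stdin and print the first line
# that looks like an IPv4 address (CNAME lines are skipped).
first_ipv4() {
    grep -E -m1 '^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
}

# Append "IP host" to a hosts file unless the host is already mapped there.
add_hosts_entry() {
    file="$1"
    ip="$2"
    host="$3"
    grep -qwF -- "$host" "$file" || echo "$ip $host" >> "$file"
}

# Usage (as root):
#   ip=$(dig +short @114.114.114.114 registry-1.docker.io | first_ipv4)
#   add_hosts_entry /etc/hosts "$ip" registry-1.docker.io
```

Since the addresses change over time, remove the entry from /etc/hosts again once pulls stop working and repeat the lookup.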

2. Symptom:

coredns stays in ContainerCreating, and kubectl describe pods/coredns-fb8b8dccf-k9c77 -n kube-system shows the following error:

failed to set bridge addr: "cni0" already has an IP address different from 10.244.1.1/24

Cause:

Pods on the node cannot start because the node's network was not reset after the node was removed.

- node1 had been added to the cluster repeatedly; its network has to be cleaned up before it is added again.

Solution:

Reset Kubernetes and the network on the node, and delete the old network configuration:

    kubeadm reset
    systemctl stop kubelet
    systemctl stop docker
    rm -rf /var/lib/cni/
    rm -rf /var/lib/kubelet/*
    rm -rf /etc/cni/

    rm -rf /etc/kubernetes/

    rm -rf $HOME/.kube
    ifconfig cni0 down
    ifconfig flannel.1 down
    ifconfig docker0 down
    ip link delete cni0
    ip link delete flannel.1
    systemctl start docker
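The network part of this cleanup can be wrapped in a function that is safe to rehearse. The sketch below covers only the CNI-related steps; run, reset_node_network, and the DRY_RUN variable are names invented for this example. With DRY_RUN=1 (the default here) nothing is executed and the commands are only printed.

```shell
# Print a command in dry-run mode, execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Reset kubeadm state and remove leftover CNI configuration and interfaces.
reset_node_network() {
    run kubeadm reset -f          # -f skips the interactive confirmation
    run systemctl stop kubelet
    run systemctl stop docker
    run rm -rf /var/lib/cni/ /etc/cni/
    for dev in cni0 flannel.1; do
        # In a real run, only delete interfaces that actually exist.
        if [ "${DRY_RUN:-1}" = 1 ] || ip link show "$dev" >/dev/null 2>&1; then
            run ip link delete "$dev"
        fi
    done
    run systemctl start docker
}

# Rehearse first, then execute as root on the affected node:
#   reset_node_network
#   DRY_RUN=0 reset_node_network
```

After the reset, the node can be joined again with kubeadm join as in the worker-node section.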

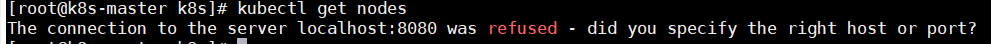

3.

Cause: kubectl on this machine has not been pointed at the Kubernetes master, because the kubeconfig was not set up after the cluster was initialized.

Solution:

    [root@k8s-master ~]# mkdir -p $HOME/.kube
    [root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config