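Transposed Convolution is also called Fractionally Strided Convolution, or, by its popular (but incorrect) name, Deconvolution. For the definition, see the tutorial. This post summarizes the Theano implementation from that tutorial and arrives at a usable piece of Deconvolution code.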

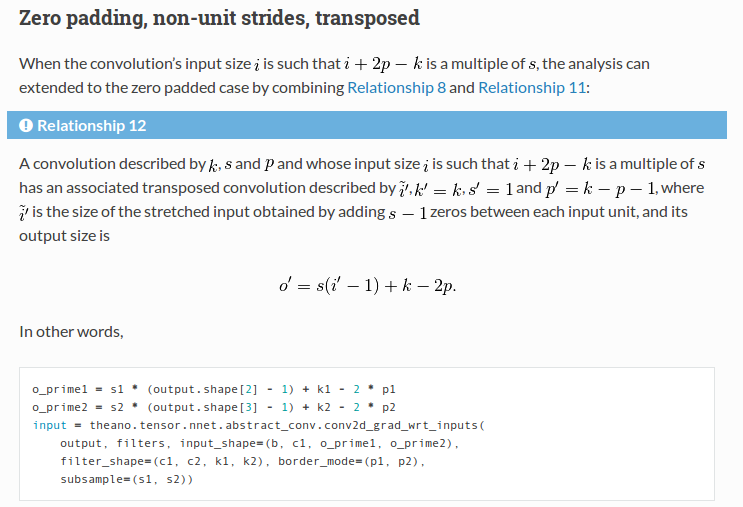

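Deconvolution (everyone calls it that, so I won't fuss over the name) can be implemented as the backward gradient pass of a forward convolution. In Theano, theano.tensor.nnet.abstract_conv.conv2d_grad_wrt_inputs can therefore be used to implement it. The method name roughly means: given the gradient at the output, propagate it back to the gradient with respect to the input, i.e. \(\frac{\partial a}{\partial x}\), where \(a\) and \(x\) are the output and the input respectively.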
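To make the "gradient with respect to the input" idea concrete, here is a minimal 1D sketch in plain Python (no Theano). The function names conv1d and conv1d_grad_wrt_input are made up for this illustration and are not part of any library; padding is assumed to be 0.

```python
def conv1d(x, w, stride=1):
    """Forward 1D convolution (cross-correlation, no padding)."""
    k = len(w)
    n_out = (len(x) - k) // stride + 1
    return [sum(w[j] * x[i * stride + j] for j in range(k))
            for i in range(n_out)]


def conv1d_grad_wrt_input(g, w, input_len, stride=1):
    """Gradient of sum(g * conv1d(x, w)) with respect to x.

    Each g[i] is scattered back, weighted by w, onto the input
    positions that produced output i. This is the transposed convolution.
    """
    dx = [0.0] * input_len
    for i, gi in enumerate(g):
        for j, wj in enumerate(w):
            dx[i * stride + j] += gi * wj
    return dx


w = [1, 2, 3]
g = [1, 10]                      # "small" map to be upsampled
up = conv1d_grad_wrt_input(g, w, input_len=5, stride=2)
print(up)                        # [1.0, 2.0, 13.0, 20.0, 30.0]
```

A length-2 input becomes a length-5 output, which agrees with s * (i - 1) + k = 2 * 1 + 3 = 5. Note that the caller has to pass input_len explicitly, just as conv2d_grad_wrt_inputs asks for input_shape.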

Wrapped up as the usual kind of class:

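    import numpy as np
    import theano
    import theano.tensor as T
    import util  # the author's pylib helpers (util.rand, util.dtype); import path assumed

    # Layer is the base class defined in the same module (see the full code link).
    class DeconvolutionLayer(Layer):
        def __init__(self, input, filter_shape, stride, padding=(0, 0), name='deconv'):
            Layer.__init__(self, input, name, activation=None)
            # Filter weights: randomly initialized, stored as a shared variable.
            W_value = util.rand.normal(filter_shape)
            W_value = np.asarray(W_value, dtype=util.dtype.floatX)
            self.W = theano.shared(value=W_value, borrow=True)
            s1, s2 = stride
            p1, p2 = padding
            k1, k2 = filter_shape[-2:]
            # Output size of the transposed convolution: o' = s * (i - 1) + k - 2p.
            o_prime1 = s1 * (self.input.shape[2] - 1) + k1 - 2 * p1
            o_prime2 = s2 * (self.input.shape[3] - 1) + k2 - 2 * p2
            output_shape = (None, None, o_prime1, o_prime2)
            self.output_shape = output_shape
            # self.input plays the role of the forward convolution's output gradient.
            self.output = T.nnet.abstract_conv.conv2d_grad_wrt_inputs(
                output_grad=self.input, input_shape=output_shape,
                filters=self.W, filter_shape=filter_shape,
                border_mode=padding, subsample=stride)
            self.params = [self.W]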

I don't understand why conv2d_grad_wrt_inputs insists on being given the input_shape argument. The documentation says:

input_shape : [None/int/Constant] * 2 + [Tensor/int/Constant] * 2 The shape of the input (upsampled) parameter. A tuple/list of len 4, with the first two dimensions being None or int or Constant and the last two dimensions being Tensor or int or Constant. Not Optional, since given the output_grad shape and the subsample values, multiple input_shape may be plausible.

That is, given the shape of output_grad and the subsample (i.e. the stride), input_shape is not unique. But I have also fixed the padding, so shouldn't that make it unique?
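One possible answer, sketched below in plain Python: the forward convolution's output size contains a floor, floor((i + 2p - k) / s) + 1, so when the stride is greater than 1, several input sizes can map to the same output size even with the padding fixed. The helper names here are hypothetical and exist only for this illustration.

```python
def conv_out_size(i, k, s, p):
    """Forward convolution output size: floor((i + 2p - k) / s) + 1."""
    return (i + 2 * p - k) // s + 1


def deconv_out_size(o, k, s, p):
    """Smallest input size that the forward convolution maps to o.

    This is the formula used for o_prime1 / o_prime2 in the class above.
    """
    return s * (o - 1) + k - 2 * p


def candidate_input_sizes(o, k, s, p):
    """All input sizes whose forward convolution output size equals o."""
    base = deconv_out_size(o, k, s, p)
    return [i for i in range(base, base + s)
            if conv_out_size(i, k, s, p) == o]


# kernel 3, stride 2, padding 1: inputs of size 5 and 6 both give output 3
print(candidate_input_sizes(3, k=3, s=2, p=1))   # [5, 6]
# with stride 1 the input size is unique
print(candidate_input_sizes(5, k=3, s=1, p=1))   # [5]
```

Under this reading, the class above simply picks the smallest of the plausible sizes.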

It is worth mentioning that padding is usually set to 0.

In the code accompanying the paper on semantic segmentation with FCN (Caffe implementation):

    n.upscore = L.Deconvolution(n.score_fr,
        convolution_param=dict(num_output=21, kernel_size=64, stride=32,
            bias_term=False),
        param=[dict(lr_mult=0)])
    n.score = crop(n.upscore, n.data)

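In other words, it upsamples the feature map by a factor of 32 in a single step and then crops the result to the size of the input. Why can it do that?

Because its first conv layer uses pad = 100:

    n.conv1_1, n.relu1_1 = conv_relu(n.data, 64, pad=100)

This way, the data that gets cropped away was all computed on the zero padding.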
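The size arithmetic behind this can be sketched in plain Python. The layer layout below is an assumption based on the usual VGG16-style FCN-32s (3x3 convs with pad 1 that preserve size, five 2x2 stride-2 poolings that round up as Caffe does, and fc6 as a 7x7 convolution without padding); it is not taken from the snippet above, and the 500x500 input is only an example.

```python
import math


def conv(i, k, s=1, p=0):
    """Forward convolution output size."""
    return (i + 2 * p - k) // s + 1


def pool_ceil(i, k=2, s=2):
    """Pooling output size with rounding up (Caffe behaviour, assumed)."""
    return math.ceil((i - k) / s) + 1


def deconv(i, k, s, p=0):
    """Transposed convolution output size: s * (i - 1) + k - 2p."""
    return s * (i - 1) + k - 2 * p


def fcn32s_sizes(n, first_pad):
    """Return (score_fr size, upscore size) for an n x n input."""
    x = conv(n, 3, p=first_pad)   # conv1_1 with the given padding
    for _ in range(5):            # pool1 .. pool5; the pad-1 convs keep the size
        x = pool_ceil(x)
    x = conv(x, 7)                # fc6 as a 7x7 convolution, no padding
    return x, deconv(x, 64, 32)   # kernel_size=64, stride=32 as in upscore


print(fcn32s_sizes(500, first_pad=100))   # (16, 544)
print(fcn32s_sizes(500, first_pad=1))     # (10, 352)
```

Under these assumptions, pad = 100 makes the upsampled map (544) larger than the 500-pixel input, so there is something to crop. With an ordinary pad of 1 the upsampled map (352) would be smaller than the input and the crop would not be possible.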

[full code](https://github.com/dengdan/pylib/blob/master/src/nnet/layer.py#L94)