1 Redis Cluster

Redis Cluster is the clustering solution introduced in Redis 3.0. It is designed to provide high availability, high performance, and horizontal scalability.

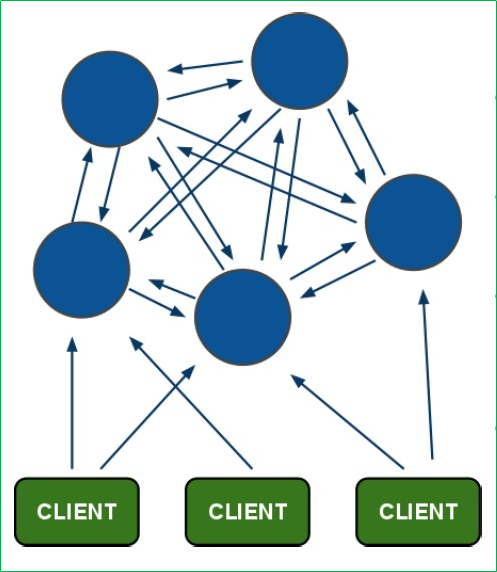

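1.1 Redis Cluster architecture

Architecture details:

(1) All Redis nodes are connected to one another (PING-PONG mechanism) and communicate over a binary protocol that optimizes transfer speed and bandwidth.

(2) A node is marked as failed only when more than half of the nodes in the cluster detect that it is unreachable.

(3) Clients connect directly to Redis nodes; no intermediate proxy layer is needed. A client does not have to connect to every node in the cluster: connecting to any one available node is enough.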

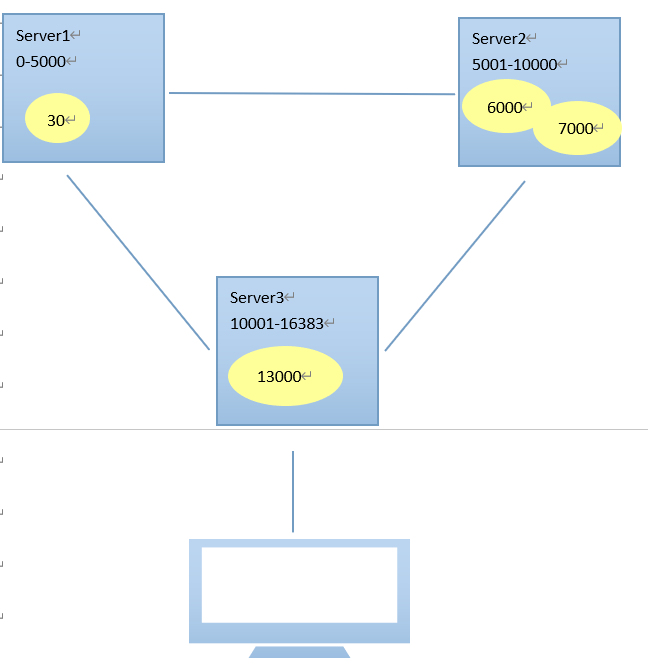

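(4) Redis Cluster maps all physical nodes onto the slots [0-16383], and the cluster maintains the node <-> slot <-> value mapping.

Redis Cluster has 16384 built-in hash slots. To store a key-value pair, Redis computes the CRC16 checksum of the key and takes the result modulo 16384, so every key maps to a hash slot numbered from 0 to 16383. Redis distributes the hash slots across the nodes in roughly equal shares, according to the number of nodes.

For example: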

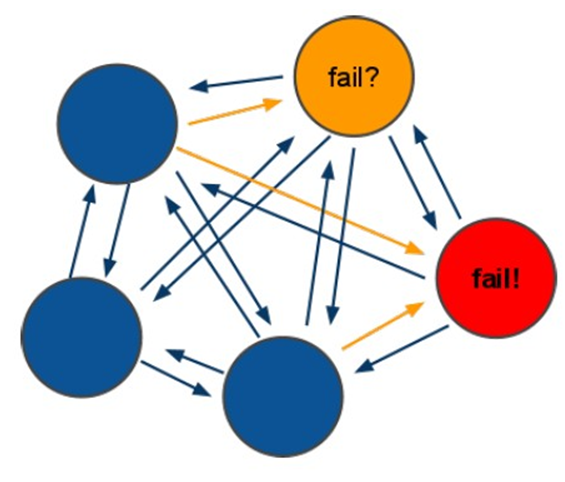
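The key-to-slot rule described above can be sketched in a few lines of Java. This is a minimal illustration, not the Redis source: the class name `HashSlot` and its method names are made up for this example, the CRC16 variant is assumed to be the XMODEM one (polynomial 0x1021, initial value 0) named in the Redis Cluster specification, and hash tags (`{...}` in key names) are deliberately left out.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration only; not part of Redis or Jedis.
final class HashSlot {
    private static final int SLOT_COUNT = 16384;

    // Bitwise CRC16, XMODEM variant: polynomial 0x1021, initial value 0.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int i = 0; i < 8; i++) {
                crc = (crc & 0x8000) != 0 ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF; // keep the register at 16 bits
            }
        }
        return crc;
    }

    // Maps a key to a slot in 0..16383. Simplification: hash tags are ignored.
    static int slotFor(String key) {
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % SLOT_COUNT;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"key1", "foo", "cluster-test"}) {
            System.out.println(key + " -> slot " + slotFor(key));
        }
    }
}
```

Because the slot depends only on the key, any client or node can work out which slot a key belongs to without asking a central coordinator.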

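1.2 Redis Cluster voting: fault tolerance

Minimum number of nodes: 3

(1) Node failure detection: all masters in the cluster take part in the vote. If more than half of the masters cannot communicate with a given master for longer than cluster-node-timeout, that master is considered down.

(2) Cluster failure detection: when does the whole cluster become unavailable (cluster_state:fail)?

* If any master goes down and it has no slave, the cluster enters the fail state. Put another way, the cluster fails whenever the [0-16383] slot mapping is incomplete.

* If more than half of the masters go down, the cluster enters the fail state regardless of whether they have slaves.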
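The two rules above amount to simple majority arithmetic. The sketch below only restates them in Java under the assumptions in this section; the class `FailureRules` and its method names are hypothetical, and real Redis nodes reach these decisions through gossip messages rather than a single function call.

```java
// Hypothetical illustration of the voting rules; not Redis code.
final class FailureRules {

    // A master is considered down when more than half of all masters
    // report that they could not reach it within cluster-node-timeout.
    static boolean isMasterFailed(int totalMasters, int mastersReportingFailure) {
        return mastersReportingFailure * 2 > totalMasters;
    }

    // The cluster is in the fail state when some slot has no serving node
    // (a master died without a slave), or when more than half of the masters are down.
    static boolean isClusterFailed(int totalMasters, int failedMasters, boolean allSlotsCovered) {
        return !allSlotsCovered || failedMasters * 2 > totalMasters;
    }

    public static void main(String[] args) {
        // With 3 masters, 2 reports form a majority but 1 does not.
        System.out.println(isMasterFailed(3, 2));
        System.out.println(isMasterFailed(3, 1));
        // One master down but its slave took over: slots are still covered.
        System.out.println(isClusterFailed(3, 1, true));
    }
}
```

The arithmetic shows why three masters is the minimum: with only two masters, a single surviving master can never form a majority of its own.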

1.3 Installing the Ruby environment

A Redis cluster is managed with the redis-trib.rb script, which needs a Ruby environment to run.

Step 1: Install Ruby and RubyGems.

    yum install ruby
    yum install rubygems

Step 2: Install the Ruby client library for Redis (the redis gem, version 3.2.2).

    gem install redis -v 3.2.2

Step 3: Copy redis-3.2.9/src/redis-trib.rb to the /usr/local/redis-cluster directory.

    cp redis-3.2.9/src/redis-trib.rb /usr/local/redis-cluster/

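1.4 Installing the Redis cluster (RedisCluster)

A Redis cluster needs at least three master servers, plus three slave servers so that each master has a replica.

The instances use ports 7001 to 7006.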

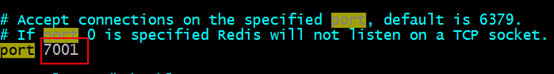

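Step 1: Create the 7001 instance, edit its redis.conf file, and set port to 7001.

Note: creating an instance means copying the bin directory produced by the standalone installation and naming the copy 7001.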

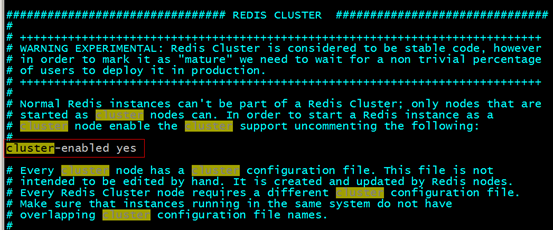

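Step 2: In redis.conf, enable cluster mode by setting cluster-enabled yes.

Step 3: Copy 7001 to create the 7002 to 7006 instances, changing the port in each copy.

Step 4: Start all the instances.

Step 5: Create the Redis cluster.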

    ./redis-trib.rb create --replicas 1 192.168.10.133:7001 192.168.10.133:7002 192.168.10.133:7003 192.168.10.133:7004 192.168.10.133:7005 192.168.10.133:7006
    >>> Creating cluster
    Connecting to node 192.168.10.133:7001: OK
    Connecting to node 192.168.10.133:7002: OK
    Connecting to node 192.168.10.133:7003: OK
    Connecting to node 192.168.10.133:7004: OK
    Connecting to node 192.168.10.133:7005: OK
    Connecting to node 192.168.10.133:7006: OK
    >>> Performing hash slots allocation on 6 nodes...
    Using 3 masters:
    192.168.10.133:7001
    192.168.10.133:7002
    192.168.10.133:7003
    Adding replica 192.168.10.133:7004 to 192.168.10.133:7001
    Adding replica 192.168.10.133:7005 to 192.168.10.133:7002
    Adding replica 192.168.10.133:7006 to 192.168.10.133:7003
    M: d8f6a0e3192c905f0aad411946f3ef9305350420 192.168.10.133:7001
       slots:0-5460 (5461 slots) master
    M: 7a12bc730ddc939c84a156f276c446c28acf798c 192.168.10.133:7002
       slots:5461-10922 (5462 slots) master
    M: 93f73d2424a796657948c660928b71edd3db881f 192.168.10.133:7003
       slots:10923-16383 (5461 slots) master
    S: f79802d3da6b58ef6f9f30c903db7b2f79664e61 192.168.10.133:7004
       replicates d8f6a0e3192c905f0aad411946f3ef9305350420
    S: 0bc78702413eb88eb6d7982833a6e040c6af05be 192.168.10.133:7005
       replicates 7a12bc730ddc939c84a156f276c446c28acf798c
    S: 4170a68ba6b7757e914056e2857bb84c5e10950e 192.168.10.133:7006
       replicates 93f73d2424a796657948c660928b71edd3db881f
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join....
    >>> Performing Cluster Check (using node 192.168.10.133:7001)
    M: d8f6a0e3192c905f0aad411946f3ef9305350420 192.168.10.133:7001
       slots:0-5460 (5461 slots) master
    M: 7a12bc730ddc939c84a156f276c446c28acf798c 192.168.10.133:7002
       slots:5461-10922 (5462 slots) master
    M: 93f73d2424a796657948c660928b71edd3db881f 192.168.10.133:7003
       slots:10923-16383 (5461 slots) master
    M: f79802d3da6b58ef6f9f30c903db7b2f79664e61 192.168.10.133:7004
       slots: (0 slots) master
       replicates d8f6a0e3192c905f0aad411946f3ef9305350420
    M: 0bc78702413eb88eb6d7982833a6e040c6af05be 192.168.10.133:7005
       slots: (0 slots) master
       replicates 7a12bc730ddc939c84a156f276c446c28acf798c
    M: 4170a68ba6b7757e914056e2857bb84c5e10950e 192.168.10.133:7006
       slots: (0 slots) master
       replicates 93f73d2424a796657948c660928b71edd3db881f
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
    [root@localhost-0723 redis]#
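The output shows the 16384 slots split into the ranges 0-5460, 5461-10922, and 10923-16383. The Java sketch below shows one way to arrive at such an even split; it reproduces those three ranges for three masters, but it is an illustration with hypothetical names (`SlotAllocation`, `split`), not a claim about how redis-trib.rb is implemented.

```java
// Hypothetical illustration of an even slot split; not redis-trib.rb code.
final class SlotAllocation {
    static final int SLOT_COUNT = 16384;

    // Returns one {first, last} pair (both inclusive) per master.
    static int[][] split(int masters) {
        int[][] ranges = new int[masters][2];
        double perMaster = (double) SLOT_COUNT / masters;
        double cursor = 0.0;
        int first = 0;
        for (int i = 0; i < masters; i++) {
            int last = (int) Math.round(cursor + perMaster - 1);
            // The final master always takes whatever remains up to slot 16383.
            if (i == masters - 1 || last > SLOT_COUNT - 1) {
                last = SLOT_COUNT - 1;
            }
            ranges[i][0] = first;
            ranges[i][1] = last;
            first = last + 1;
            cursor += perMaster;
        }
        return ranges;
    }

    public static void main(String[] args) {
        for (int[] r : split(3)) {
            System.out.println(r[0] + "-" + r[1] + " (" + (r[1] - r[0] + 1) + " slots)");
        }
    }
}
```

Since 16384 is not divisible by 3, one master ends up with 5462 slots and the other two with 5461, which matches the counts printed by the tool.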

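1.5 Connecting to the cluster with the command-line client

Command: ./redis-cli -h 127.0.0.1 -p 7001 -c

Note: -c makes redis-cli connect in cluster mode, so it follows redirections to the node that owns a key's slot.

    ./redis-cli -p 7006 -c
    127.0.0.1:7006> set key1 123
    -> Redirected to slot [9189] located at 127.0.0.1:7002
    OK
    127.0.0.1:7002>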
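The "Redirected to slot" line is how redis-cli reports a redirection. At the protocol level, a node that does not own the requested slot answers with an error of the form `MOVED <slot> <host>:<port>`, and a cluster-aware client retries against that address. The sketch below parses such a reply; the class `MovedRedirect` is a hypothetical helper for illustration, and it does not open any network connection.

```java
// Hypothetical parser for a MOVED redirection; illustration only.
final class MovedRedirect {
    final int slot;
    final String host;
    final int port;

    private MovedRedirect(int slot, String host, int port) {
        this.slot = slot;
        this.host = host;
        this.port = port;
    }

    // Expects text such as "MOVED 9189 127.0.0.1:7002".
    static MovedRedirect parse(String error) {
        String[] parts = error.trim().split(" ");
        if (parts.length != 3 || !parts[0].equals("MOVED")) {
            throw new IllegalArgumentException("not a MOVED reply: " + error);
        }
        int colon = parts[2].lastIndexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("missing port in: " + error);
        }
        return new MovedRedirect(
                Integer.parseInt(parts[1]),
                parts[2].substring(0, colon),
                Integer.parseInt(parts[2].substring(colon + 1)));
    }

    public static void main(String[] args) {
        MovedRedirect r = parse("MOVED 9189 127.0.0.1:7002");
        System.out.println("retry on " + r.host + ":" + r.port + " for slot " + r.slot);
    }
}
```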

1.6 Commands for inspecting the cluster

Check the cluster state:

    127.0.0.1:7003> cluster info
    cluster_state:ok
    cluster_slots_assigned:16384
    cluster_slots_ok:16384
    cluster_slots_pfail:0
    cluster_slots_fail:0
    cluster_known_nodes:6
    cluster_size:3
    cluster_current_epoch:6
    cluster_my_epoch:3
    cluster_stats_messages_sent:926
    cluster_stats_messages_received:926
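The cluster info reply is a list of `name:value` lines, so a monitoring script can turn it into a map and check a few fields. The following is a minimal sketch under that assumption; `ClusterInfo`, `parse`, and `isHealthy` are hypothetical names, and the health rule (state ok and all 16384 slots assigned) is just one reasonable choice.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that parses the text of a "cluster info" reply.
final class ClusterInfo {

    static Map<String, String> parse(String reply) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : reply.split("\\r?\\n")) {
            int colon = line.indexOf(':');
            if (colon > 0) {
                fields.put(line.substring(0, colon), line.substring(colon + 1).trim());
            }
        }
        return fields;
    }

    // Assumed health rule for this sketch: state is ok and every slot is assigned.
    static boolean isHealthy(Map<String, String> fields) {
        return "ok".equals(fields.get("cluster_state"))
                && "16384".equals(fields.get("cluster_slots_assigned"));
    }

    public static void main(String[] args) {
        String reply = "cluster_state:ok\ncluster_slots_assigned:16384\ncluster_known_nodes:6\ncluster_size:3\n";
        Map<String, String> fields = parse(reply);
        System.out.println("healthy=" + isHealthy(fields) + ", masters=" + fields.get("cluster_size"));
    }
}
```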

List the nodes in the cluster:

    127.0.0.1:7003> cluster nodes
    7a12bc730ddc939c84a156f276c446c28acf798c 127.0.0.1:7002 master - 0 1443601739754 2 connected 5461-10922
    93f73d2424a796657948c660928b71edd3db881f 127.0.0.1:7003 myself,master - 0 0 3 connected 10923-16383
    d8f6a0e3192c905f0aad411946f3ef9305350420 127.0.0.1:7001 master - 0 1443601741267 1 connected 0-5460
    4170a68ba6b7757e914056e2857bb84c5e10950e 127.0.0.1:7006 slave 93f73d2424a796657948c660928b71edd3db881f 0 1443601739250 6 connected
    f79802d3da6b58ef6f9f30c903db7b2f79664e61 127.0.0.1:7004 slave d8f6a0e3192c905f0aad411946f3ef9305350420 0 1443601742277 4 connected
    0bc78702413eb88eb6d7982833a6e040c6af05be 127.0.0.1:7005 slave 7a12bc730ddc939c84a156f276c446c28acf798c 0 1443601740259 5 connected
    127.0.0.1:7003>
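Each line of the cluster nodes output is a space-separated record: node ID, address, flags, master ID (or `-` for a master), ping and pong timestamps, config epoch, link state, and then any slot ranges the node owns. The sketch below reads one such line in the format shown above; `ClusterNodeLine` is a hypothetical class for illustration, and newer Redis versions may add details to the address field that this sketch does not interpret.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical parser for one line of "cluster nodes" output.
final class ClusterNodeLine {
    final String id;
    final String address;
    final boolean master;
    final String masterId;    // "-" when the node is itself a master
    final List<String> slots; // e.g. ["5461-10922"]; empty for slaves

    private ClusterNodeLine(String id, String address, boolean master,
                            String masterId, List<String> slots) {
        this.id = id;
        this.address = address;
        this.master = master;
        this.masterId = masterId;
        this.slots = slots;
    }

    static ClusterNodeLine parse(String line) {
        String[] f = line.trim().split("\\s+");
        if (f.length < 8) {
            throw new IllegalArgumentException("unexpected line: " + line);
        }
        // Flags are comma-separated, for example "myself,master".
        boolean master = Arrays.asList(f[2].split(",")).contains("master");
        List<String> slots = new ArrayList<>(Arrays.asList(f).subList(8, f.length));
        return new ClusterNodeLine(f[0], f[1], master, f[3], slots);
    }

    public static void main(String[] args) {
        ClusterNodeLine n = parse("93f73d2424a796657948c660928b71edd3db881f 127.0.0.1:7003 "
                + "myself,master - 0 0 3 connected 10923-16383");
        System.out.println(n.address + " master=" + n.master + " slots=" + n.slots);
    }
}
```

Looking up a node ID this way is handy for the maintenance commands in the following sections, which take node IDs as arguments.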

1.7 Maintaining nodes

After the cluster has been created, more nodes can be added to it.

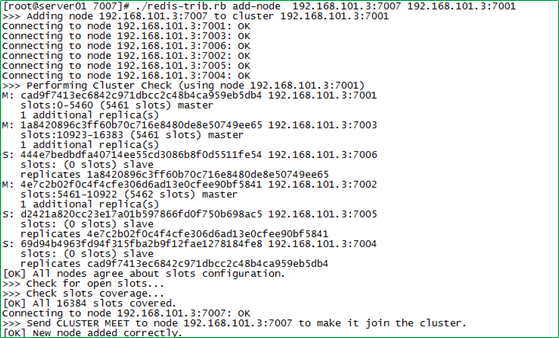

1.7.1 Adding a master node

* First create the 7007 node.

* Add 7007 to the cluster as a new node.

Run: ./redis-trib.rb add-node 127.0.0.1:7007 127.0.0.1:7001

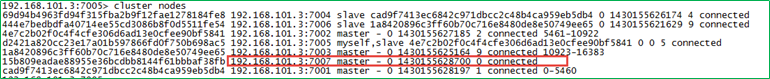

* List the cluster nodes to confirm that 7007 has joined the cluster.

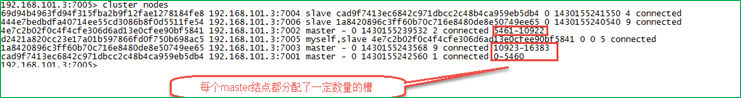

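1.7.2 Reassigning hash slots (data migration)

A newly added master holds no hash slots; slots must be assigned to it before it can store data.

* Check how the slots are currently assigned.

The cluster has 16384 slots and each master owns its own share of them; the output of cluster nodes shows which slots each node holds.

* Assign slots to the newly added 7007 node.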

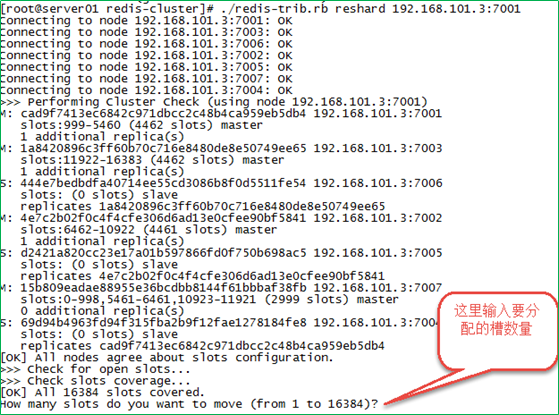

* Step 1: Connect to the cluster (any available node will do).

      ./redis-trib.rb reshard 192.168.10.133:7001

* Step 2: Enter the number of slots to move.

Enter 3000 to assign 3000 slots to the target node.

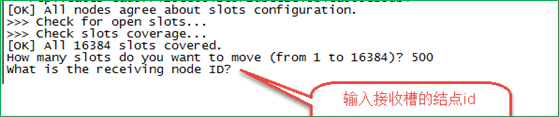

* Step 3: Enter the ID of the node that will receive the slots.

Enter: 15b809eadae88955e36bcdbb8144f61bbbaf38fb

Note: the slots are going to 7007, and cluster nodes shows that its node ID is:

15b809eadae88955e36bcdbb8144f61bbbaf38fb

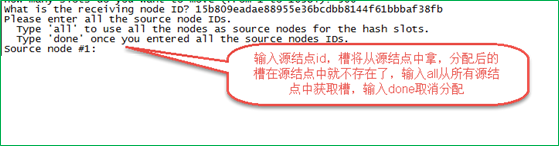

* Step 4: Enter the source node IDs.

Enter: all (this uses every existing master as a source)

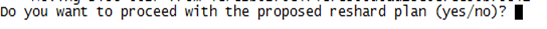

* Step 5: Enter yes to start moving the slots to the target node.

Enter: yes

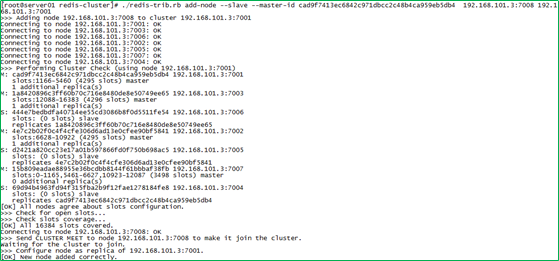

1.7.3 Adding a slave node

* Add the 7008 node as a slave of 7007.

Command: ./redis-trib.rb add-node --slave --master-id <master node ID> <new node ip:port> <existing node ip:port> (any node already in the cluster can serve as the existing node)

Run the following command:

    ./redis-trib.rb add-node --slave --master-id 35da64607a02c9159334a19164e68dd95a3b943c 192.168.10.103:7008 192.168.10.103:7001

35da64607a02c9159334a19164e68dd95a3b943c is the ID of the 7007 node, which can be found with cluster nodes.

Note: if the node's cluster configuration has already been written to the file named by cluster-config-file (nodes.conf by default when cluster-config-file is not set), the command may fail with:

    [ERR] Node XXXXXX is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0

The fix is to delete the generated nodes.conf file and then run ./redis-trib.rb add-node again.

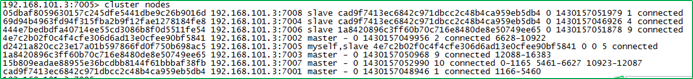

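* List the cluster nodes to confirm that the newly added 7008 is a slave of 7007.

1.7.4 Removing a node

Command: ./redis-trib.rb del-node 127.0.0.1:7005 4b45eb75c8b428fbd77ab979b85080146a9bc017

Removing a node that still owns hash slots fails with the following error:

[ERR] Node 127.0.0.1:7005 is not empty! Reshard data away and try again.

The hash slots held by that node must first be moved to other nodes (see the section on reassigning hash slots).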

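1.8 Connecting to the cluster with Jedis

The cluster ports must be reachable through the firewall; either open them or simply turn the firewall off.

    service iptables stop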

1.8.1 Code implementation

Create a JedisCluster object to connect to the Redis cluster.

    import java.util.HashSet;
    import java.util.Set;

    import org.junit.Test;

    import redis.clients.jedis.HostAndPort;
    import redis.clients.jedis.JedisCluster;

    public class JedisClusterTest {

        @Test
        public void testJedisCluster() throws Exception {
            // Create the JedisCluster object; keep it as a singleton in the application.
            Set<HostAndPort> nodes = new HashSet<>();
            nodes.add(new HostAndPort("192.168.10.133", 7001));
            nodes.add(new HostAndPort("192.168.10.133", 7002));
            nodes.add(new HostAndPort("192.168.10.133", 7003));
            nodes.add(new HostAndPort("192.168.10.133", 7004));
            nodes.add(new HostAndPort("192.168.10.133", 7005));
            nodes.add(new HostAndPort("192.168.10.133", 7006));
            JedisCluster cluster = new JedisCluster(nodes);
            // JedisCluster methods correspond one-to-one with Redis commands.
            cluster.set("cluster-test", "my jedis cluster test");
            String result = cluster.get("cluster-test");
            System.out.println(result);
            // Close the JedisCluster object when the program ends.
            cluster.close();
        }
    }

1.8.2 Using Spring

Configure applicationContext.xml:

    <!-- Connection pool configuration -->
    <bean id="jedisPoolConfig" class="redis.clients.jedis.JedisPoolConfig">
        <!-- Maximum number of connections -->
        <property name="maxTotal" value="30" />
        <!-- Maximum number of idle connections -->
        <property name="maxIdle" value="10" />
        <!-- Maximum number of connections examined in each eviction run -->
        <property name="numTestsPerEvictionRun" value="1024" />
        <!-- Interval between eviction runs (milliseconds) -->
        <property name="timeBetweenEvictionRunsMillis" value="30000" />
        <!-- Minimum time a connection must sit idle before it may be evicted -->
        <property name="minEvictableIdleTimeMillis" value="1800000" />
        <!-- Evict a connection idle longer than this value while more than minIdle connections are idle -->
        <property name="softMinEvictableIdleTimeMillis" value="10000" />
        <!-- Maximum wait in milliseconds when borrowing a connection; a negative value blocks indefinitely; default -1 -->
        <property name="maxWaitMillis" value="1500" />
        <!-- Validate a connection when it is borrowed; default false -->
        <property name="testOnBorrow" value="true" />
        <!-- Validate connections while they are idle; default false -->
        <property name="testWhileIdle" value="true" />
        <!-- Whether to block when the pool is exhausted: false throws an exception, true blocks until timeout; default true -->
        <property name="blockWhenExhausted" value="false" />
    </bean>

    <!-- Redis cluster -->
    <bean id="jedisCluster" class="redis.clients.jedis.JedisCluster">
        <constructor-arg index="0">
            <set>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7001" />
                </bean>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7002" />
                </bean>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7003" />
                </bean>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7004" />
                </bean>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7005" />
                </bean>
                <bean class="redis.clients.jedis.HostAndPort">
                    <constructor-arg index="0" value="192.168.101.3" />
                    <constructor-arg index="1" value="7006" />
                </bean>
            </set>
        </constructor-arg>
        <constructor-arg index="1" ref="jedisPoolConfig" />
    </bean>

Test code:

    private ApplicationContext applicationContext;

    @Before
    public void init() {
        applicationContext = new ClassPathXmlApplicationContext("classpath:applicationContext.xml");
    }

    // Redis cluster
    @Test
    public void testJedisCluster() {
        JedisCluster jedisCluster = (JedisCluster) applicationContext.getBean("jedisCluster");

        jedisCluster.set("name", "zhangsan");
        String value = jedisCluster.get("name");
        System.out.println(value);
    }
