Graphs and TensorBoard

What is a graph structure:

Graph-related operations

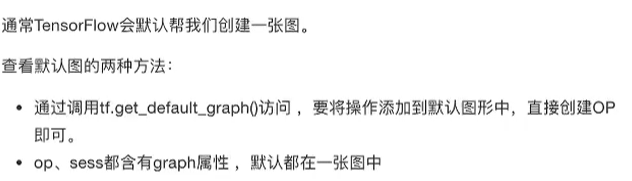

Default graph:

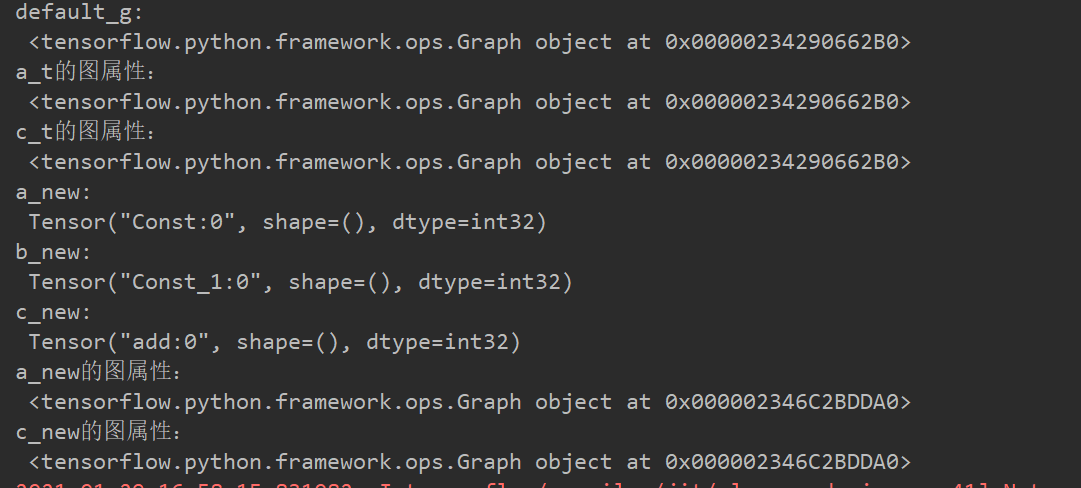

    import tensorflow as tf


    def graph_demo():
        """
        Graph demo
        :return:
        """
        # Sessions require graph mode, so turn eager execution off first
        tf.compat.v1.disable_eager_execution()

        # Addition implemented with TensorFlow
        a_t = tf.constant(2)
        b_t = tf.constant(3)
        c_t = tf.add(a_t, b_t)

        # Inspect the default graph
        # Method 1: call the function
        default_g = tf.compat.v1.get_default_graph()
        print("default_g: ", default_g)

        # Method 2: read the graph attribute
        print("graph of a_t: ", a_t.graph)
        print("graph of c_t: ", c_t.graph)

        # Open a session
        sess = tf.compat.v1.Session()
        c_t_value = sess.run(c_t)
        print("c_t_value: ", c_t_value)
        print("graph of sess: ", sess.graph)
        sess.close()

        return None

Custom graph:

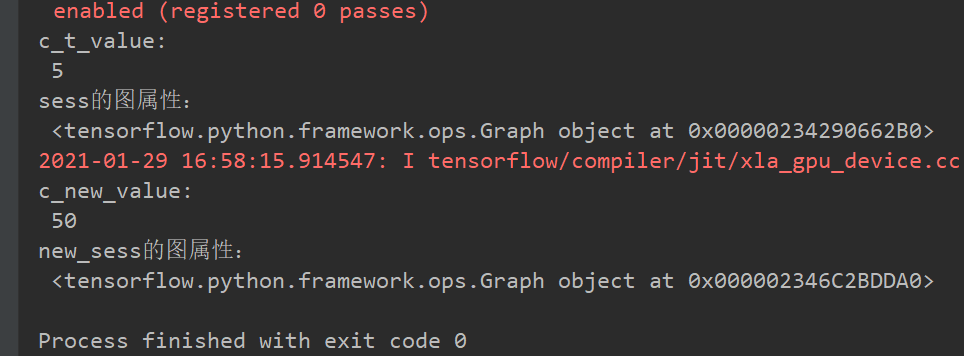

    import tensorflow as tf


    def graph_demo():
        """
        Graph demo
        :return:
        """
        # Sessions require graph mode, so turn eager execution off first
        tf.compat.v1.disable_eager_execution()

        # Addition implemented with TensorFlow
        a_t = tf.constant(2)
        b_t = tf.constant(3)
        c_t = tf.add(a_t, b_t)

        # Inspect the default graph
        # Method 1: call the function
        default_g = tf.compat.v1.get_default_graph()
        print("default_g: ", default_g)

        # Method 2: read the graph attribute
        print("graph of a_t: ", a_t.graph)
        print("graph of c_t: ", c_t.graph)

        # Custom graph
        new_g = tf.Graph()
        # Define data and operations inside our own graph
        with new_g.as_default():
            a_new = tf.constant(20)
            b_new = tf.constant(30)
            c_new = a_new + b_new
            print("a_new: ", a_new)
            print("b_new: ", b_new)
            print("c_new: ", c_new)
            print("graph of a_new: ", a_new.graph)
            print("graph of c_new: ", c_new.graph)

        # Open a session on the default graph
        with tf.compat.v1.Session() as sess:
            # c_t_value = sess.run(c_t)
            # Running tensors/ops from the custom graph here fails,
            # because this session is bound to the default graph:
            # c_new_value = sess.run((c_new))
            # print("c_new_value: ", c_new_value)
            print("c_t_value: ", c_t.eval())
            print("graph of sess: ", sess.graph)

        # Open a session on new_g
        with tf.compat.v1.Session(graph=new_g) as new_sess:
            c_new_value = new_sess.run((c_new))
            print("c_new_value: ", c_new_value)
            print("graph of new_sess: ", new_sess.graph)

        return None

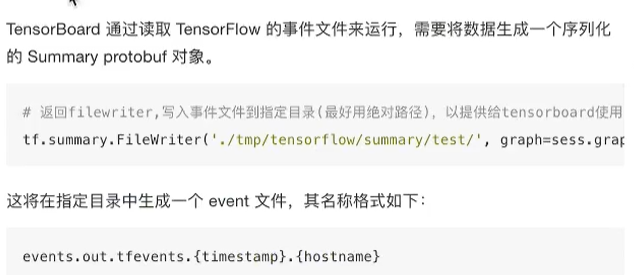

TensorBoard visualization:

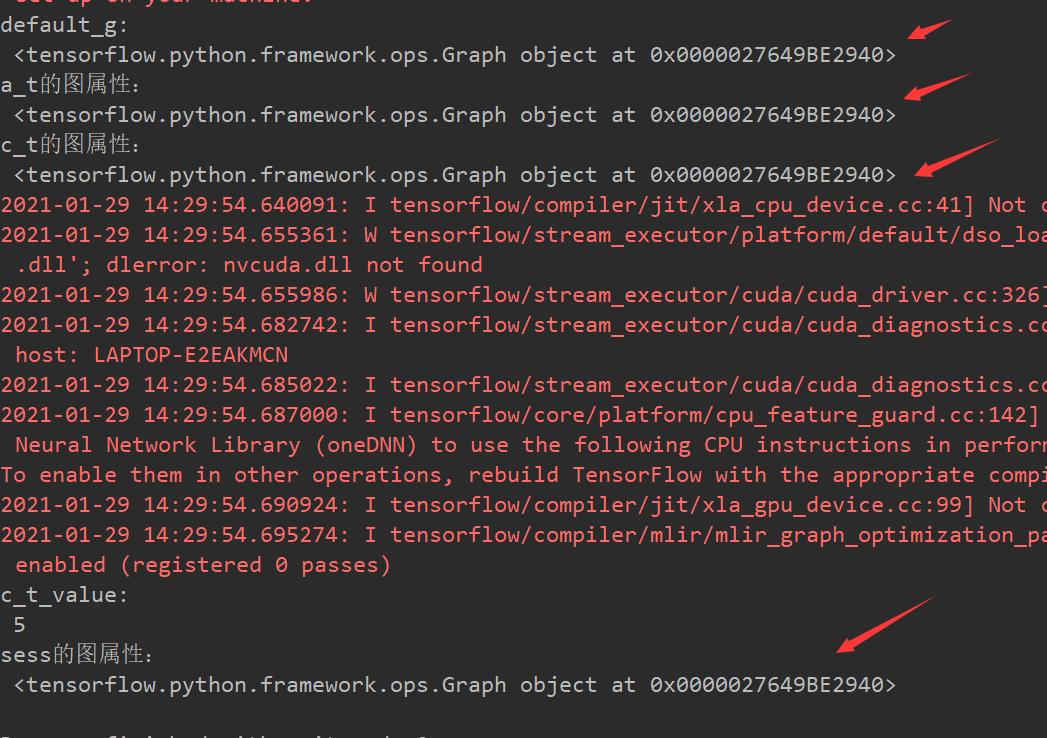

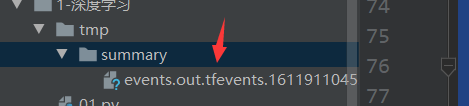

Serializing data to an events file:

    import tensorflow as tf


    def graph_demo():
        """
        Graph demo
        :return:
        """
        # Sessions require graph mode, so turn eager execution off first
        tf.compat.v1.disable_eager_execution()

        # Addition implemented with TensorFlow
        a_t = tf.constant(2)
        b_t = tf.constant(3)
        c_t = tf.add(a_t, b_t)

        # Inspect the default graph
        # Method 1: call the function
        default_g = tf.compat.v1.get_default_graph()
        print("default_g: ", default_g)

        # Method 2: read the graph attribute
        print("graph of a_t: ", a_t.graph)
        print("graph of c_t: ", c_t.graph)

        # Custom graph
        new_g = tf.Graph()
        # Define data and operations inside our own graph
        with new_g.as_default():
            a_new = tf.constant(20)
            b_new = tf.constant(30)
            c_new = a_new + b_new
            print("a_new: ", a_new)
            print("b_new: ", b_new)
            print("c_new: ", c_new)
            print("graph of a_new: ", a_new.graph)
            print("graph of c_new: ", c_new.graph)

        # Open a session on the default graph
        with tf.compat.v1.Session() as sess:
            # c_t_value = sess.run(c_t)
            # Running tensors/ops from the custom graph here fails,
            # because this session is bound to the default graph:
            # c_new_value = sess.run((c_new))
            # print("c_new_value: ", c_new_value)
            print("c_t_value: ", c_t.eval())
            print("graph of sess: ", sess.graph)
            # 1) Write the graph to disk, producing an events file
            tf.compat.v1.summary.FileWriter("./tmp/summary", graph=sess.graph)

        # Open a session on new_g
        with tf.compat.v1.Session(graph=new_g) as new_sess:
            c_new_value = new_sess.run((c_new))
            print("c_new_value: ", c_new_value)
            print("graph of new_sess: ", new_sess.graph)

        return None

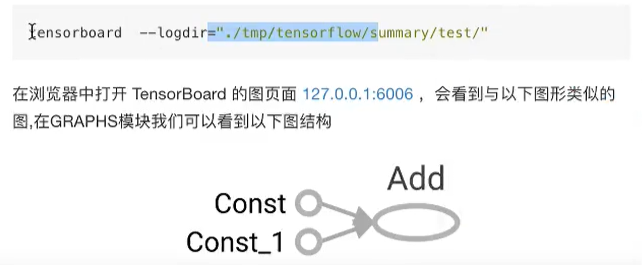

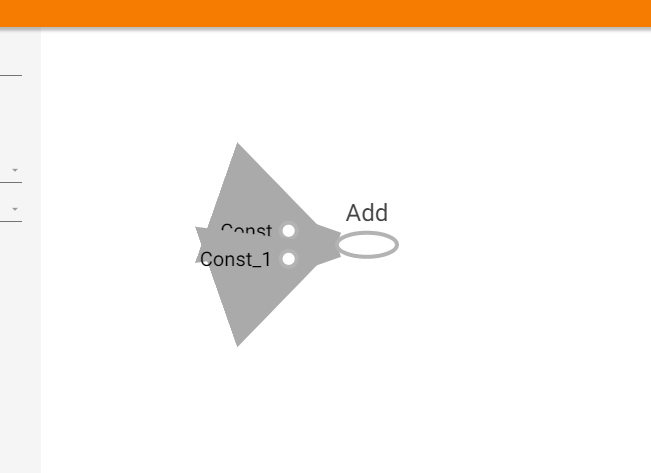
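To check that serialization actually produced something, one option is to list the log directory afterwards. The sketch below is only an illustration: it writes to a temporary directory (an assumption, used here instead of `./tmp/summary`), and it builds its own small graph with hypothetical names `a`, `b`, `c` so that it runs on its own.

```python
import os
import tempfile

import tensorflow as tf

# Build a small graph explicitly so the sketch does not depend on global state.
g = tf.Graph()
with g.as_default():
    a = tf.constant(2, name="a")
    b = tf.constant(3, name="b")
    c = tf.add(a, b, name="c")

    # Hypothetical temporary log directory, used here instead of ./tmp/summary.
    logdir = tempfile.mkdtemp()
    # FileWriter must be created in graph mode; inside as_default() that holds.
    writer = tf.compat.v1.summary.FileWriter(logdir, graph=g)
    writer.close()  # flush and close the events file

# Events files are named with the prefix "events.out.tfevents".
event_files = [f for f in os.listdir(logdir) if f.startswith("events.out.tfevents")]
print(event_files)
```

The directory passed to `FileWriter` is the one TensorBoard is later pointed at.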

Starting TensorBoard:

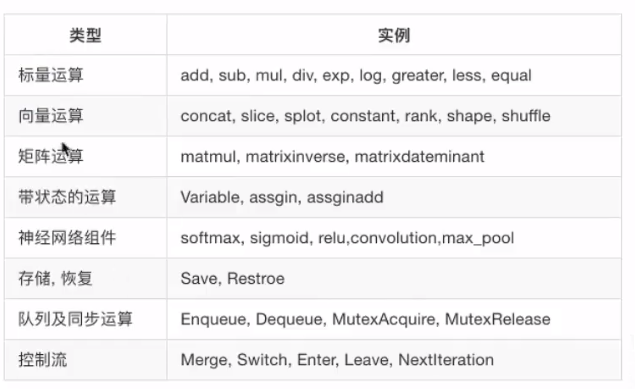

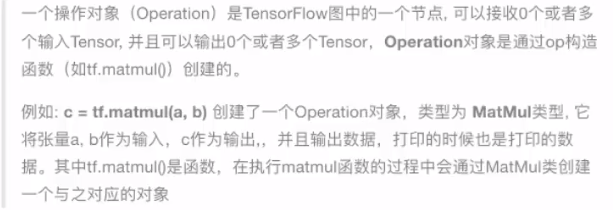

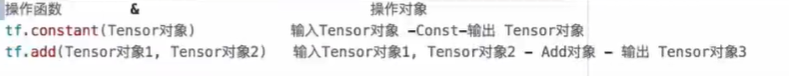

OP:

Common ops:

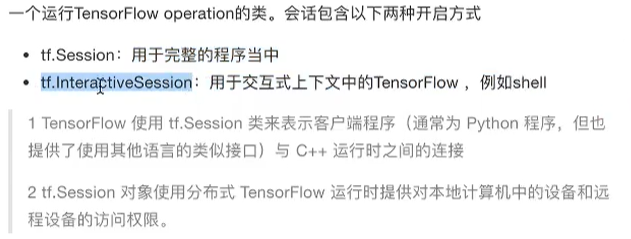

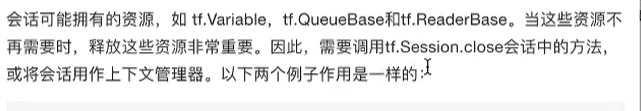
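The difference between an op (a node in the graph) and a tensor (the value an op outputs) can be seen by inspecting a small graph. This is a minimal sketch; the names `a`, `b`, `c` are chosen for illustration only.

```python
import tensorflow as tf

g = tf.Graph()
with g.as_default():
    a = tf.constant(2, name="a")
    b = tf.constant(3, name="b")
    c = tf.add(a, b, name="c")

# Each tensor is produced by an op; the tensor name is "<op name>:<output index>".
print("tensor name:", c.name)
print("op name:    ", c.op.name)
print("op type of a:", a.op.type)

# List every op registered in the graph.
op_names = [op.name for op in g.get_operations()]
print("ops in graph:", op_names)
```

Giving ops an explicit `name` makes the graph easier to read in TensorBoard.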

Sessions

When a session is created:

Session run():

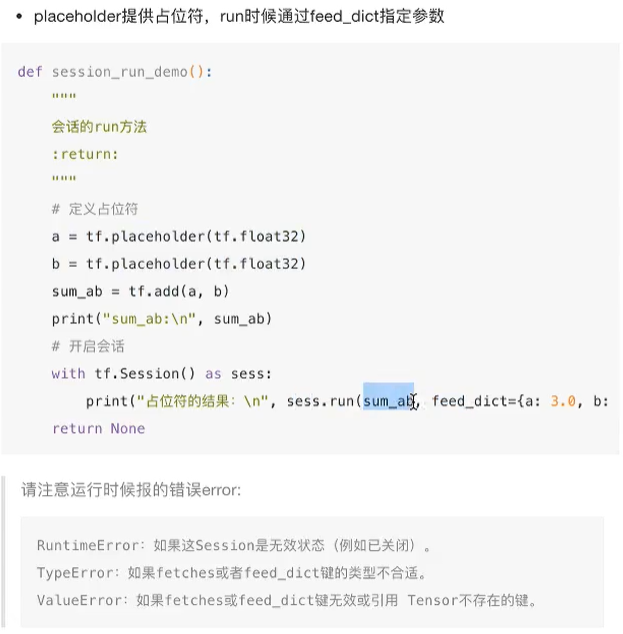
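As a rough illustration of `run`, the fetches argument can be a single tensor, a list, or a dict, and the result mirrors that structure. The sketch below assumes a small explicit graph with illustrative names `total` and `product`.

```python
import tensorflow as tf

g = tf.Graph()
with g.as_default():
    a = tf.constant(2, name="a")
    b = tf.constant(3, name="b")
    total = tf.add(a, b, name="total")
    product = tf.multiply(a, b, name="product")

with tf.compat.v1.Session(graph=g) as sess:
    # Fetch a single tensor: returns a single value.
    total_value = sess.run(total)
    # Fetch a list of tensors: returns a list of values in the same order.
    both = sess.run([total, product])
    # Fetch a dict of tensors: returns a dict with the same keys.
    as_dict = sess.run({"sum": total, "prod": product})

print(total_value, both, as_dict)
```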

Feed operation:

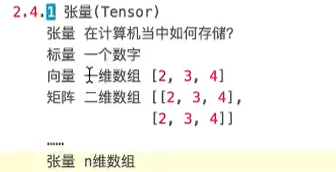

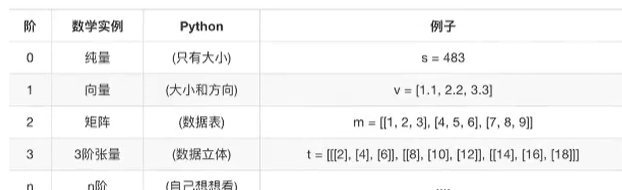
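A feed supplies values for placeholders at the moment `run` is called. The following is a minimal sketch, assuming two float placeholders with illustrative names `x` and `y`.

```python
import tensorflow as tf

g = tf.Graph()
with g.as_default():
    # Placeholders have no value until one is fed at run time.
    x = tf.compat.v1.placeholder(tf.float32, shape=[None], name="x")
    y = tf.compat.v1.placeholder(tf.float32, shape=[None], name="y")
    z = tf.add(x, y)

with tf.compat.v1.Session(graph=g) as sess:
    # feed_dict maps each placeholder to the concrete data for this run.
    z_value = sess.run(z, feed_dict={x: [1.0, 2.0], y: [10.0, 20.0]})

print(z_value)
```

Running `z` without feeding both placeholders raises an error, since the graph has no value to compute from.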

Tensors:

Tensor types:

Tensor rank:

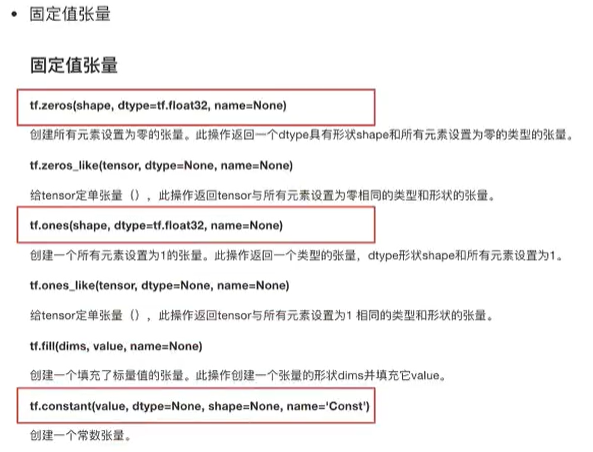

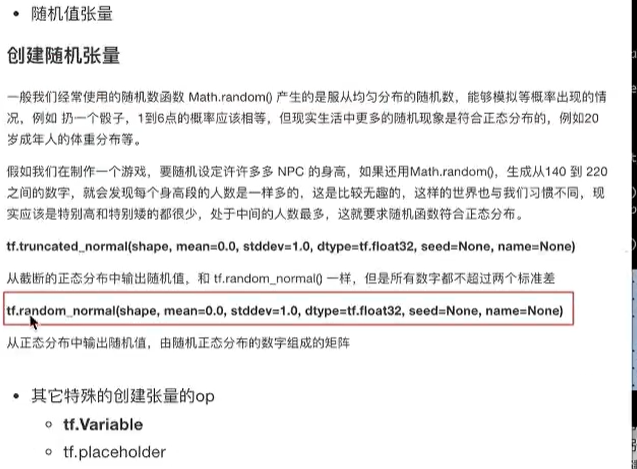
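Rank and type can both be read directly from a tensor. A short sketch, using the same kind of constants as the tensor demo in these notes (variable names are illustrative):

```python
import tensorflow as tf

scalar = tf.constant(4.0)                        # rank 0
vector = tf.constant([1, 2, 3, 4])               # rank 1
matrix = tf.constant([[4], [9], [16], [25]])     # rank 2

# The rank is the number of dimensions of the static shape.
ranks = [t.shape.ndims for t in (scalar, vector, matrix)]
print("ranks:", ranks)

# Python floats default to float32 and Python ints to int32.
print("dtypes:", scalar.dtype, vector.dtype, matrix.dtype)
```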

Instructions for creating tensors:

    import tensorflow as tf


    def tensor_demo():
        """
        Tensor demo
        :return:
        """
        # Placeholders only exist in graph mode
        tf.compat.v1.disable_eager_execution()

        tensor1 = tf.constant(4.0)
        tensor2 = tf.constant([1, 2, 3, 4])
        linear_squares = tf.constant([[4], [9], [16], [25]], dtype=tf.int32)
        print("tensor1: ", tensor1)
        print("tensor2: ", tensor2)
        print("linear_squares_before: ", linear_squares)

        # Changing the tensor type: tf.cast returns a new tensor,
        # the original tensor is left unchanged
        l_cast = tf.cast(linear_squares, dtype=tf.float32)
        print("linear_squares_after: ", linear_squares)
        print("l_cast: ", l_cast)

        # Updating/changing the static shape
        # Define placeholders
        # Static shapes that are not fully fixed yet
        a_p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, None])
        b_p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, 10])
        c_p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[3, 2])
        print("a_p: ", a_p)
        print("b_p: ", b_p)
        print("c_p: ", c_p)

        # Fill in the parts of the shape that are still undetermined
        # a_p.set_shape([2, 3])
        # b_p.set_shape([2, 10])
        # c_p.set_shape([2, 3])

        # Changing the dynamic shape: tf.reshape returns a new tensor
        a_p_reshape = tf.reshape(a_p, shape=[2, 3, 1])
        print("a_p: ", a_p)
        # print("b_p: ", b_p)
        print("a_p_reshape: ", a_p_reshape)
        c_p_reshape = tf.reshape(c_p, shape=[2, 3])
        print("c_p: ", c_p)
        print("c_p_reshape: ", c_p_reshape)
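The two kinds of shape change in the demo behave differently: `set_shape` fills in unknown dimensions of the same tensor, while `tf.reshape` creates a new tensor and leaves the original alone. A minimal sketch of that contrast, built in its own graph (the names are illustrative):

```python
import tensorflow as tf

g = tf.Graph()
with g.as_default():
    a_p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, None])
    shape_before = a_p.shape.as_list()

    # Static shape: fills in the unknown dimensions of a_p itself.
    a_p.set_shape([2, 3])
    shape_after = a_p.shape.as_list()

    # Dynamic shape: returns a new tensor; the element count must match (2*3 == 3*2).
    a_r = tf.reshape(a_p, shape=[3, 2])

print("before set_shape:", shape_before)
print("after set_shape: ", shape_after)
print("reshaped tensor: ", a_r.shape.as_list(), "original:", a_p.shape.as_list())
```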