1. Using the Analyzer

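The Analyzer enters through the IndexWriter constructor, which reads it from the `IndexWriterConfig` (`analyzer = config.getAnalyzer();`):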

```java
/**
 * Constructs a new IndexWriter per the settings given in <code>conf</code>.
 * If you want to make "live" changes to this writer instance, use
 * {@link #getConfig()}.
 *
 * <p>
 * <b>NOTE:</b> after this writer is created, the given configuration instance
 * cannot be passed to another writer.
 *
 * @param d
 *          the index directory. The index is either created or appended
 *          according to <code>conf.getOpenMode()</code>.
 * @param conf
 *          the configuration settings according to which IndexWriter should
 *          be initialized.
 * @throws IOException
 *           if the directory cannot be read/written to, or if it does not
 *           exist and <code>conf.getOpenMode()</code> is
 *           <code>OpenMode.APPEND</code> or if there is any other low-level
 *           IO error
 */
public IndexWriter(Directory d, IndexWriterConfig conf) throws IOException {
  enableTestPoints = isEnableTestPoints();
  conf.setIndexWriter(this); // prevent reuse by other instances
  config = conf;
  infoStream = config.getInfoStream();
  softDeletesEnabled = config.getSoftDeletesField() != null;
  // obtain the write.lock. If the user configured a timeout,
  // we wrap with a sleeper and this might take some time.
  writeLock = d.obtainLock(WRITE_LOCK_NAME);

  boolean success = false;
  try {
    directoryOrig = d;
    directory = new LockValidatingDirectoryWrapper(d, writeLock);

    analyzer = config.getAnalyzer(); // the Analyzer supplied via IndexWriterConfig is stored here
    mergeScheduler = config.getMergeScheduler();
    mergeScheduler.setInfoStream(infoStream);
    codec = config.getCodec();
    OpenMode mode = config.getOpenMode();
    final boolean indexExists;
    final boolean create;
    if (mode == OpenMode.CREATE) {
      indexExists = DirectoryReader.indexExists(directory);
      create = true;
    } else if (mode == OpenMode.APPEND) {
      indexExists = true;
      create = false;
    } else {
      // CREATE_OR_APPEND - create only if an index does not exist
      indexExists = DirectoryReader.indexExists(directory);
      create = !indexExists;
    }

    // If index is too old, reading the segments will throw
    // IndexFormatTooOldException.

    String[] files = directory.listAll();

    // Set up our initial SegmentInfos:
    IndexCommit commit = config.getIndexCommit();

    // Set up our initial SegmentInfos:
    StandardDirectoryReader reader;
    if (commit == null) {
      reader = null;
    } else {
      reader = commit.getReader();
    }

    if (create) {
      if (config.getIndexCommit() != null) {
        // We cannot both open from a commit point and create:
        if (mode == OpenMode.CREATE) {
          throw new IllegalArgumentException("cannot use IndexWriterConfig.setIndexCommit() with OpenMode.CREATE");
        } else {
          throw new IllegalArgumentException("cannot use IndexWriterConfig.setIndexCommit() when index has no commit");
        }
      }

      // Try to read first. This is to allow create
      // against an index that's currently open for
      // searching. In this case we write the next
      // segments_N file with no segments:
      final SegmentInfos sis = new SegmentInfos(Version.LATEST.major);
      if (indexExists) {
        final SegmentInfos previous = SegmentInfos.readLatestCommit(directory);
        sis.updateGenerationVersionAndCounter(previous);
      }
      segmentInfos = sis;
      rollbackSegments = segmentInfos.createBackupSegmentInfos();

      // Record that we have a change (zero out all
      // segments) pending:
      changed();

    } else if (reader != null) {
      // Init from an existing already opened NRT or non-NRT reader:

      if (reader.directory() != commit.getDirectory()) {
        throw new IllegalArgumentException("IndexCommit's reader must have the same directory as the IndexCommit");
      }

      if (reader.directory() != directoryOrig) {
        throw new IllegalArgumentException("IndexCommit's reader must have the same directory passed to IndexWriter");
      }

      if (reader.segmentInfos.getLastGeneration() == 0) {
        // TODO: maybe we could allow this? It's tricky...
        throw new IllegalArgumentException("index must already have an initial commit to open from reader");
      }

      // Must clone because we don't want the incoming NRT reader to "see" any changes this writer now makes:
      segmentInfos = reader.segmentInfos.clone();

      SegmentInfos lastCommit;
      try {
        lastCommit = SegmentInfos.readCommit(directoryOrig, segmentInfos.getSegmentsFileName());
      } catch (IOException ioe) {
        throw new IllegalArgumentException("the provided reader is stale: its prior commit file \"" + segmentInfos.getSegmentsFileName() + "\" is missing from index");
      }

      if (reader.writer != null) {
        // The old writer better be closed (we have the write lock now!):
        assert reader.writer.closed;

        // In case the old writer wrote further segments (which we are now dropping),
        // update SIS metadata so we remain write-once:
        segmentInfos.updateGenerationVersionAndCounter(reader.writer.segmentInfos);
        lastCommit.updateGenerationVersionAndCounter(reader.writer.segmentInfos);
      }

      rollbackSegments = lastCommit.createBackupSegmentInfos();
    } else {
      // Init from either the latest commit point, or an explicit prior commit point:

      String lastSegmentsFile = SegmentInfos.getLastCommitSegmentsFileName(files);
      if (lastSegmentsFile == null) {
        throw new IndexNotFoundException("no segments* file found in " + directory + ": files: " + Arrays.toString(files));
      }

      // Do not use SegmentInfos.read(Directory) since the spooky
      // retrying it does is not necessary here (we hold the write lock):
      segmentInfos = SegmentInfos.readCommit(directoryOrig, lastSegmentsFile);

      if (commit != null) {
        // Swap out all segments, but, keep metadata in
        // SegmentInfos, like version & generation, to
        // preserve write-once. This is important if
        // readers are open against the future commit
        // points.
        if (commit.getDirectory() != directoryOrig) {
          throw new IllegalArgumentException("IndexCommit's directory doesn't match my directory, expected=" + directoryOrig + ", got=" + commit.getDirectory());
        }

        SegmentInfos oldInfos = SegmentInfos.readCommit(directoryOrig, commit.getSegmentsFileName());
        segmentInfos.replace(oldInfos);
        changed();

        if (infoStream.isEnabled("IW")) {
          infoStream.message("IW", "init: loaded commit \"" + commit.getSegmentsFileName() + "\"");
        }
      }

      rollbackSegments = segmentInfos.createBackupSegmentInfos();
    }

    commitUserData = new HashMap<>(segmentInfos.getUserData()).entrySet();

    pendingNumDocs.set(segmentInfos.totalMaxDoc());

    // start with previous field numbers, but new FieldInfos
    // NOTE: this is correct even for an NRT reader because we'll pull FieldInfos even for the un-committed segments:
    globalFieldNumberMap = getFieldNumberMap();

    validateIndexSort();

    config.getFlushPolicy().init(config);
    bufferedUpdatesStream = new BufferedUpdatesStream(infoStream);
    docWriter = new DocumentsWriter(flushNotifications, segmentInfos.getIndexCreatedVersionMajor(), pendingNumDocs,
        enableTestPoints, this::newSegmentName,
        config, directoryOrig, directory, globalFieldNumberMap);
    readerPool = new ReaderPool(directory, directoryOrig, segmentInfos, globalFieldNumberMap,
        bufferedUpdatesStream::getCompletedDelGen, infoStream, conf.getSoftDeletesField(), reader);
    if (config.getReaderPooling()) {
      readerPool.enableReaderPooling();
    }
    // Default deleter (for backwards compatibility) is
    // KeepOnlyLastCommitDeleter:

    // Sync'd is silly here, but IFD asserts we sync'd on the IW instance:
    synchronized (this) {
      deleter = new IndexFileDeleter(files, directoryOrig, directory,
                                     config.getIndexDeletionPolicy(),
                                     segmentInfos, infoStream, this,
                                     indexExists, reader != null);

      // We incRef all files when we return an NRT reader from IW, so all files must exist even in the NRT case:
      assert create || filesExist(segmentInfos);
    }

    if (deleter.startingCommitDeleted) {
      // Deletion policy deleted the "head" commit point.
      // We have to mark ourself as changed so that if we
      // are closed w/o any further changes we write a new
      // segments_N file.
      changed();
    }

    if (reader != null) {
      // We always assume we are carrying over incoming changes when opening from reader:
      segmentInfos.changed();
      changed();
    }

    if (infoStream.isEnabled("IW")) {
      infoStream.message("IW", "init: create=" + create + " reader=" + reader);
      messageState();
    }

    success = true;

  } finally {
    if (!success) {
      if (infoStream.isEnabled("IW")) {
        infoStream.message("IW", "init: hit exception on init; releasing write lock");
      }
      IOUtils.closeWhileHandlingException(writeLock);
      writeLock = null;
    }
  }
}
```
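In application code the Analyzer reaches this constructor through `new IndexWriterConfig(analyzer)`. The constructor only stores it (`analyzer = config.getAnalyzer()`). Most of the remaining logic decides whether to start a new index or append to an existing one.

The sketch below models just that `OpenMode` branch in plain Java, so the decision table can be run and checked in isolation. `OpenModeSketch` and `OpenDecision` are hypothetical names, not Lucene classes. The `indexOnDisk` flag stands in for `DirectoryReader.indexExists(directory)`.

```java
// Hypothetical, JDK-only model of the OpenMode branch in IndexWriter's
// constructor. OpenModeSketch and OpenDecision are illustrative names,
// not Lucene classes.
enum OpenModeSketch { CREATE, APPEND, CREATE_OR_APPEND }

final class OpenDecision {
    final boolean indexExists; // what the writer assumes about the directory
    final boolean create;      // whether a fresh, empty commit is started

    private OpenDecision(boolean indexExists, boolean create) {
        this.indexExists = indexExists;
        this.create = create;
    }

    // indexOnDisk stands in for DirectoryReader.indexExists(directory).
    static OpenDecision decide(OpenModeSketch mode, boolean indexOnDisk) {
        switch (mode) {
            case CREATE:
                // Always start a new index, but remember whether an old one
                // exists so its generation counter can be carried over.
                return new OpenDecision(indexOnDisk, true);
            case APPEND:
                // Assume an index exists without checking; if it does not, the
                // real constructor later throws IndexNotFoundException.
                return new OpenDecision(true, false);
            default:
                // CREATE_OR_APPEND: create only if no index exists yet.
                return new OpenDecision(indexOnDisk, !indexOnDisk);
        }
    }

    @Override
    public String toString() {
        return "indexExists=" + indexExists + ", create=" + create;
    }
}
```

Under `CREATE_OR_APPEND`, which is the default open mode, an empty directory therefore gets a new index, while a directory that already holds one is appended to.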

2. Definition of Analyzer

```java
/**
 * An Analyzer builds TokenStreams, which analyze text. It thus represents a
 * policy for extracting index terms from text.
 * <p>
 * In order to define what analysis is done, subclasses must define their
 * {@link TokenStreamComponents TokenStreamComponents} in {@link #createComponents(String)}.
 * The components are then reused in each call to {@link #tokenStream(String, Reader)}.
 * <p>
 * Simple example:
 * <pre class="prettyprint">
 * Analyzer analyzer = new Analyzer() {
 *   {@literal @Override}
 *   protected TokenStreamComponents createComponents(String fieldName) {
 *     Tokenizer source = new FooTokenizer();
 *     TokenStream filter = new FooFilter(source);
 *     filter = new BarFilter(filter);
 *     return new TokenStreamComponents(source, filter);
 *   }
 *   {@literal @Override}
 *   protected TokenStream normalize(TokenStream in) {
 *     // Assuming FooFilter is about normalization and BarFilter is about
 *     // stemming, only FooFilter should be applied
 *     return new FooFilter(in);
 *   }
 * };
 * </pre>
 * For more examples, see the {@link org.apache.lucene.analysis Analysis package documentation}.
 * <p>
 * For some concrete implementations bundled with Lucene, look in the analysis modules:
 * <ul>
 *   <li><a href="{@docRoot}/../analyzers-common/overview-summary.html">Common</a>:
 *       Analyzers for indexing content in different languages and domains.
 *   <li><a href="{@docRoot}/../analyzers-icu/overview-summary.html">ICU</a>:
 *       Exposes functionality from ICU to Apache Lucene.
 *   <li><a href="{@docRoot}/../analyzers-kuromoji/overview-summary.html">Kuromoji</a>:
 *       Morphological analyzer for Japanese text.
 *   <li><a href="{@docRoot}/../analyzers-morfologik/overview-summary.html">Morfologik</a>:
 *       Dictionary-driven lemmatization for the Polish language.
 *   <li><a href="{@docRoot}/../analyzers-phonetic/overview-summary.html">Phonetic</a>:
 *       Analysis for indexing phonetic signatures (for sounds-alike search).
 *   <li><a href="{@docRoot}/../analyzers-smartcn/overview-summary.html">Smart Chinese</a>:
 *       Analyzer for Simplified Chinese, which indexes words.
 *   <li><a href="{@docRoot}/../analyzers-stempel/overview-summary.html">Stempel</a>:
 *       Algorithmic Stemmer for the Polish Language.
 * </ul>
 */
```
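The Javadoc describes a contract with two parts. A subclass builds its components (a tokenizer plus a chain of filters) in `createComponents`, and `tokenStream` reuses those components on later calls.

The sketch below is a hypothetical, JDK-only model of that contract, not Lucene's API. `MiniAnalyzer`, `Components` and `LowercaseStopAnalyzer` are illustrative names. Tokens are modeled as a `List<String>` instead of a `TokenStream` with attributes. Components are cached per field name, which is only one possible reuse strategy; Lucene offers others and they are not modeled here.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, JDK-only model of the Analyzer contract; not the Lucene API.
abstract class MiniAnalyzer {

    // Plays the role of TokenStreamComponents: a source "tokenizer"
    // plus the filter chain wrapped around it.
    static final class Components {
        final Function<String, List<String>> source;
        final Function<List<String>, List<String>> filter;

        Components(Function<String, List<String>> source,
                   Function<List<String>, List<String>> filter) {
            this.source = source;
            this.filter = filter;
        }
    }

    private final Map<String, Components> reuse = new HashMap<>();
    int createCount = 0; // how often createComponents() actually ran

    // Subclasses decide what analysis is done, as with createComponents(String).
    protected abstract Components createComponents(String fieldName);

    // Analogue of tokenStream(fieldName, text): components are built once per
    // field name and reused on every later call for that field.
    final List<String> tokenStream(String fieldName, String text) {
        Components c = reuse.computeIfAbsent(fieldName, f -> {
            createCount++;
            return createComponents(f);
        });
        return c.filter.apply(c.source.apply(text));
    }
}

// Example subclass: split on non-letters/digits, lower-case, then drop "the".
class LowercaseStopAnalyzer extends MiniAnalyzer {
    @Override
    protected Components createComponents(String fieldName) {
        Function<String, List<String>> tokenizer = text -> {
            List<String> out = new ArrayList<>();
            for (String t : text.split("[^\\p{L}\\p{N}]+")) {
                if (!t.isEmpty()) out.add(t);
            }
            return out;
        };
        Function<List<String>, List<String>> lowerCase = tokens -> {
            List<String> out = new ArrayList<>();
            for (String t : tokens) out.add(t.toLowerCase(Locale.ROOT));
            return out;
        };
        Function<List<String>, List<String>> stop = tokens -> {
            List<String> out = new ArrayList<>(tokens);
            out.removeIf("the"::equals);
            return out;
        };
        // Filters are chained like FooFilter -> BarFilter in the Javadoc example.
        return new Components(tokenizer, lowerCase.andThen(stop));
    }
}
```

For example, `new LowercaseStopAnalyzer().tokenStream("body", "The Quick, brown fox!")` yields `[quick, brown, fox]`. A second call for `"body"` reuses the cached components rather than calling `createComponents` again.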

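As the Javadoc shows, Lucene ships different Analyzer implementations for different languages, grouped into separate analysis modules (for example Kuromoji for Japanese, Smart Chinese for Simplified Chinese, and Stempel and Morfologik for Polish).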

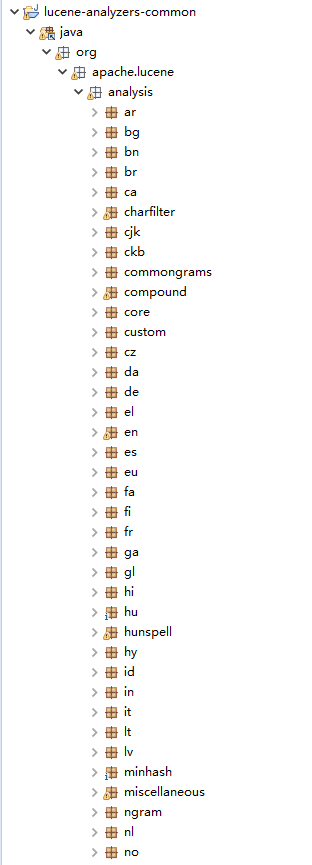

Among these modules, Common (analyzers-common) contains the general-purpose Analyzer classes, as shown in the figure below: