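Environment: k8s v1.18.5

Network: calico; the nginx service is exposed externally through a NodePort

nginx-ingress-controller version: 0.20.0

All configuration files are located in /home/ingress

The files are (each one is created later in this post): mandatory.yaml, service-nodeport.yaml, deploy-demon.yaml, ingress-myapp.yaml, ingress-tomcat.yaml, tomcat-deploy.yaml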

I. Pull the required images on every node in advance

defaultbackend

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

nginx-ingress-controller

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0

II. Apply mandatory.yaml and service-nodeport.yaml

mandatory.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: default-http-backend
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: default-http-backend
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      terminationGracePeriodSeconds: 60
      containers:
        - name: default-http-backend
          # Any image is permissible as long as:
          # 1. It serves a 404 page at /
          # 2. It serves 200 on a /healthz endpoint
          image: registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 30
            timeoutSeconds: 5
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: 10m
              memory: 20Mi
            requests:
              cpu: 10m
              memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
spec:
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true
      containers:
        - name: nginx-ingress-controller
          image: registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0
          args:
            - /nginx-ingress-controller
            - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
---

service-nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
      nodePort: 32080 # http
    # - name: https
    #   port: 443
    #   targetPort: 443
    #   protocol: TCP
    #   nodePort: 32443 # https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

Apply the configuration:

kubectl apply -f mandatory.yaml

kubectl apply -f service-nodeport.yaml

Verify that the deployment succeeded
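The post does not list the verification commands themselves. A minimal sketch, assuming only the namespace and Service name defined in the two manifests above; the function name `check_ingress_controller` is an illustrative helper, not part of the original setup:

```shell
# Illustrative helper (not from the original post): list what mandatory.yaml
# and service-nodeport.yaml should have created in the ingress-nginx namespace.
check_ingress_controller() {
  # The default backend pod and the controller pod should both reach Running
  kubectl -n ingress-nginx get pods -o wide
  # The NodePort Service should map port 80 to nodePort 32080
  kubectl -n ingress-nginx get svc ingress-nginx
}
```

Calling `check_ingress_controller` after the two apply commands also shows, in the NODE column, which node the controller pod landed on; that node's IP is the one needed for the hosts entries later on.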

III. Create the backend service for ingress-nginx

deploy-demon.yaml

apiVersion: v1
kind: Service
metadata:
  name: myapp
  namespace: default
spec:
  selector:
    app: myapp
    release: canary
  ports:
    - name: http
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      labels:
        app: myapp
        release: canary
    spec:
      containers:
        - name: myapp
          image: ikubernetes/myapp:v2
          ports:
            - name: httpd
              containerPort: 80

应用配置:

kubectl apply -f deploy-demon.yaml

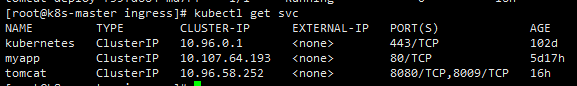

验证是否创建成功
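One way to run this check from the command line is sketched below. It assumes the Deployment was applied to the default namespace (deploy-demon.yaml sets the namespace only on the Service); `check_myapp` is a hypothetical helper name:

```shell
# Illustrative helper (not from the original post): confirm that the myapp
# Deployment, its pods, and the Service endpoints all exist.
check_myapp() {
  # The Deployment should eventually report 2/2 ready replicas
  kubectl -n default get deployment myapp-deploy
  # Pods carrying the labels that the myapp Service selects on
  kubectl -n default get pods -l app=myapp,release=canary -o wide
  # The Service should list one endpoint address per ready pod
  kubectl -n default get endpoints myapp
}
```

An empty endpoints list usually points to a mismatch between the Service selector and the pod labels, which is worth ruling out before looking at the Ingress.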

IV. Expose myapp through an Ingress rule

Configuration file: ingress-myapp.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-myapp
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: danny.com
      http:
        paths:
          - path:
            backend:
              serviceName: myapp
              servicePort: 80

应用配置:

kubectl apply -f ingress-myapp.yaml

在host文件中,配置域名解释。此处的ip,应该是ingress-control所在的nodeIp

192.168.152.13 danny.com

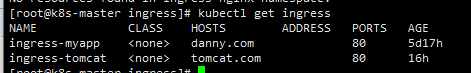

查看ingress是否部署成功

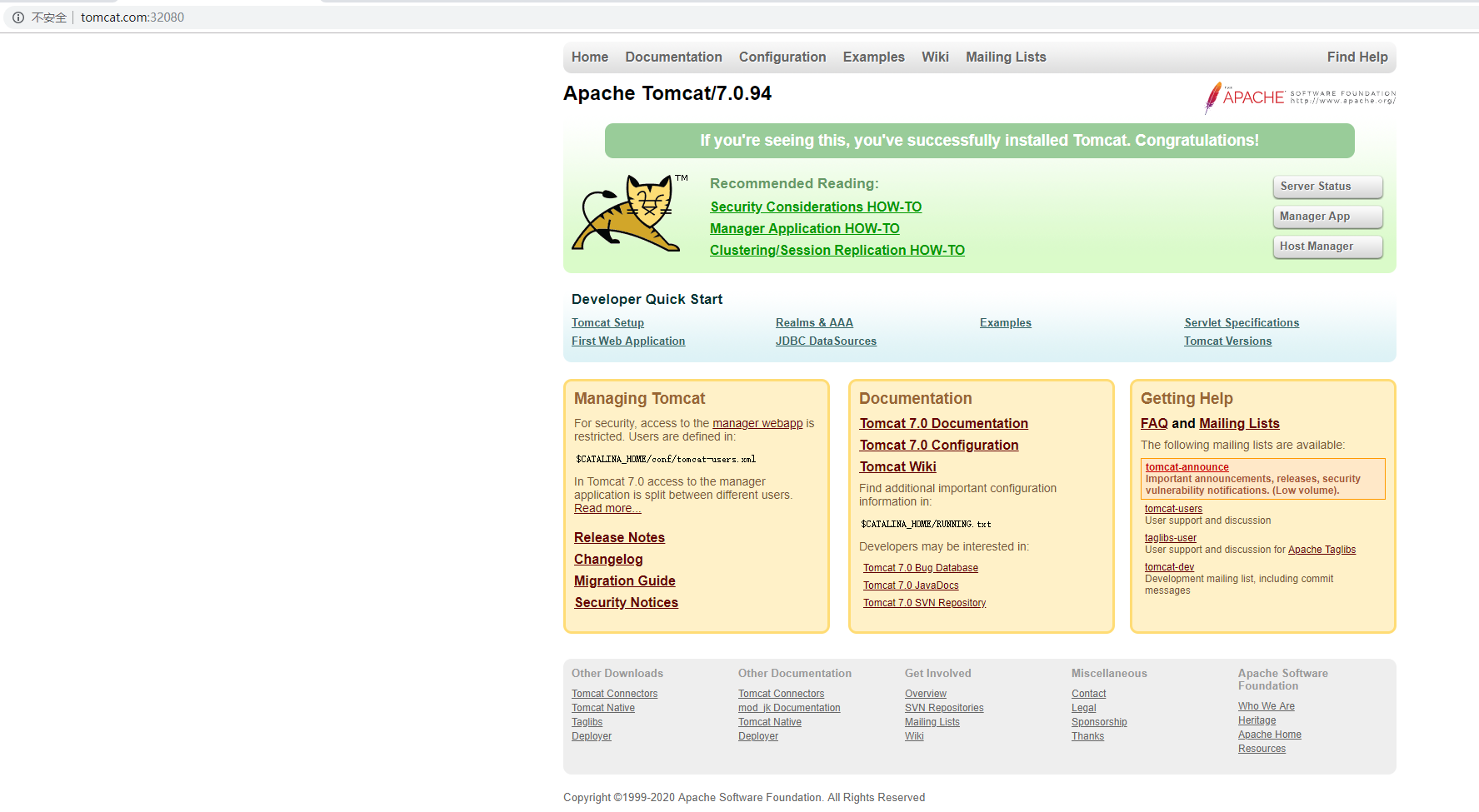
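The same check can be made from a terminal, without touching the hosts file. The sketch below is an assumption-laden helper (`check_ingress_route` is a made-up name): it lists the Ingress objects and then sends one request through the NodePort, using curl's `--resolve` option to map the host name to the node IP for that request only.

```shell
# Illustrative helper (not from the original post).
# Usage: check_ingress_route <host> <node-ip> [node-port]
check_ingress_route() {
  host="$1"            # e.g. danny.com
  node_ip="$2"         # IP of the node running the ingress controller
  port="${3:-32080}"   # nodePort from service-nodeport.yaml
  # The Ingress should be listed with the expected host
  kubectl -n default get ingress
  # --resolve pins host:port to node_ip, so no hosts-file entry is needed
  curl -sS --resolve "${host}:${port}:${node_ip}" "http://${host}:${port}/"
}
```

For the values used in this post the call would be `check_ingress_route danny.com 192.168.152.13`. A response from the default backend instead of myapp suggests that the host rule did not match.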

If the configuration is correct, the page looks like this:

V. Add tomcat as a second Ingress backend

Configuration file: tomcat-deploy.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
  namespace: default
spec:
  selector:
    app: tomcat
    release: canary
  ports:
    - name: http
      port: 8080
      targetPort: 8080
    - name: ajp
      port: 8009
      targetPort: 8009
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat
      release: canary
  template:
    metadata:
      labels:
        app: tomcat
        release: canary
    spec:
      containers:
        - name: tomcat
          image: tomcat:7-alpine
          ports:
            - name: httpd
              containerPort: 8080
            - name: ajp
              containerPort: 8009

Configuration file: ingress-tomcat.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: tomcat.com
      http:
        paths:
          - path:
            backend:
              serviceName: tomcat
              servicePort: 8080

应用配置

kubectl apply -f tomcat-deploy.yaml

kubectl apply -f ingress-tomcat.yaml

在host文件添加tomcat的域名映射。此处的ip,应该是ingress-control所在的nodeIp

192.168.152.13 tomcat.com

效果图:

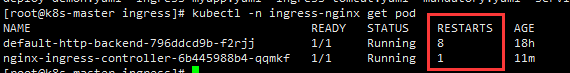

部署中遇到的问题:

Q1.在浏览器通过域名+端口的方式,无法访问服务。

排查步骤:

1.通过clusterIp直接访问myapp service,能够成功访问;

2.查看ingress-nginx(kubectl -n ingress-nginx get pod),发现服务状态虽然现实正常,但是在不断重启

3. 查看nginx-ingress容器的日志,找到原因

nginx version: nginx/1.15.5

W1028 02:37:29.974413 8 client_config.go:552] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I1028 02:37:29.974836 8 main.go:196] Creating API client for https://10.96.0.1:443

F1028 02:43:47.965192 8 main.go:248] Error while initiating a connection to the Kubernetes API server. This could mean the cluster is misconfigured (e.g. it has invalid API server certificates or Service Accounts configuration). Reason: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: i/o timeout

Refer to the troubleshooting guide for more information: https://kubernetes.github.io/ingress-nginx/troubleshooting/
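Steps 2 and 3 above can be reproduced roughly as follows. This is a sketch rather than the exact commands used at the time; `show_controller_logs` is a hypothetical helper, and the pod is looked up through the `app.kubernetes.io/name=ingress-nginx` label that mandatory.yaml puts on the controller pod template.

```shell
# Illustrative helper (not from the original post): show the restart count
# and the log of the last crashed container of the ingress controller.
show_controller_logs() {
  # Name of the first controller pod, selected by its label
  pod="$(kubectl -n ingress-nginx get pods \
    -l app.kubernetes.io/name=ingress-nginx \
    -o jsonpath='{.items[0].metadata.name}')"
  # The RESTARTS column shows whether the pod keeps restarting
  kubectl -n ingress-nginx get pod "$pod"
  # --previous prints the log of the container instance that exited
  kubectl -n ingress-nginx logs "$pod" --previous --tail=50
}
```

If the container has not restarted yet, `--previous` has nothing to show; dropping that flag prints the log of the running instance instead.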

Solution (source: https://blog.csdn.net/weiguang1017/article/details/77102845):

Add hostNetwork: true to the controller Deployment in mandatory.yaml:

    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true
      containers:

Q2:ingress-controler服务会随机绑定到其中一台node节点

解决方案:

指定运行节点(需要打标签)

nginx-ingress-controller会随意选择一个node节点运行pod,为此需要我们把nginx-ingress-controller运行到指定的node节点上

首先需要给需要运行nginx-ingress-controller的node节点打标签,在此我们把nginx-ingress-controller运行在指定的node节点上

1.先确定ingress-controller落在哪一个节点

kubectl get pod -n ingress-nginx -o wide

2.为该节点打上ginx标签,实现每次nginx-ingress-controller都落到该节点

#打上nginx标签

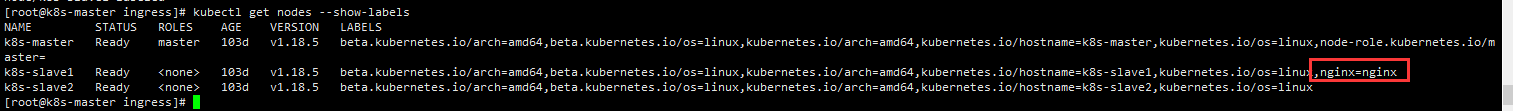

kubectl label nodes k8s-slave1 nginx=nginx

#验证是否成功打上标签

kubectl get nodes --show-labels

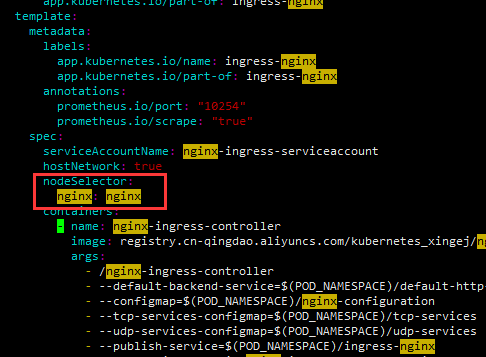

3.mandatory.yaml添加指定node节点配置
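The post does not show the fragment for this step. Given the `nginx=nginx` label applied in step 2, the setting is presumably a `nodeSelector` with `nginx: nginx` under the pod template spec of the nginx-ingress-controller Deployment. As a sketch under that assumption, the same setting can also be applied to the live Deployment with a patch (the helper name is made up for illustration):

```shell
# Illustrative helper (not from the original post): add a nodeSelector for
# the nginx=nginx label to the running controller Deployment.
pin_controller_to_labeled_node() {
  kubectl -n ingress-nginx patch deployment nginx-ingress-controller \
    -p '{"spec":{"template":{"spec":{"nodeSelector":{"nginx":"nginx"}}}}}'
}
```

Writing the equivalent `nodeSelector` into mandatory.yaml, as this step describes, has the advantage that the file stays the single source of truth for later `kubectl apply` runs.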

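4. Re-apply mandatory.yaml

kubectl apply -f mandatory.yaml

5. Delete the old ingress-nginx pod. Because the controller uses the host network, the old pod keeps holding the node's ports and the new pod would otherwise stay in the Pending state.

# Replace the pod name with the actual one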

kubectl -n ingress-nginx delete pod nginx-ingress-controller-55dc8d57fd-dlr4h
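Since the generated pod name differs on every cluster, selecting the pod by label avoids looking the name up first. A minimal sketch, assuming the controller pods carry the `app.kubernetes.io/name=ingress-nginx` label from mandatory.yaml; `restart_controller_pod` is a hypothetical helper:

```shell
# Illustrative helper (not from the original post): delete the controller
# pods by label and show where the replacement gets scheduled.
restart_controller_pod() {
  # Removes every controller pod, including a replacement stuck in Pending;
  # the Deployment then creates a fresh one
  kubectl -n ingress-nginx delete pod -l app.kubernetes.io/name=ingress-nginx
  # The NODE column should now show the labeled node
  kubectl -n ingress-nginx get pods -o wide
}
```

Note that the label selector matches only the controller pods; the default backend uses a different `app.kubernetes.io/name` value and is left alone.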

Installation reference: https://www.cnblogs.com/panwenbin-logs/p/9915927.html#autoid-0-1-0

The post at https://blog.csdn.net/weixin_44729138/article/details/105978555 explains the procedure more clearly and covers additional details.