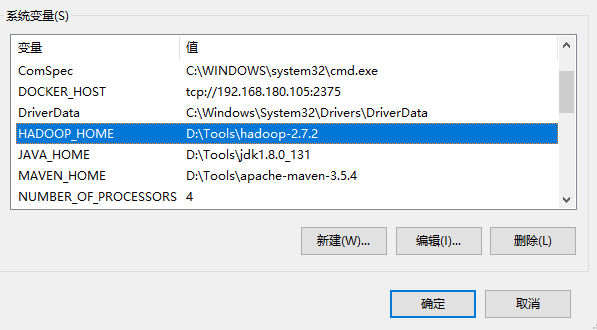

Configure the HADOOP_HOME and Path environment variables
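On Windows, the Hadoop client typically expects helper binaries such as `winutils.exe` under the `bin` directory of `HADOOP_HOME`. The sketch below is a small pre-flight check that uses only the JDK. The class name `HadoopHomeCheck` and its method are hypothetical and not part of Hadoop; it only verifies that the variable is set and that a `bin` directory exists beneath it.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper (not part of Hadoop): sanity-checks a HADOOP_HOME value.
class HadoopHomeCheck {

    // Returns true when the value is non-empty and contains a "bin" directory.
    static boolean looksValid(String hadoopHome) {
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            return false;
        }
        Path bin = Paths.get(hadoopHome.trim(), "bin");
        return Files.isDirectory(bin);
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        System.out.println("HADOOP_HOME = " + home);
        System.out.println(looksValid(home)
                ? "bin directory found"
                : "HADOOP_HOME is missing or has no bin directory");
    }
}
```

Running this once before the HDFS examples can save time when a later failure is really an environment problem.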

Create a Maven project and add the following dependencies to `pom.xml`:

```xml
<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>RELEASE</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-core</artifactId>
        <version>2.8.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.2</version>
    </dependency>
</dependencies>
```

In the project's `src/main/resources` directory, create a file named `log4j.properties` with the following content:

```properties
log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
log4j.appender.logfile=org.apache.log4j.FileAppender
log4j.appender.logfile.File=target/spring.log
log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
```

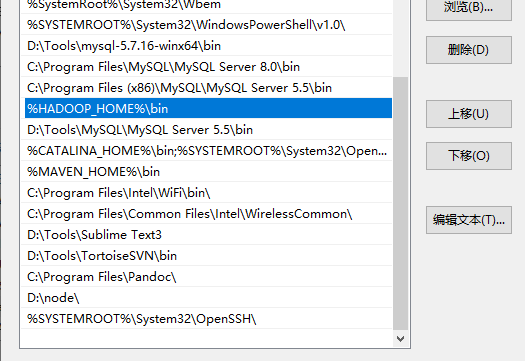

Create a test class

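```java
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * @author WGR
 * @create 2020/1/20 -- 15:05
 */
public class HDFSClient1 {

    public static void main(String[] args) throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the HDFS client object, connecting to the NameNode as user "root"
        // FileSystem fs = FileSystem.get(conf);
        Configuration configuration = new Configuration();
        FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop002:9000"), configuration, "root");

        // 2. Create a directory path on HDFS
        fileSystem.mkdirs(new Path("/0120/test/"));

        // 3. Release resources
        fileSystem.close();
        System.out.println("over");
    }
}
```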
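The `FileSystem.get` call above receives the NameNode address as a `java.net.URI`, which is why `main` declares `URISyntaxException`. As a JDK-only illustration, the sketch below splits such an address into scheme, host, and port and shows what happens with a malformed one. The class name `HdfsUriParts` and the output format are made up for this example.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: shows how an HDFS address is parsed by java.net.URI.
class HdfsUriParts {

    // Returns a one-line description, or a message starting with "invalid" on a parse error.
    static String describe(String address) {
        try {
            URI uri = new URI(address);
            return "scheme=" + uri.getScheme()
                    + " host=" + uri.getHost()
                    + " port=" + uri.getPort();
        } catch (URISyntaxException e) {
            // This is the checked exception that the HDFS client examples declare.
            return "invalid URI: " + e.getReason();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe("hdfs://hadoop002:9000"));
        System.out.println(describe("hdfs://hadoop 002:9000")); // a space is not allowed
    }
}
```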

Check the result on HDFS

```
[root@hadoop002 hadoop-2.7.2]# hadoop fs -ls -R /
drwxr-xr-x - root supergroup 0 2020-01-20 15:12 /0120
drwxr-xr-x - root supergroup 0 2020-01-20 15:12 /0120/test
-rw-r--r-- 3 root supergroup 1366 2020-01-15 22:30 /README.txt
drwxr-xr-x - root supergroup 0 2020-01-18 21:36 /tmp
drwxrwxrwt - root supergroup 0 2020-01-18 21:36 /tmp/logs
drwxrwx--- - dr.who supergroup 0 2020-01-18 21:36 /tmp/logs/dr.who
drwxrwx--- - dr.who supergroup 0 2020-01-19 17:30 /tmp/logs/dr.who/logs
drwxrwx--- - dr.who supergroup 0 2020-01-18 21:46 /tmp/logs/dr.who/logs/application_1579060789521_0001
drwxrwx--- - dr.who supergroup 0 2020-01-18 21:46 /tmp/logs/dr.who/logs/application_1579060789521_0002
drwxrwx--- - dr.who supergroup 0 2020-01-18 21:47 /tmp/logs/dr.who/logs/application_1579060789521_0003
drwxrwx--- - dr.who supergroup 0 2020-01-18 21:46 /tmp/logs/dr.who/logs/application_1579060789521_0004
drwxrwx--- - dr.who supergroup 0 2020-01-19 17:34 /tmp/logs/dr.who/logs/application_1579060789521_0005
drwxrwx--- - dr.who supergroup 0 2020-01-19 17:30 /tmp/logs/dr.who/logs/application_1579060789521_0006
drwxrwx--- - dr.who supergroup 0 2020-01-19 17:36 /tmp/logs/dr.who/logs/application_1579060789521_0007
drwxrwx--- - dr.who supergroup 0 2020-01-19 17:43 /tmp/logs/dr.who/logs/application_1579060789521_0008
-rw-r--r-- 3 root supergroup 18284613 2020-01-15 12:11 /tools.jar
-rw-r--r-- 3 root supergroup 48 2020-01-15 12:05 /wc.input
drwxr-xr-x - root supergroup 0 2020-01-15 21:49 /wgr
drwxr-xr-x - root supergroup 0 2020-01-15 21:51 /wgr/test
-rwxrwxrwx 2 root supergroup 15 2020-01-15 22:28 /wgr/test/kongming.txt
[root@hadoop002 hadoop-2.7.2]#
```

File upload

```java
// 1. File upload
@Test
public void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object; the replication factor set here applies to this client
    Configuration conf = new Configuration();
    conf.set("dfs.replication", "2");
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. Call the upload API (backslashes in a Java string literal must be escaped)
    fs.copyFromLocalFile(new Path("C:\\Users\\asus\\Desktop\\2.txt"), new Path("/2.txt"));

    // 3. Release resources
    fs.close();
}
```
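A Windows path written as a Java string literal needs each backslash doubled, otherwise sequences such as `\U` are rejected by the compiler as illegal escapes. The JDK-only sketch below shows the escaped form and a forward-slash alternative, the same style the download example uses for `e:/1111.txt`. The class `WindowsPathLiteral` is hypothetical and exists only for illustration.

```java
// Hypothetical helper: demonstrates two ways to write a Windows path in Java source.
class WindowsPathLiteral {

    // Converts backslash separators to forward slashes.
    static String toForwardSlashes(String windowsPath) {
        return windowsPath.replace('\\', '/');
    }

    public static void main(String[] args) {
        // Each "\\" in source code is a single backslash at runtime.
        String escaped = "C:\\Users\\asus\\Desktop\\2.txt";
        System.out.println(escaped);
        System.out.println(toForwardSlashes(escaped));
    }
}
```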

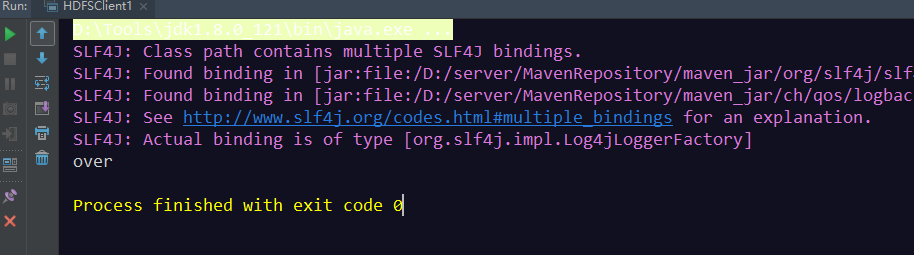

File download

```java
// 2. File download
@Test
public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. Perform the download
    // fs.copyToLocalFile(new Path("/banhua.txt"), new Path("e:/banhua.txt"));
    // Arguments: delSrc = false (keep the source file on HDFS), source path,
    // destination path, useRawLocalFileSystem = true
    fs.copyToLocalFile(false, new Path("/2.txt"), new Path("e:/1111.txt"), true);

    // 3. Release resources
    fs.close();
}
```

File deletion

```java
// 3. File deletion
@Test
public void testDelete() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. Delete the path; the second argument (true) enables recursive deletion
    fs.delete(new Path("/0120"), true);

    // 3. Release resources
    fs.close();
}
```

File rename

```java
// 4. File rename
@Test
public void testRename() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. Perform the rename
    fs.rename(new Path("/2.txt"), new Path("/111.txt"));

    // 3. Release resources
    fs.close();
}
```

Viewing file details

```java
// 5. View file details
@Test
public void testListFiles() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. List files recursively from the root (the second argument enables recursion)
    RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus fileStatus = listFiles.next();

        // Print the file name, permission, length, and block locations
        System.out.println(fileStatus.getPath().getName()); // file name
        System.out.println(fileStatus.getPermission());     // file permission
        System.out.println(fileStatus.getLen());            // file length in bytes

        // Print the hosts that store each block of the file
        BlockLocation[] blockLocations = fileStatus.getBlockLocations();
        for (BlockLocation blockLocation : blockLocations) {
            String[] hosts = blockLocation.getHosts();
            for (String host : hosts) {
                System.out.println(host);
            }
        }
        System.out.println("------分割线--------"); // separator line between files
    }

    // 3. Release resources
    fs.close();
}
```

```
111.txt
rw-r--r--
1773
hadoop003
hadoop004
------分割线--------
README.txt
rw-r--r--
1366
hadoop002
hadoop004
hadoop003
------分割线--------
tools.jar
rw-r--r--
18284613
hadoop002
hadoop004
hadoop003
------分割线--------
wc.input
rw-r--r--
48
hadoop003
hadoop002
hadoop004
------分割线--------
kongming.txt
rwxrwxrwx
15
hadoop004
hadoop003
------分割线--------

Process finished with exit code 0
```
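The permission values in the output above, such as `rw-r--r--`, are in symbolic form. As a reading aid, the JDK-only sketch below converts a nine-character symbolic string to the familiar octal form. The class `PermissionOctal` is hypothetical, not a Hadoop API, and it deliberately ignores special bits such as the sticky bit (`t`).

```java
// Hypothetical helper: converts "rw-r--r--" style permissions to octal, e.g. "644".
class PermissionOctal {

    static String toOctal(String symbolic) {
        if (symbolic == null || symbolic.length() != 9) {
            throw new IllegalArgumentException("expected 9 characters, e.g. rw-r--r--");
        }
        StringBuilder octal = new StringBuilder();
        // Process the owner, group, and other triples in turn.
        for (int group = 0; group < 3; group++) {
            String triple = symbolic.substring(group * 3, group * 3 + 3);
            int value = 0;
            if (triple.charAt(0) == 'r') value += 4; // read
            if (triple.charAt(1) == 'w') value += 2; // write
            if (triple.charAt(2) == 'x') value += 1; // execute
            octal.append(value);
        }
        return octal.toString();
    }

    public static void main(String[] args) {
        System.out.println("rw-r--r-- -> " + toOctal("rw-r--r--"));
        System.out.println("rwxrwxrwx -> " + toOctal("rwxrwxrwx"));
    }
}
```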

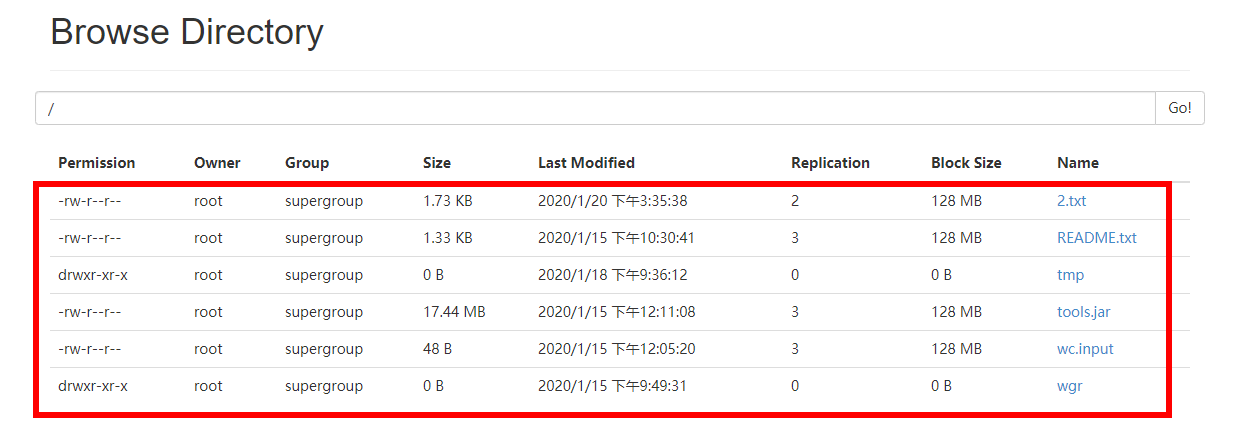

Checking whether an entry is a file or a directory

```java
// 6. Determine whether each entry is a file or a directory
@Test
public void testListStatus() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the FileSystem object
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop002:9000"), conf, "root");

    // 2. List the entries directly under the root and check each one
    FileStatus[] listStatus = fs.listStatus(new Path("/"));
    for (FileStatus fileStatus : listStatus) {
        if (fileStatus.isFile()) {
            // File
            System.out.println("f:" + fileStatus.getPath().getName());
        } else {
            // Directory
            System.out.println("d:" + fileStatus.getPath().getName());
        }
    }

    // 3. Release resources
    fs.close();
}
```

```
f:111.txt
f:README.txt
d:tmp
f:tools.jar
f:wc.input
d:wgr
```