Setting up Redis master-slave replication on CentOS

!!! This was tested on virtual machines, so errors may come up during configuration. Remember to check the logs.

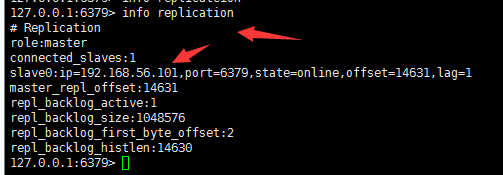

View replication info: `info replication`
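
`info replication` prints `field:value` lines. On a slave, the useful fields are `role`, `master_host` and `master_link_status` (`up` once the slave is synced). On the master, the useful one is `connected_slaves`. Here is a minimal sketch of a helper that pulls a single field out of that output. The name `repl_field` is hypothetical and not part of Redis. The helper strips the `\r` that Redis puts at the end of each INFO line.

```shell
#!/usr/bin/env bash
# repl_field (hypothetical helper): read `info replication` output on stdin
# and print the value of one field, e.g. role or master_link_status.
repl_field() {
  local key="$1"
  # INFO lines end in \r\n: strip the \r, then print the text after "key:"
  awk -v k="$key" '
    { sub(/\r$/, "") }
    index($0, k ":") == 1 { print substr($0, length(k) + 2); exit }
  '
}
```

For example, `/usr/bin/redis-cli -h 192.168.56.101 -a 123456 info replication | repl_field master_link_status` should print `up` once the slave is in sync.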

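Before setting up replication, make sure the two servers can reach each other: `ping` must succeed in both directions, and each server must be able to log in to the other's Redis.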

Redis master: 192.168.56.3, Redis slave: 192.168.56.101

On both servers the Redis password is set to 123456, and the `bind` line in redis.conf is commented out.

1). Test 1, network connectivity (ping in both directions):

```
[root@localhost vagrant]# ping 192.168.56.101
PING 192.168.56.101 (192.168.56.101) 56(84) bytes of data.
64 bytes from 192.168.56.101: icmp_seq=1 ttl=64 time=0.149 ms
64 bytes from 192.168.56.101: icmp_seq=2 ttl=64 time=0.163 ms
64 bytes from 192.168.56.101: icmp_seq=3 ttl=64 time=0.152 ms
64 bytes from 192.168.56.101: icmp_seq=4 ttl=64 time=0.173 ms
^C
--- 192.168.56.101 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2999ms
rtt min/avg/max/mdev = 0.149/0.159/0.173/0.013 ms
[root@localhost vagrant]#
```

```
[root@localhost vagrant]# ping 192.168.56.3
PING 192.168.56.3 (192.168.56.3) 56(84) bytes of data.
64 bytes from 192.168.56.3: icmp_seq=1 ttl=64 time=0.175 ms
64 bytes from 192.168.56.3: icmp_seq=2 ttl=64 time=0.134 ms
64 bytes from 192.168.56.3: icmp_seq=3 ttl=64 time=0.149 ms
64 bytes from 192.168.56.3: icmp_seq=4 ttl=64 time=0.140 ms
^C
--- 192.168.56.3 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2999ms
rtt min/avg/max/mdev = 0.134/0.149/0.175/0.019 ms
[root@localhost vagrant]#
```

Test 2, each server logs in to the other's Redis:

```shell
# On 192.168.56.3, log in to 192.168.56.101
[root@localhost vagrant]# /usr/bin/redis-cli -h 192.168.56.101 -a 123456
192.168.56.101:6379> keys *

# On 192.168.56.101, log in to 192.168.56.3
[root@localhost vagrant]# /usr/bin/redis-cli -h 192.168.56.3 -a 123456
192.168.56.3:6379> keys *
```
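
A successful `ping` only shows that ICMP gets through. A host firewall (for example firewalld, if your CentOS image runs it) can still block TCP port 6379, which is why the redis-cli login test matters. The login check can be scripted as in the minimal sketch below, which expects `PONG` back from Redis's `PING` command. The function `check_redis_login` and the `REDIS_CLI` override variable are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# check_redis_login (hypothetical helper): succeed only if the Redis server
# at host:port answers PING with PONG using the given password.
# REDIS_CLI (hypothetical) can point at another client; default is redis-cli.
check_redis_login() {
  local host="$1" pass="$2" port="${3:-6379}"
  local cli="${REDIS_CLI:-redis-cli}" reply
  # stderr is discarded so password warnings from newer redis-cli are ignored
  reply="$("$cli" -h "$host" -p "$port" -a "$pass" ping 2>/dev/null)"
  [ "$reply" = "PONG" ]
}
```

Run `check_redis_login 192.168.56.101 123456 && echo ok` on 192.168.56.3, and `check_redis_login 192.168.56.3 123456 && echo ok` on 192.168.56.101.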

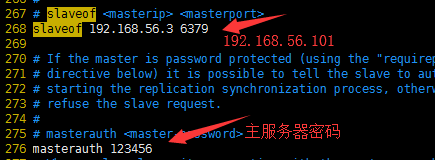

2). Configure replication:

On the slave server (192.168.56.101), add the following to redis.conf:

```
slaveof 192.168.56.3 6379
masterauth 123456
```
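
`slaveof` points the slave at the master's IP and port. `masterauth` must match the master's `requirepass`, because the master requires a password. In Redis 5 and later the directive is also spelled `replicaof`, and `slaveof` is kept as an alias.

The edit can be scripted. Below is a minimal sketch using a hypothetical `set_conf_directive` helper. It removes any active line for a directive and appends the new value, leaving commented templates such as `# slaveof <masterip> <masterport>` untouched. It assumes GNU `sed -i`, as shipped on CentOS.

```shell
#!/usr/bin/env bash
# set_conf_directive (hypothetical helper): make redis.conf contain exactly
# one active line "<key> <value>". Commented lines such as
# "# slaveof <masterip> <masterport>" are left untouched.
set_conf_directive() {
  local conf="$1" key="$2" value="$3"
  # delete any existing active (uncommented) line for this directive
  sed -i -E "/^[[:space:]]*${key}[[:space:]]/d" "$conf"
  # append the new setting at the end of the file
  printf '%s %s\n' "$key" "$value" >> "$conf"
}
```

Assuming the config file is `/etc/redis.conf`, run these commands and then restart Redis on the slave (for example `systemctl restart redis`, assuming the service is named `redis`):

- `set_conf_directive /etc/redis.conf slaveof "192.168.56.3 6379"`
- `set_conf_directive /etc/redis.conf masterauth 123456`

To apply the same settings at runtime without a restart, run `CONFIG SET masterauth 123456` followed by `SLAVEOF 192.168.56.3 6379` in redis-cli. These runtime settings are lost on restart unless you persist them with `CONFIG REWRITE`.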

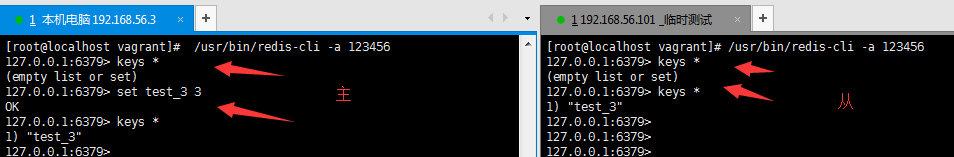

3). Once configuration is done, test that data replicates:
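
A quick check is to write a key on the master and read it back from the slave. A slave is read-only by default (`slave-read-only yes`), so the write has to go to the master.

Here is a minimal sketch of that check. It uses a hypothetical `check_replication` helper with the same hypothetical `REDIS_CLI` override. The helper writes a throwaway key on the master, waits one second for propagation, reads the key from the slave, and then deletes it on the master.

```shell
#!/usr/bin/env bash
# check_replication (hypothetical helper): SET a throwaway key on the master,
# GET it from the slave, then DEL it on the master. Returns 0 if the slave
# sees the value. REDIS_CLI (hypothetical) overrides the client binary.
check_replication() {
  local master="$1" slave="$2" pass="$3"
  local cli="${REDIS_CLI:-redis-cli}"
  local key="repl_check_$$" value="v_${RANDOM}" got
  # writes must go to the master; the slave is read-only by default
  [ "$("$cli" -h "$master" -a "$pass" set "$key" "$value" 2>/dev/null)" = "OK" ] || return 1
  sleep 1  # give replication a moment to propagate
  got="$("$cli" -h "$slave" -a "$pass" get "$key" 2>/dev/null)"
  # clean up the test key (the DEL is replicated to the slave as well)
  "$cli" -h "$master" -a "$pass" del "$key" >/dev/null 2>&1
  [ "$got" = "$value" ]
}
```

Usage: `check_replication 192.168.56.3 192.168.56.101 123456 && echo "replication OK"`.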