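▶ Code from the book: optimizing a Julia set renderer step by step

● Serial baseline, no parallelization (only hand optimizations such as variable reuse)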

```c
#include <stdio.h>
#include <stdlib.h>
#include <openacc.h>

#define N (1024 * 8)

int julia(const float cre, const float cim, float zre, float zim, const int maxIter) // iteration count for a single point
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2) // two iterations per loop pass
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;                                // maximum iteration count
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N; // iteration constant c and pixel step
    int *image = (int *)malloc(sizeof(int) * N * N);
    FILE *pf = fopen("R:/output.txt", "w");

    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter));
        fprintf(pf, "\n"); // end of one image row
    }

    fclose(pf);
    free(image);
    //getchar();
    return 0;
}
```

● Program output (identical for every later version, so it is not repeated)

● Improvement 1: parallelize the computation

```c
#include <stdio.h>
#include <stdlib.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;
    int *image = (int *)malloc(sizeof(int) * N * N);

#pragma acc data copyout(image[0:N * N]) // data region
    {
#pragma acc kernels loop independent // parallelize the loop and assert that iterations are independent
        for (int i = 0; i < N; i++)
        {
            for (int j = 0; j < N; j++)
                image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
        }
    }
    /* // file output is commented out so that nvvp does not include it in the profile
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

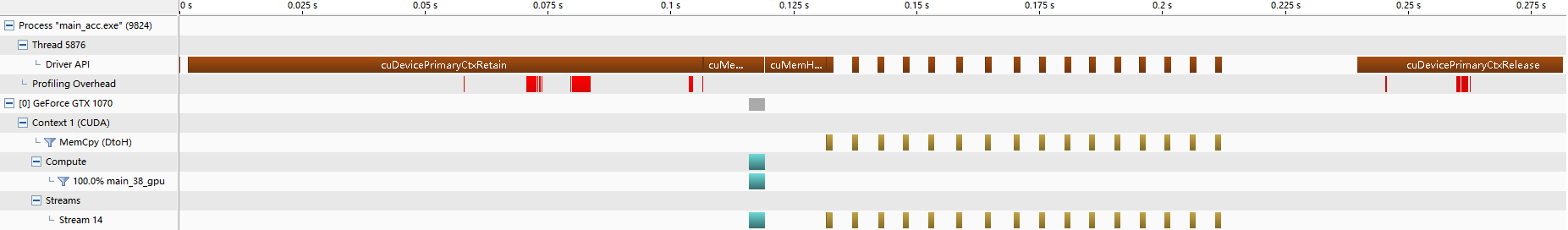

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe
julia:
      9, Generating acc routine seq
         Generating Tesla code
     11, FMA (fused multiply-add) instruction(s) generated
     17, FMA (fused multiply-add) instruction(s) generated
main:
     30, Generating copyout(image[:67108864])
     33, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     35, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         33, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         35, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     35, FMA (fused multiply-add) instruction(s) generated

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=35 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,486
    30: data region reached 2 times
        49: data copyout transfers: 17
             device time(us): total=20,486 max=1,288 min=5 avg=1,205
    32: compute region reached 1 time
        35: kernel launched 1 time
            grid: [256x128] block: [32x4]
             device time(us): total=0 max=0 min=0 avg=0
```
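The runtime message in the log above asks for a call to `acc_shutdown(acc_device_nvidia)` at the end of the program so that the profiling data is complete. The following is a minimal sketch of one way to add it. The helper name `shutdown_accelerator` is hypothetical and not part of the original code, and the `_OPENACC` guard is an added assumption so that the file still builds with a compiler that has no OpenACC support.

```c
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Hypothetical helper (not in the original listing): shuts down the
   accelerator runtime when the program was built with OpenACC.
   Returns 1 if acc_shutdown was called, 0 otherwise. */
int shutdown_accelerator(void)
{
#ifdef _OPENACC
    acc_shutdown(acc_device_nvidia); /* the call requested by the PGI runtime message */
    return 1;
#else
    return 0; /* built without OpenACC: nothing to shut down */
#endif
}
```

In the listings of this article the natural place for the call would be right after `free(image);`, just before `return 0;`.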

● Improvement 2: compute in blocks. No noticeable speedup; this prepares for the asynchronous version.

```c
#include <stdio.h>
#include <stdlib.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;
    int *image = (int *)malloc(sizeof(int) * N * N);

#pragma acc data copyout(image[0:N * N])
    {
        const int numblock = 4;                        // number of blocks
        for (int block = 0; block < numblock; block++) // compute one block per pass
        {
            const int start = block * (N / numblock), end = start + N / numblock; // first row and one-past-last row of the block
#pragma acc kernels loop independent
            for (int i = start; i < end; i++)
            {
                for (int j = 0; j < N; j++)
                    image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
            }
        }
    }
    /*
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

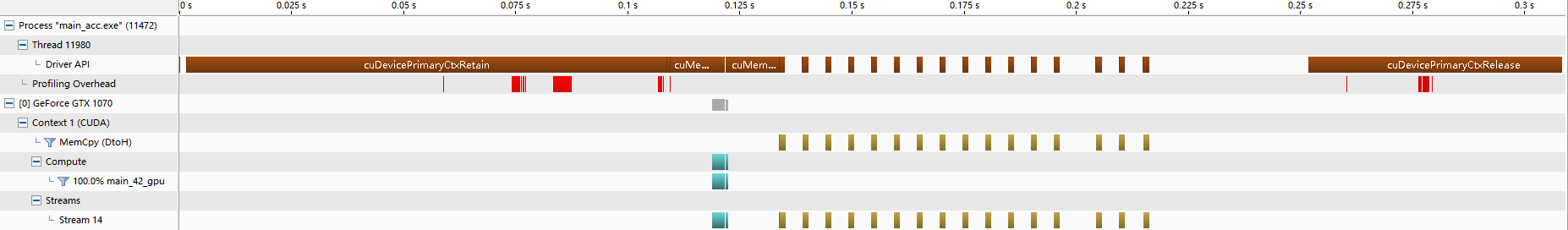

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe
julia:
      9, Generating acc routine seq
         Generating Tesla code
     11, FMA (fused multiply-add) instruction(s) generated
     17, FMA (fused multiply-add) instruction(s) generated
main:
     30, Generating copyout(image[:67108864])
     37, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     39, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         37, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         39, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     39, FMA (fused multiply-add) instruction(s) generated

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,456
    30: data region reached 2 times
        54: data copyout transfers: 17
             device time(us): total=20,456 max=1,297 min=11 avg=1,203
    36: compute region reached 4 times
        39: kernel launched 4 times
            grid: [256x128] block: [32x4]
             device time(us): total=0 max=0 min=0 avg=0
```
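The blocked version computes each block's row range as `start = block * (N / numblock)` and `end = start + N / numblock`, which covers every row only when `N` is a multiple of the block count. As a sketch of how the split could be made safe for other block counts, the hypothetical helper `block_rows` below (not part of the book's code) hands the leftover rows to the first blocks.

```c
#include <assert.h>

/* Hypothetical helper: computes the half-open row range [*start, *end)
   of block `block` when n rows are split into numBlock blocks.
   The first (n % numBlock) blocks receive one extra row, so no row is
   dropped when n is not a multiple of numBlock. */
void block_rows(int n, int numBlock, int block, int *start, int *end)
{
    assert(numBlock > 0 && block >= 0 && block < numBlock);
    const int base = n / numBlock;  /* rows that every block gets */
    const int extra = n % numBlock; /* leftover rows, spread over the first blocks */
    *start = block * base + (block < extra ? block : extra);
    *end = *start + base + (block < extra ? 1 : 0);
}
```

For `N = 8192` and four blocks the helper yields the same ranges as the listing (block 1 covers rows 2048 to 4095); for 10 rows in 3 blocks it yields rows 0-3, 4-6 and 7-9.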

● Improvement 3: transfer in blocks. No noticeable speedup; this also prepares for the asynchronous version.

```c
#include <stdio.h>
#include <stdlib.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;
    int *image = (int *)malloc(sizeof(int) * N * N);

#pragma acc data create(image[0:N * N]) // create only allocates on the device; results come back through update below
    {
        const int numBlock = 4, blockSize = N * N / numBlock; // still computed block by block
        for (int block = 0; block < numBlock; block++)
        {
            const int start = block * (N / numBlock), end = start + N / numBlock;
#pragma acc kernels loop independent
            for (int i = start; i < end; i++)
            {
                for (int j = 0; j < N; j++)
                    image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
            }
#pragma acc update host(image[block * blockSize : blockSize]) // copy each block back to the host as soon as it is computed
        }
    }
    /*
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

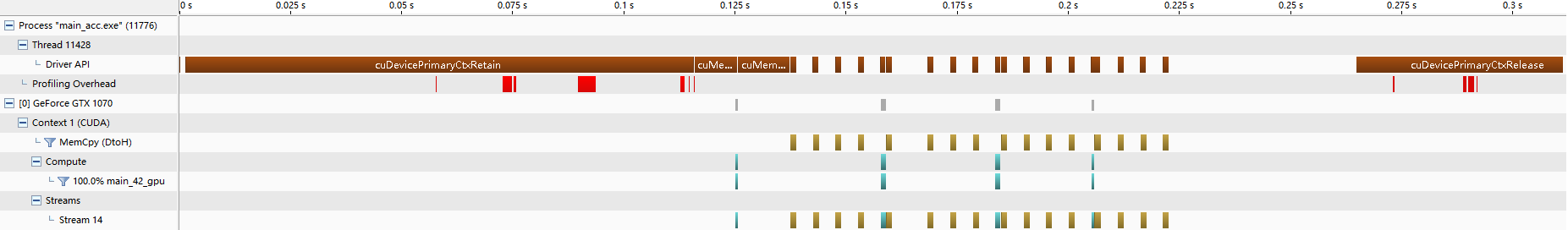

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe
julia:
      9, Generating acc routine seq
         Generating Tesla code
     11, FMA (fused multiply-add) instruction(s) generated
     17, FMA (fused multiply-add) instruction(s) generated
main:
     30, Generating create(image[:67108864])
     37, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     39, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         37, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         39, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     39, FMA (fused multiply-add) instruction(s) generated
     43, Generating update self(image[block*blockSize:blockSize])

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,474
    30: data region reached 2 times
    36: compute region reached 4 times
        39: kernel launched 4 times
            grid: [256x128] block: [32x4]
            elapsed time(us): total=16,000 max=16,000 min=0 avg=4,000
    43: update directive reached 4 times
        43: data copyout transfers: 20
             device time(us): total=20,474 max=1,287 min=5 avg=1,023
```
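The `update host(image[block * blockSize : blockSize])` directive has to cover exactly the rows that the preceding kernel wrote, and an OpenACC array section is written as `start:length`, not `start:end`. The sketch below shows that relation for a row-major image; the helper `rows_to_slice` is hypothetical, and `size_t` is used on the assumption that larger images could overflow `int` in the product `N * N`.

```c
#include <stddef.h>

/* Hypothetical helper: converts the half-open row range [start, end) of an
   n-column, row-major image into the (offset, count) pair of an OpenACC
   array section such as image[offset:count]. */
void rows_to_slice(size_t n, size_t start, size_t end, size_t *offset, size_t *count)
{
    *offset = start * n;        /* index of the first element of row `start` */
    *count = (end - start) * n; /* number of elements in rows start .. end-1 */
}
```

For `N = 8192` and block 2 of 4 (rows 4096 to 6143) this gives offset 33554432 and count 16777216, the same values as `block * blockSize` and `blockSize` in the listing.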

● Improvement 4: asynchronous computation overlapped with data transfer

```c
#include <stdio.h>
#include <stdlib.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;
    int *image = (int *)malloc(sizeof(int) * N * N);

#pragma acc data create(image[0:N * N])
    {
        const int numBlock = 4, blockSize = N / numBlock * N;
        for (int block = 0; block < numBlock; block++)
        {
            const int start = block * (N / numBlock), end = start + N / numBlock;
#pragma acc kernels loop independent async(block + 1) // asynchronous kernel; the block index selects the queue
            for (int i = start; i < end; i++)
            {
                for (int j = 0; j < N; j++)
                    image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
            }
#pragma acc update host(image[block * blockSize : blockSize]) async(block + 1) // asynchronous copy-back, queued behind this block's kernel
        }
#pragma acc wait
    }
    /*
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe
julia:
      9, Generating acc routine seq
         Generating Tesla code
     11, FMA (fused multiply-add) instruction(s) generated
     17, FMA (fused multiply-add) instruction(s) generated
main:
     30, Generating create(image[:67108864])
     37, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     39, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         37, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         39, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     39, FMA (fused multiply-add) instruction(s) generated
     43, Generating update self(image[block*blockSize:blockSize])

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 queue=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 queue=2 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 queue=3 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=39 device=0 threadid=1 queue=4 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
Timing may be affected by asynchronous behavior
set PGI_ACC_SYNCHRONOUS to 1 to disable async() clauses
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,503
    30: data region reached 2 times
    36: compute region reached 4 times
        39: kernel launched 4 times
            grid: [256x128] block: [32x4]
             device time(us): total=0 max=0 min=0 avg=0
    43: update directive reached 4 times
        43: data copyout transfers: 20
             device time(us): total=20,503 max=1,297 min=5 avg=1,025
```

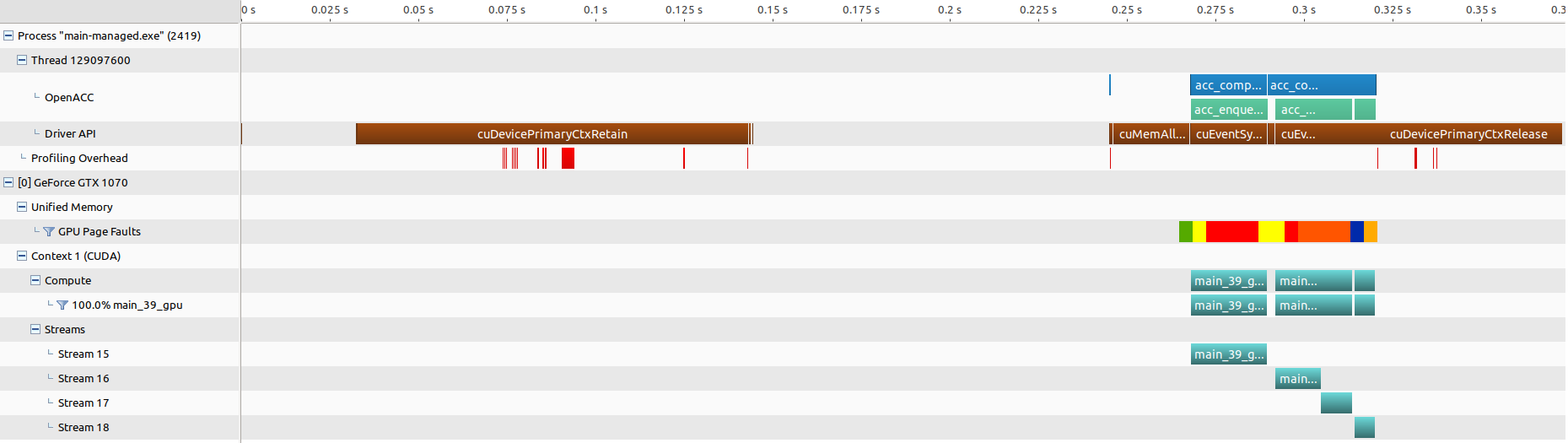
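The asynchronous listing ends with a single `#pragma acc wait`, which blocks until every queue has finished. As a sketch of a possible variation (not the book's code), the host could instead wait on one queue at a time with `wait(block + 1)` and start using a block while later blocks are still being computed or transferred. The function `render_and_sum` and its checksum are hypothetical, and the code assumes, like the original, that the image height is a multiple of the block count. Built without OpenACC, the pragmas are ignored and the code runs serially.

```c
#include <assert.h>

#pragma acc routine seq
static int julia(float cre, float cim, float zre, float zim, int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2) /* same escape-time loop as in the listings */
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;
        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

/* Hypothetical variant of the asynchronous version: renders an n x n image
   in numBlock row blocks, one async queue per block, and sums each block on
   the host as soon as that block's queue has drained.
   Returns the sum of all iteration counts as a simple checksum. */
long long render_and_sum(int *image, int n, int numBlock)
{
    assert(numBlock > 0 && n % numBlock == 0);
    const int maxIter = 128;
    const float cre = -0.8350f, cim = -0.2321f, h = 4.0f / n;
    const int rows = n / numBlock, blockSize = rows * n;
    long long sum = 0;

#pragma acc data create(image[0:n * n])
    {
        for (int block = 0; block < numBlock; block++)
        {
            const int start = block * rows, end = start + rows;
#pragma acc kernels loop independent async(block + 1)
            for (int i = start; i < end; i++)
            {
                for (int j = 0; j < n; j++)
                    image[i * n + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
            }
#pragma acc update host(image[block * blockSize:blockSize]) async(block + 1)
        }
        for (int block = 0; block < numBlock; block++)
        {
#pragma acc wait(block + 1) /* only this block's kernel and copy-back must be finished */
            for (int k = block * blockSize; k < (block + 1) * blockSize; k++)
                sum += image[k]; /* host work that can overlap with later blocks */
        }
    }
    return sum;
}
```

Calling the function once with `numBlock = 1` and once with `numBlock = 4` on a small image is a cheap way to check that blocking does not change the result.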

● Using unified memory addressing (Ubuntu only; not supported on win64)

● Improvement 4 (multi-device version 1): using OpenMP

```c
#include <stdio.h>
#include <stdlib.h>
#include <omp.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const int numBlock = acc_get_num_devices(acc_device_nvidia), blockSize = N / numBlock * N; // device count from the OpenACC runtime, used as the number of blocks
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

    int *image = (int *)malloc(sizeof(int) * N * N);
    acc_init(acc_device_nvidia); // initialize the NVIDIA devices once, up front

#pragma omp parallel num_threads(numBlock) // one host thread per device, each submitting its own work
    {
        acc_set_device_num(omp_get_thread_num(), acc_device_nvidia); // bind this thread to its device
#pragma omp for
        for (int block = 0; block < numBlock; block++)
        {
            const int start = block * (N / numBlock), end = start + N / numBlock;
#pragma acc data copyout(image[block * blockSize : blockSize])
            {
#pragma acc kernels loop independent
                for (int i = start; i < end; i++)
                {
                    for (int j = 0; j < N; j++)
                        image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
                }
            }
        }
    }
    /*
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc -acc -mp -Minfo main.c -o main_acc.exe
julia:
     10, Generating acc routine seq
         Generating Tesla code
     12, FMA (fused multiply-add) instruction(s) generated
     18, FMA (fused multiply-add) instruction(s) generated
main:
     35, Parallel region activated
     38, Parallel loop activated with static block schedule
     41, Generating copyout(image[block*blockSize:blockSize])
     44, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     46, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         44, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         46, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     46, FMA (fused multiply-add) instruction(s) generated
     51, Barrier
     62, Parallel region terminated

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=46 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,462
    41: data region reached 2 times
        50: data copyout transfers: 17
             device time(us): total=20,462 max=1,297 min=5 avg=1,203
    43: compute region reached 1 time
        46: kernel launched 1 time
            grid: [256x128] block: [32x4]
             device time(us): total=0 max=0 min=0 avg=0
```
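The multi-device listing takes the block count directly from `acc_get_num_devices(acc_device_nvidia)` and then divides by it, so a machine that reports no NVIDIA device would divide by zero. A minimal sketch of a guard follows; the helper name `choose_num_blocks` is hypothetical, and the `_OPENACC` check is an added assumption so that the code also builds without OpenACC.

```c
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Hypothetical helper: number of blocks to use, one per NVIDIA device.
   Falls back to 1 when no device is reported or when the program was
   built without OpenACC, so that N / numBlock never divides by zero. */
int choose_num_blocks(void)
{
    int devices = 0;
#ifdef _OPENACC
    devices = acc_get_num_devices(acc_device_nvidia);
#endif
    return devices > 0 ? devices : 1;
}
```

In the listing this value would replace the direct call in the initializer of `numBlock`. On the single-GPU machine used for the log above it would return 1, which matches the single kernel launch reported there.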

● Improvement 5 (multi-device version 2): restructured OpenMP

```c
#include <stdio.h>
#include <stdlib.h>
#include <omp.h>
#include <openacc.h>

#define N (1024 * 8)

#pragma acc routine seq
int julia(const float cre, const float cim, float zre, float zim, const int maxIter)
{
    float zre2 = 0.0f, zim2 = 0.0f;
    for (int iter = 1; iter < maxIter; iter += 2)
    {
        zre2 = zre * zre - zim * zim + cre, zim2 = 2 * zre * zim + cim;
        if (zre2 * zre2 + zim2 * zim2 > 4.0f)
            return iter;

        zre = zre2 * zre2 - zim2 * zim2 + cre, zim = 2 * zre2 * zim2 + cim;
        if (zre * zre + zim * zim > 4.0f)
            return iter;
    }
    return maxIter + 1 + (maxIter % 2);
}

int main()
{
    const int maxIter = 128;
    const int numBlock = acc_get_num_devices(acc_device_nvidia), blockSize = N / numBlock * N;
    const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

    int *image = (int *)malloc(sizeof(int) * N * N);
    acc_init(acc_device_nvidia);

#pragma omp parallel for num_threads(numBlock) // the acc_set_device_num calls are grouped in their own loop
    for (int block = 0; block < numBlock; block++)
        acc_set_device_num(block, acc_device_nvidia);

#pragma omp parallel for num_threads(numBlock) // was "omp for num_threads(...)": an orphaned omp for runs serially and does not accept num_threads
    for (int block = 0; block < numBlock; block++)
    {
        const int start = block * (N / numBlock), end = start + N / numBlock;
#pragma acc data copyout(image[block * blockSize : blockSize])
        {
#pragma acc kernels loop independent
            for (int i = start; i < end; i++)
            {
                for (int j = 0; j < N; j++)
                    image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);
            }
        }
    }
    /*
    FILE *pf = fopen("R:/output.txt", "w");
    for (int i = 0; i < N; i++)
    {
        for (int j = 0; j < N; j++)
            fprintf(pf, "%d ", image[i * N + j]);
        fprintf(pf, "\n");
    }
    fclose(pf);
    */
    free(image);
    //getchar();
    return 0;
}
```

● Compiler and profiler output

```
D:CodeOpenACCOpenACCProjectOpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe
julia:
     10, Generating acc routine seq
         Generating Tesla code
     12, FMA (fused multiply-add) instruction(s) generated
     18, FMA (fused multiply-add) instruction(s) generated
main:
     42, Generating copyout(image[block*blockSize:blockSize])
     45, Loop is parallelizable
         FMA (fused multiply-add) instruction(s) generated
     47, Loop is parallelizable
         Accelerator kernel generated
         Generating Tesla code
         45, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */
         47, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */
     47, FMA (fused multiply-add) instruction(s) generated

D:CodeOpenACCOpenACCProjectOpenACCProject>main_acc.exe
launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main line=47 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=256x128 block=32x4
PGI: "acc_shutdown" not detected, performance results might be incomplete.
 Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete.

Accelerator Kernel Timing data
D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c
  main  NVIDIA  devicenum=0
    time(us): 20,571
    42: data region reached 2 times
        51: data copyout transfers: 17
             device time(us): total=20,571 max=1,336 min=11 avg=1,210
    44: compute region reached 1 time
        47: kernel launched 1 time
            grid: [256x128] block: [32x4]
            elapsed time(us): total=3,000 max=3,000 min=3,000 avg=3,000
```