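Introduction to Logstash:

Logstash is a real-time data collection engine. It ingests many types of data, then parses, filters, and aggregates them, so that only the records matching your own conditions are passed on to a visualization front end. Logstash recommends Java 1.8; some Java versions are not supported, for example Java 9.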

I. Download and install JDK 1.8

Download URL: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Upload the downloaded package to the /usr/local/ directory on cpy04.dev.xjh.com and run the following:

    # Unpack the archive
    tar xf /usr/local/jdk1.8.0_111.tar.gz -C /usr/local
    mv /usr/local/jdk1.8.0_111 /usr/local/jdk1.8.0
    # Register the JDK binaries with alternatives
    alternatives --install /usr/bin/java java /usr/local/jdk1.8.0/jre/bin/java 3000
    alternatives --install /usr/bin/jar jar /usr/local/jdk1.8.0/bin/jar 3000
    alternatives --install /usr/bin/javac javac /usr/local/jdk1.8.0/bin/javac 3000
    alternatives --install /usr/bin/javaws javaws /usr/local/jdk1.8.0/jre/bin/javaws 3000
    alternatives --set java /usr/local/jdk1.8.0/jre/bin/java
    alternatives --set jar /usr/local/jdk1.8.0/bin/jar
    alternatives --set javac /usr/local/jdk1.8.0/bin/javac
    alternatives --set javaws /usr/local/jdk1.8.0/jre/bin/javaws
    # Switch the active Java version
    alternatives --config java
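Since Logstash is sensitive to the Java version, it may be worth confirming which runtime is active after the alternatives step. The following is a minimal sketch; the helper name `is_java8` is made up for this example, and it assumes the usual `java -version` banner format (for example `java version "1.8.0_111"`).

```shell
#!/bin/sh
# Hypothetical helper: succeed when a `java -version` banner line
# reports a 1.8.x runtime.
is_java8() {
    case "$1" in
        *'version "1.8.'*) return 0 ;;
        *) return 1 ;;
    esac
}

# Check the java found on PATH, if there is one.
# `java -version` writes its banner to stderr, hence the 2>&1.
if command -v java >/dev/null 2>&1; then
    banner=$(java -version 2>&1 | head -n 1)
    if is_java8 "$banner"; then
        echo "OK: $banner"
    else
        echo "WARNING: expected Java 1.8, found: $banner"
    fi
else
    echo "java not found on PATH"
fi
```

If the warning appears, rerunning `alternatives --config java` and picking the 1.8 entry should fix it.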

II. Install Logstash

1. Log in to cpy04.dev.xjh.com (for other versions, see https://www.elastic.co/downloads/logstash )

    # Note the uppercase -O: lowercase -o would write wget's log to that path instead of the download
    wget https://artifacts.elastic.co/downloads/logstash/logstash-5.6.1.tar.gz -O /opt/logstash-5.6.1.tar.gz
    tar xf /opt/logstash-5.6.1.tar.gz -C /usr/local
    mv /usr/local/logstash-5.6.1 /usr/local/logstash
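A quick sanity check after unpacking can catch a failed download or a wrong target directory early. This is only a sketch: `logstash_home_ok` is a hypothetical helper, and the check assumes the tarball layout with `bin/logstash` and a `config` directory.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the given directory looks like an
# unpacked Logstash install (executable launcher plus config directory).
logstash_home_ok() {
    [ -x "$1/bin/logstash" ] && [ -d "$1/config" ]
}

LOGSTASH_HOME=${LOGSTASH_HOME:-/usr/local/logstash}
if logstash_home_ok "$LOGSTASH_HOME"; then
    # Prints the installed Logstash version
    "$LOGSTASH_HOME/bin/logstash" --version
else
    echo "Logstash not found under $LOGSTASH_HOME"
fi
```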

III. Configure Logstash

1. Edit the /usr/local/logstash/config/logstash.yml configuration file and change the following settings:

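    node.name: cpy04.dev.xjh.com                  # node name, usually the hostname
    path.data: /usr/local/logstash/plugin-data    # persistent data directory used by Logstash and its plugins
    config.reload.automatic: true                 # reload the pipeline configuration automatically
    config.reload.interval: 10                    # how often to check the configuration for changes
    http.host: "cpy04.dev.xjh.com"                # host name used for access, usually a domain name or IP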

2. Create the persistent data directory:

    mkdir -p /usr/local/logstash/plugin-data

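3. Configure Logstash to take input from Filebeat, filter it, and output to Elasticsearch (Logstash has a very large number of plugins; see the official site for the full list, which is not reproduced here)

3.1 Install the logstash-input-jdbc and logstash-input-beats-master plugins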

    /usr/local/logstash/bin/logstash-plugin install logstash-input-jdbc
    wget https://github.com/logstash-plugins/logstash-input-beats/archive/master.zip -O /opt/master.zip
    unzip -d /usr/local/logstash /opt/master.zip
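To see whether the plugins are actually registered with Logstash, the output of `logstash-plugin list` can be searched for their names. The sketch below assumes the plain listing prints one plugin name per line; `plugin_listed` is a hypothetical helper, not part of Logstash.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the plugin listing on stdin contains
# a line that is exactly the name given as $1.
plugin_listed() {
    grep -qxF -- "$1"
}

LOGSTASH_HOME=${LOGSTASH_HOME:-/usr/local/logstash}
if [ -x "$LOGSTASH_HOME/bin/logstash-plugin" ]; then
    listing=$("$LOGSTASH_HOME/bin/logstash-plugin" list)
    for name in logstash-input-jdbc logstash-input-beats; do
        if printf '%s\n' "$listing" | plugin_listed "$name"; then
            echo "installed: $name"
        else
            echo "missing:   $name"
        fi
    done
else
    echo "logstash-plugin not found under $LOGSTASH_HOME"
fi
```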

3.2 Configure the Logstash input section

    vim /usr/local/logstash/from_beat.conf

    input {
      beats {
        port => 5044
      }
    }
    output {
      stdout { codec => rubydebug }
    }

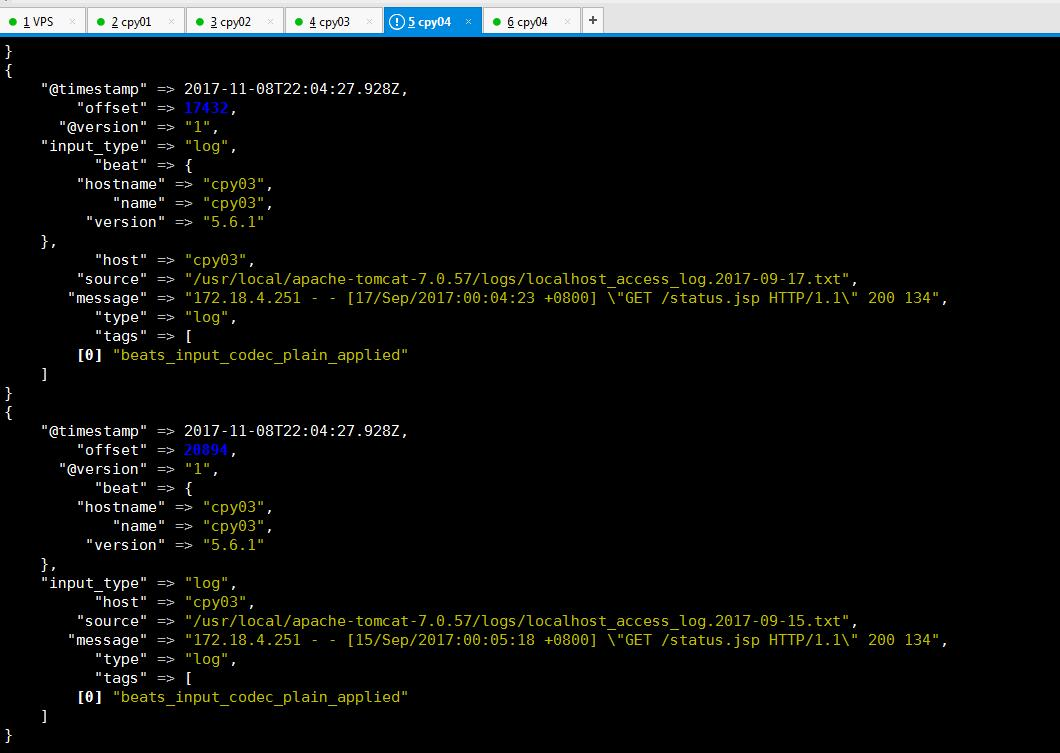

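Start Logstash and check whether it receives the log lines sent by Filebeat. Make sure Filebeat is running normally on the log node first. This step only tests that input arrives; the raw logs are not yet filtered or parsed.

    /usr/local/logstash/bin/logstash -f /usr/local/logstash/from_beat.conf

If no errors appear after launch, wait for Logstash to finish starting. This can take a while.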
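Because startup is slow, it can help to syntax-check the pipeline file first and then poll for the beats port instead of watching the console. A rough sketch follows: `--config.test_and_exit` is the Logstash option for validating a configuration without running it, while `listening_on` and the `CONF` variable are names invented for this example, and `ss` is assumed to be available on the host.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the `ss -ltn` listing on stdin shows
# a listener whose local address ends in port $1.
listening_on() {
    grep -Eq ":$1[[:space:]]"
}

LOGSTASH_HOME=${LOGSTASH_HOME:-/usr/local/logstash}
# Adjust CONF to wherever from_beat.conf was saved.
CONF=${CONF:-$LOGSTASH_HOME/from_beat.conf}

# 1. Syntax-check the pipeline without starting it.
if [ -x "$LOGSTASH_HOME/bin/logstash" ]; then
    "$LOGSTASH_HOME/bin/logstash" -f "$CONF" --config.test_and_exit
fi

# 2. Once Logstash is running, check that the beats port is open.
if command -v ss >/dev/null 2>&1 && ss -ltn | listening_on 5044; then
    echo "beats input is listening on 5044"
else
    echo "nothing is listening on 5044 yet"
fi
```

The second check is meant to be run from another terminal while Logstash is starting.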

3.3 Configure the Logstash filter section. Change /usr/local/logstash/from_beat.conf to the following, then start Logstash again. If it works, the output should show the fields captured by your regular expressions.

    input {
      beats {
        port => 5044
      }
    }
    filter {
      # Access logs
      if ( [source] =~ "localhost_access_log" ) {
        grok {
          match => {
            message => [ "%{COMMONAPACHELOG}" ]
          }
        }
        date {
          # COMMONAPACHELOG stores the request time in the "timestamp" field
          match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
          locale => "en"
          target => "request_time"
        }
      # Tomcat (catalina) logs
      } else if ( [source] =~ "catalina" ) {
        # Capture fields with a regular expression
        # (backslashes restored; assumes the app name is wrapped in square brackets)
        grok {
          match => {
            message => [ "(?<webapp_name>\[\w+\])\s+(?<request_time>\d{4}-\d{2}-\d{2}\s+\w{2}:\w{2}:\w{2},\w{3})\s+(?<log_level>\w+)\s+(?<class_package>[^.^\s]+(?:\.[^.\s]+)+)\.(?<class_name>[^\s]+)\s+(?<message_content>.+)" ]
          }
        }
        # Parse the request time
        date {
          match => [ "request_time", "ISO8601" ]
          locale => "cn"
          target => "request_time"
        }
      } else {
        drop {}
      }
    }
    output {
      stdout { codec => rubydebug }
    }
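Before restarting Logstash, the catalina pattern can be tried against a log line from the shell. The sketch below is an approximation only: it assumes the pattern is meant to be read with its regex backslashes in place and a bracketed app name, it uses `grep -P` (PCRE, GNU grep) rather than the Oniguruma engine that grok uses, and the sample line is invented for illustration.

```shell
#!/bin/sh
# Catalina pattern with backslashes in place (an assumption about the
# intended regex, including the bracketed app name).
CATALINA_RE='(?<webapp_name>\[\w+\])\s+(?<request_time>\d{4}-\d{2}-\d{2}\s+\w{2}:\w{2}:\w{2},\w{3})\s+(?<log_level>\w+)\s+(?<class_package>[^.^\s]+(?:\.[^.\s]+)+)\.(?<class_name>[^\s]+)\s+(?<message_content>.+)'

# Hypothetical helper: succeed when the line on stdin matches the pattern.
matches_catalina() {
    grep -Pq "$CATALINA_RE"
}

# Hypothetical sample line; substitute a real line from your catalina log.
sample='[shop] 2017-09-20 10:15:30,123 INFO com.example.web.OrderController order created'
if printf '%s\n' "$sample" | matches_catalina; then
    echo "sample line matches"
else
    echo "sample line does NOT match"
fi
```

A line that fails here would most likely be tagged `_grokparsefailure` by Logstash, so this is a cheap way to iterate on the pattern.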

3.4 Send the filtered events to Elasticsearch. Change from_beat.conf to the following:

    input {
      beats {
        port => 5044
      }
    }
    filter {
      # Access logs
      if ( [source] =~ "localhost_access_log" ) {
        grok {
          match => {
            message => [ "%{COMMONAPACHELOG}" ]
          }
        }
        date {
          # COMMONAPACHELOG stores the request time in the "timestamp" field
          match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
          locale => "en"
          target => "request_time"
        }
      # Tomcat (catalina) logs
      } else if ( [source] =~ "catalina" ) {
        # Capture fields with a regular expression
        # (backslashes restored; assumes the app name is wrapped in square brackets)
        grok {
          match => {
            message => [ "(?<webapp_name>\[\w+\])\s+(?<request_time>\d{4}-\d{2}-\d{2}\s+\w{2}:\w{2}:\w{2},\w{3})\s+(?<log_level>\w+)\s+(?<class_package>[^.^\s]+(?:\.[^.\s]+)+)\.(?<class_name>[^\s]+)\s+(?<message_content>.+)" ]
          }
        }
        # Parse the request time
        date {
          match => [ "request_time", "ISO8601" ]
          locale => "cn"
          target => "request_time"
        }
      } else {
        drop {}
      }
    }
    output {
      if ( [source] =~ "localhost_access_log" ) {
        elasticsearch {
          hosts => ["cpy04.dev.xjh.com:9200"]
          index => "access_log"
        }
      } else {
        elasticsearch {
          hosts => ["cpy04.dev.xjh.com:9200"]
          index => "tomcat_log"
        }
      }
      stdout { codec => rubydebug }
    }
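Once events are flowing, one way to confirm that both indices exist is to query the Elasticsearch `_cat/indices` endpoint. This sketch assumes Elasticsearch is reachable over plain HTTP on the host and port used in the output section; `cat_indices_url` is a hypothetical helper that only builds the URL.

```shell
#!/bin/sh
# Hypothetical helper: build a _cat/indices URL for a host and one or
# more index names (joined with commas).
cat_indices_url() {
    host=$1
    shift
    indices=$(IFS=,; printf '%s' "$*")
    printf 'http://%s/_cat/indices/%s?v\n' "$host" "$indices"
}

ES_HOST=${ES_HOST:-cpy04.dev.xjh.com:9200}
url=$(cat_indices_url "$ES_HOST" access_log tomcat_log)

# List the two indices with their document counts; give up after 5 seconds.
curl -s --max-time 5 "$url" || echo "Elasticsearch not reachable at $ES_HOST"
```

An index only appears after the first matching event has been written, so an error for a missing index right after startup does not necessarily mean the pipeline is broken.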

Logstash is now configured. For other filters, or for outputs other than Elasticsearch such as Kafka, see: https://www.elastic.co/guide/en/logstash/current/index.html
