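Native fp16 support was introduced in CUDA 7.5 (Tegra X1 was the first GPU to support it, https://gcc.gnu.org/onlinedocs/gcc/Half-Precision.html). It implements the half-precision floating-point type defined in the IEEE 754 standard.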

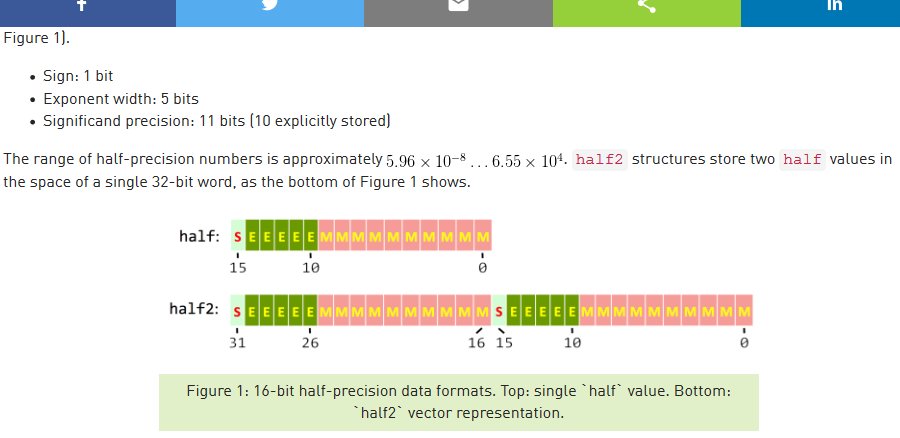

CUDA supports fp16 through the half scalar type and the half2 struct; both require including cuda_fp16.h.

Mixed Precision Performance on Pascal GPUs

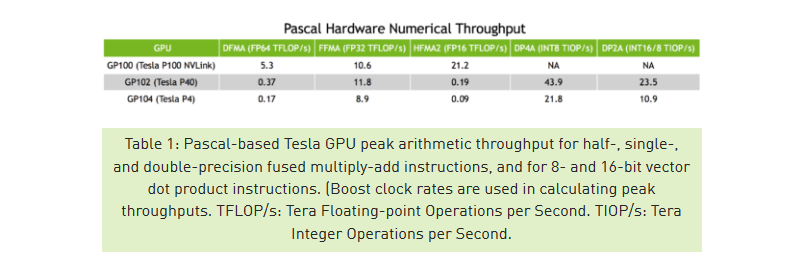

The half precision (FP16) format is not new to GPUs. In fact, FP16 has been supported as a storage format for many years on NVIDIA GPUs, mostly used for reduced precision floating point texture storage and filtering and other special-purpose operations. The Pascal GPU architecture implements general-purpose, IEEE 754 FP16 arithmetic. High performance FP16 is supported at full speed on Tesla P100 (GP100), and at lower throughput (similar to double precision) on other Pascal GPUs (GP102, GP104, and GP106), as the following table shows.

Half-precision arithmetic with FP16 in CUDA - CSDN blog

Floating-point compute capability of Nvidia GPUs (FP64/FP32/FP16) - CSDN blog

Making Faster R-CNN support the TX1's fp16 (half float, float16) feature - CSDN blog

CUDA Samples :: CUDA Toolkit Documentation

0_Simple/fp16ScalarProduct

Will official Caffe support the TX1's fp16 feature?

https://www.zhihu.com/question/39715684

https://www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review/5

FP16 support on ARM

https://blog.csdn.net/hunanchenxingyu/article/details/47003279

http://gcc.gnu.org/onlinedocs/gcc/Half-Precision.html

https://blog.csdn.net/tanli20090506/article/details/71435777

https://blog.csdn.net/soaringlee_fighting/article/details/78885394

https://developer.arm.com/technologies/neon/intrinsics

https://developer.arm.com/technologies/floating-point

https://blog.csdn.net/softee/article/details/79494335

https://blog.csdn.net/qq_18229381/article/details/71104059

http://half.sourceforge.net/

https://blog.csdn.net/cubesky/article/details/51793525

https://en.wikipedia.org/wiki/F16C

https://en.wikipedia.org/wiki/Half-precision_floating-point_format

https://docs.microsoft.com/en-us/windows/desktop/api/directxpackedvector/nf-directxpackedvector-xmconvertfloattohalf

https://software.intel.com/en-us/node/524287

https://software.intel.com/en-us/node/524286