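Test program: read vehicle positioning data from Kafka, compute and filter it, and save the positions that fall outside a specified geofence to MySQL for further processing.

The test program comes from a business requirement: analyze the tracks of monitored vehicles in real time and raise an alarm promptly when a vehicle leaves its geofence. Because the scenario has strict real-time requirements, it is a good fit for processing directly in Flink. If the positioning data were first saved to Doris and then computed on a schedule, timeliness could probably not be guaranteed.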

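Because Flink jobs are mainly written in Java or Scala, I was forced to learn Java in order to write this program. The example is written in IDEA. (An aside: many Java and C# concepts, and even the syntax, are already very close, so writing the code is not hard. The hard part is understanding IDEA's configuration management, package references, and build and publish environment, which is a real headache for a newcomer used to VS, "the best IDE in the universe" (╥﹏╥).)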

The project's pom configuration is as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>catsti.flink</groupId>
        <artifactId>kafka-compute-mysql</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <flink.version>1.13.2</flink.version>
            <java.version>1.8</java.version>
            <scala.binary.version>2.11</scala.binary.version>
            <maven.compiler.source>${java.version}</maven.compiler.source>
            <maven.compiler.target>${java.version}</maven.compiler.target>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-java</artifactId>
                <version>${flink.version}</version>
                <scope>compile</scope>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
                <version>${flink.version}</version>
                <scope>compile</scope>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-clients_${scala.binary.version}</artifactId>
                <version>${flink.version}</version>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>5.1.34</version>
            </dependency>
            <dependency>
                <groupId>org.apache.commons</groupId>
                <artifactId>commons-dbcp2</artifactId>
                <version>2.1.1</version>
            </dependency>
            <!-- The connector version is kept in line with flink.version
                 (the original pinned 1.11.3 against Flink 1.13.2). -->
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
                <version>${flink.version}</version>
                <scope>compile</scope>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.2.28</version>
            </dependency>
            <dependency>
                <groupId>org.slf4j</groupId>
                <artifactId>slf4j-simple</artifactId>
                <version>1.7.25</version>
                <scope>compile</scope>
            </dependency>
        </dependencies>
    </project>

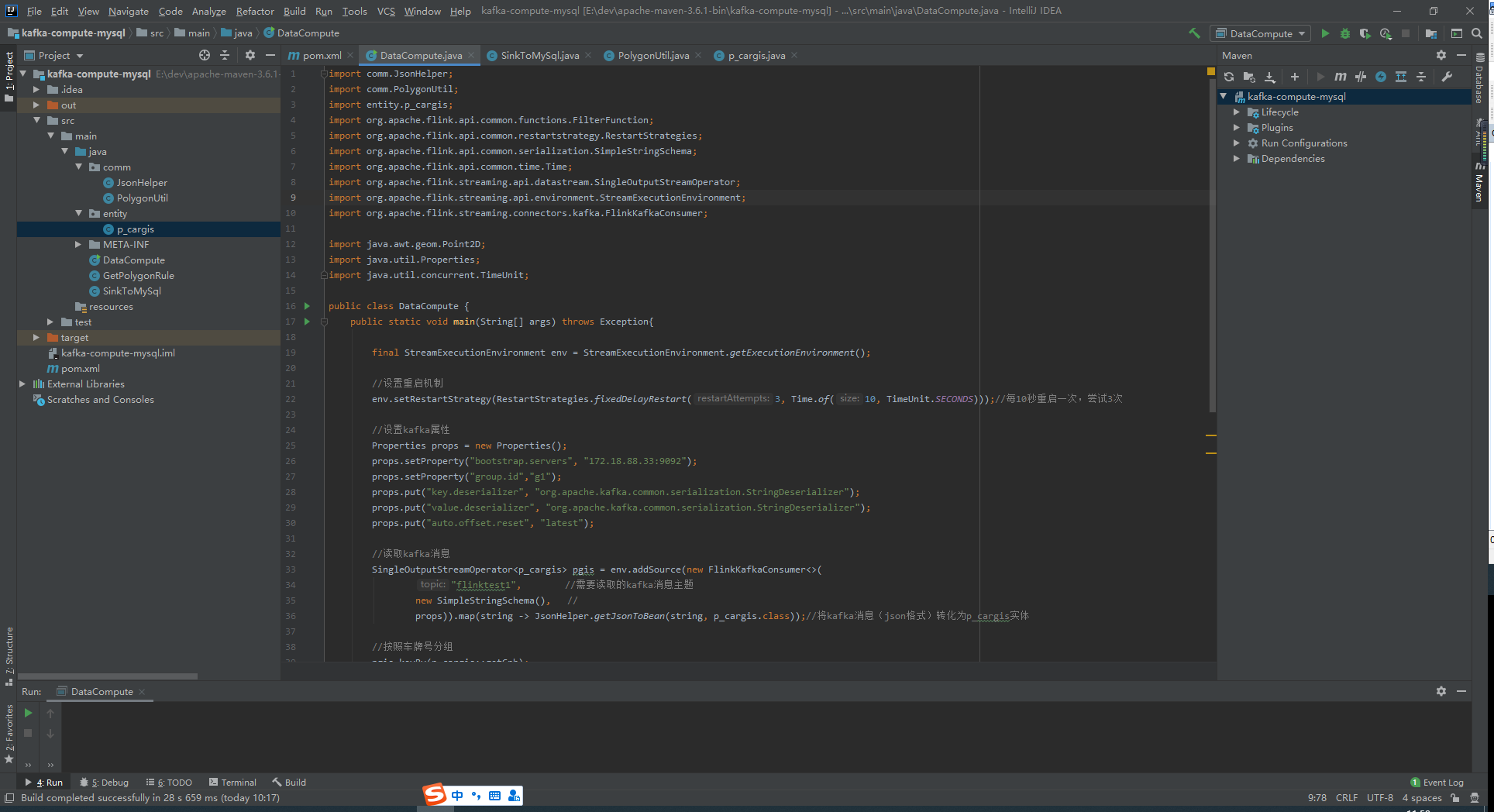

The main class is as follows:

    import comm.JsonHelper;
    import comm.PolygonUtil;
    import entity.p_cargis;
    import org.apache.flink.api.common.functions.FilterFunction;
    import org.apache.flink.api.common.restartstrategy.RestartStrategies;
    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.api.common.time.Time;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

    import java.awt.geom.Point2D;
    import java.util.Properties;
    import java.util.concurrent.TimeUnit;

    public class DataCompute {
        public static void main(String[] args) throws Exception {
            final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // Restart strategy: at most 3 restart attempts, 10 seconds apart
            env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, Time.of(10, TimeUnit.SECONDS)));

            // Kafka properties
            Properties props = new Properties();
            props.setProperty("bootstrap.servers", "172.18.88.33:9092");
            props.setProperty("group.id", "g1");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("auto.offset.reset", "latest");

            // Read the Kafka messages
            SingleOutputStreamOperator<p_cargis> pgis = env.addSource(new FlinkKafkaConsumer<>(
                            "flinktest1",             // Kafka topic to read
                            new SimpleStringSchema(), // messages are read as plain strings
                            props))
                    // convert each Kafka message (JSON) into a p_cargis entity
                    .map(string -> JsonHelper.getJsonToBean(string, p_cargis.class));

            // Group by license plate. keyBy returns a new stream and its result is
            // not used here, so the stateless filter below runs on the un-keyed stream.
            pgis.keyBy(p_cargis::getCph);

            // Filter the data
            SingleOutputStreamOperator<p_cargis> filter = pgis.filter(new FilterFunction<p_cargis>() {
                @Override
                public boolean filter(p_cargis value) throws Exception {
                    // Keep only the points that are NOT inside the specified area.
                    // x carries latitude and y carries longitude, so the fence vertices
                    // returned by GetPolygonRule must use the same convention.
                    Point2D.Double point = new Point2D.Double();
                    point.x = value.lat;
                    point.y = value.lon;
                    return !PolygonUtil.isInPolygon(point, GetPolygonRule.getTestPolygonRule());
                }
            });
            filter.print();

            // Save the filtered data to MySQL
            filter.addSink(new SinkToMySql()).name("Save to Mysql");

            // Start execution
            env.execute("Test program (read Kafka, check whether vehicle positions leave the fence, write violations to MySQL)");
        }
    }
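The main class calls `GetPolygonRule.getTestPolygonRule()`, but that class is not listed in this post. As a rough idea of what it might look like, here is a minimal sketch. The class name `GetPolygonRuleSketch` and the coordinates are made up for illustration; the only thing taken from the code above is the convention that `x` holds latitude and `y` holds longitude.

```java
import java.awt.geom.Point2D;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for the GetPolygonRule class, which this post does not show.
class GetPolygonRuleSketch {
    // Built once and reused, so the filter does not rebuild the list for every record.
    private static final List<Point2D.Double> TEST_FENCE = buildTestFence();

    private static List<Point2D.Double> buildTestFence() {
        // Made-up rectangular fence; x = latitude, y = longitude (same as DataCompute).
        List<Point2D.Double> pts = new ArrayList<>();
        pts.add(new Point2D.Double(24.40, 118.00));
        pts.add(new Point2D.Double(24.40, 118.20));
        pts.add(new Point2D.Double(24.60, 118.20));
        pts.add(new Point2D.Double(24.60, 118.00));
        return Collections.unmodifiableList(pts);
    }

    // Returns the fence vertices in order around the polygon.
    static List<Point2D.Double> getTestPolygonRule() {
        return TEST_FENCE;
    }
}
```

In a real job the vertices would more likely be loaded from configuration or a database instead of being hard-coded.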

Polygon utility class (used to decide whether a given point lies inside a given area):

    package comm;

    import java.awt.geom.Point2D;
    import java.util.List;

    public class PolygonUtil {
        /** Earth radius in meters (WGS84 equatorial radius). */
        private static final double EARTH_RADIUS = 6378137.0;

        private static double rad(double d) {
            return d * Math.PI / 180.0;
        }

        /**
         * Checks whether point 1 lies within a circle around point 2.
         * The distance is computed in meters, so radius must be in meters too.
         *
         * @param radius circle radius in meters
         * @param lat1   latitude of the test point
         * @param lng1   longitude of the test point
         * @param lat2   latitude of the circle center
         * @param lng2   longitude of the circle center
         * @return true if the great-circle distance is at most radius
         */
        public static boolean isInCircle(double radius, double lat1, double lng1, double lat2, double lng2) {
            double radLat1 = rad(lat1);
            double radLat2 = rad(lat2);
            double a = radLat1 - radLat2;
            double b = rad(lng1) - rad(lng2);
            // Haversine formula
            double s = 2 * Math.asin(Math.sqrt(Math.pow(Math.sin(a / 2), 2)
                    + Math.cos(radLat1) * Math.cos(radLat2) * Math.pow(Math.sin(b / 2), 2)));
            s = s * EARTH_RADIUS;
            // Round to 4 decimals; divide by 10000.0 to avoid integer division
            s = Math.round(s * 10000) / 10000.0;
            return s <= radius;
        }

        /**
         * Checks whether a point lies inside a rectangular area.
         *
         * @param lat    latitude of the test point
         * @param lng    longitude of the test point
         * @param minLat latitude bound 1
         * @param maxLat latitude bound 2
         * @param minLng longitude bound 1
         * @param maxLng longitude bound 2
         * @return true if the point is inside the rectangle
         */
        public static boolean isInRectangleArea(double lat, double lng, double minLat,
                                                double maxLat, double minLng, double maxLng) {
            if (!isInRange(lat, minLat, maxLat)) { // outside the latitude range
                return false;
            }
            if (minLng * maxLng > 0) { // both longitude bounds in the same hemisphere
                return isInRange(lng, minLng, maxLng);
            }
            if (Math.abs(minLng) + Math.abs(maxLng) < 180) { // rectangle spans the prime meridian
                return isInRange(lng, minLng, maxLng);
            }
            // rectangle spans the 180° meridian
            double left = Math.max(minLng, maxLng);
            double right = Math.min(minLng, maxLng);
            return isInRange(lng, left, 180) || isInRange(lng, right, -180);
        }

        /**
         * Checks whether a value lies between two bounds (inclusive, in either order).
         *
         * @param point value to test
         * @param left  bound 1
         * @param right bound 2
         * @return true if point is within [min(left, right), max(left, right)]
         */
        public static boolean isInRange(double point, double left, double right) {
            return point >= Math.min(left, right) && point <= Math.max(left, right);
        }

        /**
         * Checks whether a point lies inside a polygon (ray casting).
         * Points on an edge or on a vertex count as inside.
         *
         * @param point test point
         * @param pts   polygon vertices, in order
         * @return true if the point is inside the polygon or on its boundary
         */
        public static boolean isInPolygon(Point2D.Double point, List<Point2D.Double> pts) {
            int N = pts.size();
            boolean boundOrVertex = true;
            int intersectCount = 0;   // number of crossings
            double precision = 2e-10; // tolerance when comparing floating-point values with 0
            Point2D.Double p1, p2;    // neighboring vertices
            Point2D.Double p = point; // current point

            p1 = pts.get(0);
            for (int i = 1; i <= N; ++i) {
                if (p.equals(p1)) {
                    return boundOrVertex;
                }
                p2 = pts.get(i % N);
                if (p.x < Math.min(p1.x, p2.x) || p.x > Math.max(p1.x, p2.x)) {
                    p1 = p2;
                    continue;
                }
                // ray casting
                if (p.x > Math.min(p1.x, p2.x) && p.x < Math.max(p1.x, p2.x)) {
                    if (p.y <= Math.max(p1.y, p2.y)) {
                        if (p1.x == p2.x && p.y >= Math.min(p1.y, p2.y)) {
                            return boundOrVertex;
                        }
                        if (p1.y == p2.y) {
                            if (p1.y == p.y) {
                                return boundOrVertex;
                            } else {
                                ++intersectCount;
                            }
                        } else {
                            double xinters = (p.x - p1.x) * (p2.y - p1.y) / (p2.x - p1.x) + p1.y;
                            if (Math.abs(p.y - xinters) < precision) {
                                return boundOrVertex;
                            }
                            if (p.y < xinters) {
                                ++intersectCount;
                            }
                        }
                    }
                } else {
                    if (p.x == p2.x && p.y <= p2.y) {
                        Point2D.Double p3 = pts.get((i + 1) % N);
                        if (p.x >= Math.min(p1.x, p3.x) && p.x <= Math.max(p1.x, p3.x)) {
                            ++intersectCount;
                        } else {
                            intersectCount += 2;
                        }
                    }
                }
                p1 = p2;
            }
            // an even count means outside, an odd count means inside
            return intersectCount % 2 != 0;
        }
    }
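One way to sanity-check the results of `isInPolygon` is to compare them with the JDK's own `java.awt.geom.Path2D`, which supports the same even-odd rule. The sketch below is only a cross-check, not part of the original project; the class name `FenceCrossCheck` and the square used in `main` are made up. Note that `Path2D` has its own definition of which boundary points count as inside, so the two can disagree for points exactly on an edge or vertex.

```java
import java.awt.geom.Path2D;

// Hypothetical helper for cross-checking point-in-polygon results with the JDK.
class FenceCrossCheck {
    // vertices[i] = {x, y}, listed in order around the polygon
    static boolean contains(double[][] vertices, double x, double y) {
        Path2D.Double path = new Path2D.Double(Path2D.WIND_EVEN_ODD);
        path.moveTo(vertices[0][0], vertices[0][1]);
        for (int i = 1; i < vertices.length; i++) {
            path.lineTo(vertices[i][0], vertices[i][1]);
        }
        path.closePath(); // connect the last vertex back to the first
        return path.contains(x, y);
    }

    public static void main(String[] args) {
        double[][] square = {{0, 0}, {0, 10}, {10, 10}, {10, 0}};
        System.out.println(contains(square, 5, 5));  // point in the middle of the square
        System.out.println(contains(square, 15, 5)); // point to the side of the square
    }
}
```

For points clearly inside or clearly outside the fence, both implementations should agree; a mismatch there would point to a problem with the vertex order or the lat/lon convention.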

Vehicle positioning data entity class:

    package entity;

    public class p_cargis {
        public String cph; // license plate number
        public int cpys;   // license plate color
        public String dt;  // time the positioning record was generated
        public double lat; // latitude
        public double lon; // longitude
        public int xssd;   // driving speed

        public p_cargis() {
        }

        public String getCph() {
            return cph;
        }

        public int getCpys() {
            return cpys;
        }

        public String getDt() {
            return dt;
        }

        public double getLat() {
            return lat;
        }

        public double getLon() {
            return lon;
        }

        public int getXssd() {
            return xssd;
        }

        public void setCph(String cph) {
            this.cph = cph;
        }

        public void setCpys(int cpys) {
            this.cpys = cpys;
        }

        public void setDt(String dt) {
            this.dt = dt;
        }

        public void setLat(double lat) {
            this.lat = lat;
        }

        public void setLon(double lon) {
            this.lon = lon;
        }

        public void setXssd(int xssd) {
            this.xssd = xssd;
        }
    }

Writing the filtered data to MySQL is handled by the SinkToMySql class:

    import entity.p_cargis;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;

    /**
     * Saves the results to the database.
     */
    public class SinkToMySql extends RichSinkFunction<p_cargis> {
        private PreparedStatement ps;
        private Connection connection;

        /**
         * The connection is created in open(), so invoke() does not have to
         * open and release a connection for every record.
         */
        @Override
        public void open(Configuration parameters) throws Exception {
            super.open(parameters);
            connection = getConnection();
            String sql = "insert into T_ResultData(datatype, dataname, datavalue) values(?, ?, ?);";
            ps = this.connection.prepareStatement(sql);
        }

        @Override
        public void close() throws Exception {
            super.close();
            // Release resources: close the statement before its connection
            if (ps != null) {
                ps.close();
            }
            if (connection != null) {
                connection.close();
            }
        }

        /**
         * invoke() is called once for every record to insert.
         */
        @Override
        public void invoke(p_cargis value, Context context) throws Exception {
            insertDB(value);
        }

        private void insertDB(p_cargis value) throws Exception {
            // Bind the values and run the insert
            ps.setInt(1, value.getCpys());
            ps.setString(2, value.getCph());
            ps.setString(3, String.valueOf(value.getLat()) + "-" + String.valueOf(value.getLon()));
            ps.executeUpdate();
        }

        private static Connection getConnection() throws Exception {
            try {
                Class.forName("com.mysql.jdbc.Driver");
                return DriverManager.getConnection(
                        "jdbc:mysql://172.16.170.74:3306/source_data?useUnicode=true&characterEncoding=UTF-8",
                        "username", "password");
            } catch (Exception e) {
                System.out.println("-----------mysql get connection has exception , msg = " + e.getMessage());
                // Rethrow so open() fails here instead of hitting a NullPointerException later
                throw e;
            }
        }
    }

The overall project structure is as follows:

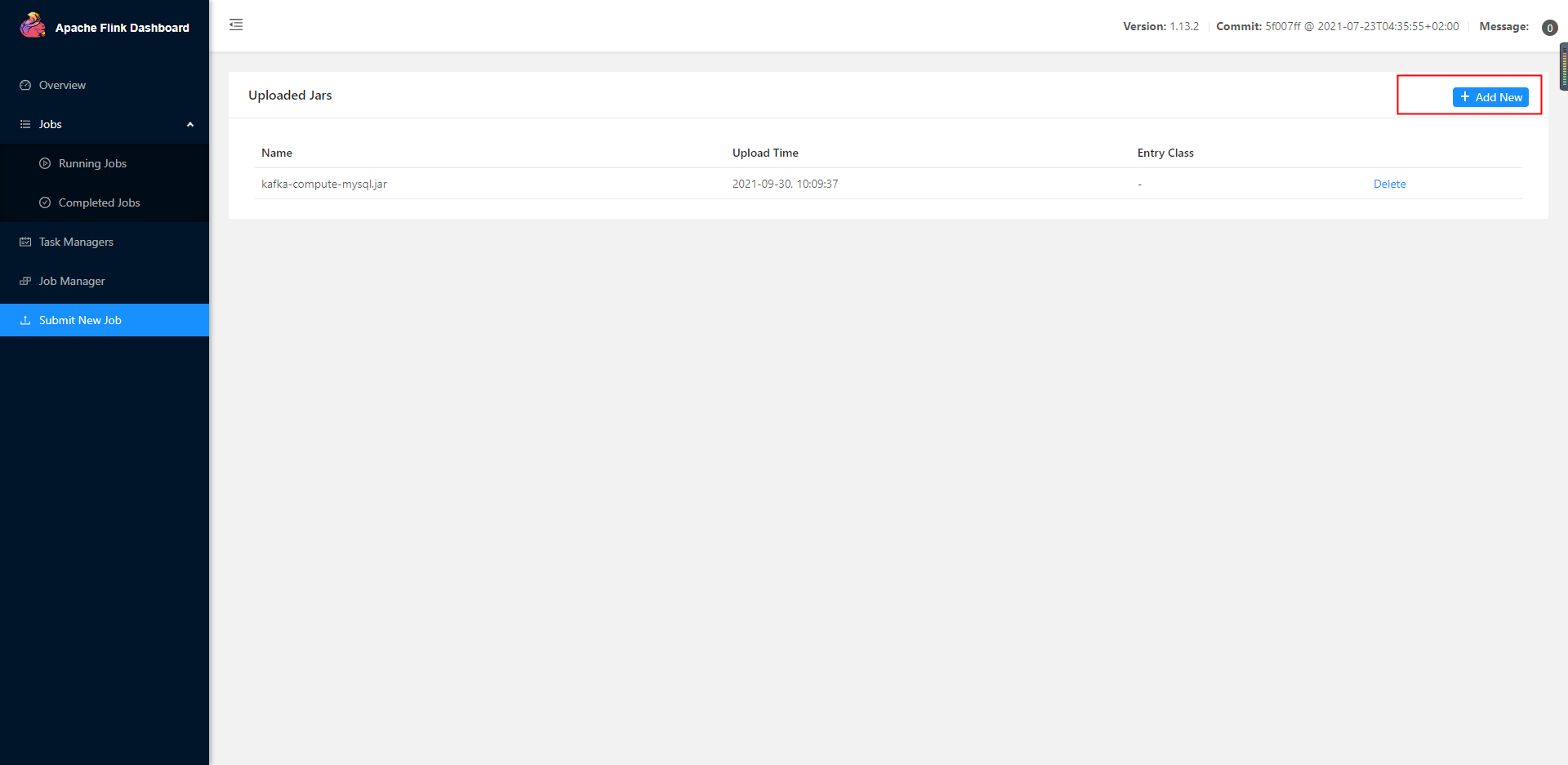

Build the project into a jar, then add the jar on the Flink server:

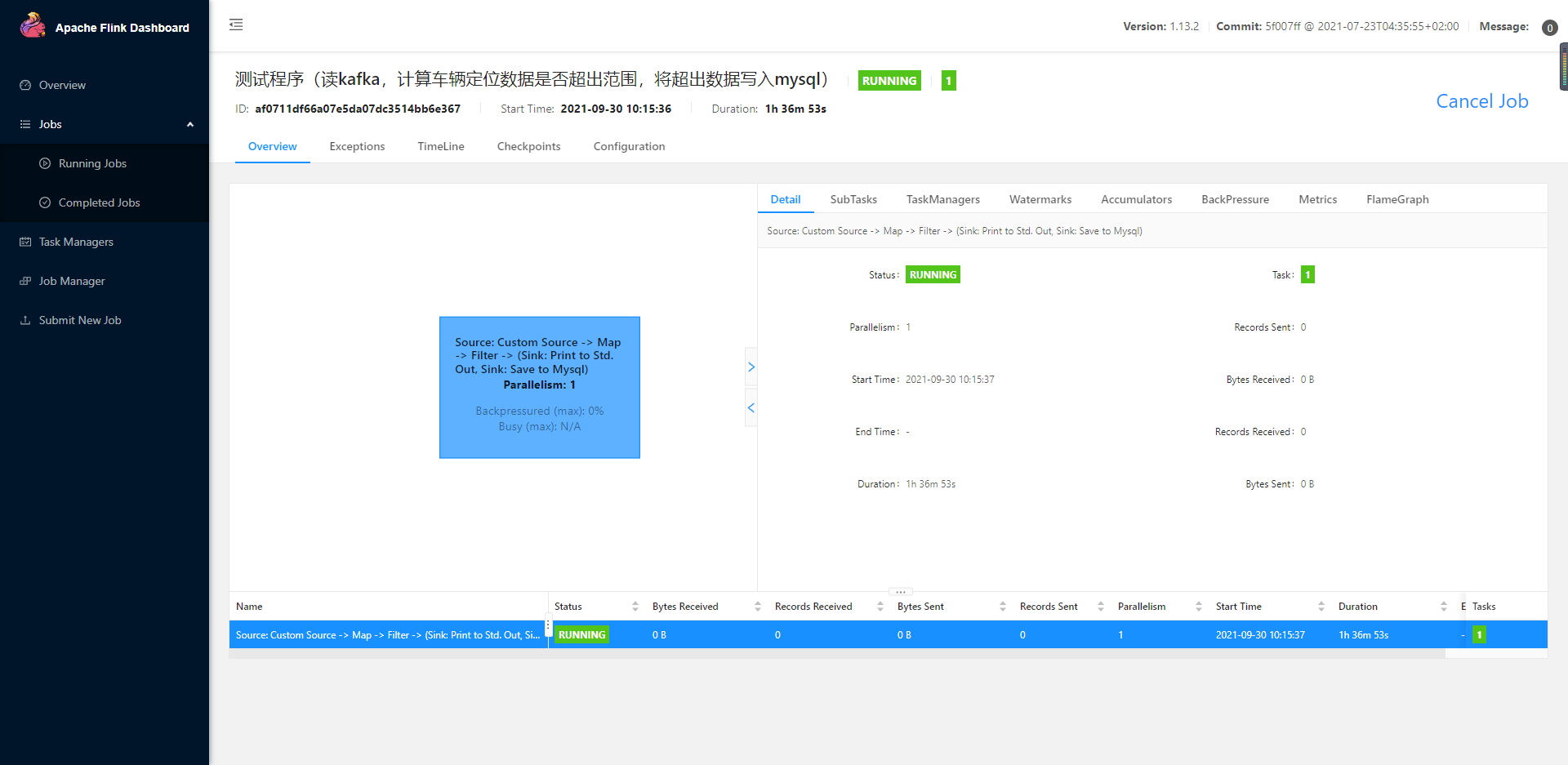

After that, submit it and the job starts running:

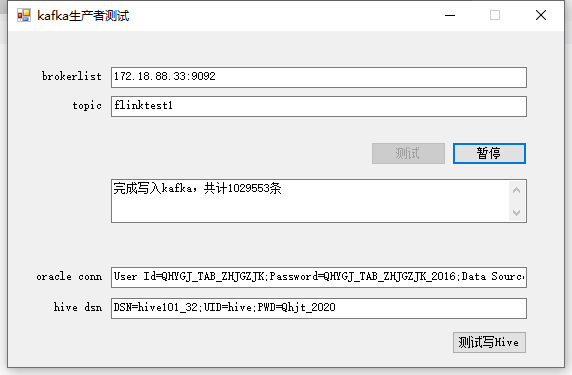

Use a test program to write test data to Kafka:

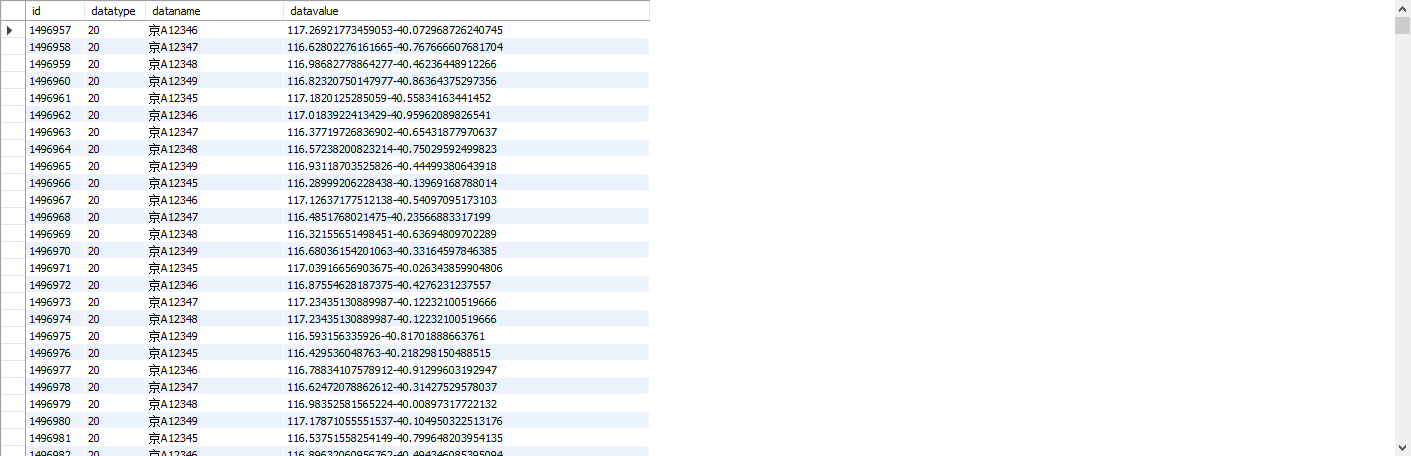
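The test program itself is only shown as a screenshot. As a rough sketch of the kind of message it might send, the snippet below builds one JSON record whose field names match the `p_cargis` entity. Everything else is an assumption: the class name `TestMessageSketch`, the plate number, the `dt` format, and that `JsonHelper` maps JSON keys to fields by name. Sending the string to the `flinktest1` topic with a Kafka producer is left out.

```java
import java.util.Locale;

// Hypothetical builder for test messages; not the test program used in this post.
class TestMessageSketch {
    // Builds one JSON record using the p_cargis field names.
    static String toJson(String cph, int cpys, String dt, double lat, double lon, int xssd) {
        // Locale.ROOT keeps the decimal separator a dot regardless of system locale.
        return String.format(Locale.ROOT,
                "{\"cph\":\"%s\",\"cpys\":%d,\"dt\":\"%s\",\"lat\":%.6f,\"lon\":%.6f,\"xssd\":%d}",
                cph, cpys, dt, lat, lon, xssd);
    }

    public static void main(String[] args) {
        // Made-up sample values
        System.out.println(toJson("TEST001", 1, "2021-08-20 10:00:00", 24.5, 118.1, 60));
    }
}
```

Generating a mix of positions inside and outside the fence makes it easy to confirm that only the outside ones reach MySQL.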

Soon MySQL contains the alert data filtered out by the Flink code (vehicle positions that are not inside the specified area):