Python中提供了函数和类来实现多进程

创建多进程

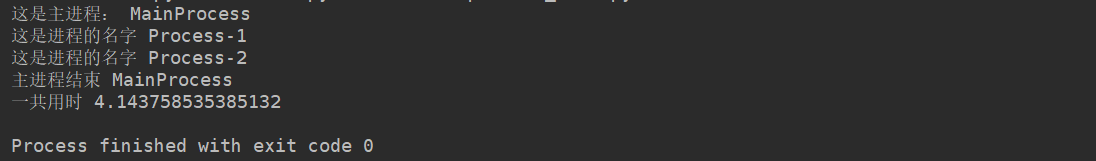

使用函数方式

import multiprocessing

import time

def worker(sec):

time.sleep(sec)

print('这是进程的名字', multiprocessing.current_process().name)

time.sleep(sec)

if __name__ == '__main__':

print('这是主进程:', multiprocessing.current_process().name)

s_time = time.time()

p1 = multiprocessing.Process(target=worker, args=(2,))

p2 = multiprocessing.Process(target=worker, args=(2,))

p1.start()

p2.start()

p1.join()

p2.join()

print('主进程结束', multiprocessing.current_process().name)

print('一共用时', time.time()-s_time)

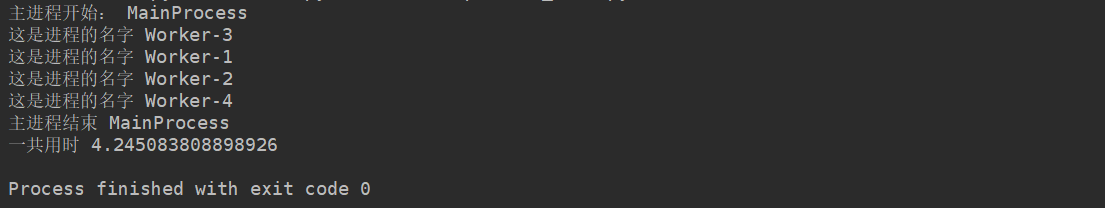

使用类方式

import multiprocessing

import time

class Worker(multiprocessing.Process):

def __init__(self, sec):

super(Worker, self).__init__()

self.sec = sec

def run(self):

time.sleep(self.sec)

print('这是进程的名字', multiprocessing.current_process().name)

time.sleep(self.sec)

if __name__ == '__main__':

print('主进程开始:', multiprocessing.current_process().name)

s_time = time.time()

pro_list = []

for i in range(4):

worker = Worker(2)

pro_list.append(worker)

for p in pro_list:

p.start()

for p in pro_list:

p.join()

print('主进程结束', multiprocessing.current_process().name)

print('一共用时', time.time()-s_time)

使用以上两种方式创建多进程时,join()方法与多线程效果相同,多线程设置守护线程的命令是

t.setDaemon(True), 而多进程设置守护进程的命令是p.daemon = True

进程锁Lock()

进程锁可以避免因为多个进程访问共享资源而发生冲突。

- 不使用进程锁

import multiprocessing

import sys

def worker(f):

f = open(f, 'w')

n = 100000

while n > 1:

f.write("The number is %s

" %n)

n -= 1

f.close()

if __name__ == "__main__":

f = "file.txt"

p1 = multiprocessing.Process(target=worker, args=(f,))

p2 = multiprocessing.Process(target=worker, args=(f,))

p1.start()

p2.start()

可以看到,文件在写入的时候并没有按照我们想要的顺序。

- 使用进程锁

import multiprocessing

import sys

def worker(lock, f):

lock.acquire()

fs = open(f, 'a+')

n = 100

while n > 1:

fs.write("The number is

")

n -= 1

fs.close()

lock.release()

if __name__ == "__main__":

lock = multiprocessing.Lock()

f = "file.txt"

p1 = multiprocessing.Process(target=worker, args=(lock, f))

p2 = multiprocessing.Process(target=worker, args=(lock, f))

p1.start()

p2.start()

Semaphore

Semaphore控制访问共享资源的数量

import multiprocessing

import time

def worker(s, i):

s.acquire()

print("进程%s acquire" %multiprocessing.current_process().name);

time.sleep(i)

print('进程%s 正在访问资源' %multiprocessing.current_process().name)

print("进程%s release" %multiprocessing.current_process().name);

s.release()

if __name__ == "__main__":

s = multiprocessing.Semaphore(2)

pro_list = []

for i in range(5):

p = multiprocessing.Process(target = worker, args=(s, i))

pro_list.append(p)

for p in pro_list:

p.start()

由于我们设置了访问某个资源的最大进程数是2,所以最多只能有两个进程可以同时访问该资源,当某一个进程释放之后,其他的进程才可以访问,以此来保证不超过最大访问数。

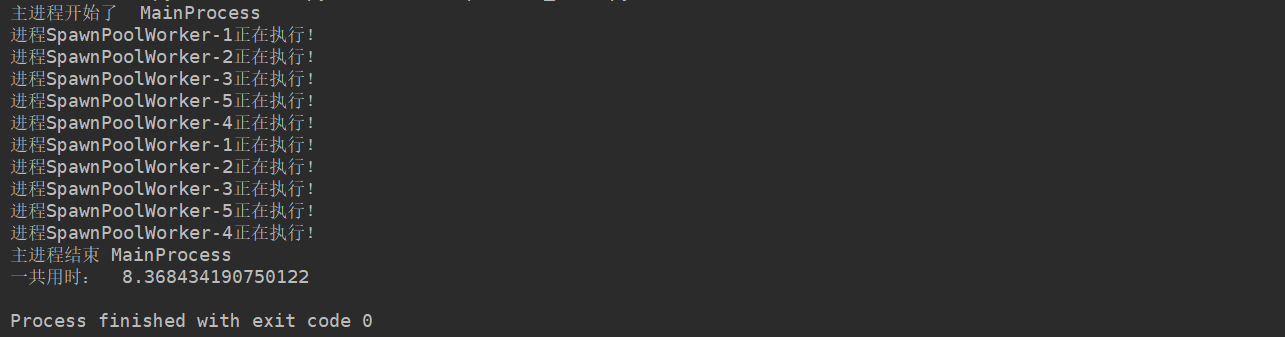

进程池Pool

import multiprocessing

from multiprocessing import Process,Pool

import time

def run(sec):

time.sleep(sec)

print('进程%s正在执行!' % multiprocessing.current_process().name)

time.sleep(sec)

if __name__ == '__main__':

print('主进程开始了 ' ,multiprocessing.current_process().name)

s_time = time.time()

p = Pool(5) # 最大并发进程数

for i in range(10):

p.apply_async(run, args=(2,))

p.close() # 关闭进程池

p.join()

print('主进程结束', multiprocessing.current_process().name)

print('一共用时: ', time.time()-s_time)