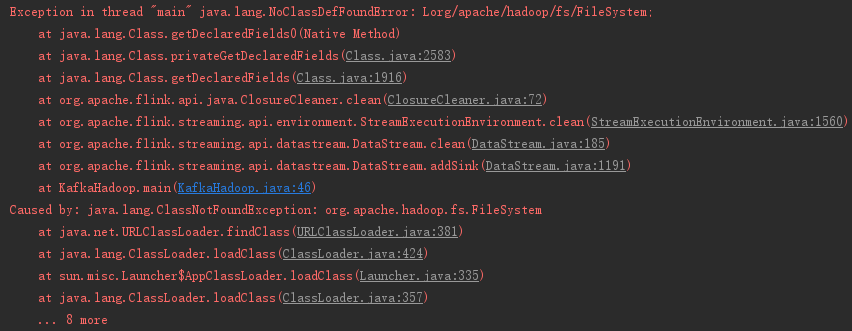

Error symptom:

Exception in thread "main" java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
    at java.lang.Class.getDeclaredFields0(Native Method)
    at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
    at java.lang.Class.getDeclaredFields(Class.java:1916)
    at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1560)
    at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
    at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1191)
    at KafkaHadoop.main(KafkaHadoop.java:46)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
    at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    ... 8 more

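Background:

I was building a kafka + flink + hadoop pipeline for reading and storing data, and this error kept coming up. The code itself looked fine, so it had me stuck for a long time.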

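Solution:

Step 1: I suspected a dependency conflict, so I commented out the hadoop-related dependencies in the pom one by one. The error was still there afterwards.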
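A quicker way to test the conflict theory than commenting dependencies out one at a time is to ask the class loader which classpath entries actually contain the class. The sketch below is not part of my original debugging; the class name `ClassCopies` and its `find` helper are made up for illustration, and it assumes it is run with the same classpath as the failing job. An empty result means the class is missing altogether, while more than one result points to a possible conflict.

```java
import java.io.IOException;
import java.net.URL;
import java.util.Collections;
import java.util.List;

// Hypothetical helper, not part of Flink or Hadoop.
class ClassCopies {

    /** Returns every classpath location that provides the given class file. */
    static List<URL> find(String className) throws IOException {
        // org.apache.hadoop.fs.FileSystem -> org/apache/hadoop/fs/FileSystem.class
        String resource = className.replace('.', '/') + ".class";
        ClassLoader loader = ClassLoader.getSystemClassLoader();
        return Collections.list(loader.getResources(resource));
    }

    public static void main(String[] args) throws IOException {
        String name = args.length > 0 ? args[0] : "org.apache.hadoop.fs.FileSystem";
        List<URL> copies = find(name);
        if (copies.isEmpty()) {
            System.out.println(name + " is not on the classpath (missing jar)");
        } else {
            System.out.println(name + " found in " + copies.size() + " location(s):");
            for (URL url : copies) {
                System.out.println("  " + url);
            }
        }
    }
}
```

In my case the result would have been empty, which matches the `ClassNotFoundException` at the bottom of the stack trace: the problem was a missing jar, not a conflict.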

Step 2: I then suspected that the jar was simply missing. Searching MVNREPOSITORY for dependencies related to org.apache.hadoop.fs.FileSystem turned up the following:

hadoop-hdfs, flink-connector-filesystem_2.11, and flink-hadoop-fs. Adding them made no difference.

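Step 3: So I searched Baidu for information about org.apache.hadoop.fs.FileSystem.

(1) I found this site entry:

(2) Clicking through, I found:

(3) Then I clicked in again:

I could not fully follow what the page said, but it seemed likely that the class lived in one of the jars listed there, so I went back to MVNREPOSITORY and searched for hadoop-core: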

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core -->
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
    <version>1.1.0</version>
</dependency>

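After adding this dependency, the error went away. (Note that hadoop-core is the Hadoop 1.x artifact; in Hadoop 2.x and later, org.apache.hadoop.fs.FileSystem ships in hadoop-common, so the artifact to add depends on the Hadoop version you are targeting.)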
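To confirm that the fix took effect, and to see which jar the class is really coming from, a small check like the following can be run with the project's classpath. This is a minimal sketch added for illustration; `ClassOrigin` and `describe` are hypothetical names, not anything from Flink or Hadoop.

```java
import java.security.CodeSource;

// Hypothetical helper, not part of Flink or Hadoop.
class ClassOrigin {

    /** Tries to load the class and reports where it was loaded from. */
    static String describe(String className) {
        try {
            // initialize=false: only load the class, do not run its static initializers
            Class<?> clazz = Class.forName(className, false, ClassOrigin.class.getClassLoader());
            CodeSource source = clazz.getProtectionDomain().getCodeSource();
            // Classes from the JDK itself have no code source
            return source == null
                    ? "loaded from the JDK runtime"
                    : "loaded from " + source.getLocation();
        } catch (ClassNotFoundException | LinkageError e) {
            return "missing: " + e;
        }
    }

    public static void main(String[] args) {
        String name = args.length > 0 ? args[0] : "org.apache.hadoop.fs.FileSystem";
        System.out.println(name + " -> " + describe(name));
    }
}
```

Before the dependency is added, this should report the class as missing; afterwards it should print the location of the jar that provides it, which in my setup would be the hadoop-core jar in the local Maven repository.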

Complete pom:

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>yk.bigdate</groupId>
    <artifactId>flinkHDFS</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <flink.version>1.6.1</flink.version>
        <slf4j.version>1.7.7</slf4j.version>
        <log4j.version>1.2.17</log4j.version>
    </properties>

    <dependencies>
        <!--******************* flink *******************-->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.11</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <!-- <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_2.11</artifactId>
            <version>${flink.version}</version>
        </dependency>-->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka-0.11_2.11</artifactId>
            <version>${flink.version}</version>
            <scope>compile</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-filesystem_2.11</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-core</artifactId>
            <version>1.1.0</version>
        </dependency>
        <!--******************* kafka *******************-->
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
            <version>1.1.1</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.3</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
            <!-- Build the jar -->
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <archive>
                        <manifest>
                            <mainClass>com.allen.capturewebdata.Main</mainClass>
                        </manifest>
                    </archive>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>