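In the previous post I managed the HDFS file system through Hadoop's Eclipse plugin. In a real production environment, the Hadoop file system is usually managed programmatically.

Below we develop an application that manages the Hadoop file system in code.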

First, open Eclipse and create a new Map/Reduce Project.

Click Next, fill in the project name, and configure the Hadoop installation directory.

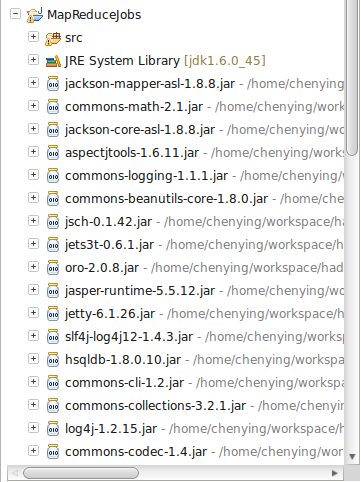

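The newly created project automatically imports the Hadoop dependency JAR files, so we do not have to add them by hand.

Next we call the Hadoop file system API to perform file operations. We start with a file management utility class.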

```java
import java.io.Closeable;
import java.io.IOException;
import java.text.SimpleDateFormat;
import java.util.Date;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSUtil {

    /** Closes a resource if it was opened; a failure is printed, not thrown. */
    private static void closeQuietly(Closeable resource) {
        if (resource != null) {
            try {
                resource.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Copies a local file to HDFS and lists the destination.
     * @param srcFilePath local source path
     * @param dstFilePath HDFS destination path
     */
    public static void copyFile2HDFS(String srcFilePath, String dstFilePath) {
        Configuration conf = new Configuration();
        Path src = new Path(srcFilePath);
        Path dst = new Path(dstFilePath);
        FileSystem hdfs = null;
        try {
            hdfs = FileSystem.get(conf);
            hdfs.copyFromLocalFile(src, dst);
            FileStatus[] files = hdfs.listStatus(dst);
            if (files != null) {
                for (FileStatus file : files) {
                    System.out.println("the file is: " + file.getPath().getName());
                }
            } else {
                System.out.println("no files");
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Creates a file on HDFS with the given content.
     * @param content text to write, encoded as UTF-8
     * @param dstFile HDFS path of the file to create
     */
    public static void createFileInHDFS(String content, String dstFile) {
        Configuration conf = new Configuration();
        // conf.addResource(new Path("/home/chenying/hadoop-1.2.0/conf/core-site.xml"));
        Path dst = new Path(dstFile);
        FileSystem hdfs = null;
        FSDataOutputStream out = null;
        try {
            hdfs = FileSystem.get(conf);
            out = hdfs.create(dst);
            // Encode explicitly; out.writeBytes(content) would keep only the
            // low byte of each char and corrupt non-ASCII text.
            out.write(content.getBytes("UTF-8"));
            out.flush();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            // Close the stream before the file system it belongs to.
            closeQuietly(out);
            closeQuietly(hdfs);
        }
    }

    /**
     * Creates a directory on HDFS, including missing parent directories.
     * @param dstDir HDFS path of the directory to create
     */
    public static void createDirectoryInHDFS(String dstDir) {
        Configuration conf = new Configuration();
        Path dst = new Path(dstDir);
        FileSystem hdfs = null;
        try {
            hdfs = FileSystem.get(conf);
            hdfs.mkdirs(dst);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Renames a file.
     * @param originalFile current HDFS path
     * @param newFile new HDFS path
     */
    public static void renameFileInHDFS(String originalFile, String newFile) {
        Configuration conf = new Configuration();
        Path originalPath = new Path(originalFile);
        Path newPath = new Path(newFile);
        FileSystem hdfs = null;
        try {
            // hdfs = newPath.getFileSystem(conf);
            hdfs = FileSystem.get(conf);
            boolean isRename = hdfs.rename(originalPath, newPath);
            String result = isRename ? "succeeded" : "failed";
            System.out.println("file rename " + result);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Prints the last modification time of a file.
     * @param dstFile HDFS path of the file
     */
    public static void getFileLastModifyTime(String dstFile) {
        Configuration conf = new Configuration();
        Path dstPath = new Path(dstFile);
        FileSystem hdfs = null;
        try {
            // hdfs = dstPath.getFileSystem(conf);
            hdfs = FileSystem.get(conf);
            FileStatus file = hdfs.getFileStatus(dstPath);
            long time = file.getModificationTime();
            System.out.println("the last modify time is: "
                    + new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").format(new Date(time)));
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Prints whether a file exists.
     * @param dstFile HDFS path to check
     */
    public static void checkFileIsExists(String dstFile) {
        Configuration conf = new Configuration();
        Path dstPath = new Path(dstFile);
        FileSystem hdfs = null;
        try {
            // hdfs = dstPath.getFileSystem(conf);
            hdfs = FileSystem.get(conf);
            boolean flag = hdfs.exists(dstPath);
            System.out.println("file exists: " + flag);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Prints the hosts that store the blocks of a file.
     * @param dstFile HDFS path of the file
     */
    public static void getFileLocations(String dstFile) {
        Configuration conf = new Configuration();
        Path dstPath = new Path(dstFile);
        FileSystem hdfs = null;
        try {
            // hdfs = dstPath.getFileSystem(conf);
            hdfs = FileSystem.get(conf);
            FileStatus file = hdfs.getFileStatus(dstPath);
            BlockLocation[] blkLocations = hdfs.getFileBlockLocations(file, 0, file.getLen());
            if (blkLocations != null) {
                for (BlockLocation location : blkLocations) {
                    for (String host : location.getHosts()) {
                        System.out.println("the location's host is: " + host);
                    }
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }

    /**
     * Deletes a file or directory.
     * @param dstFile HDFS path to delete
     */
    public static void deleteFile(String dstFile) {
        Configuration conf = new Configuration();
        Path dstPath = new Path(dstFile);
        FileSystem hdfs = null;
        try {
            // hdfs = dstPath.getFileSystem(conf);
            hdfs = FileSystem.get(conf);
            boolean flag = hdfs.delete(dstPath, true); // a directory is deleted recursively
            System.out.println("is deleted: " + flag);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            closeQuietly(hdfs);
        }
    }
}
```
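A note on why createFileInHDFS writes content.getBytes("UTF-8") instead of calling writeBytes: FSDataOutputStream extends java.io.DataOutputStream, whose writeBytes discards the high eight bits of every character. The following is a minimal sketch using only the JDK, with an in-memory buffer standing in for HDFS; the class and method names (WriteBytesDemo, viaWriteBytes, viaUtf8) are made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class WriteBytesDemo {

    /** Writes text with DataOutputStream.writeBytes, which keeps only the low byte of each char. */
    static byte[] viaWriteBytes(String text) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeBytes(text);
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory buffer
        }
        return buffer.toByteArray();
    }

    /** Writes text as explicitly encoded UTF-8 bytes, as createFileInHDFS does. */
    static byte[] viaUtf8(String text) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory buffer
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) {
        // Four Chinese characters, written as Unicode escapes to avoid source-encoding issues.
        String text = "\u6d4b\u8bd5\u4e2d\u6587";
        String lossy = new String(viaWriteBytes(text), StandardCharsets.UTF_8);
        String exact = new String(viaUtf8(text), StandardCharsets.UTF_8);
        System.out.println("writeBytes round-trips: " + text.equals(lossy));
        System.out.println("UTF-8 round-trips: " + text.equals(exact));
    }
}
```

With writeBytes the four characters shrink to four bytes and cannot be decoded back, while the UTF-8 version produces twelve bytes and round-trips intact.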

(If you enjoy tinkering, you can try refactoring the methods above into interface callbacks; I won't bother with that here.)
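For readers who do want to tinker, here is one possible shape for that callback refactoring. It is only a sketch, assuming Java 8 or later: every name in it (CallbackTemplateDemo, ResourceOpener, ResourceCallback, FakeFileSystem) is hypothetical, and FakeFileSystem is a stand-in so the example runs without a cluster. In the real utility class the opener would call FileSystem.get(conf) and the callback would receive the FileSystem.

```java
import java.io.Closeable;
import java.io.IOException;

public class CallbackTemplateDemo {

    /** Opens the resource; in HDFSUtil this would wrap FileSystem.get(conf). */
    interface ResourceOpener<R extends Closeable> {
        R open() throws IOException;
    }

    /** The operation to run against the open resource. */
    interface ResourceCallback<R extends Closeable, T> {
        T doWith(R resource) throws IOException;
    }

    /** Open, run the callback, and always close; the boilerplate lives in one place. */
    static <R extends Closeable, T> T execute(ResourceOpener<R> opener,
                                              ResourceCallback<R, T> callback) {
        R resource = null;
        try {
            resource = opener.open();
            return callback.doWith(resource);
        } catch (IOException e) {
            e.printStackTrace();
            return null; // same policy as HDFSUtil: report the error, do not rethrow
        } finally {
            if (resource != null) {
                try {
                    resource.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    /** Hypothetical stand-in for a file system handle. */
    static class FakeFileSystem implements Closeable {
        boolean closed = false;

        boolean exists(String path) {
            return path.startsWith("/user/");
        }

        @Override
        public void close() {
            closed = true;
        }
    }

    /** One operation expressed as a callback; the others would follow the same pattern. */
    static Boolean existsViaCallback(FakeFileSystem fs, String path) {
        return execute(() -> fs, (FakeFileSystem open) -> open.exists(path));
    }

    public static void main(String[] args) {
        FakeFileSystem fs = new FakeFileSystem();
        Boolean found = existsViaCallback(fs, "/user/coder/input/hey");
        System.out.println("exists: " + found + ", closed afterwards: " + fs.closed);
    }
}
```

The benefit is that each operation shrinks to a single callback while the open, catch and close logic is written once.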

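If you hit an exception such as Exception in thread "main" java.lang.IllegalArgumentException: Wrong FS: hdfs expected: file:///, copy the Hadoop configuration files core-site.xml, hdfs-site.xml and mapred-site.xml from the ${hadoop.root}/conf/ directory into the Eclipse project's bin directory. The bin directory is on the project's classpath, so new Configuration() then picks up the cluster's file system settings instead of falling back to the default local file system, file:///.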
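To make the cause of that error concrete, the sketch below models the check in plain Java. It is a deliberately simplified illustration, not Hadoop's actual code: WrongFsDemo, checkPath and accepts are made-up names, and only the URI scheme is compared. The idea is that a path carrying its own scheme (hdfs) is rejected when the configured default file system has a different one (file).

```java
import java.net.URI;

public class WrongFsDemo {

    /**
     * Simplified model of the scheme check: a path with an explicit scheme
     * must match the scheme of the file system that handles it.
     */
    static void checkPath(URI defaultFs, URI path) {
        String scheme = path.getScheme();
        if (scheme == null) {
            return; // no scheme: the path is resolved against the default file system
        }
        if (!scheme.equalsIgnoreCase(defaultFs.getScheme())) {
            throw new IllegalArgumentException(
                    "Wrong FS: " + path + ", expected: " + defaultFs);
        }
    }

    /** Convenience wrapper that reports the outcome as a boolean. */
    static boolean accepts(URI defaultFs, URI path) {
        try {
            checkPath(defaultFs, path);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        URI target = URI.create("hdfs://localhost:9000/user/coder/in");

        // Without core-site.xml on the classpath the default file system is local.
        System.out.println("default file:///  -> " + accepts(URI.create("file:///"), target));

        // With the cluster configuration loaded, the schemes agree.
        System.out.println("default hdfs://   -> " + accepts(URI.create("hdfs://localhost:9000"), target));
    }
}
```

This is why either putting core-site.xml on the classpath or obtaining the file system from the path itself (the commented-out getFileSystem(conf) lines in the utility class) makes the error go away.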

Below is the test class.

```java
import org.junit.Test;

public class HdfsClient {

    /** Uploads a file. */
    @Test
    public void testCopyFile2HDFS() {
        String srcFilePath = "/home/chenying/FetchTask.java";
        String dstFilePath = "hdfs://localhost:9000/user/coder/in";
        HDFSUtil.copyFile2HDFS(srcFilePath, dstFilePath);
    }

    /** Creates a file; the content includes Chinese text to exercise UTF-8 encoding. */
    @Test
    public void testCreateFileInHDFS() {
        String content = "hey,Hadoop. 测试中文";
        String dstFile = "hdfs://localhost:9000/user/coder/input/hey";
        HDFSUtil.createFileInHDFS(content, dstFile);
    }

    /** Renames a file. */
    @Test
    public void testRenameFileInHDFS() {
        String originalFile = "hdfs://localhost:9000/user/coder/input/hey";
        String newFile = "hdfs://localhost:9000/user/coder/input/hey_hadoop";
        HDFSUtil.renameFileInHDFS(originalFile, newFile);
    }

    /** Gets the last modification time of a file. */
    @Test
    public void testGetFileLastModifyTime() {
        String dstFile = "hdfs://localhost:9000/user/coder/input/hey_hadoop";
        HDFSUtil.getFileLastModifyTime(dstFile);
    }

    /** Checks whether a file exists. */
    @Test
    public void testCheckFileIsExists() {
        String dstFile = "hdfs://localhost:9000/user/coder/input/hey_hadoop";
        HDFSUtil.checkFileIsExists(dstFile);
    }

    /** Gets the hosts storing a file's blocks. */
    @Test
    public void testGetFileLocations() {
        String dstFile = "hdfs://localhost:9000/user/coder/input/hey_hadoop";
        HDFSUtil.getFileLocations(dstFile);
    }

    /** Deletes a file or directory. */
    @Test
    public void testDeleteFile() {
        String dstFile = "hdfs://localhost:9000/user/coder/output";
        HDFSUtil.deleteFile(dstFile);
    }
}
```

Run the test class. If everything is working, the test cases above show green.

In a later part, I plan to build an image server on top of HDFS.

---------------------------------------------------------------------------

This series of Hadoop 1.2.0 development notes is my own original work.

Please credit the source when reposting: 博客园 刺猬的温驯

Link to this article: http://www.cnblogs.com/chenying99/archive/2013/06/02/3113449.html