httpclient

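HttpClient Notes

1. Introduction

HttpClient started as a subproject of Apache Jakarta Commons and is now maintained under Apache HttpComponents. It is an efficient, up-to-date, feature-rich client-side toolkit for the HTTP protocol, and it tracks the latest HTTP versions and recommendations.

Latest version at the time of writing: 4.5 http://hc.apache.org/httpcomponents-client-4.5.x/

Official documentation: http://hc.apache.org/httpcomponents-client-4.5.x/tutorial/html/index.html

Maven dependency: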

<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>

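HTTP is probably the most widely used and most important protocol on the Internet today, and more and more Java applications need to access network resources directly over HTTP. The JDK's java.net package already provides basic HTTP access, but for most applications it is not rich or flexible enough. HttpClient fills that gap and is already used in many projects; for example, the well-known open source projects Cactus and HTMLUnit both use HttpClient.

The latest release at the time of writing is HttpClient 4.5 (GA), dated 2015-09-11.

For crawling, we mainly use HttpClient to simulate browser requests to third-party URLs, read the response to get the page data, and then use Jsoup to extract the information we need.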
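For contrast, here is a rough sketch of what a plain GET looks like with only the JDK's java.net classes. The class name `JdkOnlyFetch`, the timeouts, and the throwaway local server are assumptions made for this illustration so it runs without touching a real site; they are not part of the original notes.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URL;
import java.nio.charset.StandardCharsets;

final class JdkOnlyFetch {

    /** Fetches a URL with HttpURLConnection and returns the body decoded as UTF-8. */
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(5000); // assumed timeout values, in milliseconds
        conn.setReadTimeout(5000);
        try (InputStream in = conn.getInputStream()) {
            // Read the whole body by hand; the JDK gives no one-line helper on Java 8
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
            return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    /** Starts a throwaway local server, fetches its root page, and returns the body. */
    static String demo() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/", exchange -> {
            byte[] body = "<html>hello</html>".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/html; charset=utf-8");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        try {
            int port = server.getAddress().getPort();
            return fetch("http://127.0.0.1:" + port + "/");
        } finally {
            server.stop(0);
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(demo());
    }
}
```

Even this small case needs manual stream handling and explicit cleanup, and proxies, cookies, or connection pooling would all be extra hand-written work on top. That is the kind of flexibility the paragraph above says the JDK alone lacks.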

2. Getting Started

import java.io.IOException;

import org.apache.http.HttpEntity;
import org.apache.http.ParseException;
import org.apache.http.client.ClientProtocolException;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

public static void main(String[] args) {
    HttpGet httpGet = new HttpGet("http://www.tuicool.com/"); // create the GET request
    // try-with-resources closes the response and then the client, even when an exception is thrown
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        HttpEntity entity = response.getEntity(); // get the response entity
        if (entity != null) {
            System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8")); // read the page content
        }
    } catch (ClientProtocolException e) { // HTTP protocol error
        e.printStackTrace();
    } catch (IOException e) { // I/O error
        e.printStackTrace();
    } catch (ParseException e) { // response parsing error
        e.printStackTrace();
    }
}

Running this prints the HTML source of the page.

3. Fetching a Page While Simulating a Browser

public static void main(String[] args) throws Exception {
    HttpGet httpGet = new HttpGet("http://www.tuicool.com/"); // create the GET request
    // Send a browser-like User-Agent header
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        HttpEntity entity = response.getEntity(); // get the response entity
        System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8")); // read the page content
    } // response and httpClient are closed here
}

With the User-Agent header set, the server treats the request as if it came from a browser.

Fetching a jar file

public static void main(String[] args) throws Exception {
    HttpGet httpGet = new HttpGet("http://central.maven.org/maven2/HTTPClient/HTTPClient/0.3-3/HTTPClient-0.3-3.jar"); // create the GET request
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        HttpEntity entity = response.getEntity(); // get the response entity
        // A jar is binary, so print its Content-Type instead of the body
        System.out.println("Content-Type:" + entity.getContentType().getValue());
        //System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8"));
    } // response and httpClient are closed here
}

Getting the response status and content type

public static void main(String[] args) throws Exception {
    HttpGet httpGet = new HttpGet("http://www.open1111.com/"); // create the GET request
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        System.out.println("Status:" + response.getStatusLine().getStatusCode()); // HTTP status code, e.g. 200
        HttpEntity entity = response.getEntity(); // get the response entity
        System.out.println("Content-Type:" + entity.getContentType().getValue());
        //System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8"));
    } // response and httpClient are closed here
}
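The Content-Type value printed above usually looks like `text/html; charset=utf-8`. A crawler can use it to decide whether the body is text at all and which charset to decode it with, instead of always passing "utf-8". The following is a minimal sketch of that parsing in plain Java. The class name `ContentTypeInfo`, the UTF-8 fallback, and the list of "text-like" types are assumptions for illustration; HttpClient 4.x also ships its own `org.apache.http.entity.ContentType` class for this purpose.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

final class ContentTypeInfo {
    final String mimeType;
    final Charset charset;

    private ContentTypeInfo(String mimeType, Charset charset) {
        this.mimeType = mimeType;
        this.charset = charset;
    }

    /** Splits a Content-Type header value into its MIME type and charset parameter. */
    static ContentTypeInfo parse(String headerValue) {
        String[] parts = headerValue.split(";");
        String mime = parts[0].trim().toLowerCase(Locale.ROOT);
        Charset charset = StandardCharsets.UTF_8; // assumed fallback when no usable charset is given
        for (int i = 1; i < parts.length; i++) {
            String param = parts[i].trim();
            int eq = param.indexOf('=');
            if (eq > 0 && param.substring(0, eq).trim().equalsIgnoreCase("charset")) {
                String name = param.substring(eq + 1).trim().replace("\"", "");
                try {
                    if (Charset.isSupported(name)) {
                        charset = Charset.forName(name);
                    }
                } catch (IllegalArgumentException ignored) {
                    // malformed charset name: keep the fallback
                }
            }
        }
        return new ContentTypeInfo(mime, charset);
    }

    /** Rough check for bodies that are safe to read as a string. */
    boolean isText() {
        return mimeType.startsWith("text/")
                || mimeType.equals("application/json")
                || mimeType.equals("application/xml");
    }

    public static void main(String[] args) {
        ContentTypeInfo html = parse("text/html; charset=ISO-8859-1");
        System.out.println(html.mimeType + " / " + html.charset + " / text=" + html.isText());
        ContentTypeInfo jar = parse("application/java-archive");
        System.out.println(jar.mimeType + " / text=" + jar.isText());
    }
}
```

With a check like this, the jar example would skip `EntityUtils.toString` and stream the bytes to a file, while HTML pages would be decoded with the charset the server declared.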

4. Downloading an Image

import java.io.File;
import java.io.InputStream;

import org.apache.commons.io.FileUtils; // from Apache Commons IO

public static void main(String[] args) throws Exception {
    HttpGet httpGet = new HttpGet("http://www.java1234.com/gg/dljd4.gif"); // create the GET request
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        HttpEntity entity = response.getEntity(); // get the response entity
        if (entity != null) {
            System.out.println("ContentType:" + entity.getContentType().getValue());
            // Stream the image bytes straight to a local file
            try (InputStream inputStream = entity.getContent()) {
                FileUtils.copyToFile(inputStream, new File("C://dljd4.gif"));
            }
        }
    } // response and httpClient are closed here
}

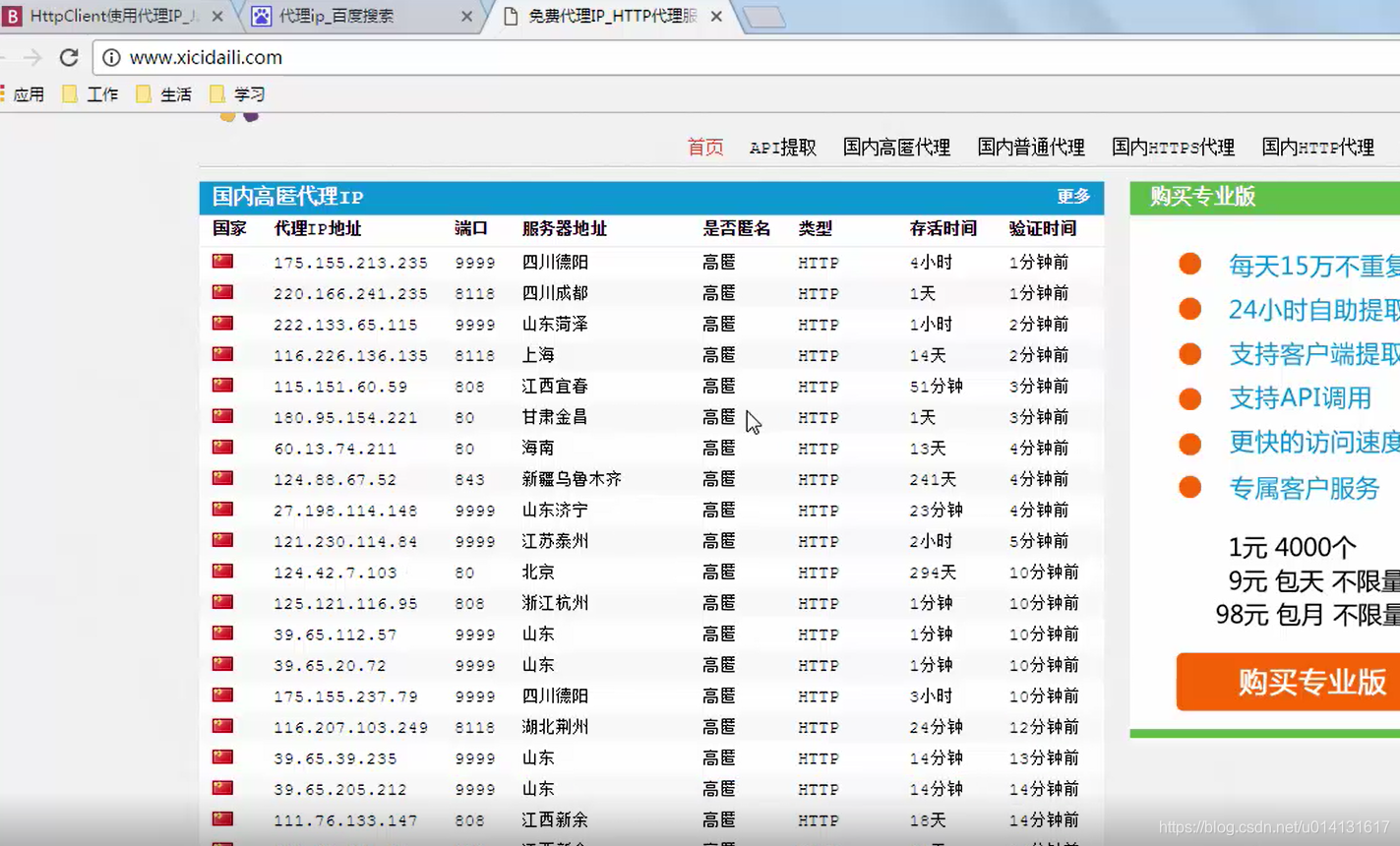
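If Commons IO is not on the classpath, the same "stream to file" step can be done with the JDK's `java.nio.file.Files.copy`. This is only a sketch: the class name `StreamToFile`, the `save` helper, and the fake in-memory "image" bytes are made up for the example. In the download code above, the stream would come from `entity.getContent()`.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

final class StreamToFile {

    /** Writes everything from the stream to target, replacing an existing file; returns bytes written. */
    static long save(InputStream in, Path target) throws IOException {
        Path parent = target.toAbsolutePath().getParent();
        if (parent != null) {
            Files.createDirectories(parent); // make sure the destination folder exists
        }
        try (InputStream source = in) { // close the stream once copying is done
            return Files.copy(source, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for the bytes of a downloaded image
        byte[] fakeImage = "GIF89a".getBytes(StandardCharsets.US_ASCII);
        Path target = Files.createTempFile("demo-", ".gif");
        long written = save(new ByteArrayInputStream(fakeImage), target);
        System.out.println(written + " bytes written to " + target);
        Files.delete(target);
    }
}
```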

5. Setting a Proxy IP

import org.apache.http.HttpHost;
import org.apache.http.client.config.RequestConfig;

public static void main(String[] args) throws Exception {
    HttpGet httpGet = new HttpGet("http://www.tuicool.com/"); // create the GET request
    // Route this request through an HTTP proxy (host, port)
    HttpHost proxy = new HttpHost("175.155.213.235", 9999);
    RequestConfig config = RequestConfig.custom().setProxy(proxy).build();
    httpGet.setConfig(config);
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    try (CloseableHttpClient httpClient = HttpClients.createDefault(); // create the HttpClient instance
         CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the GET request
        HttpEntity entity = response.getEntity(); // get the response entity
        System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8")); // read the page content
    } // response and httpClient are closed here
}

==============================================================================================

jsoup

Utility class

package com.cxl.comment.tools;

import java.io.IOException;

import org.apache.http.HttpEntity;
import org.apache.http.ParseException;
import org.apache.http.client.ClientProtocolException;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

public class CreeperWebSiteUtils {

    /** Fetches the given URL and returns the body decoded as UTF-8, or null if the request fails. */
    public static String get(String website) {
        HttpGet httpGet = new HttpGet(website); // create the GET request
        String content = null;
        // try-with-resources closes the response and the client and releases system resources
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            HttpEntity entity = response.getEntity(); // get the response entity
            if (entity != null) {
                content = EntityUtils.toString(entity, "utf-8");
            }
        } catch (ClientProtocolException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        } catch (ParseException e) {
            e.printStackTrace();
        }
        return content;
    }

}

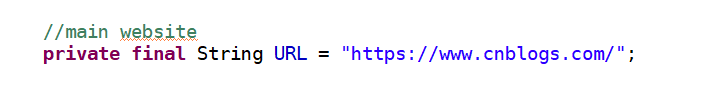

Usage

@GetMapping("/list")
public Object getBlogList(HttpServletResponse response) {
    //logger.info("===== entering the blog list method =====");
    response.setHeader("Access-Control-Allow-Origin", "*");

    String content = CreeperWebSiteUtils.get(URL); // fetch the page content
    Document doc = Jsoup.parse(content);           // parse the page content

    // Select the fields by CSS class
    Elements title = doc.getElementsByClass("titlelnk"); // post titles
    Elements minicontent = doc.getElementsByClass("post_item_summary");
    Elements time = doc.getElementsByClass("post_item_foot");
    Elements read = doc.getElementsByClass("gray");

    List<Blog> blogList = new ArrayList<>();
    for (int i = 0; i < title.size(); i++) {
        Blog blog = new Blog(title.get(i).text(), time.get(i).text(), title.get(i).attr("href"),
                read.get(i).text(), minicontent.get(i).text());
        blogList.add(blog);
    }
    //logger.info("===== blog list method finished =====");
    return blogList;
}
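One thing to watch in the loop above: it indexes several element lists by the same `i`, so if one class selector matches fewer elements than the titles, `get(i)` throws an IndexOutOfBoundsException. A possible guard is to loop only up to the shortest list. The sketch below shows the idea with plain string lists standing in for the Jsoup results; the class name `ParallelLists` and the sample values are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ParallelLists {

    /** Combines three parallel columns row by row, stopping at the shortest column. */
    static List<String> joinRows(List<String> titles, List<String> times, List<String> summaries) {
        int rows = Math.min(titles.size(), Math.min(times.size(), summaries.size()));
        List<String> result = new ArrayList<>(rows);
        for (int i = 0; i < rows; i++) {
            result.add(titles.get(i) + " | " + times.get(i) + " | " + summaries.get(i));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> titles = Arrays.asList("Post A", "Post B", "Post C");
        List<String> times = Arrays.asList("2017-01-01", "2017-01-02"); // one entry short on purpose
        List<String> summaries = Arrays.asList("first", "second", "third");
        for (String row : joinRows(titles, times, summaries)) {
            System.out.println(row);
        }
    }
}
```

Here the third title is dropped rather than crashing the request. Whether to drop, pad, or log such rows is a design choice that depends on how the page markup behaves.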