SRCNN is the pioneering deep-learning work on super-resolution (ECCV 2014, Chao Dong et al., CUHK).

The network has three layers, and the paper also explains what each layer does.
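To make the three layers concrete, the sketch below counts the weights in each one. The layer names, the 9-1-5 filter sizes and the 64/32 filter counts are the base setting as I recall it from the SRCNN paper, not something stated in these notes; `conv_weights` is a helper name made up for this example.

```python
# Hypothetical helper: number of weights in a conv layer with k x k filters
# (biases are left out).
def conv_weights(k, in_ch, out_ch):
    return k * k * in_ch * out_ch

# Assumed base setting: 9-1-5 filters, 64 and 32 feature maps,
# one input/output channel (luminance only).
layers = [
    ("patch extraction and representation", 9, 1, 64),
    ("non-linear mapping", 1, 64, 32),
    ("reconstruction", 5, 32, 1),
]

counts = {name: conv_weights(k, cin, cout) for name, k, cin, cout in layers}
total = sum(counts.values())

for name, n in counts.items():
    print(f"{name}: {n} weights")
print("total:", total)
```

Under these assumptions the whole model has only a few thousand weights, which is tiny by later standards.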

The model is trained with Caffe and inference runs in MATLAB; the code is at http://mmlab.ie.cuhk.edu.hk/projects/SRCNN.html

Reconstructing a 700×700-pixel image takes more than 30 s, and the result still looks rather blurry.

You can run it on your own images to get an intuitive feel for the results:

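    imgDir = 'E:\Download\超分辨率\test\test';  % folder holding the test images
    list = dir(imgDir);
    for i1 = 1:numel(list)
        % Skip '.', '..' and any other sub-folder returned by dir
        if list(i1).isdir
            continue;
        end
        fullName = fullfile(imgDir, list(i1).name);
        demo_SR(fullName);  % the author's demo script must first be turned into a function
    end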

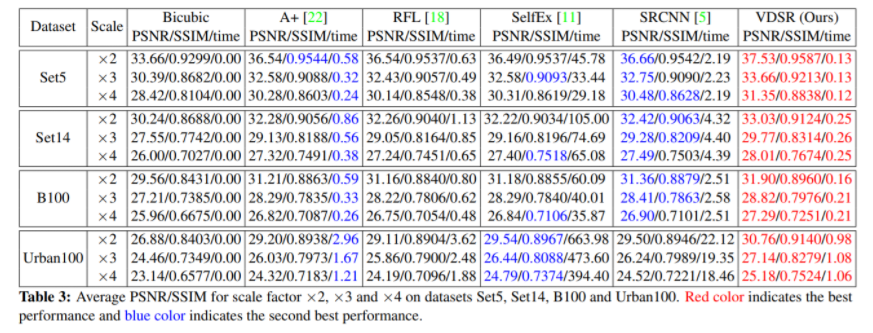

Evaluation

Compute PSNR and SSIM; for how they are computed, see the MATLAB code of VDSR.
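For readers who do not want to open the MATLAB code, here is a minimal pure-Python sketch of PSNR for 8-bit grayscale images stored as lists of rows. It is not the VDSR implementation; details such as border cropping are left out, and SSIM is not covered. The function name `psnr` and the toy pixel values are made up for this example.

```python
import math

def psnr(reference, restored, peak=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    if len(reference) != len(restored) or any(
        len(a) != len(b) for a, b in zip(reference, restored)
    ):
        raise ValueError("images must have the same shape")
    squared_error = 0.0
    count = 0
    for ref_row, res_row in zip(reference, restored):
        for r, s in zip(ref_row, res_row):
            squared_error += (r - s) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy 3x3 example with made-up pixel values.
reference = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
restored = [[54, 55, 60], [59, 77, 61], [75, 62, 60]]
print(round(psnr(reference, restored), 2), "dB")
```

A higher PSNR means the restored image is closer to the reference, and identical images give infinity.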

Commonly used evaluation datasets are Set5, Set14, B100 and Urban100; later papers also use Manga109, part of DIV2K, and others.

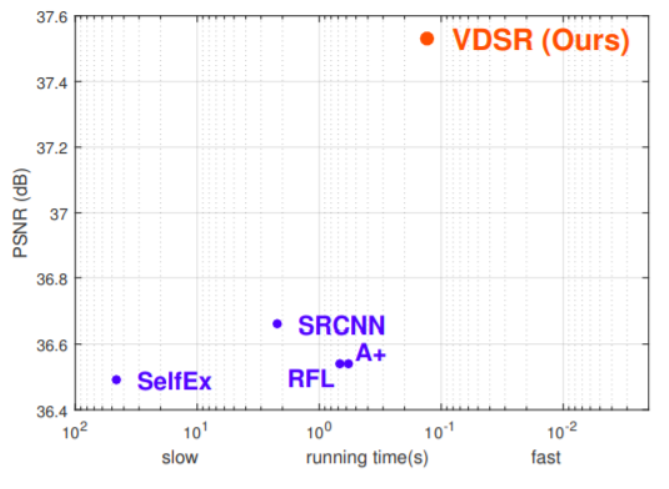

Plotting performance on two-dimensional axes is also quite intuitive.

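Drawbacks:

1. Works for only a single scale

2. The convolutional receptive field is too small

3. A learning rate of 1e-5 is too slow, so training needs to be sped up. Inference is also fairly slow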
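The receptive-field point can be checked with a little arithmetic: for a stack of stride-1 convolutions, each k×k layer widens the field by k − 1 pixels. The sketch below assumes the 9-1-5 filter setting for SRCNN and, for comparison, 20 layers of 3×3 filters for VDSR; both settings are recalled from the respective papers rather than stated in these notes.

```python
def receptive_field(kernel_sizes):
    """Receptive field (pixels per side) of a stack of stride-1 convolutions."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

srcnn = receptive_field([9, 1, 5])  # assumed 9-1-5 setting
vdsr = receptive_field([3] * 20)    # assumed 20 layers of 3x3 filters
print(srcnn, vdsr)  # prints: 13 41
```

So each output pixel of the three-layer network sees only a 13×13 neighbourhood, while a deep stack of small filters sees far more context.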

How to train the model

Notes on the training data:

For a fair comparison with traditional example-based methods, we use the same training set, test sets, and protocols as in [20]. Specifically, the training set consists of 91 images. The Set5 [2] (5 images) is used to evaluate the performance of upscaling factors 2, 3, and 4, and Set14 [28] (14 images) is used to evaluate the upscaling factor 3. In addition to the 91-image training set, we also investigate a larger training set in Section 5.2.

The reference [20] cited above is: Anchored Neighborhood Regression for Fast Example-Based Super-Resolution

http://www.vision.ee.ethz.ch/~timofter/ICCV2013_ID1774_SUPPLEMENTARY/index.html

SR papers from CVPR 2018, by contrast, mostly train on larger datasets. In practice any training set works, as long as it contains no test data. One such paper describes its setup as follows:

DIV2K consists of 800 training images, 100 validation images, and 100 test images. We train all of our models with 800 training images and use 5 validation images in the training process. For testing, we use five standard benchmark datasets: Set5 [1], Set14 [33], B100 [18], Urban100 [8], and Manga109 [19]. The SR results are evaluated with PSNR and SSIM [32] on Y channel (i.e., luminance) of transformed YCbCr space.
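Evaluating "on the Y channel" means converting RGB to luminance before computing the metrics. The sketch below assumes the ITU-R BT.601 conversion with the 16 to 235 range, which is what MATLAB's `rgb2ycbcr` applies to 8-bit input; whether a particular paper uses exactly this variant is an assumption. The function names are made up for this example.

```python
def rgb_to_y(r, g, b):
    """Luminance of one 8-bit RGB pixel (BT.601, output range 16..235)."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0

def image_to_y(rgb_image):
    """Convert an image given as rows of (r, g, b) tuples to rows of Y values."""
    return [[rgb_to_y(*pixel) for pixel in row] for row in rgb_image]

# Toy 1x3 image: black, white, pure green.
y_plane = image_to_y([[(0, 0, 0), (255, 255, 255), (0, 255, 0)]])
print([round(v, 3) for v in y_plane[0]])
```

The resulting Y plane is a single-channel image, so the metrics are then computed on it as they would be for grayscale.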

I have collected the SRCNN training data (the 91 images) and the test sets Set5, Set14, B100 and Urban100:

Link: https://pan.baidu.com/s/1f5CrntYV2RgsAVoDx3hvUg (extraction code: jv2f)

A Keras version of the training code, for reference:

https://github.com/DeNA/SRCNNKit/tree/master/script

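It uses misc (presumably scipy.misc) to resize and preprocess the images and so obtain the training data for the network.

It then builds the network and trains it with a generator that yields batches.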
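The generator idea can be sketched without any deep-learning library. The code below is not taken from the linked repository; `batch_generator` and the toy string "patches" are made up for illustration, and in real training each batch would be converted to arrays before being fed to the network.

```python
import random

def batch_generator(pairs, batch_size, seed=0):
    """Yield (inputs, targets) batches forever, reshuffling on every pass."""
    rng = random.Random(seed)
    indices = list(range(len(pairs)))
    while True:
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            chunk = [pairs[i] for i in indices[start:start + batch_size]]
            inputs = [low_res for low_res, _ in chunk]
            targets = [high_res for _, high_res in chunk]
            yield inputs, targets

# Toy stand-ins for (low-resolution patch, high-resolution patch) pairs.
pairs = [(f"lr_{i}", f"hr_{i}") for i in range(10)]
gen = batch_generator(pairs, batch_size=4)
first_epoch = [next(gen) for _ in range(3)]  # batches of 4, 4 and 2 samples
for inputs, targets in first_epoch:
    print(len(inputs), inputs[0], targets[0])
```

Because the generator never stops, the training loop decides how many batches make up one epoch, which is how Keras-style generator training is normally driven.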

VDSR

CVPR 2016, Seoul National University

https://cv.snu.ac.kr/research/VDSR/