Installing Prometheus + Grafana on k8s:

1. Create a namespace in the Kubernetes cluster:

[root@master k8s-promethus]# cat namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ns-monitor
  labels:
    name: ns-monitor

[root@master k8s-promethus]# kubectl apply -f namespace.yaml

2. Install node-exporter:

[root@master k8s-promethus]# cat node-exporter.yaml

kind: DaemonSet
apiVersion: apps/v1
metadata:
  labels:
    app: node-exporter
  name: node-exporter
  namespace: ns-monitor
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      containers:
        - name: node-exporter
          image: prom/node-exporter:v0.16.0
          ports:
            - containerPort: 9100
              protocol: TCP
              name: http
      hostNetwork: true
      hostPID: true
      tolerations:
        - effect: NoSchedule
          operator: Exists
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: node-exporter
  name: node-exporter-service
  namespace: ns-monitor
spec:
  ports:
    - name: http
      port: 9100
      protocol: TCP
  type: NodePort
  selector:
    app: node-exporter

[root@master k8s-promethus]# kubectl apply -f node-exporter.yaml

[root@master k8s-promethus]# kubectl get pod -n ns-monitor -o wide

NAME                  READY   STATUS    RESTARTS   AGE     IP                NODE     NOMINATED NODE   READINESS GATES
node-exporter-cl6d5   1/1     Running   15         3h15m   192.168.100.64    test     <none>           <none>
node-exporter-gwwnj   1/1     Running   0          3h15m   192.168.100.201   node1    <none>           <none>
node-exporter-hbglm   1/1     Running   0          3h15m   192.168.100.200   master   <none>           <none>
node-exporter-kwsfv   1/1     Running   0          3h15m   192.168.100.202   node2    <none>           <none>

[root@master k8s-promethus]# kubectl get svc -n ns-monitor

NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
node-exporter-service   NodePort   10.99.128.173   <none>        9100:30372/TCP   3h17m

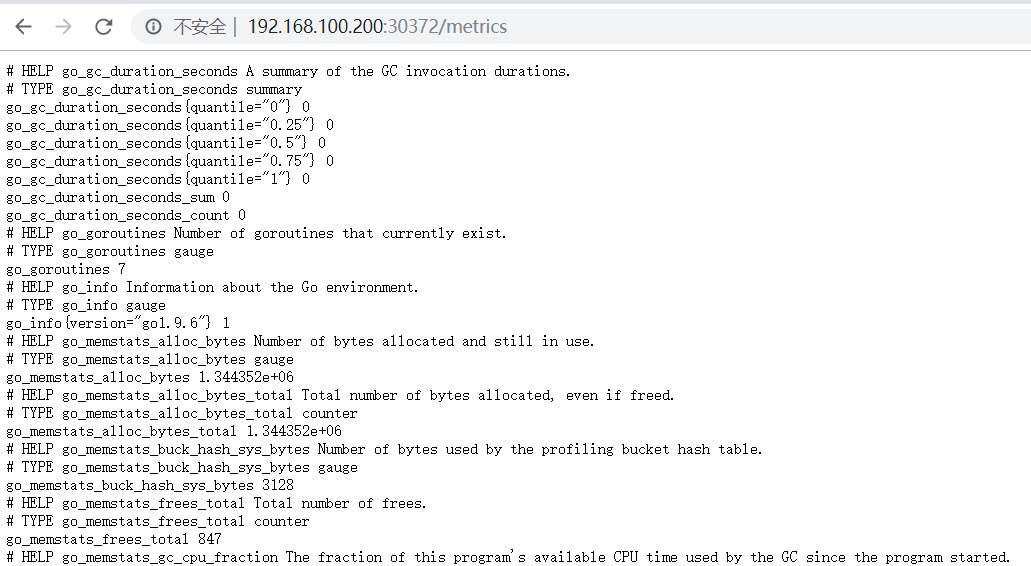

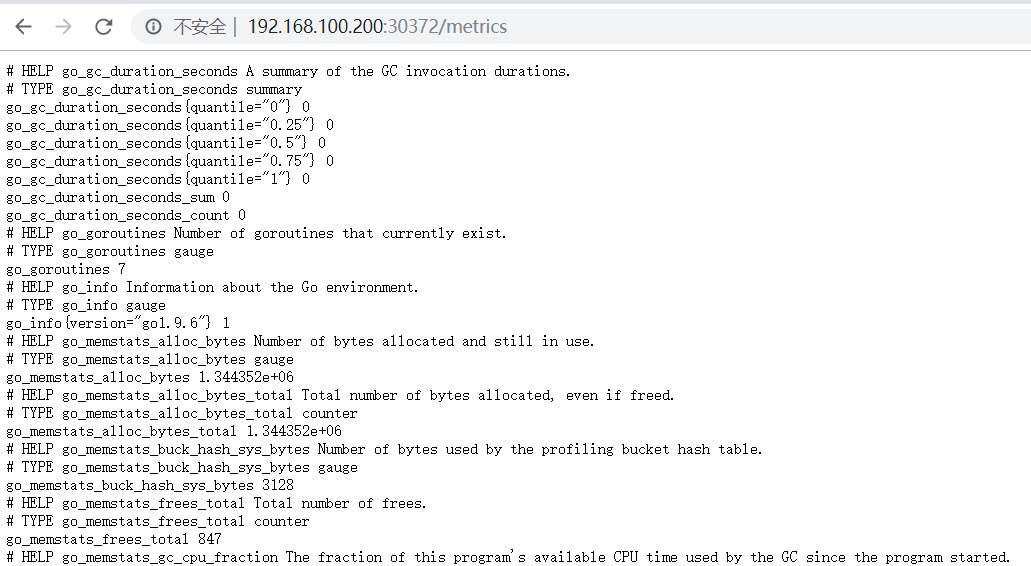

Access test:

Note: node-exporter is running successfully.
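The access test can also be run from a shell instead of a browser. The sketch below is a minimal check, assuming the node IP 192.168.100.200 and the NodePort 30372 from the listings above. The helper name `has_node_metrics` is made up for this example.

```shell
# Hypothetical helper: reads Prometheus text-format metrics on stdin and
# succeeds if at least one sample line starts with "node_".
has_node_metrics() {
  grep -q '^node_'
}

# Example call (needs network access to the node; the IP and port come from
# the kubectl output above and will differ in other clusters):
#   curl -s http://192.168.100.200:30372/metrics | has_node_metrics && echo "node-exporter OK"
```

Because the Service is of type NodePort and the DaemonSet runs on every node, any node IP should work in place of the one used here.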

3. Deploy Prometheus:

Note: prometheus.yaml contains the RBAC objects, the ConfigMaps, and the other resources listed in the output below.

[root@master k8s-promethus]# kubectl apply -f prometheus.yaml

clusterrole.rbac.authorization.k8s.io/prometheus unchanged
serviceaccount/prometheus unchanged
clusterrolebinding.rbac.authorization.k8s.io/prometheus unchanged
configmap/prometheus-conf unchanged
configmap/prometheus-rules unchanged
persistentvolume/prometheus-data-pv unchanged
persistentvolumeclaim/prometheus-data-pvc unchanged
deployment.apps/prometheus unchanged
service/prometheus-service unchanged

Note: Prometheus keeps its data on a PV/PVC backed by NFS, so an NFS server must be set up before this step. The NFS setup is omitted here.
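prometheus.yaml itself is not listed in this walkthrough. As a rough sketch of what its storage part might look like, the snippet below writes a PV/PVC pair modeled on the Grafana volume in step 5. The object names, the 5Gi size, the RWO access mode, and the Recycle policy come from the kubectl output in this step. The NFS server address is borrowed from the Grafana manifest, and the export path /data/volumes/v1, the label, and the output file name are assumptions.

```shell
# Writes a sketch manifest to the current directory; nothing is applied to the cluster.
cat > prometheus-pv-sketch.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-data-pv
  labels:
    name: prometheus-data-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: /data/volumes/v1      # assumed export path
    server: 192.168.100.64      # assumed: same NFS server as the Grafana PV
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-data-pvc
  namespace: ns-monitor
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      name: prometheus-data-pv
EOF
```

The PVC selects the PV by label, which is the same binding approach the Grafana manifest uses.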

[root@master k8s-promethus]# kubectl get pv -n ns-monitor

NAME                 CAPACITY   ACCESSMODES   RECLAIM POLICY   STATUS   CLAIM                            STORAGECLASS   REASON   AGE
prometheus-data-pv   5Gi        RWO           Recycle          Bound    ns-monitor/prometheus-data-pvc                           157m

[root@master k8s-promethus]# kubectl get pvc -n ns-monitor

NAME                  STATUS   VOLUME               CAPACITY   ACCESS MODES   STORAGECLASS   AGE
prometheus-data-pvc   Bound    prometheus-data-pv   5Gi        RWO                           158m

Note: the PVC is now bound to the PV.

[root@master k8s-promethus]# kubectl get pod -n ns-monitor

NAME                         READY   STATUS    RESTARTS   AGE
node-exporter-cl6d5          1/1     Running   15         3h27m
node-exporter-gwwnj          1/1     Running   0          3h27m
node-exporter-hbglm          1/1     Running   0          3h27m
node-exporter-kwsfv          1/1     Running   0          3h27m
prometheus-dd69c4889-qwmww   1/1     Running   0          161m

[root@master k8s-promethus]# kubectl get svc -n ns-monitor

NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
node-exporter-service   NodePort   10.99.128.173   <none>        9100:30372/TCP   3h29m
prometheus-service      NodePort   10.96.119.235   <none>        9090:31555/TCP   162m

Access test:

Note: Prometheus is running successfully.
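For a command-line version of this access test, Prometheus exposes a health endpoint (`/-/healthy`) and a targets API (`/api/v1/targets`). The following is a sketch only: the node IP and the NodePort 31555 are taken from the listings above, and the helper names `prom_url` and `check_prometheus` are hypothetical.

```shell
# Assumed values: a node IP from the pod listing and the NodePort from the service listing.
PROM_HOST="${PROM_HOST:-192.168.100.200}"
PROM_PORT="${PROM_PORT:-31555}"

# Print the full URL for a Prometheus HTTP path such as /-/healthy.
prom_url() {
  printf 'http://%s:%s%s\n' "$PROM_HOST" "$PROM_PORT" "$1"
}

# Query the health endpoint, then the scrape target list.
# Needs network access to the node, so it is defined here but not called.
check_prometheus() {
  curl -fsS "$(prom_url /-/healthy)" && curl -fsS "$(prom_url /api/v1/targets)"
}
```

Running `check_prometheus` from a machine that can reach the node should return the health message followed by a JSON document describing the scrape targets.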

4. Deploy kube-state-metrics:

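The manifests are in https://github.com/kubernetes/kube-state-metrics/tree/master/examples/standard. Running wget on that URL only fetches the HTML page, so download the raw files instead: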

[root@master kube-state-metrics]# for file in cluster-role-binding.yaml cluster-role.yaml service-account.yaml service.yaml deployment.yaml ; do wget https://raw.githubusercontent.com/kubernetes/kube-state-metrics/master/examples/standard/$file ;done

[root@master kube-state-metrics]# ll

total 24
-rw-r--r-- 1 root root  376 Dec  8 20:29 cluster-role-binding.yaml
-rw-r--r-- 1 root root 1651 Dec  8 20:14 cluster-role.yaml
-rw-r--r-- 1 root root 1068 Dec  8 20:29 deployment.yaml
-rw-r--r-- 1 root root  634 Dec  8 21:19 kube-state-svc.yaml
-rw-r--r-- 1 root root  192 Dec  8 20:29 service-account.yaml
-rw-r--r-- 1 root root  452 Dec  8 21:36 service.yaml

[root@master kube-state-metrics]# for i in cluster-role-binding.yaml deployment.yaml service-account.yaml service.yaml; do sed -i '/namespace/s/kube-system/ns-monitor/' $i ; done

Note: the downloaded manifests use the kube-system namespace; change it to the namespace you are using.
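To see what the sed expression does before running it on the real manifests, it can be tried on a scratch file. This is a small sketch, assuming GNU sed (for `-i` without a suffix); the sample file content is made up and only imitates the shape of the downloaded manifests.

```shell
# Scratch file so the real manifests stay untouched; the content is a made-up sample.
tmp="$(mktemp)"
cat > "$tmp" <<'EOF'
metadata:
  name: kube-state-metrics
  namespace: kube-system
EOF

# Only lines matching /namespace/ are edited; on those lines the first
# occurrence of kube-system is replaced with ns-monitor.
sed -i '/namespace/s/kube-system/ns-monitor/' "$tmp"

result="$(cat "$tmp")"
printf '%s\n' "$result"
rm -f "$tmp"
```

The address `/namespace/` keeps the substitution away from lines that mention kube-system in other contexts. cluster-role.yaml is left out of the loop, presumably because a ClusterRole is not namespaced.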

Edit service.yaml:

[root@master kube-state-metrics]# vim service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.8.0
  name: kube-state-metrics
  namespace: ns-monitor
  annotations:
    prometheus.io/scrape: "true"  # added so that Prometheus can discover this Service automatically
spec:
  clusterIP: None
  ports:
    - name: http-metrics
      port: 8080
      targetPort: http-metrics
    - name: telemetry
      port: 8081
      targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics

[root@master kube-state-metrics]# kubectl apply -f ./

[root@master kube-state-metrics]# kubectl get pod -n ns-monitor

NAME                                  READY   STATUS    RESTARTS   AGE
grafana-576db894c6-4qtcl              1/1     Running   0          23h
kube-state-metrics-7b48c458ff-vwd5p   1/1     Running   0          137m
node-exporter-cl6d5                   1/1     Running   15         24h
node-exporter-gwwnj                   1/1     Running   0          24h
node-exporter-hbglm                   1/1     Running   1          24h
node-exporter-kwsfv                   1/1     Running   0          24h
prometheus-dd69c4889-qwmww            1/1     Running   0          23h

[root@master kube-state-metrics]# kubectl get svc -n ns-monitor

NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
grafana-service         NodePort    10.104.174.223   <none>        3000:30771/TCP      23h
kube-state-metrics      ClusterIP   None             <none>        8080/TCP,8081/TCP   112m
node-exporter-service   NodePort    10.99.128.173    <none>        9100:30372/TCP      24h
prometheus-service      NodePort    10.96.119.235    <none>        9090:31555/TCP      23h

Note: kube-state-metrics is now running.

Check in Prometheus:

Note: kube-state-metrics is now being scraped by Prometheus.
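The prometheus.io/scrape annotation only has an effect if the Prometheus configuration filters on it. The ConfigMap inside prometheus.yaml is not shown, so the following is only a sketch of a scrape job that would honor the annotation, using Prometheus's Kubernetes service discovery. The job name, the target label names, and the output file name are assumptions.

```shell
# Writes a sketch of a scrape_configs fragment; it is not loaded into Prometheus automatically.
cat > scrape-annotation-sketch.yaml <<'EOF'
scrape_configs:
  - job_name: kubernetes-service-endpoints   # assumed job name
    kubernetes_sd_configs:
      - role: endpoints
    relabel_configs:
      # Keep only endpoints whose Service carries prometheus.io/scrape: "true".
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
      # Carry the namespace and Service name over as ordinary labels.
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
EOF
```

With a job like this, Services without the annotation are dropped at relabeling time, which would explain why the annotation had to be added to service.yaml before kube-state-metrics showed up as a target.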

5. Deploy Grafana in Kubernetes:

[root@master k8s-promethus]# cat grafana.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: "grafana-data-pv"
  labels:
    name: grafana-data-pv
    release: stable
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: /data/volumes/v2
    server: 192.168.100.64
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data-pvc
  namespace: ns-monitor
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      name: grafana-data-pv
      release: stable
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: ns-monitor
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        runAsUser: 0
      containers:
        - name: grafana
          image: grafana/grafana:latest
          imagePullPolicy: IfNotPresent
          env:
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: grafana-data-volume
          ports:
            - containerPort: 3000
              protocol: TCP
      volumes:
        - name: grafana-data-volume
          persistentVolumeClaim:
            claimName: grafana-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: grafana
  name: grafana-service
  namespace: ns-monitor
spec:
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: grafana
  type: NodePort

[root@master k8s-promethus]# kubectl apply -f grafana.yaml

persistentvolume/grafana-data-pv unchanged
persistentvolumeclaim/grafana-data-pvc unchanged
deployment.apps/grafana unchanged
service/grafana-service unchanged

Note: Grafana also needs a PVC.

[root@master k8s-promethus]# kubectl get pv,pvc -n ns-monitor

NAME                                  CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                            STORAGECLASS   REASON   AGE
persistentvolume/grafana-data-pv      5Gi        RWO            Recycle          Bound    ns-monitor/grafana-data-pvc                              164m
persistentvolume/prometheus-data-pv   5Gi        RWO            Recycle          Bound    ns-monitor/prometheus-data-pvc                           168m

NAME                                        STATUS   VOLUME               CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/grafana-data-pvc      Bound    grafana-data-pv      5Gi        RWO                           164m
persistentvolumeclaim/prometheus-data-pvc   Bound    prometheus-data-pv   5Gi        RWO                           168m

[root@master k8s-promethus]# kubectl get pod -n ns-monitor

NAME                         READY   STATUS    RESTARTS   AGE
grafana-576db894c6-4qtcl     1/1     Running   0          166m
node-exporter-cl6d5          1/1     Running   15         3h36m
node-exporter-gwwnj          1/1     Running   0          3h36m
node-exporter-hbglm          1/1     Running   0          3h36m
node-exporter-kwsfv          1/1     Running   0          3h36m
prometheus-dd69c4889-qwmww   1/1     Running   0          169m

[root@master k8s-promethus]# kubectl get svc -n ns-monitor

NAME                    TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
grafana-service         NodePort   10.104.174.223   <none>        3000:30771/TCP   166m
node-exporter-service   NodePort   10.99.128.173    <none>        9100:30372/TCP   3h37m
prometheus-service      NodePort   10.96.119.235    <none>        9090:31555/TCP   170m

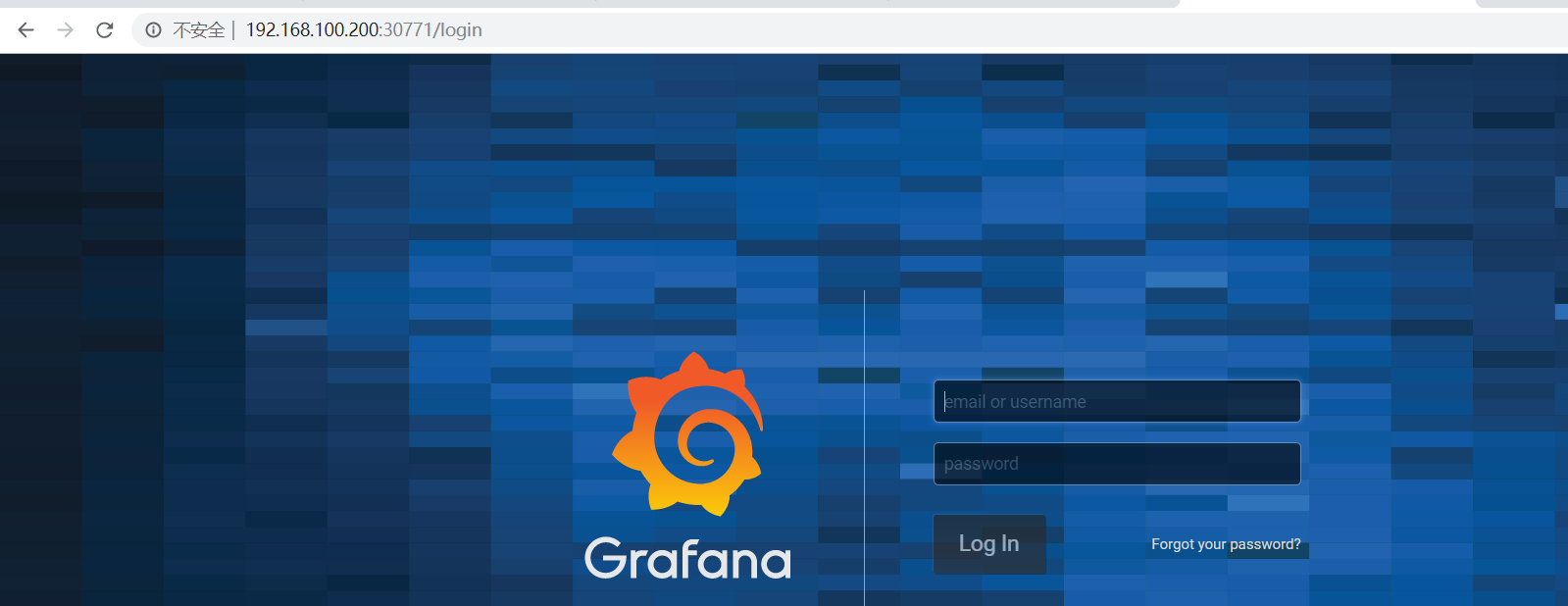

Access test:

Note: Grafana is deployed. Username/password: admin/admin

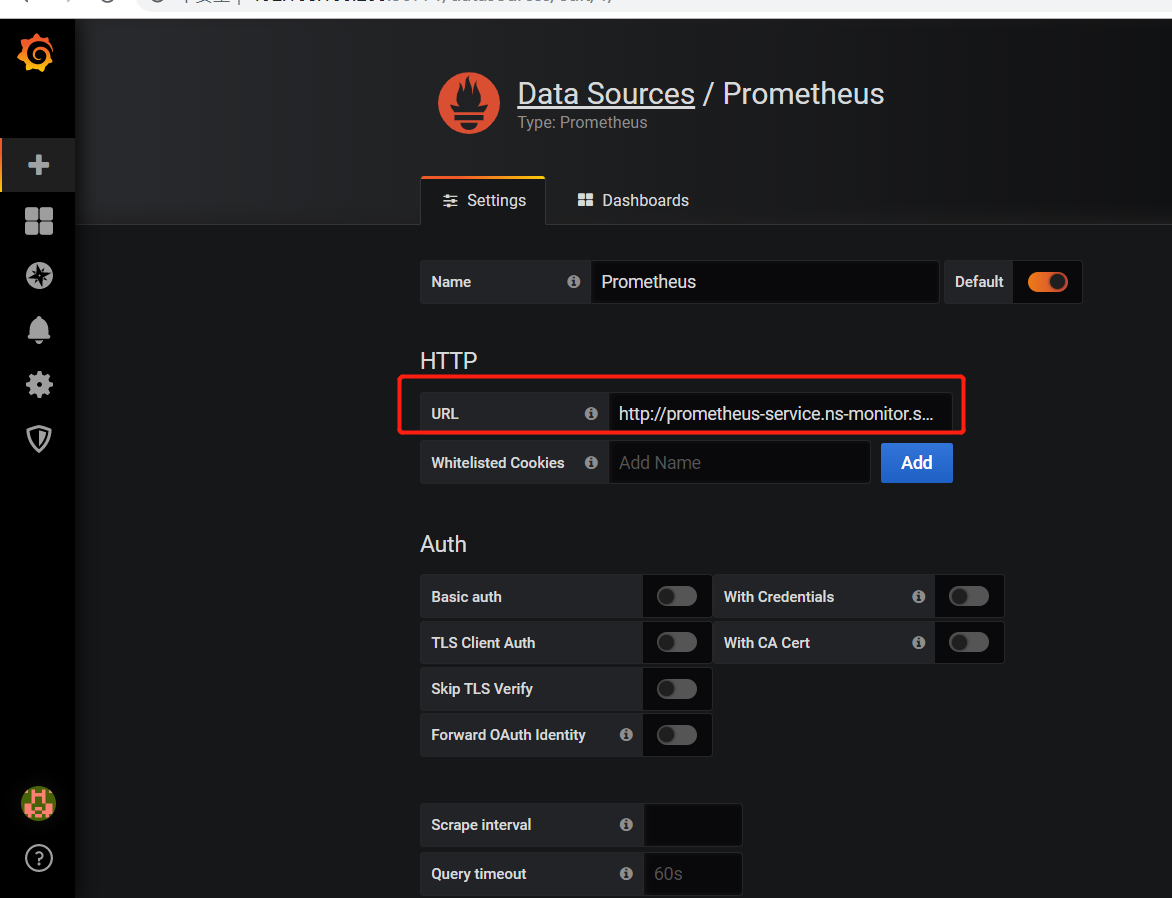

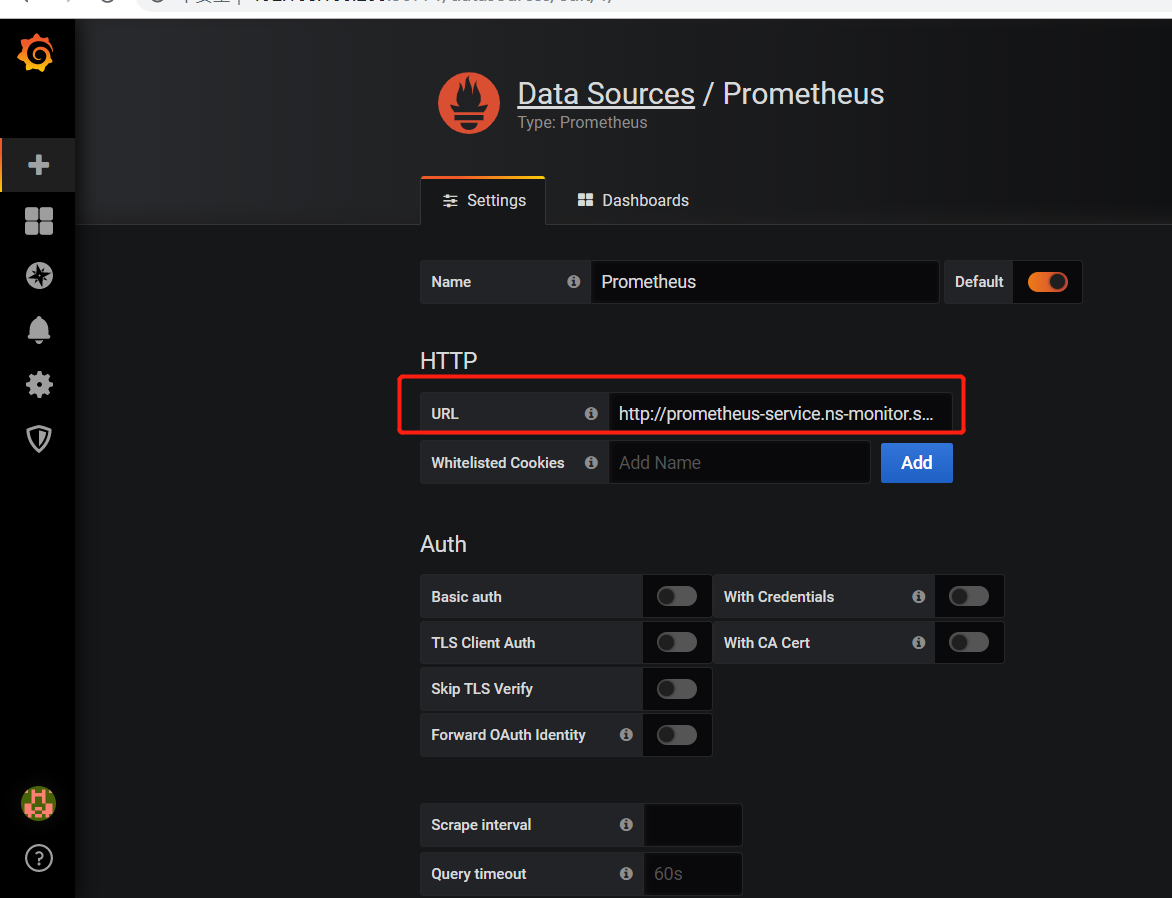

Add the data source: URL=http://prometheus-service.ns-monitor.svc.cluster.local:9090
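The same data source could also be declared in a file rather than through the UI. A minimal sketch using Grafana's data source provisioning format follows. The data source name, the output file name, and the idea of mounting the file under /etc/grafana/provisioning/datasources/ are assumptions that go beyond the grafana.yaml shown above.

```shell
# Writes a sketch provisioning file; it still has to be mounted into the Grafana pod to take effect.
cat > grafana-datasource-sketch.yaml <<'EOF'
apiVersion: 1
datasources:
  - name: Prometheus        # assumed display name
    type: prometheus
    access: proxy           # requests go through the Grafana backend, so the cluster DNS name resolves
    url: http://prometheus-service.ns-monitor.svc.cluster.local:9090
    isDefault: true
EOF
```

The URL follows the in-cluster DNS pattern `<service>.<namespace>.svc.cluster.local`, which is why it only works with proxy access from inside the cluster and not from a browser outside it.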

Import a dashboard template: